2022-01-18 20:32:45 +01:00
use crate::{
2022-05-05 17:10:03 +02:00
    completions::NuCompleter,
    prompt_update,
    reedline_config::{add_menus, create_keybindings, KeybindingsMode},
2022-07-14 16:09:27 +02:00
    util::{eval_source, get_guaranteed_cwd, report_error, report_error_new},
2022-05-05 17:10:03 +02:00
    NuHighlighter, NuValidator, NushellPrompt,
2022-01-18 20:32:45 +01:00
};
2023-01-13 21:37:39 +01:00
use crossterm::cursor::CursorShape;
2023-01-24 21:28:59 +01:00
use log::{trace, warn};
2022-01-18 09:48:28 +01:00
use miette::{IntoDiagnostic, Result};

|

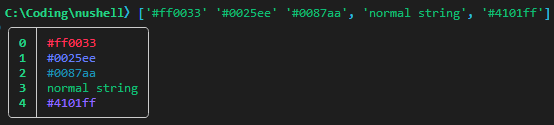

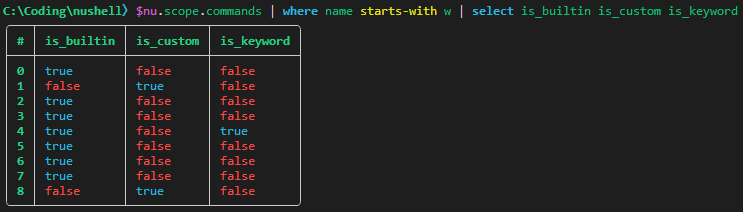

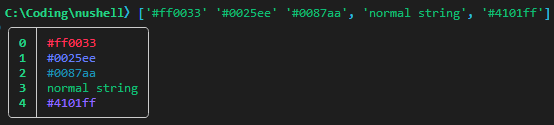

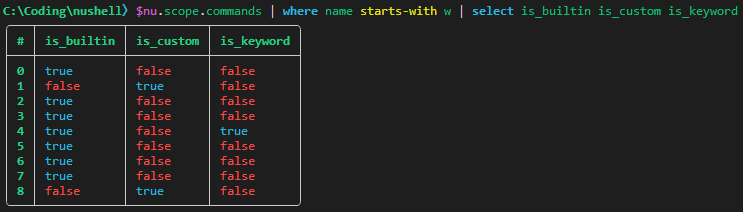

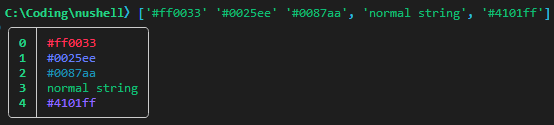

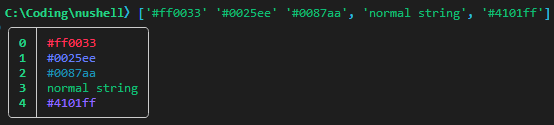

color_config now accepts closures as color values (#7141)

# Description

Closes #6909. You can now add closures to your `color_config` themes.

Whenever a value would be printed with `table`, the closure is run with the value piped in. The closure must return either a {fg,bg,attr} record or a color name (`'light_red'` etc.). This returned style is used to colour the value.

This is entirely backwards-compatible with existing config.nu files.

Example code excerpt:

```
let my_theme = {
    header: green_bold
    bool: { if $in { 'light_cyan' } else { 'light_red' } }
    int: purple_bold
    filesize: { |e| if $e == 0b { 'gray' } else if $e < 1mb { 'purple_bold' } else { 'cyan_bold' } }
    duration: purple_bold
    date: { (date now) - $in | if $in > 1wk { 'cyan_bold' } else if $in > 1day { 'green_bold' } else { 'yellow_bold' } }
    range: yellow_bold
    string: { if $in =~ '^#\w{6}$' { $in } else { 'white' } }
    nothing: white
}
```

Example output with this in effect:

Slightly important notes:

* Some color_config names, namely "separator", "empty" and "hints", pipe in `null` instead of a value.
* Currently, doing anything non-trivial inside a closure has an understandably big perf hit. I currently do not actually recommend something like `string: { if $in =~ '^#\w{6}$' { $in } else { 'white' } }` for serious work, mainly because of the abundance of string-type data in the world. Nevertheless, lesser-used types like "date" and "duration" work well with this.
* I had to do some reorganisation in order to make it possible to call `eval_block()` that late in table rendering. I invented a new struct called "StyleComputer" which holds the engine_state and stack of the initial `table` command (implicit or explicit).
* StyleComputer has a `compute()` method which takes a color_config name and a nu value, and always returns the correct Style, so you don't have to worry about A) whether the color_config value was set at all, B) whether it was set to a closure or not, or C) which default style to use in those cases.
* Currently, errors encountered during execution of the closures are thrown in the garbage. Any other ideas are welcome. (Nonetheless, errors result in a huge perf hit when they are encountered. I think what should be done is to assume something terrible happened to the user's config and invalidate the StyleComputer for that `table` run, thus causing subsequent output to just be Style::default().)
* More thorough tests are forthcoming; I ran into some difficulty using `nu!` to take an alternative config, and for some reason `let-env config =` statements don't seem to work inside `nu!` pipelines (???).
* The default config.nu has not been updated to make use of this yet. Do tell if you think I should incorporate that into this.
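The `compute()` contract described in these notes (one lookup that copes with an unset entry, a fixed style, or a closure, and always yields a usable style) can be sketched in plain Rust. This is a hypothetical, std-only model, not nu_color_config's actual `StyleComputer`: `Value`, `Style`, `ColorEntry` and the `invalidated` flag are invented for illustration. Its error handling implements the invalidation strategy the notes *propose*, not the discard-the-error behaviour they describe as current.

```rust
use std::cell::Cell;
use std::collections::HashMap;

/// Hypothetical, simplified stand-in for a Nushell value.
#[derive(Debug, Clone, PartialEq)]
pub enum Value {
    Bool(bool),
    Int(i64),
    Nothing,
}

/// Hypothetical, simplified stand-in for a terminal style: just a foreground colour name.
#[derive(Debug, Clone, Copy, PartialEq, Default)]
pub struct Style {
    pub fg: Option<&'static str>,
}

/// A color_config entry is either a fixed style or a closure run on the printed value.
pub enum ColorEntry {
    Fixed(Style),
    Closure(Box<dyn Fn(&Value) -> Result<Style, String>>),
}

pub struct StyleComputer {
    /// Entries the user set in color_config.
    entries: HashMap<String, ColorEntry>,
    /// Built-in fallbacks for names the user did not set.
    defaults: HashMap<String, Style>,
    /// Set after the first closure error; afterwards every lookup returns Style::default().
    invalidated: Cell<bool>,
}

impl StyleComputer {
    pub fn new(entries: HashMap<String, ColorEntry>, defaults: HashMap<String, Style>) -> Self {
        StyleComputer { entries, defaults, invalidated: Cell::new(false) }
    }

    /// Always returns a usable Style, whatever state the config is in.
    pub fn compute(&self, name: &str, value: &Value) -> Style {
        if self.invalidated.get() {
            return Style::default();
        }
        match self.entries.get(name) {
            // Not set: fall back to the built-in default for this name (or a plain style).
            None => self.defaults.get(name).copied().unwrap_or_default(),
            // Set to a fixed style.
            Some(ColorEntry::Fixed(style)) => *style,
            // Set to a closure: run it with the value piped in.
            Some(ColorEntry::Closure(f)) => match f(value) {
                Ok(style) => style,
                Err(_) => {
                    // Assume the config is broken and stop running closures for this run.
                    self.invalidated.set(true);
                    Style::default()
                }
            },
        }
    }
}
```

Callers never branch on how an entry was configured; they just call `compute(name, &value)`, which is the property the notes single out.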

# User-Facing Changes

See above.

# Tests + Formatting

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace --features=extra -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect` to check that you're using the standard code style
- `cargo test --workspace --features=extra` to check that all tests pass

# After Submitting

If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date.

2022-12-17 14:07:56 +01:00
use nu_color_config::StyleComputer;

New commands: `break`, `continue`, `return`, and `loop` (#7230)

# Description

This adds `break`, `continue`, `return`, and `loop`.

* `break` - breaks out of a loop

* `continue` - continues a loop at the next iteration

* `return` - early return from a function call

* `loop` - loop forever (until the loop hits a break)

Examples:

```
for i in 1..10 {
    if $i == 5 {
        continue
    }
    print $i
}
```

```
for i in 1..10 {
    if $i == 5 {
        break
    }
    print $i
}
```

```
def foo [x] {
    if true {
        return 2
    }
    $x
}
foo 100
```

```
loop { print "hello, forever" }
```

```
[1, 2, 3, 4, 5] | each {|x|
    if $x > 3 { break }
    $x
}
```
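The examples above show the user-facing behaviour; the PR text does not say how the engine implements it. One common approach in a tree-walking interpreter is to treat these keywords as non-local control-flow signals carried in the error arm of a `Result`. `?` then unwinds them until a loop catches `break`/`continue`, or a function-call boundary catches `return`. The toy sketch below illustrates that idea. `Flow`, `Stmt`, `run_for`, `call_with_early_return` and `foo_body` are hypothetical names. The `eval_block_with_early_return` import in this file only hints at a similar function-boundary catch. Nu ranges such as `1..10` include their end, so the sketch iterates `start..=end`.

```rust
/// Hypothetical non-local control-flow signals.
#[derive(Debug, PartialEq)]
pub enum Flow {
    Break,
    Continue,
    Return(i64),
}

/// A tiny statement language, just rich enough for the loop examples above.
pub enum Stmt {
    /// `print $i` (here: append the loop variable to `out`).
    PrintVar,
    /// `if $i == n { break }`
    BreakIfEq(i64),
    /// `if $i == n { continue }`
    ContinueIfEq(i64),
}

/// Runs one loop iteration; break/continue leave the body early as `Err(..)`.
fn run_body(i: i64, body: &[Stmt], out: &mut Vec<i64>) -> Result<(), Flow> {
    for stmt in body {
        match stmt {
            Stmt::PrintVar => out.push(i),
            Stmt::BreakIfEq(n) if i == *n => return Err(Flow::Break),
            Stmt::ContinueIfEq(n) if i == *n => return Err(Flow::Continue),
            _ => {}
        }
    }
    Ok(())
}

/// `for i in start..=end { body }`: the loop catches Break/Continue, but lets
/// Return keep unwinding towards the enclosing function call.
pub fn run_for(start: i64, end: i64, body: &[Stmt], out: &mut Vec<i64>) -> Result<(), Flow> {
    for i in start..=end {
        match run_body(i, body, out) {
            Ok(()) | Err(Flow::Continue) => {}
            Err(Flow::Break) => break,
            Err(other) => return Err(other),
        }
    }
    Ok(())
}

/// The function-call boundary: an early `return v` becomes the call's normal result.
pub fn call_with_early_return(body: impl FnOnce() -> Result<i64, Flow>) -> Result<i64, Flow> {
    match body() {
        Err(Flow::Return(v)) => Ok(v),
        other => other,
    }
}

/// `def foo [x] { if true { return 2 }; $x }` in toy form.
pub fn foo_body(x: i64) -> Result<i64, Flow> {
    if true {
        return Err(Flow::Return(2));
    }
    Ok(x)
}
```

With this shape, `foo 100` evaluates to `2`. `return` escapes the function body, and the call boundary converts the signal back into an ordinary value.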

# User-Facing Changes

Adds the above commands.

# Tests + Formatting

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass

# After Submitting

If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date.

2022-11-24 21:39:16 +01:00
use nu_engine::{convert_env_values, eval_block, eval_block_with_early_return};
2022-10-14 23:37:31 +02:00
use nu_parser::{lex, parse, trim_quotes_str};
2022-01-18 09:48:28 +01:00
use nu_protocol::{
2022-07-10 12:45:46 +02:00
    ast::PathMember,
2023-01-13 21:37:39 +01:00
    config::NuCursorShape,
2022-09-09 22:31:32 +02:00
    engine::{EngineState, ReplOperation, Stack, StateWorkingSet},
2022-07-29 19:50:12 +02:00
    format_duration, BlockId, HistoryFileFormat, PipelineData, PositionalArg, ShellError, Span,
2022-08-18 11:25:52 +02:00
    Spanned, Type, Value, VarId,
2022-01-18 09:48:28 +01:00
};
2023-01-24 21:28:59 +01:00
use nu_utils::utils::perf;
2023-01-13 21:37:39 +01:00
use reedline::{CursorConfig, DefaultHinter, EditCommand, Emacs, SqliteBackedHistory, Vi};
2022-09-19 16:28:36 +02:00
use std::{
    io::{self, Write},
    sync::atomic::Ordering,
    time::Instant,
};
2022-06-14 22:53:33 +02:00
use sysinfo::SystemExt;
2022-01-18 09:48:28 +01:00

2022-07-20 22:03:29 +02:00
// According to Daniel Imms @Tyriar, we need to do these this way:
// <133 A><prompt><133 B><command><133 C><command output>
// These first two have been moved to prompt_update to get as close as possible to the prompt.
// const PRE_PROMPT_MARKER: &str = "\x1b]133;A\x1b\\";
// const POST_PROMPT_MARKER: &str = "\x1b]133;B\x1b\\";
2022-06-03 00:57:19 +02:00
const PRE_EXECUTE_MARKER: &str = "\x1b]133;C\x1b\\";
2022-07-20 22:03:29 +02:00
// This one is in get_command_finished_marker() now so we can capture the exit codes properly.
// const CMD_FINISHED_MARKER: &str = "\x1b]133;D;{}\x1b\\";
2022-04-24 02:53:12 +02:00
const RESET_APPLICATION_MODE: &str = "\x1b[?1l";
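The comments above describe the OSC 133 "semantic prompt" markers that terminals with shell-integration support look for. `133;A` goes before the prompt, `133;B` after it, `133;C` before command output, and `133;D;<exit code>` when the command finishes. The std-only sketch below shows how the commented-out `D` template could be filled in and how one prompt/command/output cycle is laid out. `command_finished_marker` and `wrap_command` are hypothetical helpers, not nushell's `get_command_finished_marker()`.

```rust
/// OSC 133 markers in the same byte form this file uses: ESC ] 133 ; <kind> ESC \
const PRE_PROMPT_MARKER: &str = "\x1b]133;A\x1b\\";
const POST_PROMPT_MARKER: &str = "\x1b]133;B\x1b\\";
const PRE_EXECUTE_MARKER: &str = "\x1b]133;C\x1b\\";

/// Hypothetical helper: fills the `D` marker template with the command's exit code.
fn command_finished_marker(exit_code: i64) -> String {
    format!("\x1b]133;D;{}\x1b\\", exit_code)
}

/// Hypothetical helper: one cycle in the order
/// <133 A><prompt><133 B><command><133 C><command output><133 D;exit>.
fn wrap_command(prompt: &str, command: &str, output: &str, exit_code: i64) -> String {
    let mut s = String::new();
    s.push_str(PRE_PROMPT_MARKER);
    s.push_str(prompt);
    s.push_str(POST_PROMPT_MARKER);
    s.push_str(command);
    s.push_str(PRE_EXECUTE_MARKER);
    s.push_str(output);
    s.push_str(&command_finished_marker(exit_code));
    s
}
```

Producing the `D` marker with a function rather than a constant is what allows the exit code to be included, which matches the reason the comment gives for the move.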

2022-03-16 19:17:06 +01:00
pub fn evaluate_repl(
2022-02-19 21:54:43 +01:00
    engine_state: &mut EngineState,
2022-03-16 19:17:06 +01:00
    stack: &mut Stack,
2022-06-14 22:53:33 +02:00
    nushell_path: &str,
2022-08-18 11:25:52 +02:00
    prerun_command: Option<Spanned<String>>,
2023-01-24 21:28:59 +01:00
    entire_start_time: Instant,
2022-02-19 21:54:43 +01:00
) -> Result<()> {
2022-01-18 09:48:28 +01:00
    use reedline::{FileBackedHistory, Reedline, Signal};
2023-02-02 00:03:05 +01:00
    let use_color = engine_state.get_config().use_ansi_coloring;
2022-01-18 09:48:28 +01:00

2022-09-05 13:33:54 +02:00
    // Guard against invocation without a connected terminal.
    // reedline / crossterm event polling will fail without a connected tty
    if !atty::is(atty::Stream::Stdin) {
        return Err(std::io::Error::new(
            std::io::ErrorKind::NotFound,
2022-10-23 08:02:52 +02:00
            "Nushell launched as a REPL, but STDIN is not a TTY; either launch in a valid terminal or provide arguments to invoke a script!",
2022-09-05 13:33:54 +02:00
        ))
        .into_diagnostic();
    }

2022-01-18 09:48:28 +01:00
    let mut entry_num = 0;

    let mut nu_prompt = NushellPrompt::new();

2023-01-24 21:28:59 +01:00
    let start_time = std::time::Instant::now();
2022-01-18 09:48:28 +01:00
    // Translate environment variables from Strings to Values
2022-03-16 19:17:06 +01:00
    if let Some(e) = convert_env_values(engine_state, stack) {
2022-01-18 09:48:28 +01:00
        let working_set = StateWorkingSet::new(engine_state);
        report_error(&working_set, &e);
    }
2023-01-24 21:28:59 +01:00
    perf(
        "translate env vars",
        start_time,
        file!(),
        line!(),
        column!(),
2023-02-02 00:03:05 +01:00
        use_color,
2023-01-24 21:28:59 +01:00
    );
2022-01-18 09:48:28 +01:00

2022-02-26 14:57:45 +01:00
    // seed env vars
2022-01-21 20:50:44 +01:00
    stack.add_env_var(
        "CMD_DURATION_MS".into(),

Reduced LOC by replacing several instances of `Value::Int {}`, `Value::Float{}`, `Value::Bool {}`, and `Value::String {}` with `Value::int()`, `Value::float()`, `Value::boolean()` and `Value::string()` (#7412)

# Description

While perusing Value.rs, I noticed the `Value::int()`, `Value::float()`, `Value::boolean()` and `Value::string()` constructors, which seem designed to make it easier to construct various Values, but which aren't used often at all in the codebase. So, using a few find-and-replace regexes, I increased their usage. This reduces overall LOC because structures like this:

```
Value::Int {
    val: a,
    span: head
}
```

are changed into

```
Value::int(a, head)
```

and are respected as such by the project's formatter.

There are few readability concerns, because the second argument to all of these is `span`, and it's almost always extremely obvious which argument is the span at every callsite.
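To make the before/after concrete, here is a hypothetical, std-only miniature of the pattern: a `Value` enum with struct-like variants plus shorthand constructors. The `Span` type and the constructor signatures are simplified assumptions, not the exact nu_protocol definitions. For instance, `Value::string` is assumed to accept anything convertible into a `String`, which fits the `Value::string("0823", ...)` call in this file but may differ in detail.

```rust
/// Hypothetical span: a byte range into the source being evaluated.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Span {
    pub start: usize,
    pub end: usize,
}

/// Hypothetical, trimmed-down value type with struct-like variants.
#[derive(Debug, Clone, PartialEq)]
pub enum Value {
    Int { val: i64, span: Span },
    Float { val: f64, span: Span },
    Bool { val: bool, span: Span },
    String { val: String, span: Span },
}

impl Value {
    /// Shorthand for `Value::Int { val, span }`.
    pub fn int(val: i64, span: Span) -> Value {
        Value::Int { val, span }
    }

    /// Shorthand for `Value::Float { val, span }`.
    pub fn float(val: f64, span: Span) -> Value {
        Value::Float { val, span }
    }

    /// Shorthand for `Value::Bool { val, span }`.
    pub fn boolean(val: bool, span: Span) -> Value {
        Value::Bool { val, span }
    }

    /// Shorthand for `Value::String { val, span }`, accepting `&str` or `String`.
    pub fn string(val: impl Into<String>, span: Span) -> Value {
        Value::String { val: val.into(), span }
    }
}
```

Each constructor builds exactly the same variant as the struct literal, so the replacement is purely a line-count change with no behavioural difference.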

# User-Facing Changes

None.

# Tests + Formatting

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass

# After Submitting

If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date.

2022-12-09 17:37:51 +01:00
        Value::string("0823", Span::unknown()),
2022-01-21 20:50:44 +01:00
    );


2022-12-09 17:37:51 +01:00
    stack.add_env_var("LAST_EXIT_CODE".into(), Value::int(0, Span::unknown()));
2022-02-26 14:57:45 +01:00

2023-01-24 21:28:59 +01:00
    let mut start_time = std::time::Instant::now();
2022-04-01 00:16:28 +02:00
    let mut line_editor = Reedline::create();
2022-09-19 16:28:36 +02:00

    // Now that reedline is created, get the history session id and store it in engine_state
2023-01-21 14:47:00 +01:00
    let hist_sesh = line_editor
        .get_history_session_id()
        .map(i64::from)
        .unwrap_or(0);
2022-09-19 16:28:36 +02:00
    engine_state.history_session_id = hist_sesh;
2023-02-02 00:03:05 +01:00
    perf(
        "setup reedline",
        start_time,
        file!(),
        line!(),
        column!(),
        use_color,
    );
2022-09-19 16:28:36 +02:00

    let config = engine_state.get_config();

2023-01-24 21:28:59 +01:00
    start_time = std::time::Instant::now();
2022-06-14 22:53:33 +02:00
    let history_path = crate::config_files::get_history_path(
        nushell_path,
        engine_state.config.history_file_format,
    );
2022-03-31 23:25:48 +02:00
    if let Some(history_path) = history_path.as_deref() {
2022-06-14 22:53:33 +02:00
        let history: Box<dyn reedline::History> = match engine_state.config.history_file_format {
            HistoryFileFormat::PlainText => Box::new(
                FileBackedHistory::with_file(
                    config.max_history_size as usize,
                    history_path.to_path_buf(),
                )
                .into_diagnostic()?,
            ),
            HistoryFileFormat::Sqlite => Box::new(
                SqliteBackedHistory::with_file(history_path.to_path_buf()).into_diagnostic()?,
            ),
        };
2022-04-01 00:16:28 +02:00
        line_editor = line_editor.with_history(history);
2022-03-31 23:25:48 +02:00
    };
2023-02-02 00:03:05 +01:00
    perf(
        "setup history",
        start_time,
        file!(),
        line!(),
        column!(),
        use_color,
    );
2022-03-31 23:25:48 +02:00

2023-01-24 21:28:59 +01:00
    start_time = std::time::Instant::now();
2022-06-14 22:53:33 +02:00
    let sys = sysinfo::System::new();

2022-07-29 19:50:12 +02:00
    let show_banner = config.show_banner;
    let use_ansi = config.use_ansi_coloring;
    if show_banner {
        let banner = get_banner(engine_state, stack);
        if use_ansi {
2023-01-30 02:37:54 +01:00
            println!("{banner}");
2022-07-29 19:50:12 +02:00
        } else {
2022-11-04 19:49:45 +01:00
            println!("{}", nu_utils::strip_ansi_string_likely(banner));
2022-07-29 19:50:12 +02:00
        }
    }
2023-01-24 21:28:59 +01:00
    perf(
        "get sysinfo/show banner",
        start_time,
        file!(),
        line!(),
        column!(),
2023-02-02 00:03:05 +01:00
        use_color,
2023-01-24 21:28:59 +01:00
    );
2022-07-29 19:50:12 +02:00

2022-08-18 11:25:52 +02:00
    if let Some(s) = prerun_command {
        eval_source(
            engine_state,
            stack,
            s.item.as_bytes(),
2023-01-30 02:37:54 +01:00
            &format!("entry #{entry_num}"),
2022-12-07 19:31:57 +01:00
            PipelineData::empty(),
2023-02-02 00:02:27 +01:00
            false,
2022-08-18 11:25:52 +02:00
        );
        engine_state.merge_env(stack, get_guaranteed_cwd(engine_state, stack))?;
    }

2022-01-18 09:48:28 +01:00
    loop {
2023-01-24 21:28:59 +01:00
        let loop_start_time = std::time::Instant::now();
2022-02-10 22:22:39 +01:00

2022-07-14 16:09:27 +02:00
        let cwd = get_guaranteed_cwd(engine_state, stack);

2023-01-24 21:28:59 +01:00
        start_time = std::time::Instant::now();
2022-07-14 16:09:27 +02:00
        // Before doing anything, merge the environment from the previous REPL iteration into the
        // permanent state.
        if let Err(err) = engine_state.merge_env(stack, cwd) {
            report_error_new(engine_state, &err);
        }
2023-02-02 00:03:05 +01:00
        perf(
            "merge env",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );
2022-07-14 16:09:27 +02:00

2023-01-24 21:28:59 +01:00
        start_time = std::time::Instant::now();
2022-04-19 00:28:01 +02:00
        //Reset the ctrl-c handler
        if let Some(ctrlc) = &mut engine_state.ctrlc {
            ctrlc.store(false, Ordering::SeqCst);
        }
2023-02-02 00:03:05 +01:00
        perf(
            "reset ctrlc",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );
2023-01-24 21:28:59 +01:00

        start_time = std::time::Instant::now();
2022-06-09 14:08:15 +02:00
        // Reset the SIGQUIT handler
        if let Some(sig_quit) = engine_state.get_sig_quit() {
            sig_quit.store(false, Ordering::SeqCst);
        }
2023-02-02 00:03:05 +01:00
        perf(
            "reset sig_quit",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );
2022-01-18 09:48:28 +01:00

2023-01-24 21:28:59 +01:00
        start_time = std::time::Instant::now();
2022-07-10 12:45:46 +02:00
        let config = engine_state.get_config();
2022-04-11 20:19:42 +02:00

2022-04-06 14:25:02 +02:00
        let engine_reference = std::sync::Arc::new(engine_state.clone());
2023-01-13 21:37:39 +01:00

        // Find the configured cursor shapes for each mode
        let cursor_config = CursorConfig {
            vi_insert: Some(map_nucursorshape_to_cursorshape(
                config.cursor_shape_vi_insert,
            )),
            vi_normal: Some(map_nucursorshape_to_cursorshape(
                config.cursor_shape_vi_normal,
            )),
            emacs: Some(map_nucursorshape_to_cursorshape(config.cursor_shape_emacs)),
        };
2023-01-24 21:28:59 +01:00
        perf(
            "get config/cursor config",
            start_time,
            file!(),
            line!(),
            column!(),
2023-02-02 00:03:05 +01:00
            use_color,
2023-01-24 21:28:59 +01:00
        );

        start_time = std::time::Instant::now();
2023-01-13 21:37:39 +01:00

2022-03-31 23:25:48 +02:00
        line_editor = line_editor
2022-01-18 09:48:28 +01:00
            .with_highlighter(Box::new(NuHighlighter {
2023-01-11 02:22:32 +01:00
                engine_state: engine_reference.clone(),
2022-01-18 09:48:28 +01:00
                config: config.clone(),
            }))
            .with_validator(Box::new(NuValidator {
2023-01-11 02:22:32 +01:00
                engine_state: engine_reference.clone(),
2022-01-18 09:48:28 +01:00
            }))
2022-02-18 19:54:13 +01:00
            .with_completer(Box::new(NuCompleter::new(
2022-04-06 14:25:02 +02:00
                engine_reference.clone(),
2022-03-28 19:49:41 +02:00
                stack.clone(),
2022-02-18 19:54:13 +01:00
            )))
2022-02-04 16:30:21 +01:00
            .with_quick_completions(config.quick_completions)
2022-03-03 10:13:44 +01:00
            .with_partial_completions(config.partial_completions)
2023-01-13 21:37:39 +01:00
            .with_ansi_colors(config.use_ansi_coloring)
            .with_cursor_config(cursor_config);
2023-02-02 00:03:05 +01:00
        perf(
            "reedline builder",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );
2022-01-27 08:53:23 +01:00


2022-12-17 14:07:56 +01:00
        let style_computer = StyleComputer::from_config(engine_state, stack);

2023-01-24 21:28:59 +01:00
        start_time = std::time::Instant::now();
2022-04-11 20:19:42 +02:00
        line_editor = if config.use_ansi_coloring {


2022-12-17 14:07:56 +01:00
            line_editor.with_hinter(Box::new({
                // As of Nov 2022, "hints" color_config closures only get `null` passed in.
                let style = style_computer.compute("hints", &Value::nothing(Span::unknown()));
                DefaultHinter::default().with_style(style)
            }))
2022-04-11 20:19:42 +02:00
        } else {
            line_editor.disable_hints()
        };
2023-01-24 21:28:59 +01:00
        perf(
            "reedline coloring/style_computer",
            start_time,
            file!(),
            line!(),
            column!(),
2023-02-02 00:03:05 +01:00
            use_color,
2023-01-24 21:28:59 +01:00
        );
2022-02-10 22:22:39 +01:00

2023-01-24 21:28:59 +01:00
        start_time = std::time::Instant::now();
2023-01-21 14:47:00 +01:00
        line_editor = add_menus(line_editor, engine_reference, stack, config).unwrap_or_else(|e| {
            let working_set = StateWorkingSet::new(engine_state);
            report_error(&working_set, &e);
            Reedline::create()
        });
2023-02-02 00:03:05 +01:00
        perf(
            "reedline menus",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );

2022-03-27 15:01:04 +02:00

|

|

|

|

2023-01-24 21:28:59 +01:00

|

|

|

start_time = std::time::Instant::now();

        let buffer_editor = if !config.buffer_editor.is_empty() {
            Some(config.buffer_editor.clone())
        } else {
            stack
                .get_env_var(engine_state, "EDITOR")
                .map(|v| v.as_string().unwrap_or_default())
                .filter(|v| !v.is_empty())
                .or_else(|| {
                    stack
                        .get_env_var(engine_state, "VISUAL")
                        .map(|v| v.as_string().unwrap_or_default())
                        .filter(|v| !v.is_empty())
                })
        };

        line_editor = if let Some(buffer_editor) = buffer_editor {
            line_editor.with_buffer_editor(buffer_editor, "nu".into())
        } else {
            line_editor
        };
        perf(
            "reedline buffer_editor",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );

        start_time = std::time::Instant::now();
        if config.sync_history_on_enter {
            if let Err(e) = line_editor.sync_history() {
                warn!("Failed to sync history: {}", e);
            }
        }
        perf(
            "sync_history",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );

        start_time = std::time::Instant::now();
        // Changing the line editor based on the found keybindings
        line_editor = match create_keybindings(config) {
            Ok(keybindings) => match keybindings {
                KeybindingsMode::Emacs(keybindings) => {
                    let edit_mode = Box::new(Emacs::new(keybindings));
                    line_editor.with_edit_mode(edit_mode)
                }
                KeybindingsMode::Vi {
                    insert_keybindings,
                    normal_keybindings,
                } => {
                    let edit_mode = Box::new(Vi::new(insert_keybindings, normal_keybindings));
                    line_editor.with_edit_mode(edit_mode)
                }
            },
            Err(e) => {
                let working_set = StateWorkingSet::new(engine_state);
                report_error(&working_set, &e);
                line_editor
            }
        };
        perf(
            "keybindings",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );

        start_time = std::time::Instant::now();
        // Right before we start our prompt and take input from the user,
        // fire the "pre_prompt" hook
        if let Some(hook) = config.hooks.pre_prompt.clone() {
            if let Err(err) = eval_hook(engine_state, stack, None, vec![], &hook) {
                report_error_new(engine_state, &err);
            }
        }
        perf(
            "pre-prompt hook",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );

        start_time = std::time::Instant::now();
        // Next, check all the environment variables they ask for
        // fire the "env_change" hook
        let config = engine_state.get_config();
        if let Err(error) =
            eval_env_change_hook(config.hooks.env_change.clone(), engine_state, stack)
        {
            report_error_new(engine_state, &error)
        }
        perf(
            "env-change hook",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );

        start_time = std::time::Instant::now();
        let config = engine_state.get_config();
        let prompt = prompt_update::update_prompt(config, engine_state, stack, &mut nu_prompt);
        perf(
            "update_prompt",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );

        entry_num += 1;

        if entry_num == 1 && show_banner {
            println!(
                "Startup Time: {}",
                format_duration(entire_start_time.elapsed().as_nanos() as i64)
            );
        }

        start_time = std::time::Instant::now();
        let input = line_editor.read_line(prompt);
        let shell_integration = config.shell_integration;

        match input {
            Ok(Signal::Success(s)) => {
                let hostname = sys.host_name();
                let history_supports_meta =
                    matches!(config.history_file_format, HistoryFileFormat::Sqlite);
                if history_supports_meta && !s.is_empty() && line_editor.has_last_command_context()
                {
                    line_editor
                        .update_last_command_context(&|mut c| {
                            c.start_timestamp = Some(chrono::Utc::now());
                            c.hostname = hostname.clone();

                            c.cwd = Some(StateWorkingSet::new(engine_state).get_cwd());
                            c
                        })
                        .into_diagnostic()?; // todo: don't stop repl if error here?
                }

                engine_state
                    .repl_buffer_state
                    .lock()
                    .expect("repl buffer state mutex")
                    .replace(line_editor.current_buffer_contents().to_string());

                // Right before we start running the code the user gave us,
                // fire the "pre_execution" hook
                if let Some(hook) = config.hooks.pre_execution.clone() {
                    if let Err(err) = eval_hook(engine_state, stack, None, vec![], &hook) {
                        report_error_new(engine_state, &err);
                    }
                }

                if shell_integration {
                    run_ansi_sequence(PRE_EXECUTE_MARKER)?;
                }

                let start_time = Instant::now();
                let tokens = lex(s.as_bytes(), 0, &[], &[], false);
                // Check if this is a single call to a directory, if so auto-cd
                let cwd = nu_engine::env::current_dir_str(engine_state, stack)?;

                let mut orig = s.clone();
                if orig.starts_with('`') {
                    orig = trim_quotes_str(&orig).to_string()
                }

                let path = nu_path::expand_path_with(&orig, &cwd);

                if looks_like_path(&orig) && path.is_dir() && tokens.0.len() == 1 {
                    // We have an auto-cd
                    let (path, span) = {
                        if !path.exists() {
                            let working_set = StateWorkingSet::new(engine_state);

                            report_error(
                                &working_set,
                                &ShellError::DirectoryNotFound(tokens.0[0].span, None),
                            );
                        }
                        let path = nu_path::canonicalize_with(path, &cwd)
                            .expect("internal error: cannot canonicalize known path");
                        (path.to_string_lossy().to_string(), tokens.0[0].span)
                    };

                    stack.add_env_var(
                        "OLDPWD".into(),
                        Value::String {
                            val: cwd.clone(),
                            span: Span::unknown(),
                        },
                    );

                    //FIXME: this only changes the current scope, but instead this environment variable
                    //should probably be a block that loads the information from the state in the overlay
                    stack.add_env_var(
                        "PWD".into(),
                        Value::String {
                            val: path.clone(),
                            span: Span::unknown(),
                        },
                    );
                    let cwd = Value::String { val: cwd, span };

                    let shells = stack.get_env_var(engine_state, "NUSHELL_SHELLS");
                    let mut shells = if let Some(v) = shells {
                        v.as_list()
                            .map(|x| x.to_vec())
                            .unwrap_or_else(|_| vec![cwd])
                    } else {
                        vec![cwd]
                    };

                    let current_shell = stack.get_env_var(engine_state, "NUSHELL_CURRENT_SHELL");
                    let current_shell = if let Some(v) = current_shell {
                        v.as_integer().unwrap_or_default() as usize
                    } else {
                        0
                    };

                    let last_shell = stack.get_env_var(engine_state, "NUSHELL_LAST_SHELL");
                    let last_shell = if let Some(v) = last_shell {
                        v.as_integer().unwrap_or_default() as usize
                    } else {
                        0
                    };

                    shells[current_shell] = Value::String { val: path, span };

                    stack.add_env_var("NUSHELL_SHELLS".into(), Value::List { vals: shells, span });
                    stack.add_env_var(
                        "NUSHELL_LAST_SHELL".into(),
                        Value::Int {
                            val: last_shell as i64,
                            span,
                        },
                    );
                } else if !s.trim().is_empty() {
                    trace!("eval source: {}", s);

                    eval_source(
                        engine_state,
                        stack,
                        s.as_bytes(),
                        &format!("entry #{entry_num}"),
                        PipelineData::empty(),
                        false,
                    );
                }
                let cmd_duration = start_time.elapsed();

                stack.add_env_var(
                    "CMD_DURATION_MS".into(),
                    Value::String {
                        val: format!("{}", cmd_duration.as_millis()),
                        span: Span::unknown(),
                    },
                );

                if history_supports_meta && !s.is_empty() && line_editor.has_last_command_context()
                {
                    line_editor
                        .update_last_command_context(&|mut c| {
                            c.duration = Some(cmd_duration);
                            c.exit_status = stack
                                .get_env_var(engine_state, "LAST_EXIT_CODE")
                                .and_then(|e| e.as_i64().ok());
                            c
                        })
                        .into_diagnostic()?; // todo: don't stop repl if error here?
                }

                if shell_integration {
                    run_ansi_sequence(&get_command_finished_marker(stack, engine_state))?;
                    if let Some(cwd) = stack.get_env_var(engine_state, "PWD") {
                        let path = cwd.as_string()?;

                        // Communicate the path as OSC 7 (often used for spawning new tabs in the same dir)
                        run_ansi_sequence(&format!(
                            "\x1b]7;file://{}{}{}\x1b\\",
                            percent_encoding::utf8_percent_encode(
                                &hostname.unwrap_or_else(|| "localhost".to_string()),
                                percent_encoding::CONTROLS
                            ),
                            if path.starts_with('/') { "" } else { "/" },
                            percent_encoding::utf8_percent_encode(
                                &path,
                                percent_encoding::CONTROLS
                            )
                        ))?;

                        // Try to abbreviate the path for the window title
                        let maybe_abbrev_path = if let Some(p) = nu_path::home_dir() {
                            path.replace(&p.as_path().display().to_string(), "~")
                        } else {
                            path
                        };

                        // Set window title too
                        // https://tldp.org/HOWTO/Xterm-Title-3.html
                        // ESC]0;stringBEL -- Set icon name and window title to string
                        // ESC]1;stringBEL -- Set icon name to string
                        // ESC]2;stringBEL -- Set window title to string
                        run_ansi_sequence(&format!("\x1b]2;{maybe_abbrev_path}\x07"))?;
                    }
                    run_ansi_sequence(RESET_APPLICATION_MODE)?;
                }

                let mut ops = engine_state
                    .repl_operation_queue
                    .lock()
                    .expect("repl op queue mutex");
                while let Some(op) = ops.pop_front() {
                    match op {
                        ReplOperation::Append(s) => line_editor.run_edit_commands(&[
                            EditCommand::MoveToEnd,
                            EditCommand::InsertString(s),
                        ]),
                        ReplOperation::Insert(s) => {
                            line_editor.run_edit_commands(&[EditCommand::InsertString(s)])
                        }
                        ReplOperation::Replace(s) => line_editor
                            .run_edit_commands(&[EditCommand::Clear, EditCommand::InsertString(s)]),
                    }
                }
            }
            Ok(Signal::CtrlC) => {
                // `Reedline` clears the line content. New prompt is shown
                if shell_integration {
                    run_ansi_sequence(&get_command_finished_marker(stack, engine_state))?;
                }
            }
            Ok(Signal::CtrlD) => {
                // When exiting clear to a new line
                if shell_integration {
                    run_ansi_sequence(&get_command_finished_marker(stack, engine_state))?;
                }
                println!();
                break;
            }
            Err(err) => {
                let message = err.to_string();
                if !message.contains("duration") {
                    eprintln!("Error: {err:?}");
                    // TODO: Identify possible error cases where a hard failure is preferable
                    // Ignoring and reporting could hide bigger problems
                    // e.g. https://github.com/nushell/nushell/issues/6452
                    // Alternatively, only let expected failures keep the REPL looping
                }
                if shell_integration {
                    run_ansi_sequence(&get_command_finished_marker(stack, engine_state))?;
                }
            }
        }
        perf(
            "processing line editor input",
            start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );

        perf(
            "finished repl loop",
            loop_start_time,
            file!(),
            line!(),
            column!(),
            use_color,
        );
    }

    Ok(())
}
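The buffer-editor lookup near the top of the loop prefers a non-empty `config.buffer_editor`, then a non-empty `$EDITOR`, then a non-empty `$VISUAL`. The following is a minimal sketch of that precedence as a pure function. It assumes plain `&str` inputs in place of nushell's `Stack`/`Value` lookups. `resolve_buffer_editor` is a hypothetical name and is not part of nushell.

```rust
/// Hypothetical helper (not in nushell): returns the editor command the REPL
/// would hand to reedline, or `None` if nothing usable is configured.
fn resolve_buffer_editor(
    configured: &str,
    editor_env: Option<&str>,
    visual_env: Option<&str>,
) -> Option<String> {
    // A non-empty `buffer_editor` setting always wins.
    if !configured.is_empty() {
        return Some(configured.to_string());
    }
    // Otherwise fall back to $EDITOR, then $VISUAL, ignoring empty values.
    editor_env
        .filter(|v| !v.is_empty())
        .or_else(|| visual_env.filter(|v| !v.is_empty()))
        .map(str::to_string)
}
```

For example, an empty setting with `EDITOR` set to `""` and `VISUAL` set to `"code"` resolves to `Some("code")`. This matches the `.filter(|v| !v.is_empty())` steps in the loop.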

fn map_nucursorshape_to_cursorshape(shape: NuCursorShape) -> CursorShape {
    match shape {
        NuCursorShape::Block => CursorShape::Block,
        NuCursorShape::UnderScore => CursorShape::UnderScore,
        NuCursorShape::Line => CursorShape::Line,
    }
}
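When `shell_integration` is enabled, the REPL loop reports the working directory with OSC 7 and sets the window title with OSC 2. The stdlib-only sketch below shows how both strings are built. The helper names are hypothetical. `encode_controls` is an assumed approximation of `percent_encoding::utf8_percent_encode(.., CONTROLS)`: it escapes C0 controls, DEL and every non-ASCII byte as `%XX` and passes all other ASCII through unchanged.

```rust
use std::fmt::Write;

/// Hypothetical helper: percent-encode C0 controls (0x00-0x1F), DEL (0x7F)
/// and every non-ASCII byte; all other ASCII bytes pass through unchanged.
fn encode_controls(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for &b in s.as_bytes() {
        if b < 0x20 || b == 0x7f || b >= 0x80 {
            // Writing into a String cannot fail, so the Result is ignored.
            let _ = write!(out, "%{:02X}", b);
        } else {
            out.push(b as char);
        }
    }
    out
}

/// Hypothetical helper: OSC 7 "current directory" report, terminated by ST (ESC \).
/// Paths that do not start with '/' (e.g. Windows drive paths) get one prepended.
fn osc7_cwd(hostname: Option<&str>, path: &str) -> String {
    format!(
        "\x1b]7;file://{}{}{}\x1b\\",
        encode_controls(hostname.unwrap_or("localhost")),
        if path.starts_with('/') { "" } else { "/" },
        encode_controls(path)
    )
}

/// Hypothetical helper: OSC 2 "set window title", terminated by BEL (0x07).
fn osc2_title(title: &str) -> String {
    format!("\x1b]2;{title}\x07")
}
```

Under these assumptions, `osc7_cwd(None, "/home/me")` yields `ESC ]7;file://localhost/home/me ESC \`. A path such as `C:\Users` gets a leading `/`, mirroring the `if path.starts_with('/')` check in the loop.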

fn get_banner(engine_state: &mut EngineState, stack: &mut Stack) -> String {
    let age = match eval_string_with_input(
        engine_state,
        stack,
        None,
        "(date now) - ('2019-05-10 09:59:12-0700' | into datetime)",
    ) {
        Ok(Value::Duration { val, .. }) => format_duration(val),
        _ => "".to_string(),
    };

    let banner = format!(
        r#"{} __ ,
{} .--()°'.' {}Welcome to {}Nushell{},
{}'|, . ,' {}based on the {}nu{} language,
{} !_-(_\ {}where all data is structured!

Please join our {}Discord{} community at {}https://discord.gg/NtAbbGn{}
Our {}GitHub{} repository is at {}https://github.com/nushell/nushell{}
Our {}Documentation{} is located at {}https://nushell.sh{}
{}Tweet{} us at {}@nu_shell{}
Learn how to remove this at: {}https://nushell.sh/book/configuration.html#remove-welcome-message{}

It's been this long since {}Nushell{}'s first commit:
{}{}
"#,
        "\x1b[32m", //start line 1 green
        "\x1b[32m", //start line 2
        "\x1b[0m", //before welcome
        "\x1b[32m", //before nushell
        "\x1b[0m", //after nushell
        "\x1b[32m", //start line 3
        "\x1b[0m", //before based
        "\x1b[32m", //before nu
        "\x1b[0m", //after nu
        "\x1b[32m", //start line 4
        "\x1b[0m", //before where
        "\x1b[35m", //before Discord purple
        "\x1b[0m", //after Discord
        "\x1b[35m", //before Discord URL
        "\x1b[0m", //after Discord URL
        "\x1b[1;32m", //before GitHub green_bold
        "\x1b[0m", //after GitHub
        "\x1b[1;32m", //before GitHub URL
        "\x1b[0m", //after GitHub URL
        "\x1b[32m", //before Documentation
        "\x1b[0m", //after Documentation
        "\x1b[32m", //before Documentation URL