Bumps [shadow-rs](https://github.com/baoyachi/shadow-rs) from 0.33.0 to

0.34.0.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a

href="https://github.com/baoyachi/shadow-rs/releases">shadow-rs's

releases</a>.</em></p>

<blockquote>

<h2>v0.34.0</h2>

<h2>What's Changed</h2>

<ul>

<li>Make using the CARGO_METADATA object simpler by <a

href="https://github.com/baoyachi"><code>@baoyachi</code></a> in <a

href="https://redirect.github.com/baoyachi/shadow-rs/pull/181">baoyachi/shadow-rs#181</a></li>

</ul>

<p><strong>Full Changelog</strong>: <a

href="https://github.com/baoyachi/shadow-rs/compare/v0.33.0...v0.34.0">https://github.com/baoyachi/shadow-rs/compare/v0.33.0...v0.34.0</a></p>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="ccb09f154b"><code>ccb09f1</code></a>

Merge pull request <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/181">#181</a>

from baoyachi/issue/179</li>

<li><a

href="65c56630da"><code>65c5663</code></a>

fix cargo clippy check</li>

<li><a

href="998d000023"><code>998d000</code></a>

Make using the CARGO_METADATA object simpler</li>

<li>See full diff in <a

href="https://github.com/baoyachi/shadow-rs/compare/v0.33.0...v0.34.0">compare

view</a></li>

</ul>

</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't

alter it yourself. You can also trigger a rebase manually by commenting

`@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits

that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after

your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge

and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating

it. You can achieve the same result by closing it manually

- `@dependabot show <dependency name> ignore conditions` will show all

of the ignore conditions of the specified dependency

- `@dependabot ignore this major version` will close this PR and stop

Dependabot creating any more for this major version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop

Dependabot creating any more for this minor version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop

Dependabot creating any more for this dependency (unless you reopen the

PR or upgrade to it yourself)

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

# Description

This PR makes it so that non-zero exit codes and termination by signal

are treated as a normal `ShellError`. Currently, these are silent

errors. That is, if an external command fails, then its code block is

aborted, but the parent block can sometimes continue execution. E.g.,

see #8569 and this example:

```nushell

[1 2] | each { ^false }

```

Before this would give:

```

╭───┬──╮

│ 0 │ │

│ 1 │ │

╰───┴──╯

```

Now, this shows an error:

```

Error: nu::shell::eval_block_with_input

× Eval block failed with pipeline input

╭─[entry #1:1:2]

1 │ [1 2] | each { ^false }

· ┬

· ╰── source value

╰────

Error: nu::shell::non_zero_exit_code

× External command had a non-zero exit code

╭─[entry #1:1:17]

1 │ [1 2] | each { ^false }

· ──┬──

· ╰── exited with code 1

╰────

```

This PR fixes #12874, fixes #5960, fixes #10856, and fixes #5347. This
PR also partially addresses #10633 and #10624 (only the last command of
a pipeline is currently checked). It looks like #8569 is already fixed,
but this PR will make sure it is definitely fixed (fixes #8569).

# User-Facing Changes

- Non-zero exit codes and termination by signal now cause an error to be

thrown.

- The error record value passed to a `catch` block may now have an

`exit_code` column containing the integer exit code if the error was due

to an external command.

- Adds new config values, `display_errors.exit_code` and

`display_errors.termination_signal`, which determine whether an error

message should be printed in the respective error cases. For

non-interactive sessions, these are set to `true`, and for interactive

sessions `display_errors.exit_code` is false (via the default config).
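
The rule described above can be sketched in a few lines of Rust. This is only an illustration, not the code in this PR: `ExternalError` and `check_exit` are hypothetical names, and Nushell itself reports a `ShellError` instead.

```rust
use std::fmt;

/// Hypothetical error type for this sketch (Nushell itself uses `ShellError`).
#[derive(Debug, PartialEq)]
enum ExternalError {
    /// The command exited on its own with a non-zero code.
    NonZeroExitCode(i32),
    /// The command was terminated by the given signal number.
    TerminatedBySignal(i32),
}

impl fmt::Display for ExternalError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            ExternalError::NonZeroExitCode(code) => write!(f, "exited with code {}", code),
            ExternalError::TerminatedBySignal(signal) => {
                write!(f, "terminated by signal {}", signal)
            }
        }
    }
}

/// Turns the way an external command finished into a regular `Result`.
/// `code` is the exit code if there was one, `signal` the terminating signal if any.
fn check_exit(code: Option<i32>, signal: Option<i32>) -> Result<(), ExternalError> {
    match (code, signal) {
        (Some(0), _) => Ok(()),
        (Some(code), _) => Err(ExternalError::NonZeroExitCode(code)),
        (None, Some(signal)) => Err(ExternalError::TerminatedBySignal(signal)),
        // Neither a code nor a signal: nothing to report in this sketch.
        (None, None) => Ok(()),
    }
}
```

On Unix, `std::process::ExitStatus::code()` returns `None` when the process was killed by a signal, which is why the sketch keeps the two cases separate.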

# Tests

Added a few tests.

# After Submitting

- Update docs and book.

- Future work:

- Error if other external commands besides the last in a pipeline exit

with a non-zero exit code. Then, deprecate `do -c` since this will be

the default behavior everywhere.

- Add a better mechanism for exit codes and deprecate

`$env.LAST_EXIT_CODE` (it's buggy).

# Description

`cargo` somewhat recently gained the capability to store `lints`

settings for the crate and workspace, that can override the defaults

from `rustc` and `clippy` lints. This means we can enforce some lints

without having to actively pass them to clippy via `cargo clippy -- -W ...`.
Users who just fork the repo then have an easier time following the same
requirements as our CI.

## Limitation

An exception that remains is that those lints apply to both the primary

code base and the tests. Thus we can't include e.g. `unwrap_used`

without generating noise in the tests. Here the setup in the CI remains

the most helpful.

## Included lints

- Add `clippy::unchecked_duration_subtraction` (added by #12549)
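
To illustrate what this lint guards against, here is a minimal Rust sketch. `window_start` is a hypothetical helper, not code from the repository. Subtracting a `Duration` from an `Instant` with `-` may panic when the result cannot be represented, so the checked form is used instead.

```rust
use std::time::{Duration, Instant};

/// Returns the instant `window` before `now`, or `None` if that point in
/// time cannot be represented on this platform.
fn window_start(now: Instant, window: Duration) -> Option<Instant> {
    // `now - window` is the pattern the lint flags, since it may panic.
    // `checked_sub` reports the failure as `None` instead.
    now.checked_sub(window)
}
```

Callers then decide explicitly what to do in the `None` case, for example by falling back to `now`.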

# User-Facing Changes

Running `cargo clippy --workspace` should be closer to the CI. This has

benefits for editor configured runs of clippy and saves you from having

to use `toolkit` to be close to CI in more cases.

# Description

The meaning of the word usage is specific to describing how a command

function is *used* and not a synonym for general description. Usage can

be used to describe the SYNOPSIS or EXAMPLES sections of a man page

where the permitted argument combinations are shown or example *uses*

are given.

Let's not confuse people and call it what it is: a description.

Our `help` command already creates its own *Usage* section based on the

available arguments and doesn't refer to the description with usage.

# User-Facing Changes

`help commands` and `scope commands` will now use `description` or

`extra_description`

`usage` -> `description`

`extra_usage` -> `extra_description`

Breaking change in the plugin protocol:

In the signature record communicated with the engine.

`usage` -> `description`

`extra_usage` -> `extra_description`

The same rename also takes place for the methods on

`SimplePluginCommand` and `PluginCommand`
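
For code that still produces the old field names, the rename could be handled along these lines. This is a sketch under simplifying assumptions: the signature record is modelled as a flat string map and `migrate_signature` is a hypothetical helper, neither of which is part of the plugin protocol or the `nu-plugin` API.

```rust
use std::collections::BTreeMap;

/// Renames the two legacy keys in a simplified, hypothetical signature record.
/// Keys that are absent are left alone, so the function is safe to call twice.
fn migrate_signature(mut sig: BTreeMap<String, String>) -> BTreeMap<String, String> {
    for (old, new) in [("usage", "description"), ("extra_usage", "extra_description")] {
        if let Some(value) = sig.remove(old) {
            sig.insert(new.to_string(), value);
        }
    }
    sig
}
```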

# Tests + Formatting

- Updated plugin protocol specific changes

# After Submitting

- [ ] update plugin protocol doc

Bumps [shadow-rs](https://github.com/baoyachi/shadow-rs) from 0.30.0 to

0.31.1.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a

href="https://github.com/baoyachi/shadow-rs/releases">shadow-rs's

releases</a>.</em></p>

<blockquote>

<h2>[Improvement] Correct git command directory</h2>

<p>ref: <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/170">#170</a></p>

<p>Thx <a

href="https://github.com/MichaelScofield"><code>@MichaelScofield</code></a></p>

<h2>Make build_with function public</h2>

<p>ref:<a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/169">#169</a></p>

<p>Thx <a

href="https://github.com/MichaelScofield"><code>@MichaelScofield</code></a></p>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="aa804ec8a2"><code>aa804ec</code></a>

Update Cargo.toml</li>

<li><a

href="b3fbe36403"><code>b3fbe36</code></a>

Merge pull request <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/170">#170</a>

from MichaelScofield/find-right-branch</li>

<li><a

href="fe6f940f8b"><code>fe6f940</code></a>

execute "git" command in the right path</li>

<li><a

href="458be25e74"><code>458be25</code></a>

Merge pull request <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/169">#169</a>

from MichaelScofield/flexible-for-submodule</li>

<li><a

href="1521a288b4"><code>1521a28</code></a>

Expose the "build" function to let projects with submodules

control where to ...</li>

<li><a

href="ee12741fa0"><code>ee12741</code></a>

Merge pull request <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/168">#168</a>

from baoyachi/issue/149</li>

<li><a

href="dfb8b24adb"><code>dfb8b24</code></a>

cargo fmt</li>

<li><a

href="a3be8680aa"><code>a3be868</code></a>

fix clippy</li>

<li><a

href="c8e7cd5704"><code>c8e7cd5</code></a>

Fix compilation failures caused by unwrap</li>

<li>See full diff in <a

href="https://github.com/baoyachi/shadow-rs/compare/v0.30.0...v0.31.1">compare

view</a></li>

</ul>

</details>

<br />


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

# Description

By popular demand (a.k.a.

https://github.com/nushell/nushell.github.io/issues/1035), provide an

example of a type signature in the `def` help.

# User-Facing Changes

Help/Doc

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

N/A

# Description

This grew quite a bit beyond its original scope, but I've tried to make

`$in` a bit more consistent and easier to work with.

Instead of the parser generating calls to `collect` and creating

closures, this adds `Expr::Collect` which just evaluates in the same

scope and doesn't require any closure.

When `$in` is detected in an expression, it is replaced with a new

variable (also called `$in`) and wrapped in `Expr::Collect`. During

eval, this expression is evaluated directly, with the input and with

that new variable set to the collected value.

Other than being faster and less prone to gotchas, it also makes it

possible to typecheck the output of an expression containing `$in`,

which is nice. This is a breaking change though, because of the lack of

the closure and because now typechecking will actually happen. Also, I

haven't attempted to typecheck the input yet.

The IR generated now just looks like this:

```gas

collect %in

clone %tmp, %in

store-variable $in, %tmp

# %out <- ...expression... <- %in

drop-variable $in

```

(where `$in` is the local variable created for this collection, and not

`IN_VARIABLE_ID`)

which is a lot better than having to create a closure and call `collect

--keep-env`, dealing with all of the capture gathering and allocation

that entails. Ideally we can also detect whether that input is actually

needed, so maybe we don't have to clone, but I haven't tried to do that

yet. Theoretically now that the variable is a unique one every time, it

should be possible to give it a type - I just don't know how to

determine that yet.
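
The evaluation order can be illustrated with a toy evaluator. This is a sketch only: the `Expr` enum below is a made-up miniature, not Nushell's AST, and the pipeline input is reduced to a single integer for brevity.

```rust
use std::collections::HashMap;

/// Tiny expression language for this sketch; not Nushell's AST.
enum Expr {
    Int(i64),
    /// Read a variable by its id.
    Var(usize),
    Add(Box<Expr>, Box<Expr>),
    /// Bind the collected input to `var`, then evaluate `body` in the same scope.
    Collect { var: usize, body: Box<Expr> },
}

fn eval(expr: &Expr, input: i64, vars: &mut HashMap<usize, i64>) -> i64 {
    match expr {
        Expr::Int(value) => *value,
        Expr::Var(id) => vars[id],
        Expr::Add(lhs, rhs) => eval(lhs, input, vars) + eval(rhs, input, vars),
        Expr::Collect { var, body } => {
            vars.insert(*var, input); // store-variable $in, %tmp
            let out = eval(body, input, vars); // %out <- ...expression... <- %in
            vars.remove(var); // drop-variable $in
            out
        }
    }
}
```

No closure is created at any point: the body runs against the same variable map, and the temporary variable is removed once the expression has produced its value.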

On top of that, I've also reworked how `$in` works in pipeline-initial

position. Previously, it was a little bit inconsistent. For example,

this worked:

```nushell

> 3 | do { let x = $in; let y = $in; print $x $y }

3

3

```

However, this causes a runtime variable not found error on the second

`$in`:

```nushell

> def foo [] { let x = $in; let y = $in; print $x $y }; 3 | foo

Error: nu::shell::variable_not_found

× Variable not found

╭─[entry #115:1:35]

1 │ def foo [] { let x = $in; let y = $in; print $x $y }; 3 | foo

· ─┬─

· ╰── variable not found

╰────

```

I've fixed this by making the first element `$in` detection *always*

happen at the block level, so if you use `$in` in pipeline-initial

position anywhere in a block, it will collect with an implicit

subexpression around the whole thing, and you can then use that `$in`

more than once. In doing this I also rewrote `parse_pipeline()` and

hopefully it's a bit more straightforward and possibly more efficient

too now.

Finally, I've tried to make `let` and `mut` a lot more straightforward

with how they handle the rest of the pipeline, and using a redirection

with `let`/`mut` now does what you'd expect if you assume that they

consume the whole pipeline - the redirection is just processed as

normal. These both work now:

```nushell

let x = ^foo err> err.txt

let y = ^foo out+err>| str length

```

It was previously possible to accomplish this with a subexpression, but

it just seemed like a weird gotcha that you couldn't do it. Intuitively,

`let` and `mut` just seem to take the whole line.

- closes #13137

# User-Facing Changes

- `$in` will behave more consistently with blocks and closures, since

the entire block is now just wrapped to handle it if it appears in the

first pipeline element

- `$in` no longer creates a closure, so what can be done within an

expression containing `$in` is less restrictive

- `$in` containing expressions are now type checked, rather than just

resulting in `any`. However, `$in` itself is still `any`, so this isn't

quite perfect yet

- Redirections are now allowed in `let` and `mut` and behave pretty much

how you'd expect

# Tests + Formatting

Added tests to cover the new behaviour.

# After Submitting

- [ ] release notes (definitely breaking change)

# Description

Add `README.md` files to each crate in our workspace (-plugins) and also

include it in the `lib.rs` documentation for <docs.rs> (if there is no

existing `lib.rs` crate documentation)

In every new README, I added a defensive comment that the crates are not

considered stable for public consumption. If necessary we can adjust

this if we deem a crate useful for plugin authors.

# Description

This PR adds an internal representation language to Nushell, offering an

alternative evaluator based on simple instructions, stream-containing

registers, and indexed control flow. The number of registers required is

determined statically at compile-time, and the fixed size required is

allocated upon entering the block.

Each instruction is associated with a span, which makes going backwards

from IR instructions to source code very easy.

Motivations for IR:

1. **Performance.** By simplifying the evaluation path and making it

more cache-friendly and branch predictor-friendly, code that does a lot

of computation in Nushell itself can be sped up a decent bit. Because

the IR is fairly easy to reason about, we can also implement

optimization passes in the future to eliminate and simplify code.

2. **Correctness.** The instructions mostly have very simple and

easily-specified behavior, so hopefully engine changes are a little bit

easier to reason about, and they can be specified in a more formal way

at some point. I have made an effort to document each of the

instructions in the docs for the enum itself in a reasonably specific

way. Some of the errors that would have happened during evaluation

before are now moved to the compilation step instead, because they don't

make sense to check during evaluation.

3. **As an intermediate target.** This is a good step for us to bring

the [`new-nu-parser`](https://github.com/nushell/new-nu-parser) in at

some point, as code generated from new AST can be directly compared to

code generated from old AST. If the IR code is functionally equivalent,

it will behave the exact same way.

4. **Debugging.** With a little bit more work, we can probably give

control over advancing the virtual machine that `IrBlock`s run on to

some sort of external driver, making things like breakpoints and single

stepping possible. Tools like `view ir` and [`explore

ir`](https://github.com/devyn/nu_plugin_explore_ir) make it easier than

before to see what exactly is going on with your Nushell code.

The goal is to eventually replace the AST evaluator entirely, once we're

sure it's working just as well. You can help dogfood this by running

Nushell with `$env.NU_USE_IR` set to some value. The environment

variable is checked when Nushell starts, so config runs with IR, or it

can also be set on a line at the REPL to change it dynamically. It is

also checked when running `do` in case within a script you want to just

run a specific piece of code with or without IR.

# Example

```nushell

view ir { |data|

mut sum = 0

for n in $data {

$sum += $n

}

$sum

}

```

```gas

# 3 registers, 19 instructions, 0 bytes of data

0: load-literal %0, int(0)

1: store-variable var 904, %0 # let

2: drain %0

3: drop %0

4: load-variable %1, var 903

5: iterate %0, %1, end 15 # for, label(1), from(14:)

6: store-variable var 905, %0

7: load-variable %0, var 904

8: load-variable %2, var 905

9: binary-op %0, Math(Plus), %2

10: span %0

11: store-variable var 904, %0

12: load-literal %0, nothing

13: drain %0

14: jump 5

15: drop %0 # label(0), from(5:)

16: drain %0

17: load-variable %0, var 904

18: return %0

```
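
To give a feel for how a register machine with indexed control flow executes a loop like this, here is a toy interpreter in Rust. It is a simplified sketch, not the evaluator from this PR: the `Instr` enum, `run`, and `sum_program` are hypothetical, values are plain integers, and `Iterate` pulls from a slice owned by the machine rather than from a stream held in a register.

```rust
use std::collections::HashMap;

/// A handful of made-up instructions, loosely modelled on the listing above.
#[derive(Clone, Copy)]
enum Instr {
    LoadLiteral { dst: usize, value: i64 },
    StoreVar { var: usize, src: usize },
    LoadVar { dst: usize, var: usize },
    /// regs[dst] = regs[dst] + regs[rhs]
    Add { dst: usize, rhs: usize },
    /// Put the next input item into `dst`, or jump to `end` when none are left.
    Iterate { dst: usize, end: usize },
    Jump(usize),
    Return(usize),
}

/// Runs `program` with a register file whose size is fixed up front.
fn run(program: &[Instr], num_registers: usize, data: &[i64]) -> i64 {
    let mut regs = vec![0i64; num_registers];
    let mut vars: HashMap<usize, i64> = HashMap::new();
    let mut items = data.iter().copied();
    let mut pc = 0;
    loop {
        let mut next = pc + 1;
        match program[pc] {
            Instr::LoadLiteral { dst, value } => regs[dst] = value,
            Instr::StoreVar { var, src } => {
                vars.insert(var, regs[src]);
            }
            Instr::LoadVar { dst, var } => regs[dst] = vars[&var],
            Instr::Add { dst, rhs } => regs[dst] += regs[rhs],
            Instr::Iterate { dst, end } => match items.next() {
                Some(item) => regs[dst] = item,
                None => next = end,
            },
            Instr::Jump(target) => next = target,
            Instr::Return(src) => return regs[src],
        }
        pc = next;
    }
}

/// `mut sum = 0; for n in $data { $sum += $n }; $sum`, with `sum` as variable 0.
fn sum_program() -> Vec<Instr> {
    vec![
        Instr::LoadLiteral { dst: 0, value: 0 }, // 0
        Instr::StoreVar { var: 0, src: 0 },      // 1
        Instr::Iterate { dst: 0, end: 7 },       // 2
        Instr::LoadVar { dst: 1, var: 0 },       // 3
        Instr::Add { dst: 1, rhs: 0 },           // 4
        Instr::StoreVar { var: 0, src: 1 },      // 5
        Instr::Jump(2),                          // 6
        Instr::LoadVar { dst: 0, var: 0 },       // 7
        Instr::Return(0),                        // 8
    ]
}
```

The loop body is just a jump back to an instruction index, which is the kind of control flow the description above refers to.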

# Benchmarks

All benchmarks run on a base model Mac Mini M1.

## Iterative Fibonacci sequence

This is about as best case as possible, making use of the much faster

control flow. Most code will not experience a speed improvement nearly

this large.

```nushell

def fib [n: int] {

mut a = 0

mut b = 1

for _ in 2..=$n {

let c = $a + $b

$a = $b

$b = $c

}

$b

}

use std bench

bench { 0..50 | each { |n| fib $n } }

```

IR disabled:

```

╭───────┬─────────────────╮

│ mean │ 1ms 924µs 665ns │

│ min │ 1ms 700µs 83ns │

│ max │ 3ms 450µs 125ns │

│ std │ 395µs 759ns │

│ times │ [list 50 items] │

╰───────┴─────────────────╯

```

IR enabled:

```

╭───────┬─────────────────╮

│ mean │ 452µs 820ns │

│ min │ 427µs 417ns │

│ max │ 540µs 167ns │

│ std │ 17µs 158ns │

│ times │ [list 50 items] │

╰───────┴─────────────────╯

```

## [gradient_benchmark_no_check.nu](https://github.com/nushell/nu_scripts/blob/main/benchmarks/gradient_benchmark_no_check.nu)

IR disabled:

```

╭───┬──────────────────╮

│ 0 │ 27ms 929µs 958ns │

│ 1 │ 21ms 153µs 459ns │

│ 2 │ 18ms 639µs 666ns │

│ 3 │ 19ms 554µs 583ns │

│ 4 │ 13ms 383µs 375ns │

│ 5 │ 11ms 328µs 208ns │

│ 6 │ 5ms 659µs 542ns │

╰───┴──────────────────╯

```

IR enabled:

```

╭───┬──────────────────╮

│ 0 │ 22ms 662µs │

│ 1 │ 17ms 221µs 792ns │

│ 2 │ 14ms 786µs 708ns │

│ 3 │ 13ms 876µs 834ns │

│ 4 │ 13ms 52µs 875ns │

│ 5 │ 11ms 269µs 666ns │

│ 6 │ 6ms 942µs 500ns │

╰───┴──────────────────╯

```

## [random-bytes.nu](https://github.com/nushell/nu_scripts/blob/main/benchmarks/random-bytes.nu)

I got pretty random results out of this benchmark so I decided not to

include it. Not clear why.

# User-Facing Changes

- IR compilation errors may appear even if the user isn't evaluating

with IR.

- IR evaluation can be enabled by setting the `NU_USE_IR` environment

variable to any value.

- New command `view ir` pretty-prints the IR for a block, and `view ir

--json` can be piped into an external tool like [`explore

ir`](https://github.com/devyn/nu_plugin_explore_ir).

# Tests + Formatting

All tests are passing with `NU_USE_IR=1`, and I've added some more eval

tests to compare the results for some very core operations. I will

probably want to add some more so we don't have to always check

`NU_USE_IR=1 toolkit test --workspace` on a regular basis.

# After Submitting

- [ ] release notes

- [ ] further documentation of instructions?

- [ ] post-release: publish `nu_plugin_explore_ir`

# Description

This PR introduces a new `Signals` struct to replace our ad hoc passing

around of `ctrlc: Option<Arc<AtomicBool>>`. Doing so has a few benefits:

- We can better enforce when/where resetting or triggering an interrupt

is allowed.

- Consolidates `nu_utils::ctrl_c::was_pressed` and other ad-hoc

re-implementations into a single place: `Signals::check`.

- This allows us to add other types of signals later if we want. E.g.,

exiting or suspension.

- Similarly, we can more easily change the underlying implementation if

we need to in the future.

- Places that used to have a `ctrlc` of `None` now use

`Signals::empty()`, so we can double check these usages for correctness

in the future.
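
The general shape of such a wrapper might look like the following. This is a sketch under assumptions, not the struct added in this PR: `SketchSignals`, `Interrupted`, `trigger`, and `reset` are hypothetical names, and only `check` and `empty` mirror names mentioned above.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;

/// Hypothetical error returned when an interrupt has been requested.
#[derive(Debug, PartialEq)]
struct Interrupted;

/// Hypothetical wrapper around the shared interrupt flag.
#[derive(Clone)]
struct SketchSignals {
    flag: Option<Arc<AtomicBool>>,
}

impl SketchSignals {
    fn new(flag: Arc<AtomicBool>) -> Self {
        Self { flag: Some(flag) }
    }

    /// A handle that can never be interrupted (replaces passing `None` around).
    fn empty() -> Self {
        Self { flag: None }
    }

    /// The single place where callers ask "should I stop?".
    fn check(&self) -> Result<(), Interrupted> {
        match &self.flag {
            Some(flag) if flag.load(Ordering::Relaxed) => Err(Interrupted),
            _ => Ok(()),
        }
    }

    fn trigger(&self) {
        if let Some(flag) = &self.flag {
            flag.store(true, Ordering::Relaxed);
        }
    }

    fn reset(&self) {
        if let Some(flag) = &self.flag {
            flag.store(false, Ordering::Relaxed);
        }
    }
}
```

Because callers only see `check`, the flag could later be swapped for a richer signal type without touching call sites.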

# Description

Fixes: #13189

The issue is caused by `error make` returning a `Value::Error`: when
Nushell passes it to `table -e` in `std help`, evaluation stops
immediately and the error message is rendered.

To solve it, I think it's safe to make these examples return `None`
directly; this doesn't change the result of `help error make`.

# User-Facing Changes

## Before

```nushell

~> help "error make"

Error: nu::shell::eval_block_with_input

× Eval block failed with pipeline input

╭─[NU_STDLIB_VIRTUAL_DIR/std/help.nu:692:21]

691 │ ] {

692 │ let commands = (scope commands | sort-by name)

· ───────┬──────

· ╰── source value

693 │

╰────

Error: × my custom error message

```

## After

```nushell

Create an error.

Search terms: panic, crash, throw

Category: core

This command:

- does not create a scope.

- is a built-in command.

- is a subcommand.

- is not part of a plugin.

- is not a custom command.

- is not a keyword.

Usage:

> error make {flags} <error_struct>

Flags:

-u, --unspanned - remove the origin label from the error

-h, --help - Display the help message for this command

Signatures:

<nothing> | error make[ <record>] -> <any>

Parameters:

error_struct: <record> The error to create.

Examples:

Create a simple custom error

> error make {msg: "my custom error message"}

Create a more complex custom error

> error make {

msg: "my custom error message"

label: {

text: "my custom label text" # not mandatory unless $.label exists

# optional

span: {

# if $.label.span exists, both start and end must be present

start: 123

end: 456

}

}

help: "A help string, suggesting a fix to the user" # optional

}

Create a custom error for a custom command that shows the span of the argument

> def foo [x] {

error make {

msg: "this is fishy"

label: {

text: "fish right here"

span: (metadata $x).span

}

}

}

```

# Tests + Formatting

Added 1 test

# Description

Complete the `--numbered` removal that was started with the deprecation

in #13112.

# User-Facing Changes

Breaking change - Use `| enumerate` in place of `--numbered` as shown in

the help example

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

Searched online doc for `--numbered` to ensure no other usage needed to

be updated.

# Description

Provides the ability to use http commands as part of a pipeline.

Additionally, this pull request extends the pipeline metadata to add a

content_type field. The content_type metadata field allows commands such

as `to json` to set the metadata in the pipeline allowing the http

commands to use it when making requests.

This pull request also introduces the ability to directly stream http

requests from streaming pipelines.

One other small change is that Content-Type will always be set if it is

passed in to the http commands, either indirectly or through the content

type flag. Previously it was not preserved with requests that were not

of type json or form data.
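
One way to picture how the header value could be chosen is sketched below. This is an illustration, not the code in this PR: `PipelineMetadata` is a hypothetical stand-in with only the field discussed here, `resolve_content_type` is a made-up helper, and the sketch assumes that an explicit flag takes precedence over pipeline metadata, which the description above does not state.

```rust
/// Hypothetical stand-in for pipeline metadata; only the field discussed above.
struct PipelineMetadata {
    content_type: Option<String>,
}

/// Picks the Content-Type for a request.
/// Assumption for this sketch: the explicit flag wins over pipeline metadata.
fn resolve_content_type(
    flag: Option<&str>,
    metadata: Option<&PipelineMetadata>,
) -> Option<String> {
    flag.map(str::to_string)
        .or_else(|| metadata.and_then(|m| m.content_type.clone()))
}
```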

# User-Facing Changes

* `http post`, `http put`, `http patch`, `http delete` can be used as

part of a pipeline

* `to text`, `to json`, `from json` all set the content_type metadata

field and the http commands will utilize them when making requests.

Recommend holding until after #13125 is fully digested and *possibly*

until 0.96.

# Description

Fixes one of the issues described in #13125

The `do` signature included a `SyntaxShape::Any` as one of the possible

first-positional types. This is incorrect. `do` only takes a closure as

a positional. This had the result of:

1. Moving what should have been a parser error to evaluation-time

## Before

```nu

> do 1

Error: nu::shell::cant_convert

× Can't convert to Closure.

╭─[entry #26:1:4]

1 │ do 1

· ┬

· ╰── can't convert int to Closure

╰────

```

## After

```nu

> do 1

Error: nu::parser::parse_mismatch

× Parse mismatch during operation.

╭─[entry #5:1:4]

1 │ do 1

· ┬

· ╰── expected block, closure or record

╰────

```

2. Masking a bad test in `std assert`

This is a bit convoluted, but `std assert` tests included testing

`assert error` to make sure it:

* Asserts on bad code

* Doesn't assert on good code

The good-code test was broken, and was essentially bad-code (really

bad-code) that wasn't getting caught due to the bad signature.

Fixing this resulted in *parse time* failures on every call to

`test_asserts` (not something that particular test was designed to

handle).

This PR also fixes the test case to properly evaluate `std assert error`

against a good code path.

# User-Facing Changes

* Error-type returned (possible breaking change?)

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

N/A

# Description

Some commands in `nu-cmd-lang` are not classified as keywords even

though they should be.

# User-Facing Changes

In the output of `which`, `scope commands`, and `help commands`, some

commands will now have a `type` of `keyword` instead of `built-in`.


# Description

This allows plugins to report their version (and potentially other

metadata in the future). The version is shown in `plugin list` and in

`version`.

The metadata is stored in the registry file, and reflects whatever was

retrieved on `plugin add`, not necessarily the running binary. This can

help you to diagnose if there's some kind of mismatch with what you

expect. We could potentially use this functionality to show a warning or

error if a plugin being run does not have the same version as what was

in the cache file, suggesting `plugin add` be run again, but I haven't

done that at this point.

It is optional, and it requires the plugin author to make some code

changes if they want to provide it, since I can't automatically

determine the version of the calling crate or anything tricky like that

to do it.

Example:

```

> plugin list | select name version is_running pid

╭───┬────────────────┬─────────┬────────────┬─────╮

│ # │ name │ version │ is_running │ pid │

├───┼────────────────┼─────────┼────────────┼─────┤

│ 0 │ example │ 0.93.1 │ false │ │

│ 1 │ gstat │ 0.93.1 │ false │ │

│ 2 │ inc │ 0.93.1 │ false │ │

│ 3 │ python_example │ 0.1.0 │ false │ │

╰───┴────────────────┴─────────┴────────────┴─────╯

```

cc @maxim-uvarov (he asked for it)

# User-Facing Changes

- `plugin list` gets a `version` column

- `version` shows plugin versions when available

- plugin authors *should* add `fn metadata()` to their `impl Plugin`,

but don't have to
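
The opt-in nature of this can be sketched with a default trait method. The types below are hypothetical and self-contained (`SketchPlugin`, `SketchMetadata`, `version_column`); they are not the `nu-plugin` API, and only the method name `metadata` comes from the description above.

```rust
/// Hypothetical metadata type for this sketch.
#[derive(Debug, Default, PartialEq)]
struct SketchMetadata {
    version: Option<String>,
}

/// Hypothetical plugin trait: `metadata` has a default, so it is optional.
trait SketchPlugin {
    fn metadata(&self) -> SketchMetadata {
        SketchMetadata::default()
    }
}

/// A plugin that was written before versions could be reported.
struct Legacy;
impl SketchPlugin for Legacy {}

/// A plugin that opts in. In a Cargo build, the version string would usually
/// come from the `CARGO_PKG_VERSION` variable that Cargo sets at compile time.
struct Versioned;
impl SketchPlugin for Versioned {
    fn metadata(&self) -> SketchMetadata {
        SketchMetadata { version: Some("0.1.0".to_string()) }
    }
}

/// What a `version` column could show for a plugin: empty when unknown.
fn version_column(plugin: &dyn SketchPlugin) -> String {
    plugin.metadata().version.unwrap_or_default()
}
```

Existing plugins keep compiling unchanged and simply show an empty version.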

# Tests + Formatting

Tested the low level stuff and also the `plugin list` column.

# After Submitting

- [ ] update plugin guide docs

- [ ] update plugin protocol docs (`Metadata` call & response)

- [ ] update plugin template (`fn metadata()` should be easy)

- [ ] release notes

# Description

#12056 added support for default and type-checked arguments in `do`

closures.

This PR adds examples for those features. It also:

* Fixes the TODO (a closure parameter that wasn't being used) that was

preventing a result from being added

* Removes extraneous commas from the descriptions

* Adds an example demonstrating multiple positional closure arguments

# User-Facing Changes

Help examples only

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`


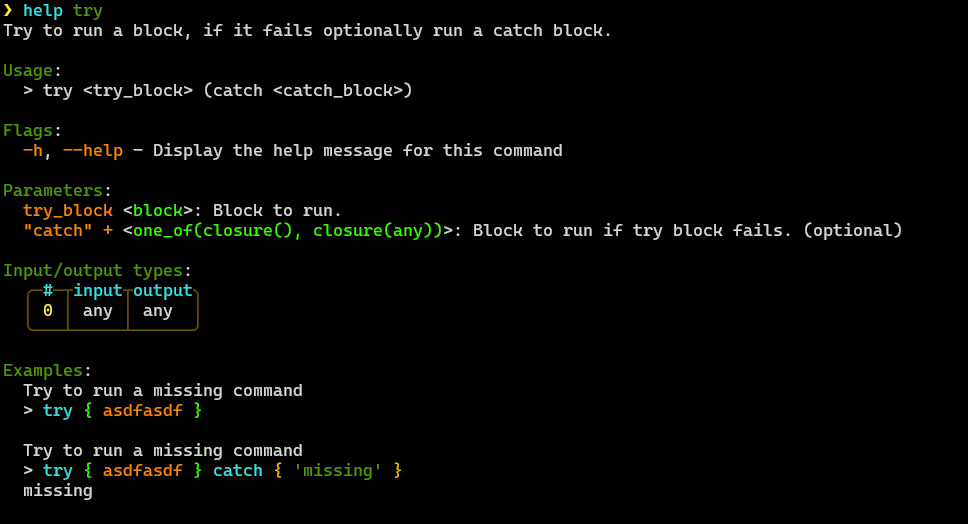

# Description

This PR updates the `try` command to show that `catch` is a closure and

can be used as such.

### Before

### After

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

In this PR, I continue my tradition of trivial but hopefully helpful

`help` tweaks. As mentioned in #13143, I noticed that `help -f else`

oddly didn't return the `if` statement itself. Perhaps not so oddly,

since who the heck is going to go looking for *"else"* in the help?

Well, I did ...

Added *"else"* and *"conditional"* to the search terms for `if`.

I'll work on the meat of #13143 next; that's more substantive.

# User-Facing Changes

Help only

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`


# After Submitting


# Description

Removes the `which-support` cargo feature and makes all of its

feature-gated code enabled by default in all builds. I'm not sure why

this one command is gated behind a feature. It seems to be a relic of

older code where we had features for what seems like every command.

# Description

I've noticed this several times but kept forgetting to fix it:

The example given for `help def` for the `--wrapped` flag is:

```nu

Define a custom wrapper for an external command

> def --wrapped my-echo [...rest] { echo $rest }; my-echo spam

╭───┬──────╮

│ 0 │ spam │

╰───┴──────╯

```

That's ... odd, since (a) it specifically says *"for an external"*

command, and yet uses (and shows the output from) the builtin `echo`.

Also, (b) I believe `--wrapped` is *only* applicable to external

commands. Finally, (c) the `my-echo spam` doesn't even demonstrate a

wrapped argument.

Unless I'm truly missing something, the example just makes no sense.

This updates the example to actually demonstrate `def --wrapped` with the
*external* `^echo`. It passes the `-e` flag so that `^echo` interprets the
tab escape (`\t`) in the string.

```nu

> def --wrapped my-echo [...rest] { ^echo ...$rest }; my-echo -e 'spam\tspam'

spam spam

```

# User-Facing Changes

Help example only.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting


# Description

#7777 removed the `--numbered` flag from `each`, `par-each`, `reduce`,

and `each while`. It was suggested at the time that it should be removed

from `for` as well, but for several reasons it wasn't.

This PR deprecates `--numbered` in anticipation of removing it in 0.96.

Note: Please review carefully, as this is my first "real" Rust/Nushell

code. I was hoping that some prior commit would be useful as a template,

but since this was an argument on a parser keyword, I didn't find too

much useful. So I had to actually find the relevant helpers in the code

and `nu_protocol` doc and learn how to use them - oh darn ;-) But please

make sure I did it correctly.

# User-Facing Changes

* Use of `--numbered` will result in a deprecation warning.

* Changed help example to demonstrate the new syntax.

* Help shows deprecation notice on the flag

# Description

Per a Discord question

(https://discord.com/channels/601130461678272522/1244293194603167845/1247794228696711198),

this adds examples to the `help` for both:

* `cd`

* `def`

to demonstrate that `def --env` is required when changing directories in

a custom command.

Since the existing examples for `def` were a bit more complex (and had

output) but the `cd` ones were more simplified, I did use slightly

different examples in each. Either or both could be tweaked if desired.

# User-Facing Changes

Command `help` examples

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

N/A

---------

Co-authored-by: Jakub Žádník <kubouch@gmail.com>

As discussed in https://github.com/nushell/nushell/pull/12749, we no

longer need to call `std::env::set_current_dir()` to sync `$env.PWD`

with the actual working directory. This PR removes the call from

`EngineState::merge_env()`.

Bumps [shadow-rs](https://github.com/baoyachi/shadow-rs) from 0.27.1 to

0.28.0.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a

href="https://github.com/baoyachi/shadow-rs/releases">shadow-rs's

releases</a>.</em></p>

<blockquote>

<h2>fix cargo clippy</h2>

<p><a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/160">#160</a></p>

<p>Thx <a href="https://github.com/qartik"><code>@qartik</code></a></p>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="ba9f8b0c2b"><code>ba9f8b0</code></a>

Update Cargo.toml</li>

<li><a

href="d1b724c1e7"><code>d1b724c</code></a>

Merge pull request <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/160">#160</a>

from qartik/patch-1</li>

<li><a

href="505108d5d6"><code>505108d</code></a>

Allow missing_docs for deprecated CLAP_VERSION constant</li>

<li>See full diff in <a

href="https://github.com/baoyachi/shadow-rs/compare/v0.27.1...v0.28.0">compare

view</a></li>

</ul>

</details>


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

# Description

Removes the old `nu-cmd-dataframe` crate in favor of the polars plugin.

As such, this PR also removes the `dataframe` feature, related CI, and

full releases of nushell.

# Description

This PR allows byte streams to optionally be colored as being

specifically binary or string data, which guarantees that they'll be

converted to `Binary` or `String` appropriately on `into_value()`,

making them compatible with `Type` guarantees. This makes them

significantly more broadly usable for command input and output.

There is still an `Unknown` type for byte streams coming from external

commands, which uses the same behavior as we previously did where it's a

string if it's UTF-8.
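The conversion rule described above can be sketched roughly as follows. The `ByteStreamType`, `Value`, and `NonUtf8Error` types here are simplified stand-ins, not the real `nu_protocol` definitions, and returning an error for a string-typed stream that turns out not to be UTF-8 is an assumption.

```rust
/// Simplified stand-in for the "color" attached to a byte stream.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ByteStreamType {
    Binary,
    String,
    Unknown,
}

/// Simplified stand-in for the resulting value.
#[derive(Debug, PartialEq)]
pub enum Value {
    Binary(Vec<u8>),
    String(String),
}

/// Hypothetical error for string-typed streams that are not valid UTF-8.
#[derive(Debug, PartialEq)]
pub struct NonUtf8Error;

/// Convert fully collected bytes to a value according to the stream's type.
pub fn into_value(bytes: Vec<u8>, ty: ByteStreamType) -> Result<Value, NonUtf8Error> {
    match ty {
        // Known binary: always binary, even if the bytes happen to be UTF-8.
        ByteStreamType::Binary => Ok(Value::Binary(bytes)),
        // Known string: must be valid UTF-8 (erroring otherwise is an assumption).
        ByteStreamType::String => String::from_utf8(bytes)
            .map(Value::String)
            .map_err(|_| NonUtf8Error),
        // Unknown (e.g. external commands): string if UTF-8, binary otherwise.
        ByteStreamType::Unknown => match String::from_utf8(bytes) {
            Ok(s) => Ok(Value::String(s)),
            Err(e) => Ok(Value::Binary(e.into_bytes())),
        },
    }
}
```

The point of the explicit `Binary` and `String` cases is that the result type no longer depends on the contents of the stream, which is what makes a `Type` guarantee possible.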

A small number of commands were updated to take advantage of this, just

to prove the point. I will be adding more after this merges.

# User-Facing Changes

- New types in `describe`: `string (stream)`, `binary (stream)`

- These commands now return a stream if their input was a stream:
  - `into binary`
  - `into string`
  - `bytes collect`
  - `str join`
  - `first` (binary)
  - `last` (binary)
  - `take` (binary)
  - `skip` (binary)
- Streams that are explicitly binary colored will print as a streaming hexdump
  - example:
    ```nushell
    1.. | each { into binary } | bytes collect
    ```

# Tests + Formatting

I've added some tests to cover it at a basic level, and it doesn't break

anything existing, but I do think more would be nice. Some of those will

come when I modify more commands to stream.

# After Submitting

There are a few things I'm not quite satisfied with:

- **String trimming behavior.** We automatically trim newlines from

streams from external commands, but I don't think we should do this with

internal commands. If I call a command that happens to turn my string

into a stream, I don't want the newline to suddenly disappear. I changed

this to specifically do it only on `Child` and `File`, but I don't know

if this is quite right, and maybe we should bring back the old flag for

`trim_end_newline`

- **Known binary always resulting in a hexdump.** It would be nice to

have a `print --raw`, so that we can put binary data on stdout

explicitly if we want to. This PR doesn't change how external commands

work though - they still dump straight to stdout.

Otherwise, here's the normal checklist:

- [ ] release notes

- [ ] docs update for plugin protocol changes (added `type` field)

---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

# Description

Changes `get_full_help` to take a `&dyn Command` instead of multiple

arguments (`&Signature`, `&Examples`, `is_parser_keyword`). All of these

arguments can be gathered from a `Command`, so there is no need to pass

the pieces to `get_full_help`.

This PR also fixes an issue where the search terms are not shown if

`--help` is used on a command.

# Description

Kind of a vague title, but this PR does two main things:

1. Rather than overriding functions like `Command::is_parser_keyword`,

this PR instead changes commands to override `Command::command_type`.

The `CommandType` returned by `Command::command_type` is then used to

automatically determine whether `Command::is_parser_keyword` and the

other `is_{type}` functions should return true. These changes allow us

to remove the `CommandType::Other` case and should also guarantee that

only one of the `is_{type}` functions on `Command` will return true.

2. Uses the new, reworked `Command::command_type` function in the `scope

commands` and `which` commands.
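The first point can be sketched as a trait whose `is_{type}` helpers are all derived from a single overridable method. This is a trimmed-down illustration: the variant list, the `Builtin` default, and the `If`/`Ls` structs are assumptions, not the real `nu_protocol` definitions.

```rust
/// Trimmed-down, hypothetical version of the command type enum.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum CommandType {
    Builtin,
    Custom,
    Keyword,
    External,
    Alias,
    Plugin,
}

pub trait Command {
    fn name(&self) -> &str;

    /// The only method a command overrides to declare what it is.
    fn command_type(&self) -> CommandType {
        CommandType::Builtin
    }

    // The helpers below are derived from `command_type`, so at most one
    // of them can return true for any given command.
    fn is_builtin(&self) -> bool {
        self.command_type() == CommandType::Builtin
    }
    fn is_keyword(&self) -> bool {
        self.command_type() == CommandType::Keyword
    }
    fn is_plugin(&self) -> bool {
        self.command_type() == CommandType::Plugin
    }
}

/// A parser keyword overrides `command_type` instead of `is_keyword`.
pub struct If;
impl Command for If {
    fn name(&self) -> &str {
        "if"
    }
    fn command_type(&self) -> CommandType {
        CommandType::Keyword
    }
}

/// An ordinary built-in relies on the default.
pub struct Ls;
impl Command for Ls {
    fn name(&self) -> &str {
        "ls"
    }
}
```

Because every helper compares against the same value, there is no way for a command to claim to be both a keyword and a plugin, which is the guarantee the description mentions.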

# User-Facing Changes

- Breaking change for `scope commands`: multiple columns (`is_builtin`,

`is_keyword`, `is_plugin`, etc.) have been merged into the `type`

column.

- Breaking change: the `which` command can now report `plugin` or

`keyword` instead of `built-in` in the `type` column. It may also now

report `external` instead of `custom` in the `type` column for known

`extern`s.

# Description

This changes the `collect` command so that it doesn't require a closure.

A closure is still accepted, but it is now optional.

Before:

```nushell

open foo.json | insert foo bar | collect { save -f foo.json }

```

After:

```nushell

open foo.json | insert foo bar | collect | save -f foo.json

```

The closure argument isn't really necessary, as collected values are also
supported as `PipelineData`.

# User-Facing Changes

- `collect` command changed

# Tests + Formatting

Example changed to reflect.

# After Submitting

- [ ] release notes

- [ ] we may want to deprecate the closure arg?

# Description

This PR introduces a `ByteStream` type which is a `Read`-able stream of

bytes. Internally, it has an enum over three different byte stream

sources:

```rust

pub enum ByteStreamSource {

Read(Box<dyn Read + Send + 'static>),

File(File),

Child(ChildProcess),

}

```

This is in comparison to the current `RawStream` type, which is an

`Iterator<Item = Vec<u8>>` and has to allocate for each read chunk.
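To illustrate the allocation difference, here is a rough sketch using only the standard library. The two functions are hypothetical examples, not code from this PR: one consumes an iterator of owned chunks (a fresh `Vec<u8>` per chunk, as with `RawStream`), the other drains any `Read` through a single reused buffer.

```rust
use std::io::{self, Read};

/// Count bytes from an iterator of owned chunks.
/// Whoever produces the iterator must allocate a new `Vec<u8>` per chunk.
pub fn count_from_chunks(chunks: impl Iterator<Item = Vec<u8>>) -> usize {
    chunks.map(|chunk| chunk.len()).sum()
}

/// Count bytes from any reader, reusing one fixed-size buffer for every read.
pub fn count_from_reader(mut reader: impl Read) -> io::Result<usize> {
    let mut buf = [0u8; 8192];
    let mut total = 0;
    loop {
        match reader.read(&mut buf) {
            // A zero-length read signals end of stream.
            Ok(0) => return Ok(total),
            Ok(n) => total += n,
            // Retry reads that were interrupted by a signal.
            Err(e) if e.kind() == io::ErrorKind::Interrupted => continue,
            Err(e) => return Err(e),
        }
    }
}
```

For example, `count_from_reader(&b"hello world"[..])` works because `&[u8]` implements `Read`; a `File` or a child process's stdout could be passed the same way.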

Currently, `PipelineData::ExternalStream` serves a weird dual role where

it is either external command output or a wrapper around `RawStream`.

`ByteStream` makes this distinction more clear (via `ByteStreamSource`)

and replaces `PipelineData::ExternalStream` in this PR:

```rust

pub enum PipelineData {

Empty,

Value(Value, Option<PipelineMetadata>),

ListStream(ListStream, Option<PipelineMetadata>),

ByteStream(ByteStream, Option<PipelineMetadata>),

}

```

The PR is relatively large, but a decent amount of it is just repetitive

changes.

This PR fixes #7017, fixes #10763, and fixes #12369.

This PR also improves performance when piping external commands. Nushell

should, in most cases, have competitive pipeline throughput compared to,

e.g., bash.

| Command | Before (MB/s) | After (MB/s) | Bash (MB/s) |
| ----------------------------------------------- | ------------: | -----------: | ----------: |
| `throughput \| rg 'x'` | 3059 | 3744 | 3739 |
| `throughput \| nu --testbin relay o> /dev/null` | 3508 | 8087 | 8136 |

# User-Facing Changes

- This is a breaking change for the plugin communication protocol,

because the `ExternalStreamInfo` was replaced with `ByteStreamInfo`.

Plugins now only have to deal with a single input stream, as opposed to

the previous three streams: stdout, stderr, and exit code.

- The output of `describe` has been changed for external/byte streams.

- Temporary breaking change: `bytes starts-with` no longer works with

byte streams. This is to keep the PR smaller, and `bytes ends-with`

already does not work on byte streams.

- If a process core dumped, then instead of having a `Value::Error` in

the `exit_code` column of the output returned from `complete`, it now is

a `Value::Int` with the negation of the signal number.
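The last point, about core dumps, can be sketched as below. `ChildEnd` and `exit_code_column` are hypothetical names for illustration; only the two cases the description mentions are modeled, and how other signal terminations are reported is not covered here.

```rust
/// Hypothetical model of how a child process ended.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ChildEnd {
    /// Normal exit with a status code.
    Exited(i32),
    /// The process core dumped after receiving `signal`.
    CoreDumped { signal: i32 },
}

/// Integer to place in the `exit_code` column of `complete`'s output.
pub fn exit_code_column(end: ChildEnd) -> i64 {
    match end {
        ChildEnd::Exited(code) => i64::from(code),
        // Negated signal number, rather than an error value.
        ChildEnd::CoreDumped { signal } => -i64::from(signal),
    }
}
```

Under this sketch, a process that core dumped on signal 11 would show `-11` in the `exit_code` column.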

# After Submitting

- Update docs and book as necessary

- Release notes (e.g., plugin protocol changes)

- Adapt/convert commands to work with byte streams (high priority is

`str length`, `bytes starts-with`, and maybe `bytes ends-with`).

- Refactor the `tee` code; Devyn has already done some work on this.

---------

Co-authored-by: Devyn Cairns <devyn.cairns@gmail.com>