As discussed in https://github.com/nushell/nushell/pull/12749, we no

longer need to call `std::env::set_current_dir()` to sync `$env.PWD`

with the actual working directory. This PR removes the call from

`EngineState::merge_env()`.

Refer to #12603 for part 1.

We need to be careful when migrating to the new API, because the new API

has slightly different semantics (PWD can contain symlinks). This PR

handles the "obviously safe" part of the migrations. Namely, it handles

two specific use cases:

* Passing PWD into `canonicalize_with()`

* Passing PWD into `EngineState::merge_env()`

The first case is safe because symlinks are canonicalized away. The

second case is safe because `EngineState::merge_env()` only uses PWD to

call `std::env::set_current_dir()`, which shouldn't affect Nushell. The

commit message contains detailed stats on the updated files.

Because these migrations touch a lot of files, I want to keep these PRs

small to avoid merge conflicts.

This is the first PR towards migrating to a new `$env.PWD` API that

returns potentially un-canonicalized paths. Refer to PR #12515 for

motivations.

## New API: `EngineState::cwd()`

The goal of the new API is to cover both parse-time and runtime use
cases, and to avoid unintentional misuse. It takes an `Option<Stack>` as
an argument; if supplied, it searches for `$env.PWD` on the stack in
addition to the engine state. I think this design leaves less confusion
over parse-time and runtime environments. If you have access to a stack,
just supply it; otherwise supply `None`.
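The lookup order described above can be sketched roughly as follows. This is a minimal illustration, not the actual implementation: the `EngineState` and `Stack` types here are hypothetical stand-ins that only model an environment map, the stack is taken by reference, and the return type is an assumption.

```rust
use std::collections::HashMap;
use std::path::PathBuf;

// Hypothetical stand-ins for Nushell's `EngineState` and `Stack`; only the
// environment lookup is modeled here.
#[derive(Default)]
struct EngineState {
    env: HashMap<String, String>,
}

#[derive(Default)]
struct Stack {
    env: HashMap<String, String>,
}

impl EngineState {
    /// Returns `$env.PWD`, preferring the stack (runtime) over the engine
    /// state (parse time). The path is returned as stored, so it may
    /// contain symlinks.
    fn cwd(&self, stack: Option<&Stack>) -> Option<PathBuf> {
        stack
            .and_then(|s| s.env.get("PWD"))
            .or_else(|| self.env.get("PWD"))
            .map(PathBuf::from)
    }
}

fn main() {
    let mut engine_state = EngineState::default();
    engine_state.env.insert("PWD".into(), "/home/user".into());

    let mut stack = Stack::default();
    stack.env.insert("PWD".into(), "/home/user/link".into());

    // Parse time: no stack is available, so pass `None`.
    println!("{:?}", engine_state.cwd(None));
    // Runtime: the value on the stack shadows the engine state's value.
    println!("{:?}", engine_state.cwd(Some(&stack)));
}
```

Having a single entry point with an optional stack means callers cannot accidentally pick a parse-time-only helper while a stack is in scope.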

## Deprecation of other PWD-related APIs

Other APIs are re-implemented using `EngineState::cwd()` and properly

documented. They're marked deprecated, but their behavior is unchanged.

Unused APIs are deleted, and code that accesses `$env.PWD` directly

without using an API is rewritten.

Deprecated APIs:

* `EngineState::current_work_dir()`

* `StateWorkingSet::get_cwd()`

* `env::current_dir()`

* `env::current_dir_str()`

* `env::current_dir_const()`

* `env::current_dir_str_const()`

Other changes:

* `EngineState::get_cwd()` (deleted)

* `StateWorkingSet::list_env()` (deleted)

* `repl::do_run_cmd()` (rewritten with `env::current_dir_str()`)

## `cd` and `pwd` now use logical paths by default

This pulls the changes from PR #12515. It's currently somewhat broken

because using non-canonicalized paths exposed a bug in our path

normalization logic (Issue #12602). Once that is fixed, this should

work.

## Future plans

This PR needs some tests. Which test helpers should I use, and where

should I put those tests?

I noticed that unquoted paths are expanded within `eval_filepath()` and

`eval_directory()` before they even reach the `cd` command. This means

every path is expanded twice. Is this intended?

Once this PR lands, the plan is to review all usages of the deprecated

APIs and migrate them to `EngineState::cwd()`. In the meantime, these

usages are annotated with `#[allow(deprecated)]` to avoid breaking CI.
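As a rough sketch of the migration pattern described above: an old function is kept, marked deprecated, and re-implemented on top of the new one, while call sites that have not been migrated yet silence the lint locally. The free functions `cwd()` and `current_dir_str()` below are simplified, hypothetical stand-ins, not the real signatures.

```rust
// Hypothetical free function standing in for the new API.
fn cwd() -> String {
    String::from("/home/user")
}

// Hypothetical stand-in for one of the deprecated helpers.
#[deprecated(note = "use `cwd()` instead")]
fn current_dir_str() -> String {
    // Re-implemented on top of the new API, so behavior is unchanged.
    cwd()
}

fn main() {
    // Not yet migrated: silence the lint locally so a build that denies
    // warnings keeps passing.
    #[allow(deprecated)]
    let old = current_dir_str();

    let new = cwd();
    assert_eq!(old, new);
    println!("{new}");
}
```

Scoping the `#[allow(deprecated)]` to the individual statement keeps the remaining migration work easy to find with a plain text search.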

---------

Co-authored-by: Jakub Žádník <kubouch@gmail.com>

# Description

Fixes: #11996

After this change `let t = timeit ^ls` will list current directory to

stdout.

```

❯ let t = timeit ^ls

CODE_OF_CONDUCT.md Cargo.lock Cross.toml README.md aaa benches devdocs here11 scripts target toolkit.nu wix

CONTRIBUTING.md Cargo.toml LICENSE a.txt assets crates docker rust-toolchain.toml src tests typos.toml

```

If users don't want this behavior, they can easily redirect stdout to
`std null-device`:

```

> use std

> let t = timeit { ^ls o> (std null-device) }

```

# User-Facing Changes

NaN

# Tests + Formatting

Done

# After Submitting

Nan

---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

# Description

When implementing a `Command`, one must also import all the types

present in the function signatures for `Command`. This makes it so that

we often import the same set of types in each command implementation

file. E.g., something like this:

```rust
use nu_protocol::ast::Call;
use nu_protocol::engine::{Command, EngineState, Stack};
use nu_protocol::{
    record, Category, Example, IntoInterruptiblePipelineData, IntoPipelineData, PipelineData,
    ShellError, Signature, Span, Type, Value,
};
```

This PR adds the `nu_engine::command_prelude` module which contains the

necessary and commonly used types to implement a `Command`:

```rust
// command_prelude.rs
pub use crate::CallExt;
pub use nu_protocol::{
    ast::{Call, CellPath},
    engine::{Command, EngineState, Stack},
    record, Category, Example, IntoInterruptiblePipelineData, IntoPipelineData, IntoSpanned,
    PipelineData, Record, ShellError, Signature, Span, Spanned, SyntaxShape, Type, Value,
};
```

This should reduce the boilerplate needed to implement a command and

also gives us a place to track the breadth of the `Command` API. I tried

to be conservative with what went into the prelude modules, since it

might be hard/annoying to remove items from the prelude in the future.

Let me know if something should be included or excluded.
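To illustrate the prelude pattern in isolation, here is a self-contained miniature. The `protocol` module and its `Value`, `ShellError`, and `Command` items are hypothetical, heavily simplified stand-ins for the `nu_protocol` types; only the re-export and glob-import structure is the point.

```rust
// Hypothetical stand-ins for the `nu_protocol` items a command needs.
mod protocol {
    #[derive(Debug, PartialEq)]
    pub enum Value {
        Int(i64),
        String(String),
    }

    #[derive(Debug)]
    pub struct ShellError(pub String);

    pub trait Command {
        fn name(&self) -> &str;
        fn run(&self, input: Value) -> Result<Value, ShellError>;
    }
}

mod command_prelude {
    // One place that lists everything a command implementation needs.
    pub use super::protocol::{Command, ShellError, Value};
}

mod str_length {
    // A single glob import replaces the per-file list of `use` lines.
    use super::command_prelude::*;

    pub struct StrLength;

    impl Command for StrLength {
        fn name(&self) -> &str {
            "str length"
        }

        fn run(&self, input: Value) -> Result<Value, ShellError> {
            match input {
                Value::String(s) => Ok(Value::Int(s.chars().count() as i64)),
                other => Err(ShellError(format!("expected string, got {other:?}"))),
            }
        }
    }
}

fn main() {
    use command_prelude::*;

    let cmd = str_length::StrLength;
    let result = cmd.run(Value::String("nushell".into()));
    println!("{} -> {:?}", cmd.name(), result);
}
```

Because every command file glob-imports the prelude, removing an item later touches every user at once, which is the reason for being conservative about what goes in.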

# Description

The PR overhauls how IO redirection is handled, allowing more explicit

and fine-grain control over `stdout` and `stderr` output as well as more

efficient IO and piping.

To summarize the changes in this PR:

- Added a new `IoStream` type to indicate the intended destination for a

pipeline element's `stdout` and `stderr`.

- The `stdout` and `stderr` `IoStream`s are stored in the `Stack` to
avoid adding 6 additional arguments to every eval function and
`Command::run`. The `stdout` and `stderr` streams can be temporarily
overwritten through functions on `Stack`, and these functions will return
a guard that restores the original `stdout` and `stderr` when dropped.

- In the AST, redirections are now directly part of a `PipelineElement`

as a `Option<Redirection>` field instead of having multiple different

`PipelineElement` enum variants for each kind of redirection. This

required changes to the parser, mainly in `lite_parser.rs`.

- `Command`s can also set a `IoStream` override/redirection which will

apply to the previous command in the pipeline. This is used, for

example, in `ignore` to allow the previous external command to have its

stdout redirected to `Stdio::null()` at spawn time. In contrast, the

current implementation has to create an os pipe and manually consume the

output on nushell's side. File and pipe redirections (`o>`, `e>`, `e>|`,

etc.) have precedence over overrides from commands.
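The guard-based override of the `Stack` streams mentioned in the list above follows the usual RAII pattern. The sketch below is an assumption-laden illustration: `IoStream` is reduced to three variants, and `override_streams` and `StreamGuard` are hypothetical names, not the functions added by this PR.

```rust
use std::ops::{Deref, DerefMut};

// Hypothetical, simplified versions of the types named above.
#[derive(Clone, Copy, Debug, PartialEq)]
enum IoStream {
    Inherit,
    Pipe,
    Null,
}

#[derive(Debug)]
struct Stack {
    stdout: IoStream,
    stderr: IoStream,
}

/// Borrows the stack mutably and puts the saved streams back on drop.
struct StreamGuard<'a> {
    stack: &'a mut Stack,
    old_stdout: IoStream,
    old_stderr: IoStream,
}

impl Stack {
    /// Temporarily overrides both streams until the guard is dropped.
    fn override_streams(&mut self, stdout: IoStream, stderr: IoStream) -> StreamGuard<'_> {
        let old_stdout = std::mem::replace(&mut self.stdout, stdout);
        let old_stderr = std::mem::replace(&mut self.stderr, stderr);
        StreamGuard { stack: self, old_stdout, old_stderr }
    }
}

// The guard derefs to the stack so evaluation code can keep using it.
impl Deref for StreamGuard<'_> {
    type Target = Stack;
    fn deref(&self) -> &Stack {
        &*self.stack
    }
}

impl DerefMut for StreamGuard<'_> {
    fn deref_mut(&mut self) -> &mut Stack {
        &mut *self.stack
    }
}

impl Drop for StreamGuard<'_> {
    fn drop(&mut self) {
        self.stack.stdout = self.old_stdout;
        self.stack.stderr = self.old_stderr;
    }
}

fn main() {
    let mut stack = Stack { stdout: IoStream::Inherit, stderr: IoStream::Inherit };
    {
        let guard = stack.override_streams(IoStream::Pipe, IoStream::Null);
        // Evaluation inside this scope sees the overridden streams.
        println!("inside: {:?}", *guard);
    }
    // The guard has been dropped, so the original streams are back.
    println!("after: {:?}", stack);
}
```

Restoring in `Drop` means early returns and `?` propagation inside the scope cannot leave the stack with the wrong streams.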

This PR improves piping and IO speed, partially addressing #10763. Using

the `throughput` command from that issue, this PR gives the following

speedup on my setup for the commands below:

| Command                   | Before (MB/s) | After (MB/s) | Bash (MB/s) |
| ------------------------- | -------------:| ------------:| -----------:|
| `throughput o> /dev/null` | 1169          | 52938        | 54305       |
| `throughput \| ignore`    | 840           | 55438        | N/A         |
| `throughput \| null`      | Error         | 53617        | N/A         |
| `throughput \| rg 'x'`    | 1165          | 3049         | 3736        |
| `(throughput) \| rg 'x'`  | 810           | 3085         | 3815        |

(Numbers above are the median samples for throughput)

This PR also paves the way to refactor our `ExternalStream` handling in

the various commands. For example, this PR already fixes the following

code:

```nushell

^sh -c 'echo -n "hello "; sleep 0; echo "world"' | find "hello world"

```

This returns an empty list on 0.90.1 and returns a highlighted "hello

world" on this PR.

Since the `stdout` and `stderr` `IoStream`s are available to commands
when they are run, this unlocks the potential for more convenient

behavior. E.g., the `find` command can disable its ansi highlighting if

it detects that the output `IoStream` is not the terminal. Knowing the

output streams will also allow background job output to be redirected

more easily and efficiently.

# User-Facing Changes

- External commands returned from closures will be collected (in most

cases):

```nushell

1..2 | each {|_| nu -c "print a" }

```

This gives `["a", "a"]` on this PR, whereas this used to print "a\na\n"

and then return an empty list.

```nushell

1..2 | each {|_| nu -c "print -e a" }

```

This gives `["", ""]` and prints "a\na\n" to stderr, whereas this used

to return an empty list and print "a\na\n" to stderr.

- Trailing new lines are always trimmed for external commands when

piping into internal commands or collecting it as a value. (Failure to

decode the output as utf-8 will keep the trailing newline for the last

binary value.) In the current nushell version, the following three code

snippets differ only in parenthesis placement, but they all also have

different outputs:

    1. `1..2 | each { ^echo a }`
       ```
       a
       a
       ╭────────────╮
       │ empty list │
       ╰────────────╯
       ```
    2. `1..2 | each { (^echo a) }`
       ```
       ╭───┬───╮
       │ 0 │ a │
       │ 1 │ a │
       ╰───┴───╯
       ```
    3. `1..2 | (each { ^echo a })`
       ```
       ╭───┬───╮
       │ 0 │ a │
       │   │   │
       │ 1 │ a │
       │   │   │
       ╰───┴───╯
       ```

    But in this PR, the above snippets will all have the same output:
    ```
    ╭───┬───╮
    │ 0 │ a │
    │ 1 │ a │
    ╰───┴───╯
    ```

- All existing flags on `run-external` are now deprecated.

- File redirections now apply to all commands inside a code block:

```nushell

(nu -c "print -e a"; nu -c "print -e b") e> test.out

```

This gives "a\nb\n" in `test.out` and prints nothing. The same result

would happen when printing to stdout and using a `o>` file redirection.

- External command output will (almost) never be ignored, and ignoring

output must be explicit now:

```nushell

(^echo a; ^echo b)

```

This prints "a\nb\n", whereas this used to print only "b\n". This only

applies to external commands; values and internal commands not in return

position will not print anything (e.g., `(echo a; echo b)` still only

prints "b").

- `complete` now always captures stderr (`do` is not necessary).

# After Submitting

The language guide and other documentation will need to be updated.

This is partially "feng-shui programming" of moving things to new

separate places.

The later commits include "`git blame` tollbooths" by moving out chunks

of code into new files, which requires an extra step to track things

with `git blame`. We can negotiate if you want to keep particular

things in their original place.

If egregious I tried to add a bit of documentation. If I see something

that is unused/unnecessarily `pub` I will try to remove that.

- Move `nu_protocol::Exportable` to `nu-parser`

- Guess doccomment for `Exportable`

- Move `Unit` enum from `value` to `AST`

- Move engine state `Variable` def into its folder

- Move error-related files in `nu-protocol` subdir

- Move `pipeline_data` module into its own folder

- Move `stream.rs` over into the `pipeline_data` mod

- Move `PipelineMetadata` into its own file

- Doccomment `PipelineMetadata`

- Remove unused `is_leap_year` in `value/mod`

- Note about criminal `type_compatible` helper

- Move duration fmting into new `value/duration.rs`

- Move filesize fmting logic to new `value/filesize`

- Split reexports from standard imports in `value/mod`

- Doccomment trait `CustomValue`

- Polish doccomments and intradoc links


# Description


This PR adds a new evaluator path with callbacks to a mutable trait

object implementing a Debugger trait. The trait object can do anything,

e.g., profiling, code coverage, step debugging. Currently,

entering/leaving a block and a pipeline element is marked with

callbacks, but more callbacks can be added as necessary. Not all

callbacks need to be used by all debuggers; unused ones are simply empty

calls. A simple profiler is implemented as a proof of concept.

Debugging support is implemented by making the `eval_xxx()` functions
generic over whether we're debugging or not. This has zero
computational overhead, but makes the binary slightly larger (see
benchmarks below). `eval_xxx()` variants called from commands (like
`eval_block_with_early_return()` in `each`) are chosen with dynamic
dispatch for two reasons: to avoid growing the binary by duplicating
the code of many commands, and because a generic parameter there isn't
possible, as it would make `Command` trait objects object-unsafe.
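The static-dispatch idea can be sketched as below. This is a simplified illustration under stated assumptions: the trait shapes, the `Profiler` counter, and the `bool`-taking `get_eval_block` helper are hypothetical and much smaller than the real API; only the names `WithDebug`, `WithoutDebug`, `eval_block`, and `get_eval_block` are borrowed from this description.

```rust
// Callbacks a debugger may implement; unused ones stay empty.
trait Debugger {
    fn enter_block(&mut self) {}
    fn leave_block(&mut self) {}
}

/// Counts block entries as a stand-in for a real profiler.
#[derive(Default)]
struct Profiler {
    blocks_entered: usize,
}

impl Debugger for Profiler {
    fn enter_block(&mut self) {
        self.blocks_entered += 1;
    }
}

/// Compile-time switch: each implementor decides whether callbacks fire.
trait DebugContext {
    fn enter_block(debugger: &mut dyn Debugger);
    fn leave_block(debugger: &mut dyn Debugger);
}

struct WithDebug;
struct WithoutDebug;

impl DebugContext for WithDebug {
    fn enter_block(debugger: &mut dyn Debugger) {
        debugger.enter_block()
    }
    fn leave_block(debugger: &mut dyn Debugger) {
        debugger.leave_block()
    }
}

impl DebugContext for WithoutDebug {
    // Empty bodies: after monomorphization these calls can be optimized away.
    fn enter_block(_: &mut dyn Debugger) {}
    fn leave_block(_: &mut dyn Debugger) {}
}

// Toy "evaluation": sums the values between the two callbacks.
fn eval_block<D: DebugContext>(debugger: &mut dyn Debugger, values: &[i64]) -> i64 {
    D::enter_block(debugger);
    let sum: i64 = values.iter().sum();
    D::leave_block(debugger);
    sum
}

type EvalBlockFn = fn(&mut dyn Debugger, &[i64]) -> i64;

/// Runtime selection, for call sites that cannot be generic themselves.
fn get_eval_block(debugging: bool) -> EvalBlockFn {
    if debugging {
        eval_block::<WithDebug>
    } else {
        eval_block::<WithoutDebug>
    }
}

fn main() {
    let mut profiler = Profiler::default();
    let a = eval_block::<WithoutDebug>(&mut profiler, &[1, 2, 3]);
    let eval = get_eval_block(true);
    let b = eval(&mut profiler, &[1, 2, 3]);
    println!("{a} {b} blocks_entered={}", profiler.blocks_entered);
}
```

The function-pointer helper trades one indirect call for not having to duplicate every command's code per debug mode, which matches the trade-off described above.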

In the future, I hope it will be possible to allow plugin callbacks such

that users would be able to implement their profiler plugins instead of

having to recompile Nushell.

[DAP](https://microsoft.github.io/debug-adapter-protocol/) would also be

interesting to explore.

Try `help debug profile`.

## Screenshots

Basic output:

To profile with more granularity, increase the profiler depth (you'll

see that repeated `is-windows` calls take a large chunk of total time,

making it a good candidate for optimizing):

## Benchmarks

### Binary size

Binary size increase vs. main: **+40360 bytes**. _(Both built with

`--release --features=extra,dataframe`.)_

### Time

```nushell
# bench_debug.nu

use std bench

let test = {
    1..100
    | each {
        ls | each {|row| $row.name | str length }
    }
    | flatten
    | math avg
}

print 'debug:'
let res2 = bench { debug profile $test } --pretty
print $res2
```

```nushell
# bench_nodebug.nu

use std bench

let test = {
    1..100
    | each {
        ls | each {|row| $row.name | str length }
    }
    | flatten
    | math avg
}

print 'no debug:'
let res1 = bench { do $test } --pretty
print $res1
```

`cargo run --release -- bench_debug.nu` is consistently 1--2 ms slower

than `cargo run --release -- bench_nodebug.nu` due to the collection

overhead + gathering the report. This is expected. When gathering more

stuff, the overhead is obviously higher.

`cargo run --release -- bench_nodebug.nu` vs. `nu bench_nodebug.nu` I

didn't measure any difference. Both benchmarks report times between 97

and 103 ms randomly, without one being consistently higher than the

other. This suggests that at least in this particular case, when not

running any debugger, there is no runtime overhead.

## API changes

This PR adds a generic parameter to all `eval_xxx` functions that forces

you to specify whether you use the debugger. You can resolve it in two

ways:

* Use a provided helper that will figure it out for you. If you wanted

to use `eval_block(&engine_state, ...)`, call `let eval_block =

get_eval_block(&engine_state); eval_block(&engine_state, ...)`

* If you know you're in an evaluation path that doesn't need debugger

support, call `eval_block::<WithoutDebug>(&engine_state, ...)` (this is

the case of hooks, for example).

I tried to add more explanation in the docstring of `debugger_trait.rs`.

## TODO

- [x] Better profiler output to reduce spam of iterative commands like

`each`

- [x] Resolve `TODO: DEBUG` comments

- [x] Resolve unwraps

- [x] Add doc comments

- [x] Add usage and extra usage for `debug profile`, explaining all

columns

# User-Facing Changes


Hopefully none.

# Tests + Formatting


# After Submitting


# Description

Fixes: #11913

When running an external command, nushell shouldn't consume stderr
messages if the user wants to redirect stderr.

# User-Facing Changes

NaN

# Tests + Formatting

Done

# After Submitting

NaN

# Description

Following #11851, this PR adds one final conversion function for

`Value`. `Value::coerce_str` takes a `&Value` and converts it to a

`Cow<str>`, creating an owned `String` for types that needed converting.

Otherwise, it returns a borrowed `str` for `String` and `Binary`

`Value`s which avoids a clone/allocation. Where possible, `coerce_str`

and `coerce_into_string` should be used instead of `coerce_string`,

since `coerce_string` always allocates a new `String`.
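The borrow-or-allocate behavior can be illustrated with a cut-down `Value`. This sketch is hypothetical: the real enum has many more variants and carries spans, the error type here is a plain `String`, and rejecting non-UTF-8 binary data is an assumption made for the example.

```rust
use std::borrow::Cow;

// Hypothetical cut-down `Value`, just enough to show both `Cow` cases.
enum Value {
    Int(i64),
    String(String),
    Binary(Vec<u8>),
}

impl Value {
    /// Borrows when the value already holds text, allocates otherwise.
    fn coerce_str(&self) -> Result<Cow<'_, str>, String> {
        match self {
            Value::String(s) => Ok(Cow::Borrowed(s.as_str())),
            // Assumption for this sketch: non-UTF-8 bytes are an error.
            Value::Binary(bytes) => std::str::from_utf8(bytes)
                .map(Cow::Borrowed)
                .map_err(|e| e.to_string()),
            // Needs a conversion, so a new `String` is allocated.
            Value::Int(i) => Ok(Cow::Owned(i.to_string())),
        }
    }
}

fn main() {
    let text = Value::String("abc".into());
    let number = Value::Int(42);
    let bytes = Value::Binary(b"hi".to_vec());

    for value in [&text, &number, &bytes] {
        match value.coerce_str() {
            Ok(Cow::Borrowed(s)) => println!("borrowed: {s}"),
            Ok(Cow::Owned(s)) => println!("owned: {s}"),
            Err(e) => println!("error: {e}"),
        }
    }
}
```

Callers that only need to read the text can use the `Cow` through `Deref` without caring which case they got.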

# Description

This PR renames the conversion functions on `Value` to be more consistent.

It follows the Rust [API guidelines](https://rust-lang.github.io/api-guidelines/naming.html#ad-hoc-conversions-follow-as_-to_-into_-conventions-c-conv) for ad-hoc conversions.

The conversion functions on `Value` now come in a few forms:

- `coerce_{type}` takes a `&Value` and attempts to convert the value to

`type` (e.g., `i64` are converted to `f64`). This is the old behavior of

some of the `as_{type}` functions -- these functions have simply been

renamed to better reflect what they do.

- The new `as_{type}` functions take a `&Value` and return an `Ok`
result only if the value is of `type` (no conversion is attempted). The
returned value will be borrowed if `type` is non-`Copy`, otherwise an
owned value is returned.

- `into_{type}` exists for non-`Copy` types, but otherwise does not
attempt conversion, just like `as_{type}`. It takes an owned `Value` and
always returns an owned result.

- `coerce_into_{type}` has the same relationship with `coerce_{type}` as

`into_{type}` does with `as_{type}`.

- `to_{kind}_string`: conversion to different string formats (debug,

abbreviated, etc.). Only two of the old string conversion functions were

removed, the rest have been renamed only.

- `to_{type}`: other conversion functions. Currently, only `to_path`

exists. (And `to_string` through `Display`.)

This table summarizes the above:

| Form                 | Cost      | Input Ownership | Output Ownership | Converts `Value` case/`type` |
| -------------------- | --------- | --------------- | ---------------- | ---------------------------- |
| `as_{type}`          | Cheap     | Borrowed        | Borrowed/Owned   | No                           |
| `into_{type}`        | Cheap     | Owned           | Owned            | No                           |
| `coerce_{type}`      | Cheap     | Borrowed        | Borrowed/Owned   | Yes                          |
| `coerce_into_{type}` | Cheap     | Owned           | Owned            | Yes                          |
| `to_{kind}_string`   | Expensive | Borrowed        | Owned            | Yes                          |
| `to_{type}`          | Expensive | Borrowed        | Owned            | Yes                          |
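A small sketch of how the `as_`, `coerce_`, and `into_` forms differ in practice. The three-variant `Value` and the `String` error type are hypothetical simplifications for illustration; the method names follow the naming scheme above but are not claimed to match the real signatures.

```rust
// Hypothetical three-variant `Value`, just enough to contrast the prefixes.
#[derive(Debug, PartialEq)]
enum Value {
    Int(i64),
    Float(f64),
    String(String),
}

impl Value {
    /// `as_`: borrowed input, no conversion; a `Copy` type comes back owned.
    fn as_float(&self) -> Result<f64, String> {
        match self {
            Value::Float(f) => Ok(*f),
            other => Err(format!("expected float, got {other:?}")),
        }
    }

    /// `coerce_`: borrowed input, converts compatible variants.
    fn coerce_float(&self) -> Result<f64, String> {
        match self {
            Value::Float(f) => Ok(*f),
            Value::Int(i) => Ok(*i as f64),
            other => Err(format!("cannot coerce {other:?} to float")),
        }
    }

    /// `as_` on a non-`Copy` type returns a borrow.
    fn as_str(&self) -> Result<&str, String> {
        match self {
            Value::String(s) => Ok(s.as_str()),
            other => Err(format!("expected string, got {other:?}")),
        }
    }

    /// `into_`: consumes the value, so the `String` is moved out, not cloned.
    fn into_string(self) -> Result<String, String> {
        match self {
            Value::String(s) => Ok(s),
            other => Err(format!("expected string, got {other:?}")),
        }
    }
}

fn main() {
    let int = Value::Int(1);
    println!("as_float:     {:?}", int.as_float());
    println!("coerce_float: {:?}", int.coerce_float());

    let text = Value::String("x".into());
    println!("as_str:       {:?}", text.as_str());
    println!("into_string:  {:?}", text.into_string());
}
```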

# User-Facing Changes

Breaking API change for `Value` in `nu-protocol` which is exposed as

part of the plugin API.

# Description

We have seen some test cases that need to output messages to both
stdout and stderr, especially in redirection scenarios.

This PR introduces a new `echo_env_mixed` testbin, so we can have fewer
tests that only run on Windows.

# User-Facing Changes

NaN

# Tests + Formatting

NaN

# After Submitting

NaN

I moved hook to *nu_cmd_base* instead of *nu_cli* because it will enable
other developers to continue to use hook even if they decide to write
their own CLI or NOT depend on nu-cli.

They will still have the hook functionality because they can include
nu-cmd-base.

# Description

This PR names the hooks as they're executing so that you can see them
with debug statements. So, at the beginning of `eval_hook()` you could
put a `dbg!` or `eprintln!` to see what hook was executing. It also shows
up in `view files`.

### Before - notice item 14 and 25

### After - The hooks are now named (14 & 25)

Curiously, on my Mac, the `display_output` hook fires 3 times before
anything else. Also curious is that the value of `display_output` is
not what I have in my config but what is in the `default_config`. So
there may be a bug or some shenanigans going on somewhere with hooks.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

This PR tries to document some of the more obscure testbin parameters

and what they do. I still don't grok all of them.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

Make sure that our different crates that contain commands can be

compiled in parallel.

This can under certain circumstances accelerate the compilation with

sufficient multithreading available.

## Details

- Move `help` commands from `nu-cmd-lang` back to `nu-command`

- This also makes sense as the commands are implemented in an

ANSI-terminal specific way

- Make `nu-cmd-lang` only a dev dependency for `nu-command`

- Change context creation helpers for `nu-cmd-extra` and

`nu-cmd-dataframe` to have a consistent api used in

`src/main.rs`:`get_engine_state()`

- `nu-command` is now independent of `nu-cmd-extra` and `nu-cmd-dataframe`,
which are now direct dependencies of `nu` (change to internal
features)

- Fix tests that previously used `nu-command::create_default_context()`

with replacement functions

## From scratch compilation times:

just debug (dev) build and default features

```

cargo clean --profile dev && cargo build --timings

```

### before

### after

# User-Facing Changes

None direct, only change to compilation on multithreaded jobs expected.

# Tests + Formatting

Tests that previously chose to use `nu-command` for their scope will

still use `nu-cmd-lang` + `nu-command` (command list in the granularity

at the time)

Related to:

- #8311

- #8353

# Description

with the new `$nu.startup-time` from #8353 and as mentioned in #8311,

we are now able to fully move the `nushell` banner from the `rust`

source base to the standard library.

this PR

- removes all the `rust` source code for the banner

- rewrites a perfect clone of the banner to `std.nu`, called `std

banner`

- calls `std banner` from `default_config.nu`

# User-Facing Changes

see the demo: https://asciinema.org/a/566521

- no config will show the banner (e.g. `cargo run --release --

--no-config-file`)

- a custom config without the `if $env.config.show_banner` block and no

call to `std banner` would never show the banner

- a custom config with the block and `config.show_banner = true` will

show the banner

- a custom config with the block and `config.show_banner = false` will

NOT show the banner

# Tests + Formatting

a new test line has been added to `tests.nu` to check the length of the

`std banner` output.

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

```

$nothing

```

---------

Co-authored-by: Darren Schroeder <343840+fdncred@users.noreply.github.com>

# Description

Part of the larger cratification effort.

Moves all `reedline` or shell line editor specific commands to `nu-cli`.

## From `nu-cmd-lang`:

- `commandline`

- This shouldn't have moved there. Doesn't directly depend on reedline

but assumes parts in the engine state that are specific to the use of

reedline or a REPL

## From `nu-command`:

- `keybindings` and subcommands

- `keybindings default`

- `keybindings list`

- `keybindings listen`

- very `reedline` specific

- `history`

- needs `reedline`

- `history session`

## internal use

Instead of having a separate `create_default_context()` that calls

`nu-command`'s `create_default_context()`, I added a `add_cli_context()`

that updates an `EngineState`

# User-Facing Changes

None

## Build time comparison

`cargo build --timings` from a `cargo clean --profile dev`

### total

main: 64 secs

this: 59 secs

### `nu-command` build time

branch | total | codegen | fraction
---|---|---|---
main | 14.0s | 6.2s | (44%)
this | 12.5s | 5.5s | (44%)

`nu-cli` depends on `nu-command` at the moment.

Thus it is built during the code-gen phase of `nu-command` (on 16

virtual cores)

# Tests + Formatting

I removed the `test_example()` facilities for now as we had not run any

of the commands in an `Example` test and importing the right context for

those tests seemed more of a hassle than the duplicated

`test_examples()` implementations in `nu-cmd-lang` and `nu-command`

# Description

This is a pretty heavy refactor of the parser to support multiple parser

errors. It has a few issues we should address before landing:

- [x] In some cases, error quality has gotten worse `1 / "bob"` for

example

- [x] if/else isn't currently parsing correctly

- probably others

# User-Facing Changes

This may have error quality degradation as we adjust to the new error

reporting mechanism.

# Tests + Formatting

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo

fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A

clippy::needless_collect` to check that you're using the standard code

style

- `cargo test --workspace` to check that all tests pass

- `cargo run -- crates/nu-utils/standard_library/tests.nu` to run the

tests for the standard library

> **Note**
> from `nushell` you can also use the `toolkit` as follows
> ```bash
> use toolkit.nu # or use an `env_change` hook to activate it automatically
> toolkit check pr
> ```

# After Submitting

If your PR had any user-facing changes, update [the

documentation](https://github.com/nushell/nushell.github.io) after the

PR is merged, if necessary. This will help us keep the docs up to date.

# Description

Add a `command_not_found` function to `$env.config.hooks`. If this

function outputs a string, then it's included in the `help`.

An example hook on *Arch Linux*, to find packages that contain the

binary, looks like:

```nushell

let-env config = {
    # ...
    hooks: {
        command_not_found: {
            |cmd_name| (
                try {
                    let pkgs = (pkgfile --binaries --verbose $cmd_name)
                    (
                        $"(ansi $env.config.color_config.shape_external)($cmd_name)(ansi reset) " +
                        $"may be found in the following packages:\n($pkgs)"
                    )
                } catch {
                    null
                }
            )
        }
        # ...
    }
}
```

# User-Facing Changes

- Add a `command_not_found` function to `$env.config.hooks`.

# Tests + Formatting


# After Submitting


# Description

This fixes the `commandline` command when it's run with no arguments, so

it outputs the command being run. New line characters are included.

This allows for:

- [A way to get current command inside pre_execution hook · Issue #6264

· nushell/nushell](https://github.com/nushell/nushell/issues/6264)

- The possibility for *Atuin* to work with *Nushell*. *Atuin* hooks need
to know the current repl input before it is run.

# User-Facing Changes

# Tests + Formatting


# After Submitting


# Description

`bytes starts-with` converts the input into a `Value` before running
`.starts_with` to check whether the binary matches. This has two side
effects. First, it makes the code simpler: it only deals in whole values,
which avoids a lot of the input pipeline handling and value transforming
it would otherwise have to do, _especially_ in the presence of a cell
path to drill into. Second, it buffers the entire input into memory,
which can take up a lot of memory when dealing with large files,
especially if you only want to check the first few bytes (like for a
magic number).

This PR adds a special branch on `PipelineData::ExternalStream` with a
streaming version of `starts_with`.
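The idea behind a streaming prefix check can be sketched as follows. This is a generic illustration and not the code from this PR: `stream_starts_with` is a hypothetical helper, and the stream is modeled as a plain iterator of byte chunks rather than an `ExternalStream`.

```rust
use std::cell::Cell;

/// Checks whether the concatenation of `chunks` begins with `pattern`,
/// pulling only as many chunks as are needed to decide.
fn stream_starts_with<I>(chunks: I, pattern: &[u8]) -> bool
where
    I: IntoIterator<Item = Vec<u8>>,
{
    let mut chunks = chunks.into_iter();
    let mut remaining = pattern;

    while !remaining.is_empty() {
        let chunk = match chunks.next() {
            Some(chunk) => chunk,
            // The stream ended before the whole pattern was seen.
            None => return false,
        };
        // Compare as much of the pattern as this chunk can cover.
        let n = remaining.len().min(chunk.len());
        if chunk[..n] != remaining[..n] {
            return false;
        }
        remaining = &remaining[n..];
    }
    true
}

fn main() {
    // Count how many chunks are actually pulled from the stream.
    let pulled = Cell::new(0);
    let chunks = vec![b"\x7fE".to_vec(), b"LF\x02".to_vec(), vec![0u8; 8192]];
    let stream = chunks.into_iter().inspect(|_| pulled.set(pulled.get() + 1));

    let is_elf = stream_starts_with(stream, b"\x7fELF");
    println!("is_elf={is_elf}, chunks pulled={}", pulled.get());
}
```

Note that the pattern may straddle a chunk boundary, which is why the remaining part of the pattern is carried from one chunk to the next instead of checking each chunk independently.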

# User-Facing Changes

Opening large files and running `bytes starts-with` on them will no
longer take a long time.

# Tests + Formatting


# Drawbacks

Streaming checking is more complicated, and there may be bugs. I tested it with multiple chunks of string data and binary data, though, and it seems to work alright up to and beyond 8k bytes.

The existing `operate` method still exists because the way it handles

cell paths and values is complicated. This causes some "code

duplication", or at least some intent duplication, between the value

code and the streaming code. This might be worthwhile considering the

performance gains (approaching infinity on larger inputs).

Another thing to consider is that my `ExternalStream` branch considers string data as valid input. The `operate` branch only parses `Binary` values, so it would fail on such input. `open` is kind of unpredictable on whether it returns string data or binary data, even when passing `--raw`. I think this can be a problem, but not one I'm trying to tackle in this PR, so it's worth considering.

Fixes #7615

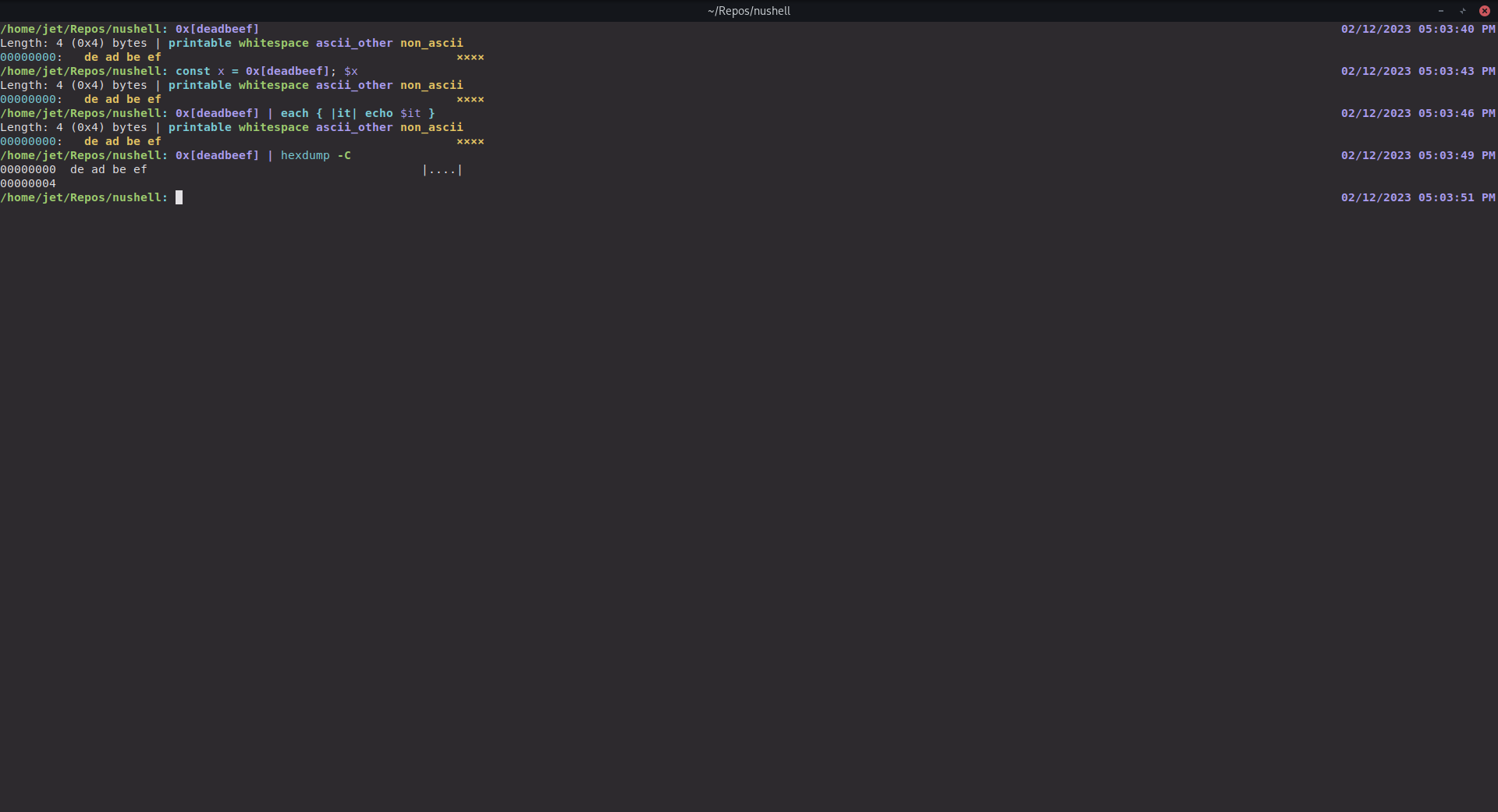

# Description

When calling external commands, we create a table from the pipeline data

to handle external commands expecting paginated input. When a binary

value is made into a table, we convert the vector of bytes representing

the binary bytes into a pretty formatted string. This results in the

pretty formatted string being sent to external commands instead of the

actual binary bytes. By checking whether the stdout of the call is being

redirected, we can decide whether to send the raw binary bytes or the

pretty formatted output when creating a table command.
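
The decision described above can be sketched roughly as below. This is an illustration under stated assumptions, not the actual implementation: `bytes_for_output` and `pretty_hex` are hypothetical names, the hex dump is a simplified stand-in for Nushell's pretty binary view, and the sketch assumes that "redirected" means the output is headed to an external command.

```rust
/// Hypothetical stand-in for the pretty binary formatter:
/// renders bytes as space-separated lowercase hex pairs.
fn pretty_hex(data: &[u8]) -> String {
    data.iter()
        .map(|b| format!("{b:02x}"))
        .collect::<Vec<_>>()
        .join(" ")
}

/// Hypothetical helper: picks what to emit for a binary value.
/// Assumption: when stdout is redirected, the consumer is an external
/// command and should receive the raw bytes untouched.
fn bytes_for_output(data: &[u8], stdout_redirected: bool) -> Vec<u8> {
    if stdout_redirected {
        data.to_vec()
    } else {
        pretty_hex(data).into_bytes()
    }
}
```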

# User-Facing Changes

When passing binary values to external commands, the external command

will receive the actual bytes instead of the pretty printed string. Use

cases that don't involve piping a binary value into an external command

are unchanged.

# Description

Lint: `clippy::uninlined_format_args`

More readable in most situations.

(May be slightly confusing for modifier format strings

https://doc.rust-lang.org/std/fmt/index.html#formatting-parameters)
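
As a small illustration of what the lint rewrites (the `describe` function and its arguments are made up for this example), inlined arguments put the variable name directly inside the braces, and format modifiers follow the name after a colon:

```rust
/// Illustrative only: returns the same message built three ways.
fn describe(name: &str, count: usize, ratio: f64) -> (String, String, String) {
    // Before: positional arguments, which the lint flags.
    let before = format!("{} has {} items", name, count);
    // After: arguments inlined into the format string.
    let after = format!("{name} has {count} items");
    // With modifiers: right-align in 8 columns, 2 decimal places.
    // This is the form that may read as slightly confusing.
    let padded = format!("{ratio:>8.2}");
    (before, after, padded)
}
```

Only plain identifiers can be inlined this way; expressions such as `foo.bar` or `foo()` still have to be passed as separate arguments.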

Alternative to #7865

# User-Facing Changes

None intended

# Tests + Formatting

(Ran `cargo +stable clippy --fix --workspace -- -A clippy::all -D

clippy::uninlined_format_args` to achieve this. Depends on Rust `1.67`)

# Description

Inspired by #7592

For brevity use `Value::test_{string,int,float,bool}`

Includes fixes to commands that were abusing `Span::test_data` in their

implementation. Now the call span is used where possible or the explicit

`Span::unknown` is used.

## Command fixes

- Fix abuse of `Span::test_data()` in `query_xml`

- Fix abuse of `Span::test_data()` in `term size`

- Fix abuse of `Span::test_data()` in `seq date`

- Fix two abuses of `Span::test_data` in `nu-cli`

- Change `Span::test_data` to `Span::unknown` in `keybindings listen`

- Add proper call span to `registry query`

- Fix span use in `nu_plugin_query`

- Fix span assignment in `select`

- Use `Span::unknown` instead of `test_data` in more places

## Other

- Use `Value::test_int`/`test_float()` consistently

- More `test_string` and `test_bool`

- Fix unused imports

# User-Facing Changes

Some commands may now provide more helpful spans for downstream use in

errors

# Description

While perusing Value.rs, I noticed the `Value::int()`, `Value::float()`, `Value::boolean()` and `Value::string()` constructors, which seem designed to make it easier to construct various Values, but which aren't used often at all in the codebase. So, using a few find-and-replace regexes, I increased their usage. This reduces overall LOC because structures like this:

```
Value::Int {
    val: a,
    span: head
}
```

are changed into

```
Value::int(a, head)
```

and are respected as such by the project's formatter.

There are few readability concerns because the second argument to all of these is `span`, and it's almost always extremely obvious which is the span at every callsite.

# User-Facing Changes

None.

# Description

This fix changes pipelines to allow them to actually be empty. Mapping

over empty pipelines gives empty pipelines. Empty pipelines immediately

return `None` when iterated.
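
The behavior described above can be sketched with a simplified stand-in type. `Pipeline` and `map_items` below are hypothetical and much smaller than Nushell's real `PipelineData`; the sketch only shows the two properties named here.

```rust
/// Simplified, hypothetical stand-in for a pipeline that may be empty.
enum Pipeline<T> {
    Empty,
    Stream(Box<dyn Iterator<Item = T>>),
}

impl<T: 'static> Pipeline<T> {
    /// Mapping over an empty pipeline gives an empty pipeline;
    /// mapping over a stream wraps it lazily.
    fn map_items<U, F>(self, f: F) -> Pipeline<U>
    where
        U: 'static,
        F: FnMut(T) -> U + 'static,
    {
        match self {
            Pipeline::Empty => Pipeline::Empty,
            Pipeline::Stream(it) => Pipeline::Stream(Box::new(it.map(f))),
        }
    }
}

impl<T> Iterator for Pipeline<T> {
    type Item = T;

    fn next(&mut self) -> Option<T> {
        match self {
            // An empty pipeline immediately yields `None`.
            Pipeline::Empty => None,
            Pipeline::Stream(it) => it.next(),
        }
    }
}
```

With an explicit `Empty` variant there is no need to fabricate a placeholder value, and therefore no placeholder span, just to represent "nothing".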

This removes some of the places where `Span::new(0, 0)` was coming from, though there are other cases where we still use it.

# User-Facing Changes

None

* New "display_output" hook.

* Fix unrelated "clippy" complaint in nu-tables crate.

* Fix code-formatting and style issues in "display_output" hook

* Enhance eval_hook to return PipelineData.

This allows a hook (including display_output) to return a value.

Co-authored-by: JT <547158+jntrnr@users.noreply.github.com>

* Add test for passing binary data through externals

This change adds an ignored test to confirm that binary data is passed

correctly between externals to be enabled in a later commit along with

the fix.

To assist in platform agnostic testing of binary data a couple of

additional testbins were added to allow testing on `Value::Binary` inside

`ExternalStream`.

* Support binary data to stdin of run-external

Prior to this change, any pipeline producing binary data (not detected as string) and then fed into an external would be ignored, because run-external only supported `Value::String` on stdin.

This change adds binary stdin support for externals allowing something

like this for example:

〉^cat /dev/urandom | ^head -c 1MiB | ^pv -b | ignore

1.00MiB

This would previously output `0.00 B [0.00 B/s]` due to the data not

being pushed to stdin at each stage.