Fixes#10696

# Description

As reported, you could mess up the ring of remembered directories in

`std dirs` (a.k.a the `shells` commands) with a sequence like this:

```

~/test> mkdir b c

~/test> pushd b

~/test/b> cd ../c

~/test/c> goto 0

~/test> goto 1

## expect to end up in ~/test/c

## observe you're in ~/test/b

~/test/b>

```

Problem was `dirs goto` was not updating the remembered directories

before leaving the current slot for some other. This matters if the user

did a manual `cd` (which cannot update the remembered directories ring)

# User-Facing Changes

None! it just works ™️

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

<!-- If your PR had any user-facing changes, update [the

documentation](https://github.com/nushell/nushell.github.io) after the

PR is merged, if necessary. This will help us keep the docs up to date.

-->

<!--

if this PR closes one or more issues, you can automatically link the PR

with

them by using one of the [*linking

keywords*](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue#linking-a-pull-request-to-an-issue-using-a-keyword),

e.g.

- this PR should close #xxxx

- fixes #xxxx

you can also mention related issues, PRs or discussions!

-->

# Description

Test runner now uses annotations instead of magic function names to pick

up code to run. Additionally skipping tests is now done on annotation

level so skipping and unskipping a test no longer requires changes to

the test code

In order for a function to be picked up by the test runner it needs to

meet following criteria:

* Needs to be private (all exported functions are ignored)

* Needs to contain one of valid annotations (and only the annotation)

directly above the definition, all other comments are ignored

Following are considered valid annotations:

* \# test

* \# test-skip

* \# before-all

* \# before-each

* \# after-each

* \# after-all

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This

helps us keep track of breaking changes. -->

# Tests + Formatting

<!--

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo

fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A

clippy::needless_collect -A clippy::result_large_err` to check that

you're using the standard code style

- `cargo test --workspace` to check that all tests pass

- `cargo run -- crates/nu-std/tests/run.nu` to run the tests for the

standard library

> **Note**

> from `nushell` you can also use the `toolkit` as follows

> ```bash

> use toolkit.nu # or use an `env_change` hook to activate it

automatically

> toolkit check pr

> ```

-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the

documentation](https://github.com/nushell/nushell.github.io) after the

PR is merged, if necessary. This will help us keep the docs up to date.

-->

# Description

When the directory stack contains only two directories, then std dirs

drop

used to misbehave and (essentially) drop the other directory, instead of

dropping the current directory.

This is fixed by always cd'ing for std dirs drop.

Before:

/tmp〉enter ..

/〉dexit

/〉

After:

/tmp〉enter ..

/〉dexit

/tmp〉

Additionally, I propose to explain the relevant environment variables a

bit

more thoroughly.

# User-Facing Changes

- Fix bug in dexit (std dirs drop) when two directories are remaining

# Tests + Formatting

Added a regression test. Made the existing test easier to understand.

# Description

Test runner now performs following actions in order to run tests:

* Module file is opened

* Public function with random name is added to the source code, this

function calls user-specified private function

* Modified module file is saved under random name in $nu.temp-path

* Modified module file is imported in subprocess, injected function is

called by the test runner

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This

helps us keep track of breaking changes. -->

* Test functions no longer need to be exported

* test functions no longer need to reside in separate test_ files

* setup and teardown renamed to before-each and after-each respectively

* before-all and after-all functions added that run before all tests in

given module. This matches the behavior of test runners used by other

languages such as JUnit/TestNG or Mocha

# Tests + Formatting

# After Submitting

---------

Co-authored-by: Kamil <skelly37@protonmail.com>

Co-authored-by: amtoine <stevan.antoine@gmail.com>

related to the namespace bullet point in

- https://github.com/nushell/nushell/issues/8450

# Description

this was the last module of the standard library with a broken

namespace, this PR takes care of this.

- `run-tests` has been moved to `std/mod.nu`

- `std/testing.nu` has been moved to `std/assert.nu`

- the namespace has been fixed

- `assert` is now called `main` and used in all the other `std assert`

commands

- for `std assert length` and `std assert str contains`, in order not to

shadow the built-in `length` and `str contains` commands, i've used

`alias "core ..." = ...` to (1) define `foo` in `assert.nu` and (2)

still use the builtin `foo` with `core foo` (replace `foo` by `length`

or `str contains`)

- tests have been fixed accordingly

# User-Facing Changes

one can not use

```

use std "assert equal"

```

anymore because `assert ...` is not exported from `std`.

`std assert` is now a *real* module.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- ⚫ `toolkit test`

- ⚫ `toolkit test stdlib`

# After Submitting

```

$nothing

```

# Notes for reviewers

to test this, i think the easiest is to

- run `toolkit test stdlib` and see all the tests pass

- run `cargo run -- -n` and try `use std assert` => are all the commands

available in scope?

# Description

<!--

Thank you for improving Nushell. Please, check our [contributing

guide](../CONTRIBUTING.md) and talk to the core team before making major

changes.

Description of your pull request goes here. **Provide examples and/or

screenshots** if your changes affect the user experience.

-->

Fixes https://github.com/nushell/nushell/issues/9229.

Supersedes #9234

The reported problem was that `shells` list of active shells (a.k.a `std

dirs show` would show an inaccurate active

working directory if user changed it via `cd` command.

The fix here is for the `std dirs` module to let `$env.PWD` mask the

active slot of `$env.DIRS_LIST`. The user is free to invoke CD (or write

to `$env.PWD`) and `std dirs show` will display that as the active

working directory.

When user changes the active slot (via `n`, `p`, `add` or `drop`) `std

dirs` remembers the then current PWD in the about-to-be-vacated active

slot in `$env.DIRS_LIST`, so it is there if you come back to that slot.

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This

helps us keep track of breaking changes. -->

None. It just works™️

# Tests + Formatting

<!--

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo

fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A

clippy::needless_collect -A clippy::result_large_err` to check that

you're using the standard code style

- `cargo test --workspace` to check that all tests pass

- `cargo run -- crates/nu-std/tests/run.nu` to run the tests for the

standard library

> **Note**

> from `nushell` you can also use the `toolkit` as follows

> ```bash

> [x] use toolkit.nu # or use an `env_change` hook to activate it

automatically

> toolkit check pr

> ```

-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the

documentation](https://github.com/nushell/nushell.github.io) after the

PR is merged, if necessary. This will help us keep the docs up to date.

-->

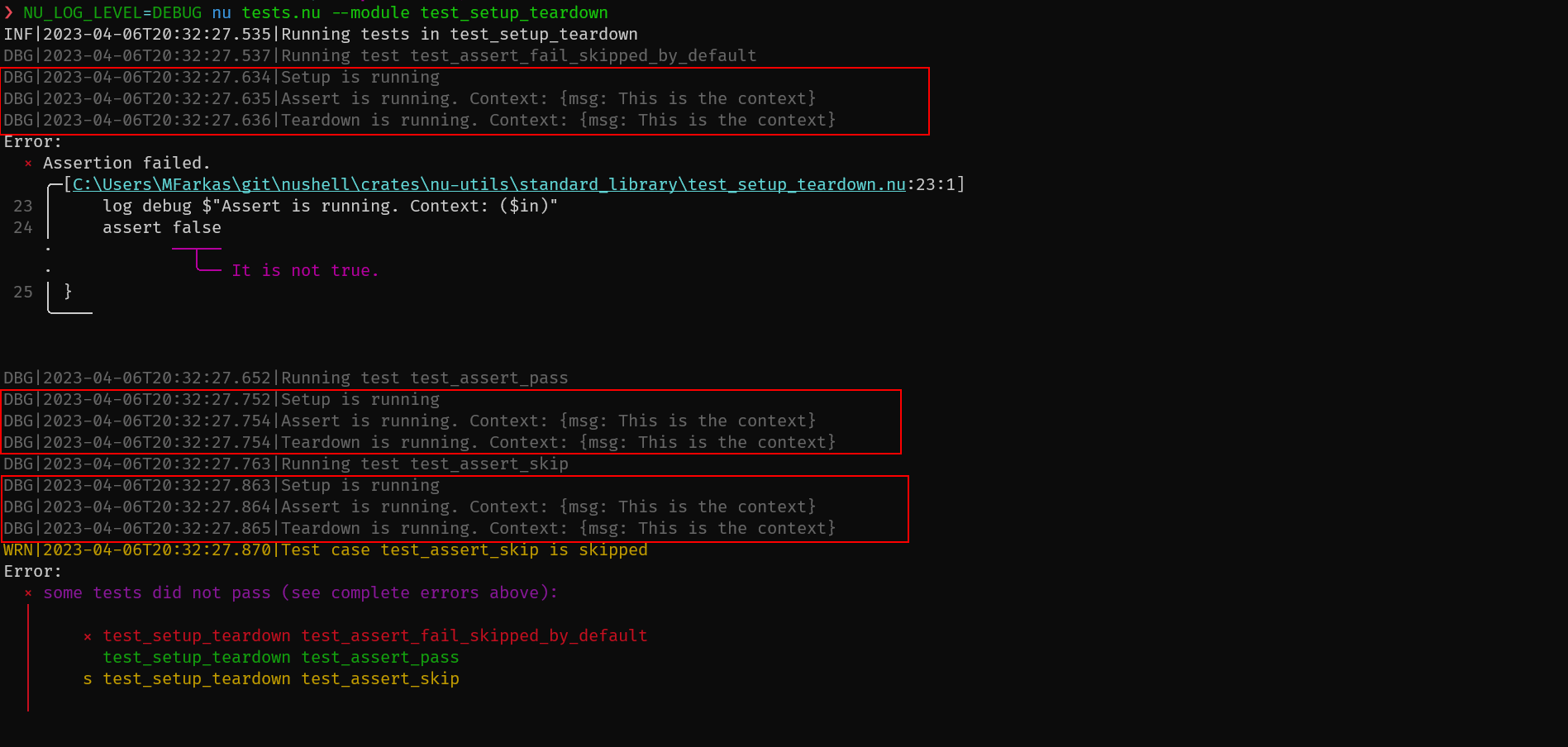

# Description

As in other testing frameworks, the `setup` runs before every test case,

and the `teardown` after that. A context can be created in `setup`,

which will be in the `$in` variable in the test cases, and in the

`teardown`. The `teardown` is called regardless of the test is passed,

skipped, or failed.

For example:

```nushell

use std.nu *

export def setup [] {

log debug "Setup is running"

{msg: "This is the context"}

}

export def teardown [] {

log debug $"Teardown is running. Context: ($in)"

}

export def test_assert_pass [] {

log debug $"Assert is running. Context: ($in)"

}

export def test_assert_skip [] {

log debug $"Assert is running. Context: ($in)"

assert skip

}

export def test_assert_fail_skipped_by_default [] {

log debug $"Assert is running. Context: ($in)"

assert false

}

```

# After Submitting

I'll update the documentation.

---------

Co-authored-by: Mate Farkas <Mate.Farkas@oneidentity.com>