diff --git a/examples/whisper.objc/README.md b/examples/whisper.objc/README.md

index 6833ebb7..bb55653d 100644

--- a/examples/whisper.objc/README.md

+++ b/examples/whisper.objc/README.md

@@ -28,6 +28,8 @@ This can significantly improve the performance of the transcription:

+## Core ML

+

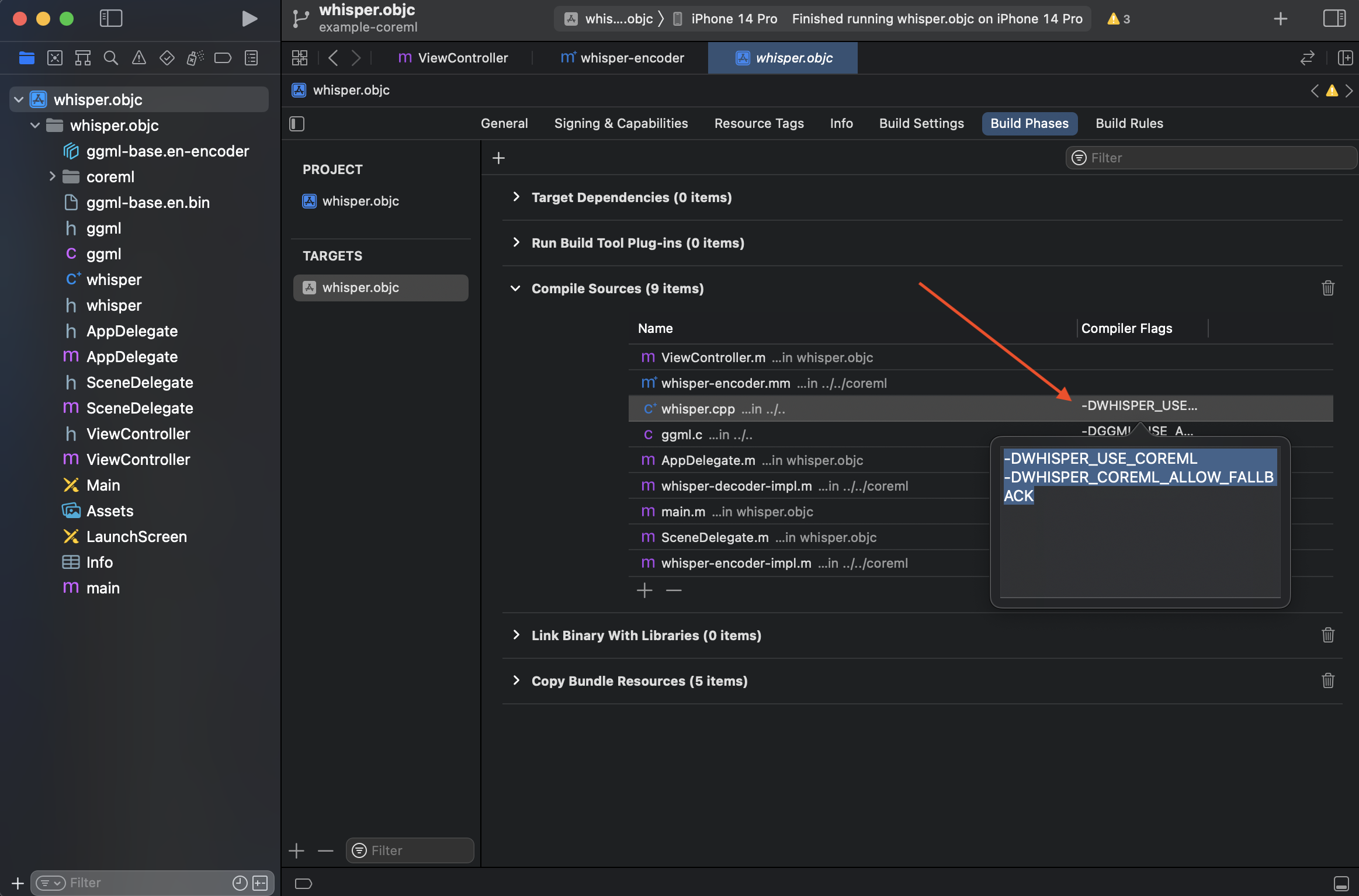

If you want to enable Core ML support, you can add the `-DWHISPER_USE_COREML -DWHISPER_COREML_ALLOW_FALLBACK` compiler flag for `whisper.cpp` in Build Phases:

@@ -35,3 +37,13 @@ If you want to enable Core ML support, you can add the `-DWHISPER_USE_COREML -DW

Then follow the [`Core ML support` section of readme](../../README.md#core-ml-support) for convert the model.

In this project, it also added `-O3 -DNDEBUG` to `Other C Flags`, but adding flags to app proj is not ideal in real world (applies to all C/C++ files), consider splitting xcodeproj in workspace in your own project.

+

+## Metal

+

+You can also enable Metal to make the inference run on the GPU of your device. This might or might not be more efficient

+compared to Core ML depending on the model and device that you use.

+

+To enable Metal, just add `-DGGML_USE_METAL` instead of the `-DWHISPER_USE_COREML` flag and you are ready.

+This will make both the Encoder and the Decoder run on the GPU.

+

+If you want to run the Encoder with Core ML and the Decoder with Metal then simply add both `-DWHISPER_USE_COREML -DGGML_USE_METAL` flags. That's all!

diff --git a/examples/whisper.objc/whisper.objc.xcodeproj/project.pbxproj b/examples/whisper.objc/whisper.objc.xcodeproj/project.pbxproj

index c0909a8b..f34b9c5b 100644

--- a/examples/whisper.objc/whisper.objc.xcodeproj/project.pbxproj

+++ b/examples/whisper.objc/whisper.objc.xcodeproj/project.pbxproj

@@ -8,6 +8,8 @@

/* Begin PBXBuildFile section */

1844471A2AB211A2007D6BFE /* ggml-alloc.c in Sources */ = {isa = PBXBuildFile; fileRef = 184447182AB211A2007D6BFE /* ggml-alloc.c */; };

+ 1844471C2AB21655007D6BFE /* ggml-metal.m in Sources */ = {isa = PBXBuildFile; fileRef = 1844471B2AB21655007D6BFE /* ggml-metal.m */; settings = {COMPILER_FLAGS = "-framework Foundation -framework Metal -framework MetalKit -fno-objc-arc"; }; };

+ 184447212AB21B43007D6BFE /* ggml-metal.metal in CopyFiles */ = {isa = PBXBuildFile; fileRef = 1844471D2AB2195F007D6BFE /* ggml-metal.metal */; };

18627C7B29052BDF00BD2A04 /* AppDelegate.m in Sources */ = {isa = PBXBuildFile; fileRef = 18627C7A29052BDF00BD2A04 /* AppDelegate.m */; };

18627C7E29052BDF00BD2A04 /* SceneDelegate.m in Sources */ = {isa = PBXBuildFile; fileRef = 18627C7D29052BDF00BD2A04 /* SceneDelegate.m */; };

18627C8129052BDF00BD2A04 /* ViewController.m in Sources */ = {isa = PBXBuildFile; fileRef = 18627C8029052BDF00BD2A04 /* ViewController.m */; };

@@ -15,7 +17,7 @@

18627C8629052BE000BD2A04 /* Assets.xcassets in Resources */ = {isa = PBXBuildFile; fileRef = 18627C8529052BE000BD2A04 /* Assets.xcassets */; };

18627C8929052BE000BD2A04 /* LaunchScreen.storyboard in Resources */ = {isa = PBXBuildFile; fileRef = 18627C8729052BE000BD2A04 /* LaunchScreen.storyboard */; };

18627C8C29052BE000BD2A04 /* main.m in Sources */ = {isa = PBXBuildFile; fileRef = 18627C8B29052BE000BD2A04 /* main.m */; };

- 18627C9429052C4900BD2A04 /* whisper.cpp in Sources */ = {isa = PBXBuildFile; fileRef = 18627C9329052C4900BD2A04 /* whisper.cpp */; settings = {COMPILER_FLAGS = "-DWHISPER_USE_COREML -DWHISPER_COREML_ALLOW_FALLBACK"; }; };

+ 18627C9429052C4900BD2A04 /* whisper.cpp in Sources */ = {isa = PBXBuildFile; fileRef = 18627C9329052C4900BD2A04 /* whisper.cpp */; settings = {COMPILER_FLAGS = "-DWHISPER_USE_COREML"; }; };

18627C9629052C5800BD2A04 /* ggml.c in Sources */ = {isa = PBXBuildFile; fileRef = 18627C9529052C5800BD2A04 /* ggml.c */; settings = {COMPILER_FLAGS = "-DGGML_USE_ACCELERATE"; }; };

18627C9B29052CFF00BD2A04 /* ggml-base.en.bin in Resources */ = {isa = PBXBuildFile; fileRef = 18627C9A29052CFF00BD2A04 /* ggml-base.en.bin */; };

7FE3424B2A0C3FA20015A058 /* whisper-encoder-impl.m in Sources */ = {isa = PBXBuildFile; fileRef = 7FE342452A0C3FA20015A058 /* whisper-encoder-impl.m */; };

@@ -24,9 +26,24 @@

7FE3424F2A0C418A0015A058 /* ggml-base.en-encoder.mlmodelc in Resources */ = {isa = PBXBuildFile; fileRef = 7FE3424E2A0C418A0015A058 /* ggml-base.en-encoder.mlmodelc */; };

/* End PBXBuildFile section */

+/* Begin PBXCopyFilesBuildPhase section */

+ 184447202AB21B25007D6BFE /* CopyFiles */ = {

+ isa = PBXCopyFilesBuildPhase;

+ buildActionMask = 2147483647;

+ dstPath = "";

+ dstSubfolderSpec = 7;

+ files = (

+ 184447212AB21B43007D6BFE /* ggml-metal.metal in CopyFiles */,

+ );

+ runOnlyForDeploymentPostprocessing = 0;

+ };

+/* End PBXCopyFilesBuildPhase section */

+

/* Begin PBXFileReference section */

184447182AB211A2007D6BFE /* ggml-alloc.c */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.c; name = "ggml-alloc.c"; path = "../../../ggml-alloc.c"; sourceTree = "<group>"; };

184447192AB211A2007D6BFE /* ggml-alloc.h */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.h; name = "ggml-alloc.h"; path = "../../../ggml-alloc.h"; sourceTree = "<group>"; };

+ 1844471B2AB21655007D6BFE /* ggml-metal.m */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.objc; name = "ggml-metal.m"; path = "../../../ggml-metal.m"; sourceTree = "<group>"; };

+ 1844471D2AB2195F007D6BFE /* ggml-metal.metal */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.metal; name = "ggml-metal.metal"; path = "../../../ggml-metal.metal"; sourceTree = "<group>"; };

18627C7629052BDF00BD2A04 /* whisper.objc.app */ = {isa = PBXFileReference; explicitFileType = wrapper.application; includeInIndex = 0; path = whisper.objc.app; sourceTree = BUILT_PRODUCTS_DIR; };

18627C7929052BDF00BD2A04 /* AppDelegate.h */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.h; path = AppDelegate.h; sourceTree = "<group>"; };

18627C7A29052BDF00BD2A04 /* AppDelegate.m */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.objc; path = AppDelegate.m; sourceTree = "<group>"; };

@@ -83,6 +100,8 @@

18627C7829052BDF00BD2A04 /* whisper.objc */ = {

isa = PBXGroup;

children = (

+ 1844471D2AB2195F007D6BFE /* ggml-metal.metal */,

+ 1844471B2AB21655007D6BFE /* ggml-metal.m */,

184447182AB211A2007D6BFE /* ggml-alloc.c */,

184447192AB211A2007D6BFE /* ggml-alloc.h */,

7FE3424E2A0C418A0015A058 /* ggml-base.en-encoder.mlmodelc */,

@@ -131,6 +150,7 @@

18627C7229052BDF00BD2A04 /* Sources */,

18627C7329052BDF00BD2A04 /* Frameworks */,

18627C7429052BDF00BD2A04 /* Resources */,

+ 184447202AB21B25007D6BFE /* CopyFiles */,

);

buildRules = (

);

@@ -202,6 +222,7 @@

1844471A2AB211A2007D6BFE /* ggml-alloc.c in Sources */,

18627C8C29052BE000BD2A04 /* main.m in Sources */,

18627C7E29052BDF00BD2A04 /* SceneDelegate.m in Sources */,

+ 1844471C2AB21655007D6BFE /* ggml-metal.m in Sources */,

7FE3424B2A0C3FA20015A058 /* whisper-encoder-impl.m in Sources */,

);

runOnlyForDeploymentPostprocessing = 0;

diff --git a/ggml-metal.m b/ggml-metal.m

index a810e5c5..54c7f21d 100644

--- a/ggml-metal.m

+++ b/ggml-metal.m

@@ -118,14 +118,17 @@ static NSString * const msl_library_source = @"see metal.metal";

struct ggml_metal_context * ggml_metal_init(int n_cb) {

metal_printf("%s: allocating\n", __func__);

- // Show all the Metal device instances in the system

- NSArray * devices = MTLCopyAllDevices();

id device;

NSString * s;

+

+#if TARGET_OS_OSX

+ // Show all the Metal device instances in the system

+ NSArray * devices = MTLCopyAllDevices();

for (device in devices) {

s = [device name];

metal_printf("%s: found device: %s\n", __func__, [s UTF8String]);

}

+#endif

// Pick and show default Metal device

device = MTLCreateSystemDefaultDevice();

@@ -142,12 +145,20 @@ struct ggml_metal_context * ggml_metal_init(int n_cb) {

ctx->d_queue = dispatch_queue_create("ggml-metal", DISPATCH_QUEUE_CONCURRENT);

-#if 0

- // compile from source string and show compile log

+#ifdef GGML_SWIFT

+ // load the default.metallib file

{

NSError * error = nil;

- ctx->library = [ctx->device newLibraryWithSource:msl_library_source options:nil error:&error];

+ NSBundle * bundle = [NSBundle bundleForClass:[GGMLMetalClass class]];

+ NSString * llamaBundlePath = [bundle pathForResource:@"llama_llama" ofType:@"bundle"];

+ NSBundle * llamaBundle = [NSBundle bundleWithPath:llamaBundlePath];

+ NSString * libPath = [llamaBundle pathForResource:@"default" ofType:@"metallib"];

+ NSURL * libURL = [NSURL fileURLWithPath:libPath];

+

+ // Load the metallib file into a Metal library

+ ctx->library = [ctx->device newLibraryWithURL:libURL error:&error];

+

if (error) {

metal_printf("%s: error: %s\n", __func__, [[error description] UTF8String]);

return NULL;

@@ -249,13 +260,15 @@ struct ggml_metal_context * ggml_metal_init(int n_cb) {

#undef GGML_METAL_ADD_KERNEL

}

- metal_printf("%s: recommendedMaxWorkingSetSize = %8.2f MB\n", __func__, ctx->device.recommendedMaxWorkingSetSize / 1024.0 / 1024.0);

metal_printf("%s: hasUnifiedMemory = %s\n", __func__, ctx->device.hasUnifiedMemory ? "true" : "false");

+#if TARGET_OS_OSX

+ metal_printf("%s: recommendedMaxWorkingSetSize = %8.2f MB\n", __func__, ctx->device.recommendedMaxWorkingSetSize / 1024.0 / 1024.0);

if (ctx->device.maxTransferRate != 0) {

metal_printf("%s: maxTransferRate = %8.2f MB/s\n", __func__, ctx->device.maxTransferRate / 1024.0 / 1024.0);

} else {

metal_printf("%s: maxTransferRate = built-in GPU\n", __func__);

}

+#endif

return ctx;

}

@@ -458,6 +471,7 @@ bool ggml_metal_add_buffer(

}

}

+#if TARGET_OS_OSX

metal_printf(", (%8.2f / %8.2f)",

ctx->device.currentAllocatedSize / 1024.0 / 1024.0,

ctx->device.recommendedMaxWorkingSetSize / 1024.0 / 1024.0);

@@ -467,6 +481,9 @@ bool ggml_metal_add_buffer(

} else {

metal_printf("\n");

}

+#else

+ metal_printf(", (%8.2f)\n", ctx->device.currentAllocatedSize / 1024.0 / 1024.0);

+#endif

}

return true;
