# Description

This PR is really two PRs in one, because they depend on each other.

PR1 - 3de58d4dc2 with update 7fcdb242d9
- This follows our mantra of having everything with defaults written in nushell rust code, so that if you run without a config, you get the same behavior as with the default config/env files. This sets `NU_LIB_DIRS` to `$nu.config-path/scripts` and sets `NU_PLUGIN_DIRS` to `$nu.config-path/plugins`.
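As a rough illustration of the defaults described above, here is a minimal sketch that derives both directories from a config directory. The helper name `default_dirs` and the example path are hypothetical and only stand in for whatever the Rust side actually does.

```rust
use std::path::{Path, PathBuf};

/// Hypothetical helper (not from this PR): derive the default script and
/// plugin directories from the directory that holds the config files.
fn default_dirs(config_dir: &Path) -> (PathBuf, PathBuf) {
    let lib_dir = config_dir.join("scripts");
    let plugin_dir = config_dir.join("plugins");
    (lib_dir, plugin_dir)
}

fn main() {
    // Example path only; the real location depends on the platform.
    let (lib_dir, plugin_dir) = default_dirs(Path::new("/home/user/.config/nushell"));
    println!("NU_LIB_DIRS    = [{}]", lib_dir.display());
    println!("NU_PLUGIN_DIRS = [{}]", plugin_dir.display());
}
```

The point of the sketch is only that both values are computed in code, so they exist even when no config or env file is loaded.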

PR2 - 0e8ac876fd
- The benchmarks have been broken for some time and we didn't notice it. This PR fixes that. It depends on PR1 because the benchmarks were throwing errors: PWD needed to be set to a valid folder and `$nu` did not exist, based on how the benchmark was set up.

I've tested the benchmarks and they run without error now, and I've also launched nushell as `nu -n --no-std-lib` and the env vars exist.

closes #11236

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This

helps us keep track of breaking changes. -->

# Tests + Formatting

<!--

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo

fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to

check that you're using the standard code style

- `cargo test --workspace` to check that all tests pass (on Windows make

sure to [enable developer

mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- `cargo run -- -c "use std testing; testing run-tests --path

crates/nu-std"` to run the tests for the standard library

> **Note**

> from `nushell` you can also use the `toolkit` as follows

> ```bash

> use toolkit.nu # or use an `env_change` hook to activate it

automatically

> toolkit check pr

> ```

-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the

documentation](https://github.com/nushell/nushell.github.io) after the

PR is merged, if necessary. This will help us keep the docs up to date.

-->

# Description

As part of the refactor to split spans off of Value, this moves to using helper functions to create values, and using `.span()` instead of matching span out of Value directly.

Hoping to get a few more helping hands to finish this, as there are a lot of commands to update :)
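The pattern can be sketched with toy types. The `Span` and `Value` definitions below, including the `internal_span` field name, are simplified stand-ins made up for illustration and are not the real `nu-protocol` types; only the idea of "construct through helpers, read the span through `.span()`" is taken from the description above.

```rust
// Toy stand-ins for illustration; not the real `nu-protocol` definitions.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Span {
    start: usize,
    end: usize,
}

#[derive(Debug, Clone, PartialEq)]
enum Value {
    Int { val: i64, internal_span: Span },
    String { val: String, internal_span: Span },
}

impl Value {
    /// Helper constructors hide how the span is stored.
    fn int(val: i64, span: Span) -> Value {
        Value::Int { val, internal_span: span }
    }

    fn string(val: impl Into<String>, span: Span) -> Value {
        Value::String { val: val.into(), internal_span: span }
    }

    /// Single accessor, so callers never match the span field directly.
    fn span(&self) -> Span {
        match self {
            Value::Int { internal_span, .. } | Value::String { internal_span, .. } => {
                *internal_span
            }
        }
    }
}

/// Call-site pattern: read the span first, then match on the payload only.
fn describe(value: &Value) -> String {
    let span = value.span();
    match value {
        Value::Int { val, .. } => format!("int {} at {}..{}", val, span.start, span.end),
        Value::String { val, .. } => format!("string {:?} at {}..{}", val, span.start, span.end),
    }
}

fn main() {
    let span = Span { start: 0, end: 4 };
    println!("{}", describe(&Value::int(42, span)));
    println!("{}", describe(&Value::string("text", span)));
}
```

Because call sites only go through the constructors and `.span()`, the way the span is stored can change later without touching every command.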

# User-Facing Changes


# Tests + Formatting


# After Submitting


---------

Co-authored-by: Darren Schroeder <343840+fdncred@users.noreply.github.com>

Co-authored-by: WindSoilder <windsoilder@outlook.com>

# Description

This PR creates a new `Record` type to reduce duplicate code and possibly bugs as well. (This is an edited version of #9648.)
- `Record` implements `FromIterator` and `IntoIterator` and so can be iterated over or collected into. For example, this helps with conversions to and from (hash)maps. (Also, no more `cols.iter().zip(vals)`!)
- `Record` has a `push(col, val)` function to help ensure that the number of columns is equal to the number of values. I caught a few potential bugs thanks to this (e.g. in the `ls` command).
- Finally, this PR also adds a `record!` macro that helps simplify record creation. It is used like so:

```rust
record! {
    "key1" => some_value,
    "key2" => Value::string("text", span),
    "key3" => Value::int(optional_int.unwrap_or(0), span),
    "key4" => Value::bool(config.setting, span),
}
```

Since macros hinder formatting, etc., the right-hand side values should be relatively short and sweet like the examples above.

Where possible, prefer `record!` or `.collect()` on an iterator instead of multiple `Record::push`s, since the first two automatically set the record capacity and do less work overall.
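To make the shape of the API concrete, here is a simplified model of such a type. It is a sketch under assumptions, not the `Record` from `nu-protocol`: the two-vector layout is only suggested by the `cols.iter().zip(vals)` remark above, and `i64` stands in for `Value` to keep the example self-contained.

```rust
use std::collections::HashMap;
use std::iter::FromIterator;

// Toy model for illustration; the real `Record` lives in `nu-protocol`.
#[derive(Debug, Default, Clone, PartialEq)]
struct Record {
    cols: Vec<String>,
    vals: Vec<i64>, // `i64` stands in for `Value` to keep the sketch short
}

impl Record {
    /// Pushing a column and its value together keeps both vectors the same length.
    fn push(&mut self, col: impl Into<String>, val: i64) {
        self.cols.push(col.into());
        self.vals.push(val);
    }

    fn len(&self) -> usize {
        self.cols.len()
    }
}

impl FromIterator<(String, i64)> for Record {
    fn from_iter<I: IntoIterator<Item = (String, i64)>>(iter: I) -> Self {
        // `unzip` fills both vectors in a single pass over the pairs.
        let (cols, vals): (Vec<String>, Vec<i64>) = iter.into_iter().unzip();
        Record { cols, vals }
    }
}

impl IntoIterator for Record {
    type Item = (String, i64);
    type IntoIter = std::iter::Zip<std::vec::IntoIter<String>, std::vec::IntoIter<i64>>;

    fn into_iter(self) -> Self::IntoIter {
        self.cols.into_iter().zip(self.vals)
    }
}

fn main() {
    // Building with `push` ...
    let mut pushed = Record::default();
    pushed.push("a", 1);
    pushed.push("b", 2);
    assert_eq!(pushed.len(), 2);

    // ... and building with `collect` give the same record.
    let collected: Record = vec![("a".to_string(), 1), ("b".to_string(), 2)]
        .into_iter()
        .collect();
    assert_eq!(pushed, collected);

    // Conversion to a hash map falls out of `IntoIterator`.
    let map: HashMap<String, i64> = collected.into_iter().collect();
    assert_eq!(map["b"], 2);
}
```

With both traits in place, conversions to and from maps become a plain `.collect()` on either side.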

# User-Facing Changes

Besides the changes in `nu-protocol`, the only other breaking changes are to `nu-table::{ExpandedTable::build_map, JustTable::kv_table}`.

A recent addition must have removed the `create_default_context` function from `nu_command`, which breaks the benchmarks 😮

## before this PR

```bash
cargo check --all-targets --workspace
```

gives a bunch of

```bash
error[E0425]: cannot find function `create_default_context` in crate `nu_command`
  --> benches/benchmarks.rs:93:48
   |
93 |     let mut engine_state = nu_command::create_default_context();
   |                                        ^^^^^^^^^^^^^^^^^^^^^^ not found in `nu_command`
   |
help: consider importing this function
   |
1  | use nu_cmd_lang::create_default_context;
   |
help: if you import `create_default_context`, refer to it directly
   |
93 -     let mut engine_state = nu_command::create_default_context();
93 +     let mut engine_state = create_default_context();
   |
```

and `cargo bench` does not run...

## with this PR

```bash
cargo check --all-targets --workspace
```

is now happy and the benchmarks run again with `cargo bench`

---------

Co-authored-by: sholderbach <sholderbach@users.noreply.github.com>

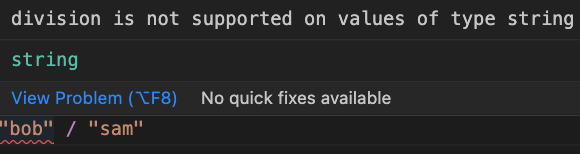

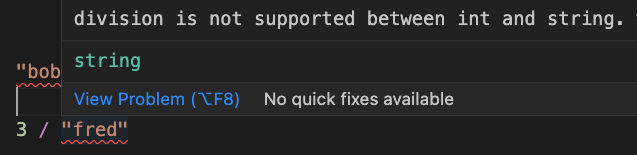

# Description

This improves the operation mismatch error in a few ways:
* We now detect if the left-hand side of the operation is at fault, and show a simpler error message if it is
* Removed the unhelpful hint
* Updated the error text to make it clear what types are causing the issue
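The left-hand-side check in the first bullet can be sketched as follows. This is a toy model under assumptions: the `Type`, `Op` and `TypeError` enums and the rule table are invented for illustration and do not reflect Nushell's actual type rules or error types.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
enum Type {
    Int,
    String,
    Bool,
}

#[derive(Debug, Clone, Copy, PartialEq)]
enum Op {
    Plus,
    Minus,
}

#[derive(Debug, PartialEq)]
enum TypeError {
    /// The left-hand side cannot be used with this operator at all.
    UnsupportedLhs { op: Op, lhs: Type },
    /// The left-hand side is fine, but this pair of types does not work.
    Mismatch { op: Op, lhs: Type, rhs: Type },
}

/// Toy rule table: which right-hand types does `lhs op _` accept?
fn accepted_rhs(op: Op, lhs: Type) -> &'static [Type] {
    match (op, lhs) {
        (Op::Plus, Type::Int) | (Op::Minus, Type::Int) => &[Type::Int],
        (Op::Plus, Type::String) => &[Type::String],
        _ => &[],
    }
}

fn check(op: Op, lhs: Type, rhs: Type) -> Result<Type, TypeError> {
    let accepted = accepted_rhs(op, lhs);
    if accepted.is_empty() {
        // No right-hand side could make this valid, so blame the left side only.
        return Err(TypeError::UnsupportedLhs { op, lhs });
    }
    if accepted.contains(&rhs) {
        Ok(lhs)
    } else {
        Err(TypeError::Mismatch { op, lhs, rhs })
    }
}

fn main() {
    println!("{:?}", check(Op::Plus, Type::Int, Type::Int));
    println!("{:?}", check(Op::Plus, Type::Bool, Type::Int));
    println!("{:?}", check(Op::Minus, Type::Int, Type::String));
}
```

In this sketch the simpler error carries only the operator and the left-hand type, while the mismatch error names both types.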

# User-Facing Changes

Error texts and spans will change

# Tests + Formatting


# After Submitting


# Description

Broken after #7415

We currently don't try to build the benchmarks in CI, so this slipped through the cracks.

# User-Facing Changes

None

# Tests + Formatting

A compile check is currently missing, but we are working towards that.

I've been using the new Criterion benchmarks and I noticed that they take a _long_ time to build before the benchmark can run. Turns out `cargo build` was building 3 separate benchmarking binaries with most of Nu's functionality in each one.

As a simple temporary fix, I've moved all the benchmarks into a single file so that we only build 1 binary.

### Future work

Would be nice to split the unrelated benchmarks out into modules, but when I did that a separate binary still got built for each one. I suspect Criterion's macros are doing something funny with module or file names. I've left a FIXME in the code to investigate this further.

A quick follow-up to https://github.com/nushell/nushell/pull/7686. This adds benchmarks for evaluating `default_env.nu` and `default_config.nu`, because evaluating config takes up the lion's share of Nushell's startup time. The benchmarks will help us speed up Nu's startup and test execution.

```
eval default_env.nu     time:   [4.2417 ms 4.2596 ms 4.2780 ms]
...
eval default_config.nu  time:   [1.9362 ms 1.9439 ms 1.9523 ms]
```

This PR sets up [Criterion](https://github.com/bheisler/criterion.rs) for benchmarking in the main `nu` crate, and adds some simple parser benchmarks.

To run the benchmarks, just do `cargo bench` or `cargo bench -- <regex matching benchmark names>` in the repo root:

```bash
〉cargo bench -- parse
...
     Running benches/parser_benchmark.rs (target/release/deps/parser_benchmark-75d224bac82d5b0b)
parse_default_env_file  time:   [221.17 µs 222.34 µs 223.61 µs]
Found 8 outliers among 100 measurements (8.00%)
  5 (5.00%) high mild
  3 (3.00%) high severe

parse_default_config_file
                        time:   [1.4935 ms 1.4993 ms 1.5059 ms]
Found 11 outliers among 100 measurements (11.00%)
  7 (7.00%) high mild
  4 (4.00%) high severe
```

Existing benchmarks from `nu-plugin` have been moved into the main `nu` crate to keep all our benchmarks in one place.