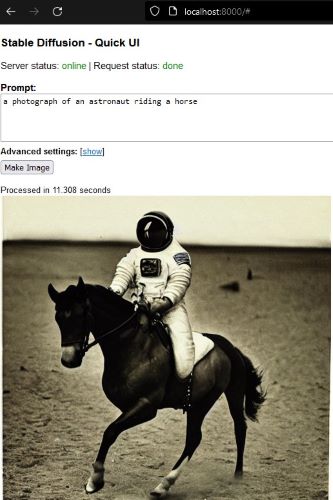

A simple browser UI for generating images from text prompts, using Stable Diffusion. Designed for running locally on your computer. Just enter the text prompt, and see the generated image.

What does this do?

Two things:

- Automatically downloads and installs Stable Diffusion on your local computer (no need to mess with conda or environments)

- Gives you a simple browser-based UI to talk to your local Stable Diffusion. Enter text prompts and view the generated image. No API keys required.

All processing happens on your local computer. Your prompts are not transmitted to, or processed on, any remote server.

System Requirements

- Requires Docker and Python (3.6 or higher).

- Linux or Windows 11 (with WSL). Basically, any system that can run Stable Diffusion.
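The requirements above can be checked before installing. The following is a minimal sketch, not part of this project: the function name `check_requirements` is hypothetical, and it only verifies the Python version and that a `docker` executable is on your `PATH` (it does not check that the Docker daemon is running).

```python
import shutil
import sys


def check_requirements(min_python=(3, 6)):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    # Compare the running interpreter against the minimum version from the README.
    if sys.version_info < min_python:
        wanted = ".".join(str(part) for part in min_python)
        problems.append("Python {} or higher is required".format(wanted))
    # shutil.which returns None when no matching executable is found on PATH.
    if shutil.which("docker") is None:
        problems.append("Docker was not found on PATH")
    return problems
```

Calling `check_requirements()` and printing each returned string gives a quick summary of what is missing.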

Installation

1. Download Quick UI (this project)

2. Unzip: `unzip main.zip`

3. Enter: `cd stable-diffusion-ui-main`

4. Install dependencies: `pip install fastapi uvicorn` (this is the framework and server used by this UI project)

5. Run: `./server.sh` (warning: this will take a while the first time, since it'll download Stable Diffusion's Docker image, nearly 17 GiB)

6. Open `http://localhost:8000` in your browser
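Because the first run can take a long time, it may help to check whether the UI is reachable before opening the browser. This is a minimal sketch using only the Python standard library; the helper name `server_is_up` is hypothetical and not part of this project, and it assumes the UI is served at the `http://localhost:8000` address given above.

```python
import urllib.error
import urllib.request


def server_is_up(url="http://localhost:8000", timeout=2.0):
    """Return True if something answers HTTP requests at url, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded, even though the status code was an error.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timed out, or the host could not be reached.
        return False
```

A `False` result right after starting `./server.sh` most likely just means the server has not finished starting yet.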

Usage

1. Open `http://localhost:8000` in your browser (after running `./server.sh` from step 5 of Installation).

2. Enter a text prompt, like `a photograph of an astronaut riding a horse`, in the textbox.

3. Press `Make Image`. This will take a while, depending on your system's processing power.

4. See the image generated using your prompt. If there's an error, the status message at the top will show 'error' in red.

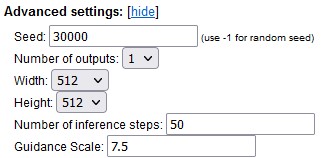

Advanced Settings

You can also configure settings such as `seed`, `width`, `height`, `num_outputs`, `num_inference_steps` and `guidance_scale` using the 'show' button next to 'Advanced settings'.

Use the same seed number to get the same image for a certain prompt. This is useful for refining a prompt without losing the basic image design. Use a seed of -1 to get random images.
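To illustrate the seed convention, here is a small sketch of how a settings dictionary could be assembled, with `-1` replaced by a randomly drawn seed. It is not this project's code: the function names, the default values (512x512, 50 steps, guidance scale 7.5) and the 32-bit seed range are all assumptions chosen for illustration. Only the setting names come from the list above.

```python
import random

MAX_SEED = 2**32 - 1  # assumed upper bound, not taken from this project


def resolve_seed(seed):
    """Return the seed unchanged, or draw a random one when seed is -1."""
    if seed == -1:
        return random.randint(0, MAX_SEED)
    return seed


def build_settings(prompt, seed=-1, width=512, height=512,
                   num_outputs=1, num_inference_steps=50, guidance_scale=7.5):
    """Collect the advanced settings into one dictionary (defaults are assumptions)."""
    return {
        "prompt": prompt,
        "seed": resolve_seed(seed),
        "width": width,
        "height": height,
        "num_outputs": num_outputs,
        "num_inference_steps": num_inference_steps,
        "guidance_scale": guidance_scale,
    }
```

Passing the same explicit seed twice, for example `build_settings("an astronaut", seed=42)`, yields identical settings, which is what lets you tweak the prompt while keeping the basic image design.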

Behind the scenes

This project is a quick way to get started with Stable Diffusion. You do not need to have Stable Diffusion already installed, and do not need any API keys. The first time it is run, this project automatically downloads Stable Diffusion's Docker image.

This project runs Stable Diffusion in a Docker container behind the scenes, using Stable Diffusion's official Docker image on replicate.com.
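As a rough picture of what talking to such a container can look like: images built with Replicate's Cog tool typically expose an HTTP endpoint that accepts a JSON body of the form `{"input": {...}}`. The sketch below only constructs such a request and does not send it. The URL, the port `5000` and the function name are assumptions for illustration, and this project's actual server code may differ.

```python
import json
import urllib.request

# Assumed address of the container's prediction endpoint (not confirmed by this README).
COG_URL = "http://localhost:5000/predictions"


def build_prediction_request(settings, url=COG_URL):
    """Wrap the settings in an {"input": ...} JSON body and return an unsent POST request."""
    body = json.dumps({"input": settings}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending the request would be a matter of passing it to `urllib.request.urlopen`, once the container is running and the endpoint is confirmed.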

Bug reports and code contributions welcome

This was built in a few hours for fun, so if you run into any problems, please feel free to file an issue.

Also, please feel free to submit a pull request if you have any code contributions in mind.

Disclaimer

I am not responsible for any images generated using this interface.