Just a quick change: the test I made for `--no-newline` was missing `--no-config-file`, so it could produce a false negative if your config has problems.

# Description

Fixes a potential panic in `ls`.

# User-Facing Changes

Entries in the same directory are sorted first based on whether or not they errored. Errors will be listed first, potentially stopping the pipeline short.
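The ordering described above can be sketched as a stable sort keyed on whether each entry is an error. This is a minimal illustration that assumes directory entries are modeled as `Result<String, String>`; the function name `errors_first` is hypothetical and this is not the actual `ls` implementation.

```rust
/// Hypothetical helper: sort directory entries so that errors come first.
///
/// `sort_by_key` is stable, so entries within each group (errors, successes)
/// keep their original relative order. Because `false < true`, keying on
/// `is_ok()` places every `Err` before every `Ok`.
fn errors_first(entries: &mut [Result<String, String>]) {
    entries.sort_by_key(|entry| entry.is_ok());
}
```

Since errors sort to the front, a consumer that stops at the first error sees it before any successful entries, which is why the pipeline may stop short.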

# Description

Fix the docs repo CI build error here:

https://github.com/nushell/nushell.github.io/actions/runs/12425087184/job/34691291790#step:5:18

The doc generated by `make_docs.nu` for the `polars profile` command makes the CI build fail due to an indentation error in the Markdown front matter. I used to fix it manually, but in the long run it's better to fix it in the source code.

# Description

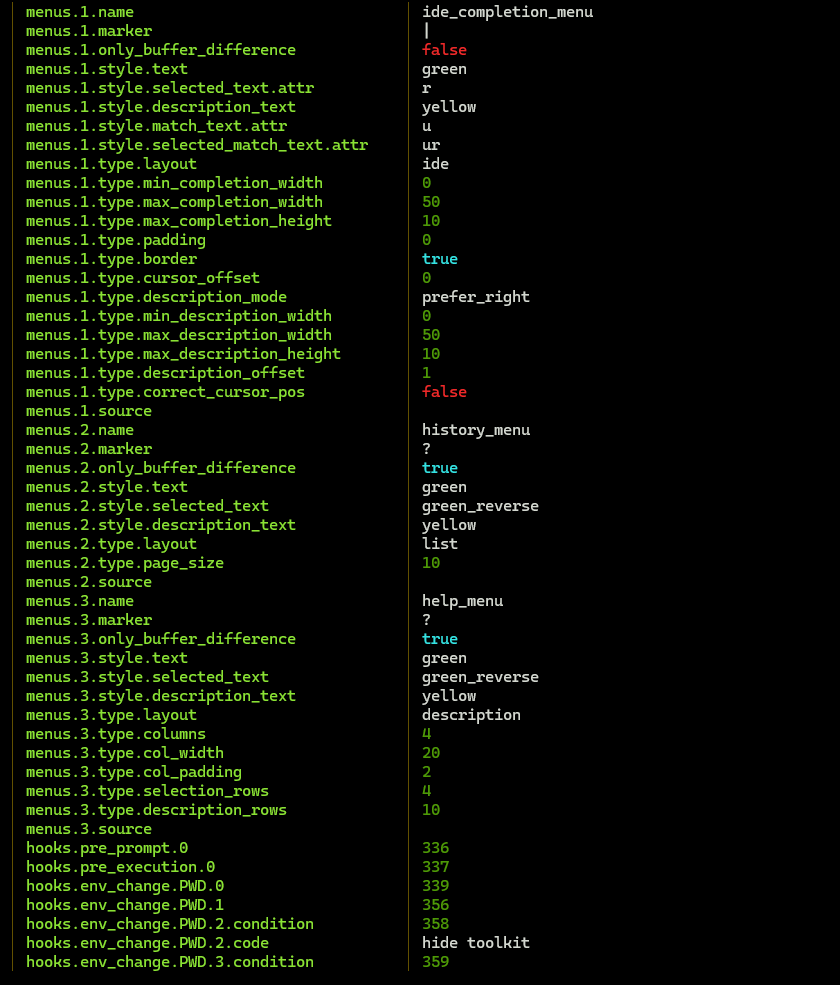

@maxim-uvarov found some bugs in the new `config flatten` command. This PR should take care of what's been identified so far.

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This

helps us keep track of breaking changes. -->

# Tests + Formatting

<!--

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to check that you're using the standard code style

- `cargo test --workspace` to check that all tests pass (on Windows make sure to [enable developer mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- `cargo run -- -c "use toolkit.nu; toolkit test stdlib"` to run the tests for the standard library

> **Note**
> from `nushell` you can also use the `toolkit` as follows
> ```bash
> use toolkit.nu # or use an `env_change` hook to activate it automatically
> toolkit check pr
> ```

-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the

documentation](https://github.com/nushell/nushell.github.io) after the

PR is merged, if necessary. This will help us keep the docs up to date.

-->

<!--

if this PR closes one or more issues, you can automatically link the PR

with

them by using one of the [*linking

keywords*](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue#linking-a-pull-request-to-an-issue-using-a-keyword),

e.g.

- this PR should close #xxxx

- fixes #xxxx

you can also mention related issues, PRs or discussions!

-->

# Description

<!--

Thank you for improving Nushell. Please, check our [contributing

guide](../CONTRIBUTING.md) and talk to the core team before making major

changes.

Description of your pull request goes here. **Provide examples and/or

screenshots** if your changes affect the user experience.

-->

I had issues with the following tests:

- `commands::network::http::delete::http_delete_timeout`

- `commands::network::http::get::http_get_timeout`

- `commands::network::http::options::http_options_timeout`

- `commands::network::http::patch::http_patch_timeout`

- `commands::network::http::post::http_post_timeout`

- `commands::network::http::put::http_put_timeout`

I checked what the actual issue was: the tested string `"did not properly respond after a period of time"` wasn't in the actual error. This happened because my German Windows returns a German error message, which obviously does not include that string. To fix that, I replaced the string check with a check for the OS error code, which is also part of the error message and should be language-agnostic (I hope).
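The idea of matching on the OS error code instead of the localized message can be sketched as follows. Rust's `std::io::Error` formats OS errors as `<message> (os error <code>)`, and only the message part comes from the (localized) operating system. The code 10060 (`WSAETIMEDOUT`, the Windows error whose English text is quoted above) and the helper names are assumptions for illustration, not necessarily what the tests use.

```rust
use std::io;

/// Windows Sockets "connection timed out" error code (assumed to be the one
/// these tests hit).
const WSAETIMEDOUT: i32 = 10060;

/// Hypothetical helper: true if an error message carries the timeout OS code.
///
/// The "(os error N)" suffix is added by Rust's formatting, not by the OS,
/// so it looks the same regardless of the system language.
fn is_windows_timeout(message: &str) -> bool {
    message.contains(&format!("(os error {})", WSAETIMEDOUT))
}

/// Hypothetical helper: the message Rust prints for a raw OS error code.
fn os_error_message(code: i32) -> String {
    io::Error::from_raw_os_error(code).to_string()
}
```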

# User-Facing Changes


None.

# Tests + Formatting


- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

\o/

# After Submitting


This file is not made accessible to the user through any of our `config` commands.

Thus, after discussing it with Douglas, I deleted it to ensure it doesn't go out of date (the version added with #14601 was not yet part of the version-bumping script).

All the necessary information on how to set up a `login.nu` file is provided in the website documentation.

Stumbled over an unnecessarily `pub` `fn action` and `struct Arguments` when reworking `into bits` in #14634.

Stuff like this should be local until proven otherwise, and then named appropriately.

# Description

This PR continues to tweak `config flatten` by looking up closures and block ids and extracting their content into the produced record.


# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

#14019 deprecated the `split-by` command. This sets its doc category to "deprecated" so that it will be displayed that way in the in-shell and online help.

# User-Facing Changes

`split-by` will now show as a deprecated command in help. It will also be reported by:

```nushell
help commands | where category == deprecated
```

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

N/A

# Description

Adds `$env.config.color_config.shape_garbage` to the default config so that it is populated out of the box.

Thanks to @PerchunPak for finding that it was missing.

# User-Facing Changes

I think this is useful on two levels, but it will be a change for a lot of users:

1. Accessing it won't generate an error out of the box.

2. Garbage errors are highlighted in reverse red in real time in the REPL. This means that, for example, typing just a `$` will start out as an error; once a valid variable (e.g., `$env`) is completed, the highlight will change to the parsed shape.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

N/A

# Description

It was possible to pass a bogus block id to `view source`. This PR makes the command more resilient, so it no longer panics when an invalid block id is passed in.
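The general pattern behind this kind of fix is to replace direct indexing, which panics on an out-of-range id, with a checked lookup that returns an error. The sketch below models the block store as a plain `Vec<String>` of source snippets; the `BlockStore` type and its `view_source` method are hypothetical and are not Nushell's actual engine API.

```rust
/// Hypothetical stand-in for the engine's block storage.
struct BlockStore {
    blocks: Vec<String>,
}

impl BlockStore {
    /// Looks up a block's source by id without panicking.
    ///
    /// `self.blocks[id]` would panic for an out-of-range id; here a negative id
    /// fails the `usize` conversion and an out-of-range id makes `get` return
    /// `None`, and both cases become an error value instead.
    fn view_source(&self, id: i64) -> Result<&str, String> {
        usize::try_from(id)
            .ok()
            .and_then(|index| self.blocks.get(index))
            .map(String::as_str)
            .ok_or_else(|| format!("block id {id} not found"))
    }
}
```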

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

This is supposed to be a quality-of-life command that just makes some things easier when dealing with a Nushell config. Really, all it does is show you the current config in a flattened state. That's it. I was thinking this could be useful when comparing config settings between old and new config files. There is still room for improvement. For instance, closures are listed as an int. They can be resolved with a `view source <int>` pipeline, but that could all be built in too.

The command works by getting the current configuration, serializing it to JSON, then flattening that JSON. BTW, there's a new `flatten_json.rs` in `nu-utils`. Theoretically, all of this could be done in a custom command script, but based on the work from Discord, it has proven to be exceedingly difficult.
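The flattening step can be sketched as a recursive walk that joins nested keys with a separator. This is a minimal illustration under stated assumptions: it uses a small hand-rolled `Json` enum instead of a real JSON library, uses `.` as the separator, and indexes list elements by position. The actual `flatten_json.rs` in `nu-utils` may make different choices.

```rust
use std::collections::BTreeMap;

/// Hypothetical minimal JSON value type, used only for this sketch.
enum Json {
    Null,
    Bool(bool),
    Num(f64),
    Str(String),
    Arr(Vec<Json>),
    Obj(Vec<(String, Json)>),
}

/// Joins a parent key and a child key with '.', e.g. "history" + "file_format".
fn join_key(prefix: &str, key: &str) -> String {
    if prefix.is_empty() {
        key.to_string()
    } else {
        format!("{prefix}.{key}")
    }
}

/// Recursively flattens nested objects and arrays into dotted keys
/// ("history.file_format", "menus.0.name", ...), storing each leaf value
/// as a string in `out`.
fn flatten(value: &Json, prefix: &str, out: &mut BTreeMap<String, String>) {
    match value {
        Json::Obj(fields) => {
            for (key, child) in fields {
                flatten(child, &join_key(prefix, key), out);
            }
        }
        Json::Arr(items) => {
            for (index, child) in items.iter().enumerate() {
                flatten(child, &join_key(prefix, &index.to_string()), out);
            }
        }
        Json::Null => {
            out.insert(prefix.to_string(), "null".to_string());
        }
        Json::Bool(b) => {
            out.insert(prefix.to_string(), b.to_string());
        }
        Json::Num(n) => {
            out.insert(prefix.to_string(), n.to_string());
        }
        Json::Str(s) => {
            out.insert(prefix.to_string(), s.clone());
        }
    }
}
```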

Here are some more complex items to flatten.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

This PR adds another Nushell introspection/debug command, `view blocks`. This command shows the parsed blocks stored in the EngineState's memory. The number of blocks may keep growing as you use the REPL.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

This PR adds the `merge deep` command. This allows you to merge nested records and tables/lists within records together, instead of overwriting them. The code for `merge` was reworked to support more general merging of values, so `merge` and `merge deep` use the same underlying code.

`merge deep` mostly works like `merge`, except it recurses into inner records which exist in both the input and argument rather than just overwriting them. For lists and, by extension, tables, `merge deep` has a couple of different strategies for merging inner lists, which can be selected with the `--strategy` flag. These are:

- `table`: Merges tables element-wise, similarly to the `merge` command. Non-table lists are not merged.

- `overwrite`: Lists and tables are overwritten with their corresponding value from the argument, similarly to scalars.

- `append`: Lists and tables in the input are appended with the corresponding list from the argument.

- `prepend`: Lists and tables in the input are prepended with the corresponding list from the argument.
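The recursion and the four list strategies can be sketched on a simplified value type. This is an illustration only: it uses a toy `Value` enum rather than Nushell's real `Value`, and how the `table` strategy treats tables of different lengths (here, unmatched rows from either side are kept) is an assumption, not something stated in this PR.

```rust
/// Toy stand-in for Nushell's `Value` (hypothetical, for illustration only).
#[derive(Clone, Debug, PartialEq)]
enum Value {
    Int(i64),
    Str(String),
    List(Vec<Value>),
    Record(Vec<(String, Value)>),
}

/// The list-merging strategies described above.
#[derive(Clone, Copy, Debug)]
enum Strategy {
    Table,
    Overwrite,
    Append,
    Prepend,
}

/// In this sketch, a "table" is a non-empty list whose elements are all records.
fn is_table(items: &[Value]) -> bool {
    !items.is_empty() && items.iter().all(|v| matches!(v, Value::Record(_)))
}

fn merge_deep(input: Value, arg: Value, strategy: Strategy) -> Value {
    match (input, arg) {
        // Records on both sides are merged key by key, recursively.
        (Value::Record(mut left), Value::Record(right)) => {
            for (key, right_value) in right {
                match left.iter().position(|(k, _)| *k == key) {
                    Some(i) => {
                        // Move the old value out so it can be merged by value.
                        let left_value = std::mem::replace(&mut left[i].1, Value::Int(0));
                        left[i].1 = merge_deep(left_value, right_value, strategy);
                    }
                    None => left.push((key, right_value)),
                }
            }
            Value::Record(left)
        }
        // Lists are combined according to the chosen strategy.
        (Value::List(mut left), Value::List(right)) => match strategy {
            Strategy::Overwrite => Value::List(right),
            Strategy::Append => {
                left.extend(right);
                Value::List(left)
            }
            Strategy::Prepend => {
                let mut combined = right;
                combined.extend(left);
                Value::List(combined)
            }
            Strategy::Table if is_table(&left) && is_table(&right) => {
                // Merge rows element-wise; unmatched rows are kept (assumption).
                let mut rows = Vec::new();
                let (mut l, mut r) = (left.into_iter(), right.into_iter());
                loop {
                    match (l.next(), r.next()) {
                        (Some(a), Some(b)) => rows.push(merge_deep(a, b, strategy)),
                        (Some(a), None) => rows.push(a),
                        (None, Some(b)) => rows.push(b),
                        (None, None) => break,
                    }
                }
                Value::List(rows)
            }
            // Under `table`, non-table lists are not merged: the argument wins.
            Strategy::Table => Value::List(right),
        },
        // Scalars and mismatched types: the argument overwrites the input.
        (_, arg) => arg,
    }
}
```

Keeping one recursive function and branching only at the list case mirrors the PR's point that `merge` and `merge deep` can share the same underlying code.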

This can also be used with the new config changes to write a monolithic record of _only_ the config values you want to change:

```nushell
# in config file:
const overrides = {
    history: {
        file_format: "sqlite",
        isolation: true
    }
}

# use append strategy for lists, e.g., menus keybindings
$env.config = $env.config | merge deep --strategy=append $overrides

# later, in REPL:
$env.config.history
# => ╭───────────────┬────────╮
# => │ max_size      │ 100000 │
# => │ sync_on_enter │ true   │
# => │ file_format   │ sqlite │
# => │ isolation     │ true   │
# => ╰───────────────┴────────╯
```

<details>

<summary>Performance details</summary>

For those interested, there was less than one standard deviation of difference in startup time when setting each config item individually versus using <code>merge deep</code>, so you can use <code>merge deep</code> in your config at no measurable performance cost. Here are my results:

My normal config (in 0.101 style, with each `$env.config.[...]` value updated individually):

```nushell
bench --pretty { ./nu -l -c '' }
# => 45ms 976µs 983ns +/- 455µs 955ns
```

Equivalent config with a single `overrides` record and `merge deep -s append`:

```nushell
bench --pretty { ./nu -l -c '' }
# => 45ms 587µs 428ns +/- 702µs 944ns
```

</details>

Huge thanks to @Bahex for designing the strategies API and helping finish up this PR while I was sick ❤️

Related: #12148

# User-Facing Changes

Adds the `merge deep` command to recursively merge records. For example:

```nushell
{a: {foo: 123 bar: "overwrite me"}, b: [1, 2, 3]} | merge deep {a: {bar: 456, baz: 789}, b: [4, 5, 6]}
# => ╭───┬───────────────╮
# => │   │ ╭─────┬─────╮ │
# => │ a │ │ foo │ 123 │ │
# => │   │ │ bar │ 456 │ │
# => │   │ │ baz │ 789 │ │
# => │   │ ╰─────┴─────╯ │
# => │   │ ╭───┬───╮     │
# => │ b │ │ 0 │ 4 │     │
# => │   │ │ 1 │ 5 │     │
# => │   │ │ 2 │ 6 │     │
# => │   │ ╰───┴───╯     │
# => ╰───┴───────────────╯
```

`merge deep` also has different strategies for merging inner lists and tables. For example, you can use the `append` strategy to _merge_ the inner `b` list instead of overwriting it.

```nushell
{a: {foo: 123 bar: "overwrite me"}, b: [1, 2, 3]} | merge deep --strategy=append {a: {bar: 456, baz: 789}, b: [4, 5, 6]}
# => ╭───┬───────────────╮
# => │   │ ╭─────┬─────╮ │
# => │ a │ │ foo │ 123 │ │
# => │   │ │ bar │ 456 │ │
# => │   │ │ baz │ 789 │ │
# => │   │ ╰─────┴─────╯ │
# => │   │ ╭───┬───╮     │
# => │ b │ │ 0 │ 1 │     │
# => │   │ │ 1 │ 2 │     │
# => │   │ │ 2 │ 3 │     │
# => │   │ │ 3 │ 4 │     │
# => │   │ │ 4 │ 5 │     │
# => │   │ │ 5 │ 6 │     │
# => │   │ ╰───┴───╯     │
# => ╰───┴───────────────╯
```

**Note to release notes writers**: Please credit @Bahex for this PR as well 😄

# Tests + Formatting


Added tests for deep merge

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting


N/A

---------

Co-authored-by: Bahex <bahey1999@gmail.com>

Bumps [crate-ci/typos](https://github.com/crate-ci/typos) from 1.28.2 to 1.28.4.

<details>
<summary>Release notes</summary>
<p><em>Sourced from <a href="https://github.com/crate-ci/typos/releases">crate-ci/typos's releases</a>.</em></p>
<blockquote>
<h2>v1.28.4</h2>
<h2>[1.28.4] - 2024-12-16</h2>
<h3>Features</h3>
<ul>
<li><code>--format sarif</code> support</li>
</ul>
<h2>v1.28.3</h2>
<h2>[1.28.3] - 2024-12-12</h2>
<h3>Fixes</h3>
<ul>
<li>Correct <code>imlementations</code>, <code>includs</code>, <code>qurorum</code>, <code>transatctions</code>, <code>trasnactions</code>, <code>validasted</code>, <code>vview</code></li>
</ul>
</blockquote>
</details>
<details>
<summary>Changelog</summary>
<p><em>Sourced from <a href="https://github.com/crate-ci/typos/blob/master/CHANGELOG.md">crate-ci/typos's changelog</a>.</em></p>
<blockquote>
<h2>[1.28.4] - 2024-12-16</h2>
<h3>Features</h3>
<ul>
<li><code>--format sarif</code> support</li>
</ul>
<h2>[1.28.3] - 2024-12-12</h2>
<h3>Fixes</h3>
<ul>
<li>Correct <code>imlementations</code>, <code>includs</code>, <code>qurorum</code>, <code>transatctions</code>, <code>trasnactions</code>, <code>validasted</code>, <code>vview</code></li>
</ul>
</blockquote>
</details>
<details>
<summary>Commits</summary>
<ul>
<li><a href="9d89015957"><code>9d89015</code></a> chore: Release</li>
<li><a href="6b24563a99"><code>6b24563</code></a> chore: Release</li>
<li><a href="bd0a2769ae"><code>bd0a276</code></a> docs: Update changelog</li>
<li><a href="370109dd4d"><code>370109d</code></a> Merge pull request <a href="https://redirect.github.com/crate-ci/typos/issues/1047">#1047</a> from Zxilly/sarif</li>
<li><a href="63908449a7"><code>6390844</code></a> feat: Implement sarif format reporter</li>
<li><a href="32b96444b9"><code>32b9644</code></a> Merge pull request <a href="https://redirect.github.com/crate-ci/typos/issues/1169">#1169</a> from klensy/deps</li>
<li><a href="720258f60b"><code>720258f</code></a> Merge pull request <a href="https://redirect.github.com/crate-ci/typos/issues/1176">#1176</a> from Ghaniyyat05/master</li>
<li><a href="a42904ad6e"><code>a42904a</code></a> Update README.md</li>
<li><a href="d1c850b2b5"><code>d1c850b</code></a> chore: Release</li>
<li><a href="a491fd56c0"><code>a491fd5</code></a> chore: Release</li>
<li>Additional commits viewable in <a href="https://github.com/crate-ci/typos/compare/v1.28.2...v1.28.4">compare view</a></li>
</ul>
</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually

- `@dependabot show <dependency name> ignore conditions` will show all of the ignore conditions of the specified dependency

- `@dependabot ignore this major version` will close this PR and stop Dependabot creating any more for this major version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop Dependabot creating any more for this minor version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop Dependabot creating any more for this dependency (unless you reopen the PR or upgrade to it yourself)

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

# Description

With great thanks to @fdncred and especially @PerchunPak (see #14601) for finding and fixing a number of issues that I pulled in here due to the filename changes and upcoming freeze.

This PR primarily fixes a poor wording choice in the new filenames and `config` command options. The fact that these were called `sample_config.nu` (etc.) and accessed via `config --sample` created a great deal of confusion. These were never intended to be used as-is as config files, but rather as in-shell documentation.

As such, I've renamed them:

* `sample_config.nu` becomes `doc_config.nu`

* `sample_env.nu` becomes `doc_env.nu`

* `config nu --sample` becomes `config nu --doc`

* `config env --sample` becomes `config env --doc`

Also the following:

* Updates `doc_config.nu` with a few additional comment fixes on top of @PerchunPak's changes.

* Adds version numbers to all files. The version script will need to be updated to include some files after this PR.

* Adds documentation on plugin and plugin_gc configuration, which I had previously failed to fully update from the older wording.

* Updates the comments in the `scaffold_*.nu` files to point people to `help config`/`help nu` so that, if things change in the future, the comments are less likely to become outdated.

# User-Facing Changes

Mostly doc.

`config nu` and `config env` changes update new behavior previously added in 0.100.1.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

* Update configuration chapter of doc

* Update the blog entry on migrating config

* Update `bump-version.nu`

# Description

This PR allows the `view source` command to view source based on an int value. I wrote this specifically to be able to see closures whose text is hidden, which are displayed as `<Closure #>`. Those numbers can then be passed to the `view source` command.

# User-Facing Changes


# Tests + Formatting


# After Submitting


- fixes #14572

# Description

This allows columns to be coalesced on full joins with `polars join`, providing functionality similar to the old `--outer` join behavior.
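To illustrate what coalescing means for a full (outer) join: without it, the result carries separate left and right key columns, each null where that side had no match; with it, there is a single key column holding whichever key is present. The sketch below is a plain-Rust model assuming unique integer keys; the function name is hypothetical and this is not the Polars API.

```rust
use std::collections::BTreeMap;

/// One output row: the coalesced key plus the (possibly missing) value
/// from each side.
type Row = (i64, Option<String>, Option<String>);

/// Hypothetical full join on unique keys with the key columns coalesced.
/// Rows come out sorted by key only because a `BTreeMap` is used here;
/// a real dataframe join makes no such ordering promise.
fn full_join_coalesced(left: &[(i64, &str)], right: &[(i64, &str)]) -> Vec<Row> {
    let mut rows: BTreeMap<i64, (Option<String>, Option<String>)> = BTreeMap::new();
    for &(key, value) in left {
        rows.entry(key).or_default().0 = Some(value.to_string());
    }
    for &(key, value) in right {
        rows.entry(key).or_default().1 = Some(value.to_string());
    }
    rows.into_iter().map(|(key, (l, r))| (key, l, r)).collect()
}
```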

# User-Facing Changes

- Provides a new flag `--coalesce-columns` on the `polars join` command

# Description

Add tests for `path self`.

I wasn't very familiar with the code base, especially the testing utilities, when I first implemented `path self`. It's been on my mind to add tests for it since then.

# Description

Fixes #14600 by adding a default value for missing keys in `default_config.nu`:

* `$env.config.color_config.glob`

* `$env.config.color_config.closure`

# User-Facing Changes

Will no longer error when accessing these keys.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

N/A

# Description

`from ...` conversions pass along all metadata except `content_type`, which they set to `None`.

## Rationale

`open`ing a file results in no `content_type` metadata if it can be parsed into a Nushell data structure, while using `open --raw` results in `content_type` metadata.

`from ...` commands should preserve metadata ***except*** for `content_type`, because after parsing, the data is no longer of that `content_type`; it is just structured Nushell data.

These commands should return identical data *and* identical metadata:

```nushell
open foo.csv
```

```nushell
open foo.csv --raw | from csv
```
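The rule can be sketched as copying the incoming metadata unchanged except for clearing `content_type`. The struct below is a hypothetical simplification; the `data_source` field and the `metadata_after_parse` name are assumptions for illustration, not Nushell's actual `PipelineMetadata` definition.

```rust
/// Hypothetical, simplified model of pipeline metadata.
#[derive(Clone, Debug, PartialEq)]
struct Metadata {
    /// Where the data came from, e.g. a file path (assumed field).
    data_source: Option<String>,
    /// Content type of raw data, e.g. "text/csv".
    content_type: Option<String>,
}

/// Metadata for the output of a `from ...` conversion: everything is passed
/// along except `content_type`, because the parsed value is structured data
/// rather than raw content of that type.
fn metadata_after_parse(input: &Metadata) -> Metadata {
    Metadata {
        content_type: None,
        ..input.clone()
    }
}
```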

# User-Facing Changes

N/A

# Tests + Formatting

- 🟢 toolkit fmt

- 🟢 toolkit clippy

- 🟢 toolkit test

- 🟢 toolkit test stdlib

# After Submitting

N/A

# Description

With `NU_LIB_DIRS`, `NU_PLUGIN_DIRS`, and `ENV_CONVERSIONS` now moved out of `default_env.nu`, we're down to just a few left. This moves all non-closure `PROMPT` variables out as well (and into Rust `main()`). It also:

* Implements #14565 and sets the default `TRANSIENT_PROMPT_COMMAND_RIGHT` and `TRANSIENT_MULTILINE_INDICATOR` to an empty string so that they are removed for easier copying from the terminal.

* Reverses portions of #14249 where I was overzealous in some of the variables that were imported

* Fixes #12096

* Will be, I believe, the final fix needed to close #13670

# User-Facing Changes

Transient prompt will now remove the right-prompt and multiline-indicator once a commandline has been entered.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`


# After Submitting

Release notes addition

# Description

I noticed that the closure of `std/iter scan` takes its parameters in the reverse order compared to `reduce`, so I changed it to be consistent.

It also didn't provide `$acc` as `$in` like `reduce` does, so I fixed that as well.
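The new parameter order can be modeled in Rust as a scan whose closure receives the current item first and the accumulator second, mirroring `reduce`'s `{|it, acc| ...}`. This is an illustrative sketch, not the std library code; the `skip_init` parameter stands in for the `-n` flag used in the examples below and is assumed to drop the initial value from the output.

```rust
/// Running accumulation with closure arguments ordered (item, accumulator),
/// matching `reduce`. Returns every intermediate accumulator; unless
/// `skip_init` is set, the initial value is included as the first element.
fn scan<T, A: Clone>(items: &[T], init: A, f: impl Fn(&T, &A) -> A, skip_init: bool) -> Vec<A> {
    let mut out = Vec::with_capacity(items.len() + 1);
    if !skip_init {
        out.push(init.clone());
    }
    let mut acc = init;
    for item in items {
        acc = f(item, &acc);
        out.push(acc.clone());
    }
    out
}
```

With the item-first order, an order-sensitive closure such as string concatenation has to put the accumulator first in its body, which is exactly the change the examples below ask for.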

# User-Facing Changes

> [!WARNING]
> This is a breaking change for all operations where the order of `$it` and `$acc` matters.

- This is still fine:

  ```nushell
  [1 2 3] | iter scan 0 {|x, y| $x + $y}
  ```

- This is broken:

  ```nushell
  [a b c d] | iter scan "" {|x, y| [$x, $y] | str join} -n
  ```

  and should be changed to either of these:

  - ```nushell
    [a b c d] | iter scan "" {|it, acc| [$acc, $it] | str join} -n
    ```

  - ```nushell
    [a b c d] | iter scan "" {|it| append $it | str join} -n
    ```

# Tests + Formatting

The only changes are in the std library and its tests:

- 🟢 toolkit test stdlib

# After Submitting

Mention in release notes

# Description

Many filter commands that take a closure argument (`each`, `filter`, etc.) have a wrong signature for the closure, indicating an extra int argument.

I think this is a leftover from before `enumerate` was added, when it was used to access the iteration index. None of the commands changed in this PR actually supply this int argument.

# User-Facing Changes

N/A

# Tests + Formatting

- 🟢 toolkit fmt

- 🟢 toolkit clippy

- 🟢 toolkit test

- 🟢 toolkit test stdlib

# After Submitting

N/A

# Description

Add an example to `reduce` that shows the accumulator can also be accessed as pipeline input.

# User-Facing Changes

N/A

# Tests + Formatting

- 🟢 toolkit fmt

- 🟢 toolkit clippy

- 🟢 toolkit test

- 🟢 toolkit test stdlib

# After Submitting

N/A

Addresses some null handling issues in #6882

# Description

This changes the implementation of guessing a column type when a schema is not specified.

New behavior:

1. Use the type of the first non-`Value::Nothing` value as the column's data type.

2. If the value type changes (ignoring `Value::Nothing`) in subsequent values, the data type will be changed to `DataType::Object("Value", None)`.

3. If a column does not have a value type (i.e., all of its values are `Value::Nothing`), `DataType::Object("Value", None)` will be assumed.
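The three rules above can be sketched as a single pass over a column. The `ValueKind` and `DataType` enums here are simplified, hypothetical stand-ins (not the real Nushell or Polars types), and `DataType::Object` represents the `DataType::Object("Value", None)` fallback.

```rust
/// Simplified kinds of Nushell values (hypothetical stand-in for `Value`).
#[derive(Clone, Copy, Debug, PartialEq)]
enum ValueKind {
    Nothing,
    Int,
    Float,
    Str,
}

/// Simplified column data types; `Object` stands for the generic fallback.
#[derive(Clone, Copy, Debug, PartialEq)]
enum DataType {
    Int64,
    Float64,
    String,
    Object,
}

fn to_dtype(kind: ValueKind) -> DataType {
    match kind {
        ValueKind::Int => DataType::Int64,
        ValueKind::Float => DataType::Float64,
        ValueKind::Str => DataType::String,
        ValueKind::Nothing => DataType::Object,
    }
}

/// Infers a column type when no schema is given:
/// 1. the first non-Nothing value decides the type;
/// 2. a later non-Nothing value of a different kind forces `Object`;
/// 3. a column with only Nothing values (or no values) is `Object`.
fn infer_column_type(values: &[ValueKind]) -> DataType {
    let mut first: Option<ValueKind> = None;
    for &kind in values.iter().filter(|k| **k != ValueKind::Nothing) {
        match first {
            None => first = Some(kind),
            Some(seen) if seen != kind => return DataType::Object,
            Some(_) => {}
        }
    }
    first.map_or(DataType::Object, to_dtype)
}
```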

Fixes #14542

# User-Facing Changes

Constant values are no longer missing from `scope variables` output

when the IR evaluator is enabled:

```diff
 const foo = 1
 scope variables | where name == "$foo" | get value.0 | to nuon
-null
+int
```

This PR should close

1. #10327

1. #13667

1. #13810

1. #14129

# Description

For `#` to start a comment, it now needs to either be the first character of the token or be preceded by a ` ` (space).

So now you can do this:

```
~/Projects/nushell> 1..10 | each {echo test#testing }
╭───┬──────────────╮
│ 0 │ test#testing │
│ 1 │ test#testing │
│ 2 │ test#testing │
│ 3 │ test#testing │
│ 4 │ test#testing │
│ 5 │ test#testing │
│ 6 │ test#testing │
│ 7 │ test#testing │
│ 8 │ test#testing │
│ 9 │ test#testing │
╰───┴──────────────╯
```
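The rule can be sketched as a scan for the first `#` that either begins the input or follows whitespace. This simplified sketch makes assumptions that Nushell's real lexer does not: it ignores quoting and other lexer state, and it treats any ASCII whitespace (not only a space) as a valid separator. The function name `comment_start` is hypothetical.

```rust
/// Hypothetical helper: byte offset where a comment starts, if any.
///
/// A `#` starts a comment only at the start of the input or when the
/// preceding byte is whitespace, so `#` inside a word such as
/// `test#testing` stays part of the word. Quotes are ignored in this sketch.
fn comment_start(line: &str) -> Option<usize> {
    let bytes = line.as_bytes();
    bytes.iter().enumerate().find_map(|(i, &b)| {
        let at_token_start = i == 0 || bytes[i - 1].is_ascii_whitespace();
        (b == b'#' && at_token_start).then_some(i)
    })
}
```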

# User-Facing Changes

It is a breaking change if anyone expected comments to start in the middle of a string without any prefixing ` ` (space).

# Tests + Formatting

Did all:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to check that you're using the standard code style

- `cargo test --workspace` to check that all tests pass (on Windows make sure to [enable developer mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- `cargo run -- -c "use toolkit.nu; toolkit test stdlib"` to run the tests for the standard library

# After Submitting

I can't see anything that needs updating in [the documentation](https://github.com/nushell/nushell.github.io), but please point me in the right direction if there is anything.

# Description

Closes #14521

This PR tweaks the way 64-bit hex numbers are parsed.

### Before

```nushell
❯ 0xffffffffffffffef
Error: nu::shell::external_command

  × External command failed
   ╭─[entry #1:1:1]
 1 │ 0xffffffffffffffef
   · ─────────┬────────
   ·          ╰── Command `0xffffffffffffffef` not found
   ╰────
  help: `0xffffffffffffffef` is neither a Nushell built-in or a known external command
```

### After

```nushell
❯ 0xffffffffffffffef
-17
```
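One way to get this behavior is sketched below in Rust, under the assumption that the digits are parsed as an unsigned 64-bit integer and the bits are then reinterpreted as a signed `i64`. That is consistent with the `-17` shown above, but it is not necessarily how Nushell's parser does it, and `parse_hex_i64` is a hypothetical name.

```rust
/// Parses a `0x`-prefixed literal of up to 64 bits into an `i64`.
fn parse_hex_i64(literal: &str) -> Option<i64> {
    let digits = literal.strip_prefix("0x")?;
    // Reject empty input and anything that is not a hex digit (this also
    // rules out the leading `+` that `from_str_radix` would accept).
    if digits.is_empty() || !digits.chars().all(|c| c.is_ascii_hexdigit()) {
        return None;
    }
    // Parse as unsigned so the full 64-bit range fits; values needing more
    // than 64 bits overflow and yield `None`.
    let bits = u64::from_str_radix(digits, 16).ok()?;
    // Reinterpret the bit pattern as a two's-complement signed integer.
    Some(bits as i64)
}
```

With this approach, literals that need more than 64 bits still fail to parse, because `u64::from_str_radix` reports an overflow.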

# User-Facing Changes


# Tests + Formatting


# After Submitting


Fixes #14554

# User-Facing Changes

Raw strings are now supported in match patterns:

```diff
match "foo" { r#'foo'# => true, _ => false }
-false
+true
```

#14556 seems strange to me because it downgrades the `windows-target` version.

So in this PR I tried to update it by hand, and also ran `cargo update` manually to see how it goes.


# Description


Fixes #14567

Nushell's `exec` command now decrements the `SHLVL` environment variable before passing it to the target executable.

Like `SHLVL` initialization, this only applies in interactive sessions.

This PR also makes a small change to `SHLVL` initialization (removing an unnecessary type conversion).
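A minimal Rust sketch of the `SHLVL` adjustment, assuming the value is decremented by one and left untouched when it is missing or not an integer. The function name `shlvl_for_exec` is hypothetical, and the real change may handle edge cases differently.

```rust
/// Computes the `SHLVL` value to hand to a program that replaces the current
/// shell via `exec`. Returns `None` (leave the variable as-is) when the
/// current value is missing or not an integer.
fn shlvl_for_exec(current: Option<&str>) -> Option<String> {
    let level: i64 = current?.trim().parse().ok()?;
    // `exec` replaces this shell instead of nesting a new one, so undo the
    // increment made when this shell started. Saturate to avoid overflow.
    Some(level.saturating_sub(1).to_string())
}
```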

# User-Facing Changes


None.

# Tests + Formatting


Formatted.

Tested interactively with several shells (bash, zsh, fish), including `exec`-ing between them; it works well this time.

# After Submitting


# Description

With Windows `Path` case-insensitivity in place, we no longer need an `ENV_CONVERSIONS` entry for `PATH`, as `nu_engine::env::convert_env_values()` handles it automatically.

This PR:

* Removes the default `ENV_CONVERSIONS` entry for `PATH` from `default_env.nu`

* Sets `ENV_CONVERSIONS` to an empty record (so it can be `merge`'d) in `main()` instead

# User-Facing Changes

No behavioral changes. Users will now have an empty `ENV_CONVERSIONS` at startup by default, but behavior should not change.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

# Description

Tidying up some of the wording of the sample and scaffold files to align with our current recommendations:

* Continue to generate a commented-only `env.nu` and `config.nu` on first launch.

* The generated `env.nu` mentions that most configuration can be done in `config.nu`.

* The `sample_env.nu` mentions the same. I might try getting rid of `config env --sample` entirely (it's new as of 0.100 anyway).

* All configuration is now documented "in-shell" in `sample_config.nu`, which can be viewed using `config nu --sample`. This means that environment variables that used to be in `sample_env.nu` have been moved to `sample_config.nu`.

# User-Facing Changes

Doc-only

# Tests + Formatting

Doc-only changes, but:

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

Need to work on updates to the Config chapter.

# Description

Fixes #14544; this is also the reciprocal of #14549.

Before: If both a const and an env `NU_PLUGIN_DIRS` were defined at the same time, the env paths would not be used.

After: The directories from `const NU_PLUGIN_DIRS` are searched for a matching filename, and if it is not found, the `$env.NU_PLUGIN_DIRS` directories are searched.

Before: `$env.NU_PLUGIN_DIRS` was unnecessarily set both in `main()` and in `default_env.nu`.

After: `$env.NU_PLUGIN_DIRS` is only set in `main()`.

Before: `$env.NU_PLUGIN_DIRS` was set to `plugins` in the config directory.

After: `$env.NU_PLUGIN_DIRS` is set to an empty list and `const NU_PLUGIN_DIRS` is set to the directory above.

Also updates `sample_env.nu` to use the `const`.
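The new search order can be sketched in Rust as follows. `find_plugin` is a hypothetical helper, not Nushell's plugin-loading code; the existence check is passed in as a closure so the ordering can be shown without touching the filesystem (in real use it might be something like `|p| p.is_file()`).

```rust
use std::path::{Path, PathBuf};

/// Looks for `filename` in the `const NU_PLUGIN_DIRS` directories first and
/// only then in the `$env.NU_PLUGIN_DIRS` directories.
fn find_plugin(
    filename: &str,
    const_dirs: &[PathBuf],
    env_dirs: &[PathBuf],
    exists: impl Fn(&Path) -> bool,
) -> Option<PathBuf> {
    const_dirs
        .iter()
        .chain(env_dirs)
        .map(|dir| dir.join(filename))
        .find(|candidate| exists(candidate.as_path()))
}
```

Because the `const` directories come first in the chain, a plugin present in both locations resolves to the `const` copy.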

# User-Facing Changes

Most scenarios should work just fine, as there continues to be an `$env.NU_PLUGIN_DIRS` to append to or override.

However, there is a small chance of a breaking change if someone was *querying* the old default `$env.NU_PLUGIN_DIRS`.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

Also updated the `env` tests and added one for the `const`.

# After Submitting

Config doc updates

Bumps [scraper](https://github.com/causal-agent/scraper) from 0.21.0 to

0.22.0.

<details>
<summary>Release notes</summary>
<p><em>Sourced from <a href="https://github.com/causal-agent/scraper/releases">scraper's releases</a>.</em></p>
<blockquote>
<h2>v0.22.0</h2>
<h2>What's Changed</h2>
<ul>
<li>Make current nightly version of Clippy happy. by <a href="https://github.com/adamreichold"><code>@adamreichold</code></a> in <a href="https://redirect.github.com/rust-scraper/scraper/pull/220">rust-scraper/scraper#220</a></li>
<li>RFC: Drop hash table for per-element attributes for more compact sorted vector by <a href="https://github.com/adamreichold"><code>@adamreichold</code></a> in <a href="https://redirect.github.com/rust-scraper/scraper/pull/221">rust-scraper/scraper#221</a></li>
<li>Bump ego-tree to version 0.10.0 by <a href="https://github.com/cfvescovo"><code>@cfvescovo</code></a> in <a href="https://redirect.github.com/rust-scraper/scraper/pull/222">rust-scraper/scraper#222</a></li>
</ul>
<p><strong>Full Changelog</strong>: <a href="https://github.com/rust-scraper/scraper/compare/v0.21.0...v0.22.0">https://github.com/rust-scraper/scraper/compare/v0.21.0...v0.22.0</a></p>
</blockquote>
</details>
<details>
<summary>Commits</summary>
<ul>
<li><a href="dcf5e0c781"><code>dcf5e0c</code></a> Version 0.22.0</li>
<li><a href="932ed03849"><code>932ed03</code></a> Merge pull request <a href="https://redirect.github.com/causal-agent/scraper/issues/222">#222</a> from rust-scraper/bump-ego-tree</li>
<li><a href="483ecab721"><code>483ecab</code></a> Bump ego-tree to version 0.10.0</li>
<li><a href="26f04ed47c"><code>26f04ed</code></a> Merge pull request <a href="https://redirect.github.com/causal-agent/scraper/issues/221">#221</a> from rust-scraper/sorted-vec-instead-of-hash-table</li>
<li><a href="ee66ee8d23"><code>ee66ee8</code></a> Drop hash table for per-element attributes for more compact sorted vector.</li>
<li><a href="8d3e74bf36"><code>8d3e74b</code></a> Merge pull request <a href="https://redirect.github.com/causal-agent/scraper/issues/220">#220</a> from rust-scraper/make-clippy-happy</li>
<li><a href="47cc9de953"><code>47cc9de</code></a> Make current nightly version of Clippy happy.</li>
<li>See full diff in <a href="https://github.com/causal-agent/scraper/compare/v0.21.0...v0.22.0">compare view</a></li>
</ul>
</details>


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

This PR should close

1. #10327

1. #13667

1. #13810

1. #14129

# Description

For `#` to start a comment, it must either be the first character of the token or be preceded by ` ` (a space).

So now you can do this:

```
~/Projects/nushell> 1..10 | each {echo test#testing }
╭───┬──────────────╮
│ 0 │ test#testing │
│ 1 │ test#testing │
│ 2 │ test#testing │
│ 3 │ test#testing │
│ 4 │ test#testing │
│ 5 │ test#testing │
│ 6 │ test#testing │
│ 7 │ test#testing │
│ 8 │ test#testing │
│ 9 │ test#testing │
╰───┴──────────────╯
```

# User-Facing Changes

This is a breaking change for anyone who expected comments to start in the middle of a string without a preceding ` ` (space).

# Tests + Formatting

Did all:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to check that you're using the standard code style

- `cargo test --workspace` to check that all tests pass (on Windows make sure to [enable developer mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- `cargo run -- -c "use toolkit.nu; toolkit test stdlib"` to run the tests for the standard library

# After Submitting

I can't see anything that needs updating in [the documentation](https://github.com/nushell/nushell.github.io), but please point me in the right direction if there is anything.

---------

Co-authored-by: Wind <WindSoilder@outlook.com>

# Description

Closes: #14387

~To make it happen, I just needed to add an `-l` flag to `du` and pass it to `DirBuilder`, `DirInfo`, and `FileInfo`, then tweak the `impl From<DirInfo> for Value` and `impl From<FileInfo> for Value` impls.~

---

Edit: this PR is going to:

1. Exclude the directories and files columns by default.

2. Add a `-l/--long` flag to output the directories and files columns.

3. Output files as well when running `du`. Previously it didn't output the sizes of files.

To make this happen, I just needed to add a `-r` flag to `du` and pass it to `DirBuilder`, `DirInfo`, and `FileInfo`, then tweak the `impl From<DirInfo> for Value` and `impl From<FileInfo> for Value` impls.

I also renamed some variables.

# User-Facing Changes

`du` no longer outputs the `directories` and `files` columns by default; adding the `-r` flag shows the `directories` column, and the `-f` flag shows the `files` column.

```nushell
> du nushell
╭───┬────────────────────────────────────┬──────────┬──────────╮
│ # │ path │ apparent │ physical │
├───┼────────────────────────────────────┼──────────┼──────────┤
│ 0 │ /home/windsoilder/projects/nushell │ 34.6 GiB │ 34.7 GiB │
├───┼────────────────────────────────────┼──────────┼──────────┤
│ # │ path │ apparent │ physical │
╰───┴────────────────────────────────────┴──────────┴──────────╯
> du nushell --recursive --files # It outputs two more columns, `directories` and `files`, but the output is too long to paste here.
```

# Tests + Formatting

Added 1 test

# After Submitting

NaN

# Description

Before this PR, `help commands` used the name from a command's declaration rather than the name in the scope. This is problematic when trying to view the help page for the `main` command of a module. For example, `std bench`:

```nushell
use std/bench
help bench
# => Error: nu::parser::not_found
# =>
# =>   × Not found.
# =>    ╭─[entry #10:1:6]
# =>  1 │ help bench
# =>    ·      ──┬──
# =>    ·        ╰── did not find anything under this name
# =>    ╰────
```

This can also cause confusion when importing specific commands from modules. Furthermore, if there are multiple commands with the same name from different modules, the help text for _both_ will appear when querying their help text (this is especially problematic for `main` commands, see #14033):

```nushell
use std/iter
help iter find
# => Error: nu::parser::not_found
# =>
# =>   × Not found.
# =>    ╭─[entry #3:1:6]
# =>  1 │ help iter find
# =>    ·      ────┬────
# =>    ·          ╰── did not find anything under this name
# =>    ╰────
help find
# => Searches terms in the input.
# =>
# => Search terms: filter, regex, search, condition
# =>
# => Usage:
# =>   > find {flags} ...(rest)
# [...]
# => Returns the first element of the list that matches the
# => closure predicate, `null` otherwise
# [...]
# (full text omitted for brevity)
```

This PR changes `help commands` to use the name as it is in scope, so prefixing any command in scope with `help` will show the correct help text.

```nushell
use std/bench
help bench
# [help text for std bench]

use std/iter
help iter find
# [help text for std iter find]

use std
help std bench
# [help text for std bench]
help std iter find
# [help text for std iter find]
```
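To make the difference concrete, here is a small Rust sketch contrasting lookup by declared name with lookup by in-scope name. The `Decl` struct, the scope map and both function names are hypothetical simplifications, not Nushell's engine-state API; the scope entries in the usage below mirror the `std bench` and `std iter find` examples above.

```rust
use std::collections::HashMap;

/// Hypothetical, simplified declaration: the name written in the source
/// file (e.g. `main`, `find`) plus its help text.
struct Decl {
    declared_name: &'static str,
    help: &'static str,
}

/// Old approach (sketch): match on the declared name. Several modules can
/// declare a command with the same name, so this can return multiple hits,
/// and a module's `main` is never found under the name the user typed.
fn lookup_by_declared_name<'a>(decls: &'a [Decl], name: &str) -> Vec<&'a str> {
    decls
        .iter()
        .filter(|d| d.declared_name == name)
        .map(|d| d.help)
        .collect()
}

/// New approach (sketch): resolve the name exactly as it is visible in
/// scope, which maps to a single declaration.
fn lookup_by_scope_name<'a>(
    scope: &HashMap<&str, usize>,
    decls: &'a [Decl],
    name: &str,
) -> Option<&'a str> {
    scope.get(name).map(|&id| decls[id].help)
}
```

With `bench` mapped to a declaration whose source name is `main`, only the scope-based lookup finds it, and `iter find` resolves to exactly one entry instead of colliding with the built-in `find`.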

Additionally, the IR code generation for commands called with the `--help` flag has been updated to reflect this change.

This does have one side effect: when a module has a `main` command defined, running `help <name>` (which checks `help aliases`, then `help commands`, then `help modules`) will show the help text for the `main` command rather than the module. The help text for the module is still accessible with `help modules <name>`.

Fixes #10499, #10311, #11609, #13470, #14033, and #14402.

Partially fixes #10707.

Does **not** fix #11447.

# User-Facing Changes

* Help text for commands can be obtained by running `help <command name>`, where the command name is the same thing you would type in order to execute the command. Previously, it was the name of the function as written in the source file.

  * For example, for the following module `spam` with command `meow`:

    ```nushell
    module spam {
        # help text
        export def meow [] {}
    }
    ```

  * Before this PR:

    * Regardless of how `meow` is `use`d, the help text is viewable by running `help meow`.

  * After this PR:

    * When imported with `use spam`: The `meow` command is executed by running `spam meow` and the `help` text is viewable by running `help spam meow`.

    * When imported with `use spam meow`: The `meow` command is executed by running `meow` and the `help` text is viewable by running `help meow`.

* When a module has a `main` command defined, `help <module name>` will return help for the main command, rather than the module. To access the help for the module, use `help modules <module name>`.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

N/A

# Description

Fixes #14401, where expressions passed to `timeit` would execute twice.

This PR removes expression support from `timeit`, as this behavior is almost exclusive to `timeit` and can hinder migration to the IR evaluator in the future. Additionally, `timeit` used to be able to take a `block` as an argument. Blocks should probably only be allowed for parser keywords, so this PR changes `timeit` to take only closures as an argument. This also fixes an issue where environment updates inside the `timeit` block would affect the parent scope and all commands later in the pipeline. Before this PR:

```nu
> timeit { $env.FOO = 'bar' }; print $env.FOO
bar
```

# User-Facing Changes

`timeit` now only takes a closure as the first argument.

# After Submitting

Update examples in the book/docs if necessary.


# Description


Noticed this TODO, so I did as it said.

# User-Facing Changes


N/A (the functionality was already removed)

# Tests + Formatting


N/A

# After Submitting


N/A

---------

Co-authored-by: Darren Schroeder <343840+fdncred@users.noreply.github.com>

# Description

A slower, gentler alternative to #14531, in that we're just moving one setting *out* of `default_env.nu` in this PR ;-).

All this does is transition from using `$env.NU_LIB_DIRS` in the startup config to `const $NU_LIB_DIRS`. It also updates `sample_env.nu` to reflect the changes.

Details:

Before: `$env.NU_LIB_DIRS` was unnecessarily set both in `main()` and in `default_env.nu`.

After: `$env.NU_LIB_DIRS` is only set in `main()`.

Before: `$env.NU_LIB_DIRS` was set to `config-dir/scripts` and `data-dir/completions`.

After: `$env.NU_LIB_DIRS` is set to an empty list, and `const NU_LIB_DIRS` is set to the directories above.

Before: Using `--include-path (-I)` would set `$env.NU_LIB_DIRS`.

After: Using `--include-path (-I)` sets the constant `$NU_LIB_DIRS`.

# User-Facing Changes

There shouldn't be any breaking changes here. `$env.NU_LIB_DIRS` still works for most cases. There are a few areas we need to clean up to make sure that the const is usable (`nu-check`, et al.), but they will still work in the meantime with the older `$env` version.

# Tests + Formatting

* Changed the Type-check on the `$env` version.

* Added a type check for the const version.

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

Doc updates

# Description

#14249 called `convert_env_values()` several times to force more updates to `ENV_CONVERSIONS`. This allows the user to treat variables as structured data inside `config.nu` (and others).

Unfortunately, `convert_env_values()` did not originally anticipate being called more than once, so it would attempt to re-convert values that had already been converted. This usually led to an error in the conversion closure.

With this PR, values are only converted with `from_string` if they are still strings; otherwise they are skipped and their existing value is used.
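The guard described above can be sketched in Rust as follows. `Value` here is a hypothetical two-variant stand-in for Nushell's value type, and `convert_env_value` is an illustrative name; the point is only that a value that is no longer a string is passed through unchanged, so running the conversion pass twice is harmless.

```rust
/// Hypothetical environment value: either the raw string read from the
/// process environment or an already-converted structured value.
#[derive(Debug, Clone, PartialEq)]
enum Value {
    String(String),
    List(Vec<String>),
}

/// Applies a `from_string` conversion only if the value is still a string,
/// so calling this repeatedly on the same variable is harmless.
fn convert_env_value(
    value: Value,
    from_string: impl Fn(&str) -> Result<Value, String>,
) -> Result<Value, String> {
    match value {
        Value::String(raw) => from_string(raw.as_str()),
        // Already converted on an earlier pass: keep the existing value.
        already_converted => Ok(already_converted),
    }
}
```

A `from_string` closure that splits on `:` turns `"a:b"` into a list on the first pass; a second pass hands back the same list instead of feeding a list to a closure that expects a string.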

# User-Facing Changes

No user-facing change when compared to 0.100, since closures written for 0.100's `ENV_CONVERSIONS` now work again without errors.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`


# After Submitting

Will remove the "workaround" from the Config doc preview.


# Description


In this PR, the functions behind the `table` command no longer use the `cwd` parameter when targeting `not(feature = "os")`. Without an OS, and therefore without a filesystem, there is no real concept of a current working directory. This allows the `table` command to be used in a WASM context.
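The usual Rust pattern for this kind of change is to gate code on the Cargo feature, so the parameter is still accepted but ignored when `os` is disabled. The sketch below is hypothetical (`resolve_for_display` is not a function from the `table` command); it only shows the `#[cfg(feature = "os")]` / `#[cfg(not(feature = "os"))]` split, with the unused parameter prefixed by `_` to keep the non-OS build warning-free.

```rust
use std::path::Path;

/// With the `os` feature, resolve `name` against the current working
/// directory.
#[cfg(feature = "os")]
fn resolve_for_display(name: &str, cwd: &Path) -> String {
    cwd.join(name).display().to_string()
}

/// Without an OS there is no filesystem and no meaningful cwd, so the
/// parameter is accepted but ignored.
#[cfg(not(feature = "os"))]
fn resolve_for_display(name: &str, _cwd: &Path) -> String {
    name.to_string()
}
```

Keeping the same signature in both configurations means callers don't need their own `cfg` attributes.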

# User-Facing Changes


None.

# Tests + Formatting


My tests timed out on the HTTP tests, but I can't think of why this change would cause a test failure. Let's see what CI finds.

# After Submitting


# Description

This PR updates Nushell to the latest reedline commit, which fixes some rendering issues on window resize.

# User-Facing Changes


# Tests + Formatting


# After Submitting


- This PR should fix/close:
  - #11266
  - #12893
  - #13736
  - #13748
  - #14170

- It doesn't fix #13736 though, unfortunately. The issue there is at a different level to this fix (I think probably in the lexing somewhere, which I haven't touched).

# The Problem

The linked issues have many examples of the problem and the related confusion it causes, but I'll give some more examples here for illustration. It boils down to the following:

This doesn't type check (good):

```nu
def foo []: string -> int { false }
```

This does (bad):

```nu
def foo [] : string -> int { false }
```

This is because the parser completely ignores all the characters between the parameter list and the body. This also compiles in 0.100.0:

```nu
def blue [] Da ba Dee da Ba da { false }
```

It also means that commands with a perfectly valid type, but with an extra space before `:`, lose that type information and end up as `any -> any`, e.g.

```nu
def foo [] : int -> int {$in + 3}
```

```bash
$ foo --help
Input/output types:
  ╭───┬───────┬────────╮
  │ # │ input │ output │
  ├───┼───────┼────────┤
  │ 0 │ any   │ any    │
  ╰───┴───────┴────────╯
```

# The Fix

Special thanks to @texastoland, whose draft PR (#12358) I referenced heavily while making this fix.

That PR seeks to fix the invalid parsing by disallowing whitespace between `[]` and `:` in declarations, e.g. `def foo [] : int -> any {}`.

This PR instead allows the whitespace while properly parsing the type signature. I think this is the better choice for a few reasons:

- The parsing is still straightforward, and the information is all there anyway.

- It's more consistent with type annotations in other places, e.g. `do {|nums : list<int>| $nums | describe} [ 1 2 3 ]` from the [Type Signatures doc page](https://www.nushell.sh/lang-guide/chapters/types/type_signatures.html).

- It's more consistent with the new nu parser, which allows `let x : bool = false` (current nu doesn't, but this PR doesn't change that).

- It will be less disruptive and should only break code where the types are actually wrong. If your types were correct but you had a space before the `:`, those declarations will still compile and now carry more type information, instead of throwing an error in all cases and requiring spaces to be deleted.

- It's the more intuitive syntax for most functional programmers like myself (Haskell/Lean/Coq/Agda and many more either allow or require whitespace for type annotations).
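The rule this PR adopts can be sketched as a tiny classifier over the text that follows a `def` parameter list. This is a hypothetical, string-based simplification (Nushell's parser works on tokens and spans, and type-checks the signature separately); it only shows that whitespace before `:` is accepted and that anything other than `:` or `{` becomes an error rather than being silently ignored.

```rust
/// What follows a command's parameter list in a simplified `def` header.
#[derive(Debug, PartialEq)]
enum AfterParams<'a> {
    /// `: <input> -> <output>` before the body; holds the signature text.
    Signature(&'a str),
    /// The body `{ ... }` follows directly.
    NoSignature,
    /// Anything else is reported instead of being ignored.
    Error(&'a str),
}

/// `rest` is the source text after the closing `]` of the parameter list.
fn classify_after_params(rest: &str) -> AfterParams<'_> {
    // Whitespace before `:` is allowed, so `def foo [] : int -> int {..}`
    // is treated the same as `def foo []: int -> int {..}`.
    let rest = rest.trim_start();
    if let Some(sig) = rest.strip_prefix(':') {
        // Simplification: the signature runs up to the body's opening brace.
        match sig.find('{') {
            Some(end) => AfterParams::Signature(sig[..end].trim()),
            None => AfterParams::Error(rest),
        }
    } else if rest.starts_with('{') {
        AfterParams::NoSignature
    } else {
        AfterParams::Error(rest)
    }
}
```

Under this sketch, `def foo [] : int -> int {$in + 3}` keeps its `int -> int` signature, while `def blue [] Da ba Dee da Ba da { false }` and `def foo [] -> string {}` are rejected.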

I don't use Rust a lot, so I tried to keep most things the same, and the rest I wrote as if it were Haskell (if you squint a bit). Code review and suggestions are very welcome. I added all the tests I could think of, and `toolkit check pr` gives it the all-clear.

# User-Facing Changes

This PR meets part of the goal of #13849, but it doesn't do anything about parsing signatures twice and doesn't do much to improve error messages; it just enforces the existing errors and error messages.

This will no doubt be a breaking change, mostly because the code is already broken and users don't realise it yet (one of my personal scripts stopped compiling after this fix because I thought `def foo [] -> string {}` was valid syntax). It shouldn't break any type-correct code, though.

# Description

Fixes [this](https://github.com/nushell/nushell/pull/14303#issuecomment-2525100480), where LSP and IDE integration would produce the following error:

---

```sh

nu --ide-check 100 "/path/to/env.nu"

```

with

```nu

const const_env = path self

```

would lead to

```
Error: nu::shell::file_not_found

  × File not found
   ╭─[/path/to/env.nu:1:19]
 1 │ const const_env = path self
   ·                   ────┬────
   ·                       ╰── Couldn't find current file
   ╰────
```

# Tests + Formatting

- 🟢 `cargo fmt --all`

- 🟢 `cargo clippy --workspace`


# Description


In this PR I exposed the `ToHtml` struct from `nu-cmd-extra`. I know this command isn't in the best state and should be changed at some point, but exposing the struct makes transforming data to HTML much simpler for external tools, since `PipelineData` can easily be passed to the `ToHtml::run` method.

# User-Facing Changes


None.

# Tests + Formatting


I ran `fmt` and `check` but not `test`; it shouldn't break any tests regardless.

# After Submitting


This would make my life easier for the demo page and for my Jupyter kernel.

Alternative solution to:

- #12195

The other approach:

- #14305

# Description

Adds ~`path const`~ `path self`, a parse-time-only command for getting the absolute path of the source file containing it, or of any file relative to the source file.

- Useful for any script or module that makes use of non-nuscript files.

- Removes the need for `$env.CURRENT_FILE` and `$env.FILE_PWD`.

- Can be used in modules, sourced files or scripts.
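To make the resolution rule concrete, here is a small Rust sketch (Rust because Nushell itself is written in it). It assumes two things. First, `path self` with no argument returns the file's own path. Second, a relative argument is joined onto the file's parent directory and `.`/`..` are normalized lexically, without touching the filesystem or following symlinks. `resolve_path_self` is a hypothetical name, not the implementation in this PR.

```rust
use std::path::{Component, Path, PathBuf};

/// Hypothetical model of `path self [rel]` (not Nushell's actual code).
/// - no argument: return the path of the file containing the call
/// - with `rel`: join it onto the file's directory, then resolve `.` and
///   `..` lexically (no filesystem access, symlinks are not followed)
fn resolve_path_self(file: &Path, rel: Option<&str>) -> PathBuf {
    let joined = match rel {
        None => return file.to_path_buf(),
        Some(r) => file.parent().unwrap_or_else(|| Path::new("/")).join(r),
    };
    let mut out = PathBuf::new();
    for comp in joined.components() {
        match comp {
            // `.` adds nothing
            Component::CurDir => {}
            // `..` drops the last component (stays put at the root)
            Component::ParentDir => {
                out.pop();
            }
            // root, prefix and normal names are kept as-is
            other => out.push(other.as_os_str()),
        }
    }
    out
}

fn main() {
    let file = Path::new("/home/user/.config/nushell/scripts/foo.nu");
    for rel in [None, Some("."), Some("sibling"), Some(".."), Some("../cousin")] {
        println!("{:?} -> {}", rel, resolve_path_self(file, rel).display());
    }
}
```

With `/home/user/.config/nushell/scripts/foo.nu` as the file, this sketch yields the same five paths as the `foo` table in the examples.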

# Examples

```nushell
# ~/.config/nushell/scripts/foo.nu

const paths = {
    self: (path self),
    dir: (path self .),
    sibling: (path self sibling),
    parent_dir: (path self ..),
    cousin: (path self ../cousin),
}

export def main [] {
    $paths
}
```

```nushell
> use foo.nu
> foo
╭────────────┬────────────────────────────────────────────╮
│ self       │ /home/user/.config/nushell/scripts/foo.nu  │
│ dir        │ /home/user/.config/nushell/scripts         │
│ sibling    │ /home/user/.config/nushell/scripts/sibling │
│ parent_dir │ /home/user/.config/nushell                 │
│ cousin     │ /home/user/.config/nushell/cousin          │
╰────────────┴────────────────────────────────────────────╯
```

Trying to run it in a non-const context:

```nushell
> path self
Error:   × this command can only run during parse-time
   ╭─[entry #1:1:1]
 1 │ path self
   · ─────┬────
   ·      ╰── can't run after parse-time
   ╰────
  help: try assigning this command's output to a const variable
```

Trying to run it in the REPL, i.e. not in a file:

```nushell
> const foo = path self
Error:   × Error: nu::shell::file_not_found
  │
  │   × File not found
  │    ╭─[entry #3:1:13]
  │  1 │ const foo = path self
  │    ·             ─────┬────
  │    ·                  ╰── Couldn't find current file
  │    ╰────
  │
   ╭─[entry #3:1:13]
 1 │ const foo = path self
   ·             ─────┬────
   ·                  ╰── Encountered error during parse-time evaluation
   ╰────
```

# Comparison with #14305

## Pros

- Self-contained implementation; does not require changes to the parser.

- More concise usage, especially with parent directories.

---------

Co-authored-by: Darren Schroeder <343840+fdncred@users.noreply.github.com>

- fixes flaky tests from solving #14241

# Description

This is a preliminary fix for the flaky tests; it also shortens the `--max-time` used in the tests.

# User-Facing Changes


---------

Signed-off-by: Alex Kattathra Johnson <alex.kattathra.johnson@gmail.com>

The `--name` flag of `polars with-column` only works when used with an eager dataframe. It will not work with lazy dataframes, and it will not work when used with expressions (which force a conversion to a lazyframe). This pull request adds better documentation to the flags and error messages for the cases where it will not work.

# Description

This PR adds a `file` column to the `scope modules` output table.

# User-Facing Changes


# Tests + Formatting


# After Submitting


- should close https://github.com/nushell/nushell/issues/14517

# Description

This will change `to ndnuon` so that newlines are encoded as a literal `\n`, which `from ndnuon` is already able to handle.
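A minimal Rust sketch of the general idea follows; it is not the code in this PR. NDNUON stores one value per line, so a newline inside a string has to be written as the two-character escape `\n` for each record to stay on a single line. The sketch also escapes `\` as `\\` so that decoding is unambiguous. That detail and the helper names `escape_line`/`unescape_line` are assumptions made for illustration.

```rust
/// Hypothetical encoder: make a string safe to store on a single line.
fn escape_line(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '\\' => out.push_str("\\\\"), // backslash -> `\\`
            '\n' => out.push_str("\\n"),  // newline   -> `\n`
            other => out.push(other),
        }
    }
    out
}

/// Hypothetical decoder: reverse `escape_line`.
fn unescape_line(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    let mut chars = s.chars();
    while let Some(c) = chars.next() {
        if c != '\\' {
            out.push(c);
            continue;
        }
        match chars.next() {
            Some('n') => out.push('\n'),
            Some('\\') => out.push('\\'),
            // unknown escape or trailing backslash: keep it verbatim
            Some(other) => {
                out.push('\\');
                out.push(other);
            }
            None => out.push('\\'),
        }
    }
    out
}

fn main() {
    let values = ["single line", "first\nsecond", r"C:\temp\new"];
    // One escaped value per output line.
    let encoded: Vec<String> = values.iter().map(|v| escape_line(v)).collect();
    let document = encoded.join("\n");
    assert_eq!(document.lines().count(), values.len());
    // Decoding line by line recovers the original strings.
    for (line, original) in document.lines().zip(values) {
        assert_eq!(unescape_line(line), original);
    }
    println!("{document}");
}
```

Escaping the backslash matters for round-tripping. Without it, a Windows-style path such as `C:\temp\new` would decode with a real newline in place of `\n`.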

# User-Facing Changes

Users should be able to encode multiline strings in NDNUON.

# Tests + Formatting

New tests have been added:

- they don't pass on the first commit

- they do pass with the fix

# After Submitting


# Description