This PR fixes the `path type` command so that it resolves relative paths using PWD from the engine state.

As a bonus, it also fixes the issue of `path type` returning an empty string instead of an error when it fails.
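A minimal Rust sketch of the resolution rule described above. It assumes the engine-state PWD is passed in as a plain `&Path`; the function name `path_type` and its string return values are illustrative, not Nushell's internals. Relative arguments are joined onto the PWD, and a failed lookup surfaces as an `Err` rather than an empty string.

```rust
use std::io;
use std::path::Path;

/// Hypothetical sketch: resolve `arg` against `cwd` (standing in for the
/// engine state's PWD) and report what kind of filesystem entry it is.
pub fn path_type(cwd: &Path, arg: &str) -> io::Result<&'static str> {
    // `Path::join` returns `arg` unchanged when it is already absolute,
    // so only relative paths are resolved against `cwd`.
    let full = cwd.join(arg);
    // `symlink_metadata` does not follow a final symlink, so links are
    // reported as links. Any failure is propagated as an error.
    let file_type = std::fs::symlink_metadata(&full)?.file_type();
    Ok(if file_type.is_symlink() {
        "symlink"
    } else if file_type.is_dir() {
        "dir"
    } else if file_type.is_file() {
        "file"
    } else {
        "unknown"
    })
}
```

Returning `io::Result` lets the caller turn the failure into a proper shell error instead of silently producing an empty value.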

# Description

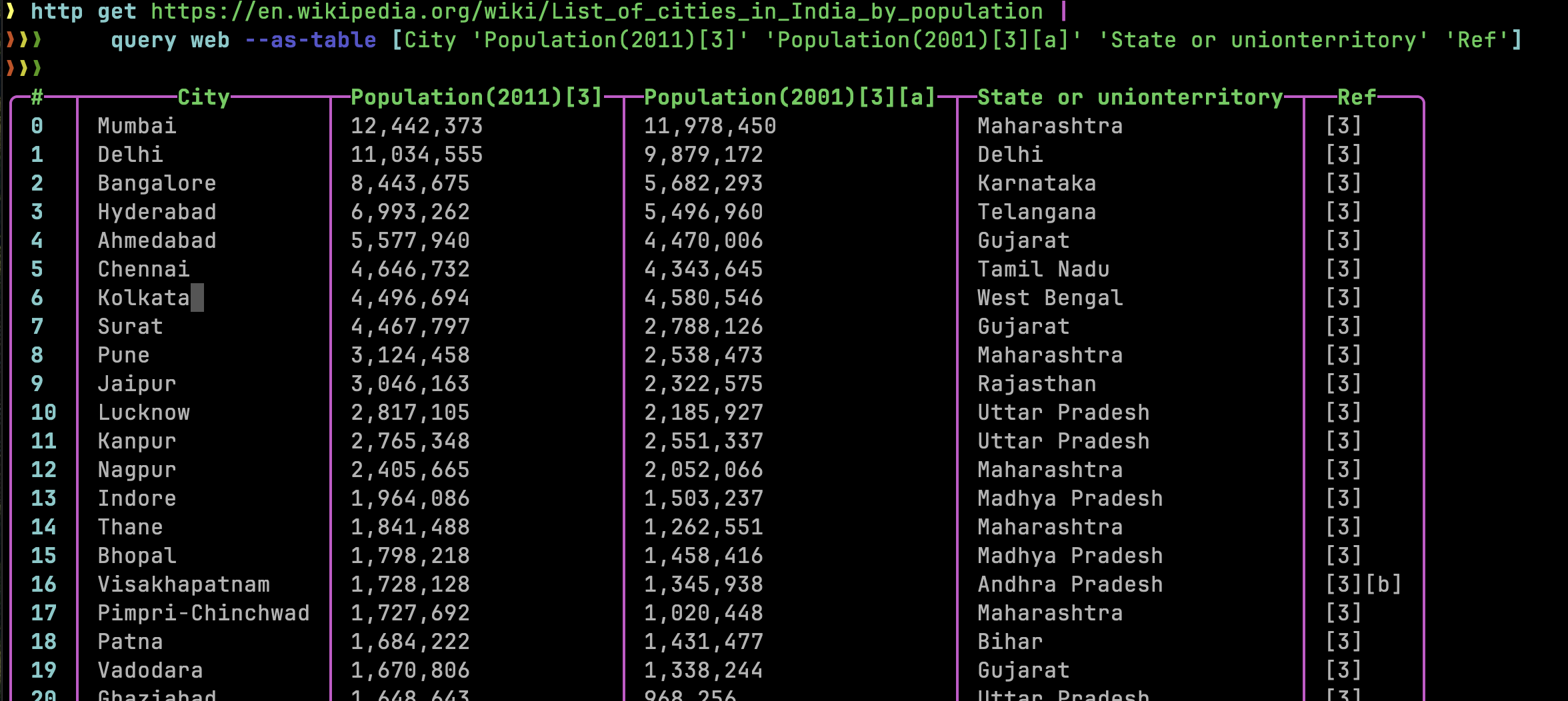

@maxim-uvarov did a ton of research and work with the dply-rs author and Ritchie from Polars and found out that the allocator matters on macOS, and it seems to be what was messing up the performance of the polars plugin.

Ritchie suggested using jemalloc, but I switched it to mimalloc to match Nushell, and it seems to run better.
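For context on what switching the allocator means in a Rust binary: the process-wide allocator is chosen with the `#[global_allocator]` attribute. The sketch below only wraps the standard `System` allocator and counts allocations to show that hook; it is not mimalloc. The mimalloc crate's documented usage points the same attribute at its `MiMalloc` type instead, and it is an assumption here that the plugin is wired up that way.

```rust
use std::alloc::{GlobalAlloc, Layout, System};
use std::sync::atomic::{AtomicUsize, Ordering};

/// Counts heap allocations, then defers to the system allocator.
pub struct CountingAlloc;

/// Number of allocations made through `CountingAlloc` so far.
pub static ALLOCATIONS: AtomicUsize = AtomicUsize::new(0);

unsafe impl GlobalAlloc for CountingAlloc {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8 {
        ALLOCATIONS.fetch_add(1, Ordering::Relaxed);
        // SAFETY: the request is forwarded unchanged to the system allocator.
        unsafe { System.alloc(layout) }
    }

    unsafe fn dealloc(&self, ptr: *mut u8, layout: Layout) {
        // SAFETY: `ptr` was allocated by `System` with this same layout.
        unsafe { System.dealloc(ptr, layout) }
    }
}

// Registers the allocator for the whole binary. Swapping in a third-party
// allocator only changes the type of this static.
#[global_allocator]
static GLOBAL: CountingAlloc = CountingAlloc;
```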

## Before (default allocator)

Note: using 1..10 instead of 1..100 since it takes so long. Also notice how high the `max` timings are compared to mimalloc below.

```nushell
❯ 1..10 | each {timeit {polars open Data7602DescendingYearOrder.csv | polars group-by year | polars agg (polars col geo_count | polars sum) | polars collect | null}} | | {mean: ($in | math avg), min: ($in | math min), max: ($in | math max), stddev: ($in | into int | into float | math stddev | into int | $'($in)ns' | into duration)}
╭────────┬─────────────────────────╮
│ mean │ 4sec 999ms 605µs 995ns │
│ min │ 983ms 627µs 42ns │
│ max │ 13sec 398ms 135µs 791ns │
│ stddev │ 3sec 476ms 479µs 939ns │
╰────────┴─────────────────────────╯
❯ use std bench
❯ bench { polars open Data7602DescendingYearOrder.csv | polars group-by year | polars agg (polars col geo_count | polars sum) | polars collect | null } -n 10
╭───────┬────────────────────────╮
│ mean │ 6sec 220ms 783µs 983ns │
│ min │ 1sec 184ms 997µs 708ns │
│ max │ 18sec 882ms 81µs 708ns │
│ std │ 5sec 350ms 375µs 697ns │
│ times │ [list 10 items] │
╰───────┴────────────────────────╯
```

## After (using mimalloc)

```nushell
❯ 1..100 | each {timeit {polars open Data7602DescendingYearOrder.csv | polars group-by year | polars agg (polars col geo_count | polars sum) | polars collect | null}} | | {mean: ($in | math avg), min: ($in | math min), max: ($in | math max), stddev: ($in | into int | into float | math stddev | into int | $'($in)ns' | into duration)}
╭────────┬───────────────────╮
│ mean │ 103ms 728µs 902ns │
│ min │ 97ms 107µs 42ns │
│ max │ 149ms 430µs 84ns │
│ stddev │ 5ms 690µs 664ns │
╰────────┴───────────────────╯
❯ use std bench
❯ bench { polars open Data7602DescendingYearOrder.csv | polars group-by year | polars agg (polars col geo_count | polars sum) | polars collect | null } -n 100
╭───────┬───────────────────╮
│ mean │ 103ms 620µs 195ns │
│ min │ 97ms 541µs 166ns │
│ max │ 130ms 262µs 166ns │
│ std │ 4ms 948µs 654ns │
│ times │ [list 100 items] │
╰───────┴───────────────────╯
```

## After (using jemalloc - just for comparison)

```nushell
❯ 1..100 | each {timeit {polars open Data7602DescendingYearOrder.csv | polars group-by year | polars agg (polars col geo_count | polars sum) | polars collect | null}} | | {mean: ($in | math avg), min: ($in | math min), max: ($in | math max), stddev: ($in | into int | into float | math stddev | into int | $'($in)ns' | into duration)}
╭────────┬───────────────────╮
│ mean │ 113ms 939µs 777ns │
│ min │ 108ms 337µs 333ns │
│ max │ 166ms 467µs 458ns │
│ stddev │ 6ms 175µs 618ns │
╰────────┴───────────────────╯
❯ use std bench
❯ bench { polars open Data7602DescendingYearOrder.csv | polars group-by year | polars agg (polars col geo_count | polars sum) | polars collect | null } -n 100
╭───────┬───────────────────╮
│ mean │ 114ms 363µs 530ns │
│ min │ 108ms 804µs 833ns │
│ max │ 143ms 521µs 459ns │
│ std │ 5ms 88µs 56ns │
│ times │ [list 100 items] │
╰───────┴───────────────────╯
```

## After (using parquet + mimalloc)

```nushell
❯ 1..100 | each {timeit {polars open data.parquet | polars group-by year | polars agg (polars col geo_count | polars sum) | polars collect | null}} | | {mean: ($in | math avg), min: ($in | math min), max: ($in | math max), stddev: ($in | into int | into float | math stddev | into int | $'($in)ns' | into duration)}
╭────────┬──────────────────╮
│ mean │ 34ms 255µs 492ns │
│ min │ 31ms 787µs 250ns │
│ max │ 76ms 408µs 416ns │
│ stddev │ 4ms 472µs 916ns │
╰────────┴──────────────────╯
❯ use std bench
❯ bench { polars open data.parquet | polars group-by year | polars agg (polars col geo_count | polars sum) | polars collect | null } -n 100
╭───────┬──────────────────╮
│ mean │ 34ms 897µs 562ns │
│ min │ 31ms 518µs 542ns │
│ max │ 65ms 943µs 625ns │
│ std │ 3ms 450µs 741ns │
│ times │ [list 100 items] │
╰───────┴──────────────────╯
```


# Description

Instead of returning an error, this PR changes `expand_glob` in `run_external.rs` to return the original string argument if glob creation fails. This makes it so that, e.g.,

```nushell
^echo `[`
^echo `***`
```

no longer fail with a shell error. (This follows from #12921.)
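The fallback can be sketched in Rust as follows. The `expand` parameter is a hypothetical stand-in for the actual glob construction and matching in `run_external.rs`; only the "on error, keep the literal argument" behavior is taken from the description above.

```rust
/// Try to expand `arg` as a glob; if the pattern itself cannot be built,
/// pass the argument through unchanged instead of raising an error.
pub fn expand_or_literal<E>(
    arg: &str,
    expand: impl Fn(&str) -> Result<Vec<String>, E>,
) -> Vec<String> {
    match expand(arg) {
        Ok(paths) => paths,
        // Pattern construction failed: treat the argument as a plain string.
        Err(_) => vec![arg.to_string()],
    }
}
```

Keeping the expander as a parameter makes the fallback testable without touching the filesystem.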

# Description

Currently, the pipeline `open --raw file | take 100` doesn't work, since the type of the byte stream is `Unknown`, but `take` expects `Binary` streams. This PR changes commands that expect `ByteStreamType::Binary` to also work with `ByteStreamType::Unknown`. This was done by adding two new methods to `ByteStreamType`: `is_binary_coercible` and `is_string_coercible`. These return true if the type is `Unknown` or matches the type in the method name.
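A minimal sketch of the two methods, assuming a three-variant `ByteStreamType` enum as named above (the real type may have more derives or take `&self`):

```rust
/// Simplified model of the byte stream type tag.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ByteStreamType {
    Binary,
    String,
    Unknown,
}

impl ByteStreamType {
    /// True if the stream is known binary, or of unknown type.
    pub fn is_binary_coercible(self) -> bool {
        matches!(self, ByteStreamType::Binary | ByteStreamType::Unknown)
    }

    /// True if the stream is known string, or of unknown type.
    pub fn is_string_coercible(self) -> bool {
        matches!(self, ByteStreamType::String | ByteStreamType::Unknown)
    }
}
```

A command such as `take` would then presumably check `ty.is_binary_coercible()` rather than comparing against `ByteStreamType::Binary` directly.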

# Description

Makes the `from json --objects` command produce a stream, reading lazily from an input stream to produce its output.

Also added a helper, `PipelineData::get_type()`, to make it easier to construct a wrong-type error message when matching on `PipelineData`. I expect checking `PipelineData` for either a string value or an `Unknown`- or `String`-typed `ByteStream` to be very, very common. I would have liked a helper that just returns a readable stream from either, but that would require either a bespoke enum or a `Box<dyn BufRead>`, which feels like it wouldn't be great for performance. So the approach taken here is probably better: a function that accepts an `impl BufRead`, called from each arm of the match.
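A sketch of the `impl BufRead` approach, assuming newline-delimited objects. The function below lazily yields one raw object per non-blank line from any buffered reader, so an in-memory string and a byte stream can share it. JSON parsing itself is left out to keep the example dependency-free, and the name `json_object_lines` is hypothetical.

```rust
use std::io::{self, BufRead};

/// Lazily yields one raw JSON object per non-blank line of `reader`.
/// Nothing is read until the iterator is advanced.
pub fn json_object_lines<R: BufRead>(reader: R) -> impl Iterator<Item = io::Result<String>> {
    reader.lines().filter(|line| match line {
        // Skip blank lines between objects.
        Ok(l) => !l.trim().is_empty(),
        // Keep I/O errors so the caller can report them.
        Err(_) => true,
    })
}
```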

# User-Facing Changes

- `from json --objects` no longer collects its input, and can be used for large datasets or streams that produce values over time.

# Tests + Formatting

All passing.

# After Submitting

- [ ] release notes

---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

This reverts commit 68adc4657f.

# Description

Reverts the lazyframe refactor (#12669) for the next release, since there are still a few lingering issues. This temporarily solves #12863 and #12828. After the release, the lazyframes can be added back and cleaned up.

Another very boring PR cleaning up and documenting some of `explore`'s innards. Mostly renaming things that I found confusing or vague when reading through the code, and also adding some comments.

# Description

Fixes: #12941

~~The issue is caused by some columns (is_builtin, is_plugin, is_custom, is_keyword) being removed in #10023~~

Edit: I was wrong.

# Tests + Formatting

Added one test for `std help`

# Description

Following from #12523, this PR removes support for lists of environment variables in the `with-env` command. Only records are supported now.

# After Submitting

Update examples using the list form in the docs and book.

Small change, removing 4 more configuration options from `explore`'s binary viewer:

1. `show_index`

2. `show_data`

3. `show_ascii`

4. `show_split`

These controlled whether the 3 columns in the binary viewer (index, hex data, ASCII) and the pipe separator (`|`) between them are shown. I don't think we need this level of configurability until the `explore` command is more mature, and maybe not even then; we can just show them all.

I think it's very unlikely that anyone is using these configuration points.

Also, the row offset (i.e. how many rows we have scrolled down) was being stored in config/settings, when it's arguably not config but internal state of the binary viewer. I moved it to a more appropriate location and renamed it.
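To make the three columns concrete, here is a hypothetical formatter for a single row of such a viewer, with all columns and both `|` separators always shown. The 8-digit hex offset and `.` for non-printable bytes are assumptions for illustration, not necessarily what `explore` renders.

```rust
/// Formats one binary-viewer row: index, hex data, and ASCII columns.
pub fn format_row(offset: usize, bytes: &[u8]) -> String {
    // Index column: byte offset in hex.
    let index = format!("{offset:08x}");
    // Data column: each byte as two lowercase hex digits.
    let hex: Vec<String> = bytes.iter().map(|b| format!("{b:02x}")).collect();
    // ASCII column: printable bytes as-is, everything else as '.'.
    let ascii: String = bytes
        .iter()
        .map(|&b| if b.is_ascii_graphic() || b == b' ' { b as char } else { '.' })
        .collect();
    format!("{index} | {} | {ascii}", hex.join(" "))
}
```

For example, `format_row(16, b"Hi\n")` returns `00000010 | 48 69 0a | Hi.`.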

# Description

```nushell
❯ ls
╭───┬───────┬──────┬──────┬──────────╮
│ # │ name │ type │ size │ modified │
├───┼───────┼──────┼──────┼──────────┤
│ 0 │ a.txt │ file │ 0 B │ now │
╰───┴───────┴──────┴──────┴──────────╯
❯ ls a.
NO RECORDS FOUND
```

There was a completion issue in a previous version; I think @amtoine reproduced it before. But currently I can't reproduce it on the latest main. To avoid such a regression, I added some tests for completion.

---------

Co-authored-by: Antoine Stevan <44101798+amtoine@users.noreply.github.com>

# Description

Fixes: https://github.com/nushell/nushell/issues/7761

It's still unclear whether we want to change the range semantics themselves, but it's good to keep range semantics consistent between Nushell commands.

# User-Facing Changes

### Before

```nushell
❯ "abc" | str substring 1..=2
b
```

### After

```nushell
❯ "abc" | str substring 1..=2
bc
```
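A small sketch of the inclusive semantics shown in the "After" example, assuming indices count `char`s (the real command's indexing unit may differ): both ends of the range are kept.

```rust
use std::ops::RangeInclusive;

/// Returns the characters of `s` at positions `start..=end`, inclusive.
pub fn substring_inclusive(s: &str, range: RangeInclusive<usize>) -> String {
    let (start, end) = range.into_inner();
    if start > end {
        return String::new();
    }
    // `end - start + 1` because the end index is included.
    s.chars().skip(start).take(end - start + 1).collect()
}
```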

# Tests + Formatting

Adjusted tests to fit the new behavior.

As discussed in https://github.com/nushell/nushell/pull/12749, we no longer need to call `std::env::set_current_dir()` to sync `$env.PWD` with the actual working directory. This PR removes the call from `EngineState::merge_env()`.


# Description


1. With the `-l` flag, `debug profile` now collects files and line numbers of profiled pipeline elements.
2. Errors from the profiled closure are now reported instead of being silently ignored.

# User-Facing Changes


New `--lines(-l)` flag for `debug profile`. The command will also fail if the profiled closure fails, so technically it is a breaking change.


---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

# Description

Implements streaming for:

- `from csv`

- `from tsv`

- `to csv`

- `to tsv`

via the new string-typed ByteStream support.

# User-Facing Changes

Commands above. Also:

- `to csv` and `to tsv` now have `--columns <List(String)>`, to provide the exact columns desired in the output. This is required for them to have streaming output, because otherwise collecting the entire list is necessary to determine the output columns. If we introduce `TableStream`, this may become less necessary.
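Why a known column list enables streaming can be sketched in Rust: the header line can be produced before any row arrives, and each row is formatted independently. This is illustrative only; quoting and escaping are omitted, rows are modeled as `HashMap<String, String>`, and missing fields become empty cells.

```rust
use std::collections::HashMap;

/// Lazily produces CSV lines: the header first, then one line per row.
pub fn csv_lines<'a, I>(columns: &'a [&'a str], rows: I) -> impl Iterator<Item = String> + 'a
where
    I: Iterator<Item = HashMap<String, String>> + 'a,
{
    // The header is known up front, without looking at any row.
    let header = std::iter::once(columns.join(","));
    // Each row only needs the fixed column list, never the other rows.
    let body = rows.map(move |row| {
        columns
            .iter()
            .map(|c| row.get(*c).map(String::as_str).unwrap_or(""))
            .collect::<Vec<_>>()
            .join(",")
    });
    header.chain(body)
}
```

Without `columns`, the set of output columns would only be known after every row had been seen, which forces collecting the whole input first.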

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

- [ ] release notes

---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

# Description

I feel like it's a little sad that BSDs get to enjoy almost everything other than the `ps` command, and there are some tests that rely on this command, so I figured it would be fun to patch that and make it work.

The different BSDs have diverged from each other somewhat, but generally have a similar enough API for reading process information via `sysctl()`, with some slightly different arguments.

This supports FreeBSD with the `freebsd` module, and NetBSD and OpenBSD with the `netbsd` module. OpenBSD is a fork of NetBSD, and the interface has some minor differences, but many things are the same.

I had wanted to try to support DragonFlyBSD too, but the Rust version in their latest release is only 1.72.0, which is too old for me to want to try to compile rustc up to 1.77.2... but I will revisit this whenever they update it. Dragonfly is a fork of FreeBSD, so it's likely to be more or less the same; I just don't want to enable it without testing it.

Fixes #6862 (partially; we probably won't be adding `zfs list`)

# User-Facing Changes

`ps` added for FreeBSD, NetBSD, and OpenBSD.

# Tests + Formatting

The CI doesn't run tests for BSDs, so I'm not entirely sure if everything was already passing before. (Frankly, it's unlikely.) But nothing appears to be broken.

# After Submitting

- [ ] release notes?

- [ ] DragonflyBSD, whenever they update Rust to something close enough for me to try it

Bumps [shadow-rs](https://github.com/baoyachi/shadow-rs) from 0.27.1 to 0.28.0.

<details>
<summary>Release notes</summary>
<p><em>Sourced from <a href="https://github.com/baoyachi/shadow-rs/releases">shadow-rs's releases</a>.</em></p>
<blockquote>
<h2>fix cargo clippy</h2>
<p><a href="https://redirect.github.com/baoyachi/shadow-rs/issues/160">#160</a></p>
<p>Thx <a href="https://github.com/qartik"><code>@qartik</code></a></p>
</blockquote>
</details>
<details>
<summary>Commits</summary>
<ul>
<li><a href="ba9f8b0c2b"><code>ba9f8b0</code></a> Update Cargo.toml</li>
<li><a href="d1b724c1e7"><code>d1b724c</code></a> Merge pull request <a href="https://redirect.github.com/baoyachi/shadow-rs/issues/160">#160</a> from qartik/patch-1</li>
<li><a href="505108d5d6"><code>505108d</code></a> Allow missing_docs for deprecated CLAP_VERSION constant</li>
<li>See full diff in <a href="https://github.com/baoyachi/shadow-rs/compare/v0.27.1...v0.28.0">compare view</a></li>
</ul>
</details>


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

# Description

While each of the `help <subcommands>` in `std` had completers, there wasn't one for the main `help` command.

This adds all internal and custom commands (as with `help commands`) as possible completions.

# User-Facing Changes

`help ` + <kbd>Tab</kbd> will now suggest completions for both the `help <subcommands>` as well as all internal and custom commands.

# Tests + Formatting

Note: I can't add tests for the completion functions, since they are module-internal and not visible to test cases, as far as I can see.

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

- **Remove unused `pathdiff` dep in `nu-cli`**
- **Remove unused `serde_json` dep on `nu-protocol`**
  - Unnecessary after moving the plugin file to msgpack (still a dev-dependency)

Some minor changes to `explore`, continuing on my mission to simplify the command in preparation for a larger UX overhaul:

1. Consolidate padding configuration. I don't think we need separate config points for the (optional) index column and regular data columns in the normal pager; they can share padding configuration. Likewise, in the binary viewer all 3 columns (index, data, ASCII) had their left+right padding configured independently.
2. Update `explore` so we use the binary viewer for the new `ByteStream` type. `cat foo.txt | into binary | explore` was not using the binary viewer after the `ByteStream` changes.
3. Tweak the naming of a few helper functions, add a comment.

I've put the changes in separate commits to make them easier to review.

---------

Co-authored-by: Stefan Holderbach <sholderbach@users.noreply.github.com>

# Description

Removes the old `nu-cmd-dataframe` crate in favor of the polars plugin. As such, this PR also removes the `dataframe` feature, related CI, and full releases of nushell.

# Description

This PR adds min and max to the bench command.

```nushell
❯ use std bench
❯ bench { dply -c 'parquet("./data.parquet") | group_by(year) | summarize(count = n(), sum = sum(geo_count)) | show()' | complete | null } --rounds 100 --verbose
100 / 100
╭───────┬───────────────────╮
│ mean │ 71ms 358µs 850ns │
│ min │ 66ms 457µs 583ns │
│ max │ 120ms 338µs 167ns │
│ std │ 6ms 553µs 949ns │
│ times │ [list 100 items] │
╰───────┴───────────────────╯
```
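The mean, min, max, and std rows can be reproduced from a list of timings roughly like this. The sketch uses the population standard deviation (dividing by `n`); whether `std bench` divides by `n` or `n - 1` is not stated here, so treat that as an assumption.

```rust
use std::time::Duration;

/// Summary of a set of benchmark timings.
#[derive(Debug)]
pub struct Stats {
    pub mean: Duration,
    pub min: Duration,
    pub max: Duration,
    pub std_dev: Duration,
}

/// Returns `None` for an empty slice.
pub fn summarize(times: &[Duration]) -> Option<Stats> {
    if times.is_empty() {
        return None;
    }
    // Work in nanoseconds as f64 for the mean and variance.
    let ns: Vec<f64> = times.iter().map(|t| t.as_nanos() as f64).collect();
    let n = ns.len() as f64;
    let mean = ns.iter().sum::<f64>() / n;
    let variance = ns.iter().map(|x| (x - mean).powi(2)).sum::<f64>() / n;
    Some(Stats {
        mean: Duration::from_nanos(mean.round() as u64),
        min: *times.iter().min()?,
        max: *times.iter().max()?,
        std_dev: Duration::from_nanos(variance.sqrt().round() as u64),
    })
}
```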


# Description

This PR allows byte streams to optionally be colored as being specifically binary or string data, which guarantees that they'll be converted to `Binary` or `String` appropriately on `into_value()`, making them compatible with `Type` guarantees. This makes them significantly more broadly usable for command input and output.

There is still an `Unknown` type for byte streams coming from external commands, which uses the same behavior as we previously did, where it's a string if it's UTF-8.

A small number of commands were updated to take advantage of this, just to prove the point. I will be adding more after this merges.
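A sketch of the conversion rules described above, assuming a simplified two-variant `Value` (not nu-protocol's real `Value`). Returning an error for a `String`-typed stream containing invalid UTF-8 is an assumption of this sketch; the description only states the `Unknown` rule.

```rust
/// Hypothetical stand-in for a collected value.
#[derive(Debug, PartialEq)]
pub enum Value {
    String(String),
    Binary(Vec<u8>),
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ByteStreamType {
    Binary,
    String,
    Unknown,
}

/// Converts the collected bytes of a stream according to its type tag.
pub fn into_value(ty: ByteStreamType, bytes: Vec<u8>) -> Result<Value, std::string::FromUtf8Error> {
    match ty {
        // Known binary: always binary, even if the bytes happen to be UTF-8.
        ByteStreamType::Binary => Ok(Value::Binary(bytes)),
        // Known string: must be valid UTF-8.
        ByteStreamType::String => String::from_utf8(bytes).map(Value::String),
        // Unknown (e.g. external command output): a string if it is UTF-8,
        // otherwise fall back to binary.
        ByteStreamType::Unknown => Ok(match String::from_utf8(bytes) {
            Ok(s) => Value::String(s),
            Err(e) => Value::Binary(e.into_bytes()),
        }),
    }
}
```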

# User-Facing Changes

- New types in `describe`: `string (stream)`, `binary (stream)`
- These commands now return a stream if their input was a stream:
  - `into binary`
  - `into string`
  - `bytes collect`
  - `str join`
  - `first` (binary)
  - `last` (binary)
  - `take` (binary)
  - `skip` (binary)
- Streams that are explicitly binary colored will print as a streaming hexdump
  - example:

    ```nushell
    1.. | each { into binary } | bytes collect
    ```

# Tests + Formatting

I've added some tests to cover it at a basic level, and it doesn't break anything existing, but I do think more would be nice. Some of those will come when I modify more commands to stream.

# After Submitting

There are a few things I'm not quite satisfied with:

- **String trimming behavior.** We automatically trim newlines from streams from external commands, but I don't think we should do this with internal commands. If I call a command that happens to turn my string into a stream, I don't want the newline to suddenly disappear. I changed this to specifically do it only on `Child` and `File`, but I don't know if this is quite right, and maybe we should bring back the old flag for `trim_end_newline`.
- **Known binary always resulting in a hexdump.** It would be nice to have a `print --raw`, so that we can put binary data on stdout explicitly if we want to. This PR doesn't change how external commands work though; they still dump straight to stdout.

Otherwise, here's the normal checklist:

- [ ] release notes

- [ ] docs update for plugin protocol changes (added `type` field)

---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

# Description

Changes `get_full_help` to take a `&dyn Command` instead of multiple arguments (`&Signature`, `&Examples`, `is_parser_keyword`). All of these can be gathered from a `Command`, so there is no need to pass the pieces to `get_full_help` separately.

This PR also fixes an issue where the search terms are not shown if `--help` is used on a command.

# Description

There is a bug when `hide-env` is used on environment variables that were present at shell startup: child processes still inherit the hidden environment variable. This PR fixes #12900, fixes #11495, and fixes #7937.
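One way to keep hidden variables out of child processes, sketched with the standard library's `std::process::Command::env_remove`. The `hidden` list is a hypothetical stand-in for whatever `hide-env` records; this is not necessarily how the fix is implemented.

```rust
use std::process::Command;

/// Builds a child command that will not inherit any of the `hidden` variables.
pub fn child_command(program: &str, hidden: &[&str]) -> Command {
    let mut cmd = Command::new(program);
    for name in hidden {
        // Without this, the child inherits the variable from our own
        // process environment, which still holds the startup values.
        cmd.env_remove(name);
    }
    cmd
}
```

The command is only configured here, not spawned; `Command::get_envs` can be used to inspect the explicit removals.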

# Tests + Formatting

Added a test.

# Description

Kind of a vague title, but this PR does two main things:

1. Rather than overriding functions like `Command::is_parser_keyword`, commands now override `Command::command_type`. The `CommandType` returned by `Command::command_type` is then used to automatically determine whether `Command::is_parser_keyword` and the other `is_{type}` functions should return true. These changes allow us to remove the `CommandType::Other` case and should also guarantee that only one of the `is_{type}` functions on `Command` will return true.
2. Uses the new, reworked `Command::command_type` function in the `scope commands` and `which` commands.
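The pattern in point 1 can be sketched as a trait with a single overridable method and derived predicates. The variant list and the `Def` example command are assumptions for illustration:

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum CommandType {
    Builtin,
    Custom,
    Keyword,
    External,
    Plugin,
}

pub trait Command {
    /// The only method implementors need to override.
    fn command_type(&self) -> CommandType {
        CommandType::Builtin
    }

    // Each predicate is derived from `command_type`, so they cannot disagree.
    fn is_builtin(&self) -> bool {
        self.command_type() == CommandType::Builtin
    }
    fn is_parser_keyword(&self) -> bool {
        self.command_type() == CommandType::Keyword
    }
    fn is_plugin(&self) -> bool {
        self.command_type() == CommandType::Plugin
    }
    fn is_custom(&self) -> bool {
        self.command_type() == CommandType::Custom
    }
}

/// A hypothetical keyword command: overriding `command_type` is enough.
pub struct Def;

impl Command for Def {
    fn command_type(&self) -> CommandType {
        CommandType::Keyword
    }
}
```

Because every predicate compares against the same `command_type()` result, at most one of them can return true for a given command.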

# User-Facing Changes

- Breaking change for `scope commands`: multiple columns (`is_builtin`, `is_keyword`, `is_plugin`, etc.) have been merged into the `type` column.
- Breaking change: the `which` command can now report `plugin` or `keyword` instead of `built-in` in the `type` column. It may also now report `external` instead of `custom` in the `type` column for known `extern`s.

# Description

This PR makes some commands and areas of code preserve pipeline metadata. This is an attempt to make the issue described in #12599 and #9456 less likely to occur: reading from and writing to the same file in a pipeline results in an empty file. Since we preserve metadata in more places now, there is a higher chance that we successfully detect this error case and abort the pipeline.

# Description

This changes the `collect` command so that it doesn't require a closure. A closure is still allowed, optionally.

Before:

```nushell
open foo.json | insert foo bar | collect { save -f foo.json }
```

After:

```nushell
open foo.json | insert foo bar | collect | save -f foo.json
```

The closure argument isn't really necessary, as collected values are also supported as `PipelineData`.

# User-Facing Changes

- `collect` command changed

# Tests + Formatting

Example changed to reflect.

# After Submitting

- [ ] release notes

- [ ] we may want to deprecate the closure arg?

# Description

We have been building `nu_plugin_polars` unnecessarily during `cargo test`, which is very slow. All of its tests are run within its own crate, which happens during the plugins CI phase.

This should speed up the CI a bit.

# Description

Forgot that I fixed this already on my branch, but when printing without a display output hook, the implicit call to `table` gets its output mangled with newlines (since #12774). This happens when running `nu -c` or a script file.

Here's that fix in one PR so it can be merged easily.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# Description

Fixes: #12690

The issue happened after https://github.com/nushell/nushell/pull/12056 was merged: that PR raises an error if the user doesn't supply a required parameter when running a closure with `do`, and the parser adds a `$it` parameter when parsing a closure or block expression.

I believe the previous behavior exists because we allowed such syntax in a previous version (0.44):

```nushell
let x = { print $it }
```

But it's no longer allowed since 0.60, so I think the implicit `$it` parameter can be removed.

# User-Facing Changes

```nushell
let tmp = {
    let it = 42
    print $it
}
do -c $tmp
```

should be possible again.

# Tests + Formatting

Added 1 test

# Description

Restores `bytes starts-with` so that it is able to work with byte streams once again. For parity/consistency, this PR also adds byte stream support to `bytes ends-with`.

# User-Facing Changes

- `bytes ends-with` now supports byte streams.

# Tests + Formatting

Re-enabled tests for `bytes starts-with` and added tests for `bytes ends-with`.

# Description

This PR adds a few functions to `Span` for merging spans together:

- `Span::append`: merges two spans that are known to be in order.
- `Span::concat`: returns a span that encompasses all the spans in a slice. The spans must be in order.
- `Span::merge`: merges two spans (no order necessary).
- `Span::merge_many`: merges an iterator of spans into a single span (no order necessary).

These are meant to replace the free-standing `nu_protocol::span` function.

The spans in a `LiteCommand` (the `parts`) should always be in order based on the lite parser and lexer. So, the parser code sees the most usage of `Span::append` and `Span::concat`, where the order is known. In other code areas, `Span::merge` and `Span::merge_many` are used, since the order between spans is often not known.
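A minimal sketch of the four helpers, assuming a `Span` with `start`/`end` offsets. Field names and signatures are not copied from nu-protocol, and returning `Option` from `merge_many` for an empty input is a choice made here:

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Span {
    pub start: usize,
    pub end: usize,
}

impl Span {
    pub fn new(start: usize, end: usize) -> Self {
        Span { start, end }
    }

    /// Caller guarantees `self` comes before `after`: no comparisons needed.
    pub fn append(self, after: Span) -> Span {
        Span::new(self.start, after.end)
    }

    /// Caller guarantees `spans` are in order: only the first and last matter.
    pub fn concat(spans: &[Span]) -> Span {
        let first = spans.first().expect("at least one span");
        let last = spans.last().expect("at least one span");
        Span::new(first.start, last.end)
    }

    /// No ordering required: take the outermost bounds.
    pub fn merge(self, other: Span) -> Span {
        Span::new(self.start.min(other.start), self.end.max(other.end))
    }

    /// No ordering required; `None` for an empty iterator.
    pub fn merge_many(spans: impl IntoIterator<Item = Span>) -> Option<Span> {
        spans.into_iter().reduce(Span::merge)
    }
}
```

The ordered variants are cheaper because they skip the `min`/`max` comparisons, which is why they suit the parser, where order is guaranteed.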

# Description

Syncs Nushell up with reedline's latest minor changes. Not quite sure why itertools was downgraded to 0.11.0 when Nushell and reedline both have it set to 0.12.0.

# Description

This PR introduces a `ByteStream` type, which is a `Read`-able stream of bytes. Internally, it has an enum over three different byte stream sources:

```rust
pub enum ByteStreamSource {
    Read(Box<dyn Read + Send + 'static>),
    File(File),
    Child(ChildProcess),
}
```

This is in comparison to the current `RawStream` type, which is an `Iterator<Item = Vec<u8>>` and has to allocate for each read chunk.

Currently, `PipelineData::ExternalStream` serves a weird dual role where it is either external command output or a wrapper around `RawStream`. `ByteStream` makes this distinction clearer (via `ByteStreamSource`) and replaces `PipelineData::ExternalStream` in this PR:

```rust
pub enum PipelineData {
    Empty,
    Value(Value, Option<PipelineMetadata>),
    ListStream(ListStream, Option<PipelineMetadata>),
    ByteStream(ByteStream, Option<PipelineMetadata>),
}
```

The PR is relatively large, but a decent amount of it is just repetitive changes.

This PR fixes #7017, fixes #10763, and fixes #12369.

This PR also improves performance when piping external commands. Nushell should, in most cases, have competitive pipeline throughput compared to, e.g., bash.

| Command | Before (MB/s) | After (MB/s) | Bash (MB/s) |
| -------------------------------------------------- | -------------:| ------------:| -----------:|
| `throughput \| rg 'x'` | 3059 | 3744 | 3739 |
| `throughput \| nu --testbin relay o> /dev/null` | 3508 | 8087 | 8136 |

# User-Facing Changes

- This is a breaking change for the plugin communication protocol, because `ExternalStreamInfo` was replaced with `ByteStreamInfo`. Plugins now only have to deal with a single input stream, as opposed to the previous three streams: stdout, stderr, and exit code.
- The output of `describe` has been changed for external/byte streams.
- Temporary breaking change: `bytes starts-with` no longer works with byte streams. This is to keep the PR smaller, and `bytes ends-with` already does not work on byte streams.
- If a process core dumped, then instead of having a `Value::Error` in the `exit_code` column of the output returned from `complete`, it now is a `Value::Int` with the negation of the signal number.

# After Submitting

- Update docs and book as necessary
- Release notes (e.g., plugin protocol changes)
- Adapt/convert commands to work with byte streams (high priority is `str length`, `bytes starts-with`, and maybe `bytes ends-with`).
- Refactor the `tee` code; Devyn has already done some work on this.

---------

Co-authored-by: Devyn Cairns <devyn.cairns@gmail.com>

# Description

Fixes: #12691

`parse_short_flag` only checks for the special cases `SyntaxShape::Int` and `SyntaxShape::Number` to allow a flag-like argument to be parsed as a number. This PR adds `SyntaxShape::Float`, so that a negative float such as `-1.4` is also accepted.

# User-Facing Changes

This is possible after this pr:

```nushell

def spam [val: float] { $val };

spam -1.4

```

# Tests + Formatting

Added 1 test

# Description

In order for `Stack::unwrap_unique` to work as intended, we currently track all references to the parent stack manually and ensure that they are cleared before calling `Stack::unwrap_unique` in the REPL. We also only call `Stack::unwrap_unique` after all code from the current REPL entry has finished executing.

Since `Value`s cannot store `Stack` references, this should have worked in theory. However, we forgot to account for threads. `run-external` (and maybe the plugin writers) can spawn threads that clone the `Stack`, holding on to references to the parent stack. These threads are not waited on or joined, so they may finish after the eval has already returned.

This PR removes the `Stack::unwrap_unique` function and the associated debug assert that was [causing panics](https://gist.github.com/cablehead/f3d2608a1629e607c2d75290829354f7) like the ones @cablehead found.
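Why a stray thread defeats a "unique ownership" check can be shown with plain `Arc::try_unwrap`. This sketch assumes the parent stack is shared through an `Arc`. The `Vec<String>` is a hypothetical stand-in for the stack, and the worker thread stands in for a thread like those spawned by `run-external`.

```rust
use std::sync::{mpsc, Arc};
use std::thread;

/// Returns whether the "stack" could be unwrapped (a) while a spawned thread
/// still holds a clone and (b) after that thread has been joined.
fn unwrap_before_and_after_join() -> (bool, bool) {
    // Hypothetical stand-in for the parent stack, shared via `Arc`.
    let parent = Arc::new(vec![String::from("PWD=/tmp")]);

    // The worker keeps a clone alive until it is told to finish.
    let (done_tx, done_rx) = mpsc::channel::<()>();
    let held = Arc::clone(&parent);
    let worker = thread::spawn(move || {
        let _ = done_rx.recv();
        drop(held);
    });

    // Eval has "returned", but the worker still owns a reference, so
    // `try_unwrap` fails and hands the `Arc` back.
    let parent = match Arc::try_unwrap(parent) {
        Ok(_) => return (true, false),
        Err(shared) => shared,
    };

    // Only after the worker is joined is the reference count back to one.
    let _ = done_tx.send(());
    worker.join().expect("worker thread panicked");
    (false, Arc::try_unwrap(parent).is_ok())
}

fn main() {
    let (before, after) = unwrap_before_and_after_join();
    // prints "unique before join: false, after join: true"
    println!("unique before join: {before}, after join: {after}");
}
```

Since nushell does not join these threads, the "before join" situation is the one the REPL can actually hit.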

# After Submitting

Make values cheaper to clone as a more robust solution to the performance issues with cloning the stack.

---------

Co-authored-by: Wind <WindSoilder@outlook.com>

Fix for #12730

All of the code expected a list of floats, but the syntax shape expected a table. Resolved by changing the syntax shape to a list of floats.

cc: @maxim-uvarov

# Description

Following from #12867, this PR replaces usages of `Call::positional_nth` with existing spans. This removes several `expect`s from the code.

It also removes the unused `positional_nth_mut` and `positional_iter_mut`.

# Description

So minor, but it had to be fixed sometime. `help each while` used the term "block" in the "usage", but the argument type is a closure.

# User-Facing Changes

help-only

# Description

A common question we get is which config files are loaded, when, and with what parameters. That's why I wrote [this gist](https://gist.github.com/fdncred/b87b784f04984dc31a150baed9ad2447).

Another way to figure this out is to use `nu --log-level info`. This shows some performance timings, but it also shows what is being loaded and when. For the most part, the `[INFO]` lines show the performance timings and the `[WARN]` lines show the files.

This PR tries to make things a little clearer when using the `--log-level info` parameter.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

This should fix #10155, where the `sys` command can panic due to date math in certain cases or on certain systems.

# User-Facing Changes

The `boot_time` column now has a date value instead of a formatted date string. This is technically a breaking change.
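The PR description does not say exactly which computation panicked. One common way date math like this can panic is `SystemTime - Duration`, which panics when the result is out of range. The sketch below shows the non-panicking alternative with `checked_sub`. `boot_time` is a hypothetical helper, not the code from this PR.

```rust
use std::time::{Duration, SystemTime, UNIX_EPOCH};

/// Hypothetical helper: boot time as `now - uptime`, without panicking.
/// `SystemTime - Duration` panics if the result cannot be represented;
/// `checked_sub` returns `None` instead, which a command can turn into an error.
fn boot_time(now: SystemTime, uptime: Duration) -> Option<SystemTime> {
    now.checked_sub(uptime)
}

fn main() {
    let now = SystemTime::now();
    match boot_time(now, Duration::from_secs(3600)) {
        Some(t) => println!(
            "booted {:?} after the epoch",
            t.duration_since(UNIX_EPOCH).unwrap_or_default()
        ),
        None => println!("boot time out of range"),
    }
    // An absurd uptime yields `None` rather than a panic.
    println!("{:?}", boot_time(UNIX_EPOCH, Duration::MAX));
}
```

Returning a `SystemTime` (rather than a preformatted string) also matches the new `boot_time` column, which holds a date value.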

# Description

This PR adds a single test to assert interactivity on slow pipelines.

Currently the timeout is set to 6 seconds, as the test can sometimes take ~3 seconds to run on my local M1 MacBook Air. I don't think that indicates a slow pipeline, but rather slow test start-up time.

# Description

Fixes: #12795

The issue is caused by `ParseError::UnexpectedEof` pointing at an empty position, so no detailed message is displayed.

To fix the issue, I adjusted the start of the span to `span.end - 1`. This way, the span never points to an empty position.

After lexing an item, I also reordered the unclosed-character checks, so that unclosed opening delimiters are now checked first.
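The span adjustment can be sketched as below. `Span` is a hypothetical byte-offset struct (start inclusive, end exclusive), not nushell's type. The arithmetic assumes the last character is one byte wide, as in the ASCII examples that follow.

```rust
/// Hypothetical byte-offset span: `start` inclusive, `end` exclusive.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Span {
    start: usize,
    end: usize,
}

/// Span reported for an unexpected end of input. An empty span at `end`
/// (just past the last character) gives the renderer nothing to underline,
/// so point at the last character instead.
fn unexpected_eof_span(token: Span) -> Span {
    Span {
        start: token.end.saturating_sub(1),
        end: token.end,
    }
}

fn main() {
    // "'ab" spans bytes 0..3; the label now sits on byte 2 ('b'), i.e. column 3.
    let span = unexpected_eof_span(Span { start: 0, end: 3 });
    println!("{span:?} -> column {}", span.start + 1);
}
```

This lines up with the `source:1:3` and `source:1:5` locations in the "After" output below.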

# User-Facing Changes

After this PR, a detailed error message is shown for incomplete strings when running scripts.

## Before

```

❯ nu -c "'ab"

Error: nu::parser::unexpected_eof

× Unexpected end of code.

╭─[source:1:4]

1 │ 'ab

╰────

> ./target/debug/nu -c "r#'ab"

Error: nu::parser::unexpected_eof

× Unexpected end of code.

╭─[source:1:6]

1 │ r#'ab

╰────

```

## After

```

> nu -c "'ab"

Error: nu::parser::unexpected_eof

× Unexpected end of code.

╭─[source:1:3]

1 │ 'ab

· ┬

· ╰── expected closing '

╰────

> ./target/debug/nu -c "r#'ab"

Error: nu::parser::unexpected_eof

× Unexpected end of code.

╭─[source:1:5]

1 │ r#'ab

· ┬

· ╰── expected closing '#

╰────

```

# Tests + Formatting

Added some tests for incomplete string.

---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

Bumps [rust-embed](https://github.com/pyros2097/rust-embed) from 8.3.0 to 8.4.0.

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a href="https://github.com/pyrossh/rust-embed/blob/master/changelog.md">rust-embed's changelog</a>.</em></p>
<blockquote>
<h2>[8.4.0] - 2024-05-11</h2>
<ul>
<li>Re-export RustEmbed as Embed <a href="https://redirect.github.com/pyrossh/rust-embed/pull/245/files">#245</a>. Thanks to <a href="https://github.com/pyrossh">pyrossh</a></li>
<li>Do not build glob matchers repeatedly when include-exclude feature is enabled <a href="https://redirect.github.com/pyrossh/rust-embed/pull/244/files">#244</a>. Thanks to <a href="https://github.com/osiewicz">osiewicz</a></li>
<li>Add <code>metadata_only</code> attribute <a href="https://redirect.github.com/pyrossh/rust-embed/pull/241/files">#241</a>. Thanks to <a href="https://github.com/ddfisher">ddfisher</a></li>
<li>Replace <code>expect</code> with a safer alternative that returns <code>None</code> instead <a href="https://redirect.github.com/pyrossh/rust-embed/pull/240/files">#240</a>. Thanks to <a href="https://github.com/costinsin">costinsin</a></li>
<li>Eliminate unnecessary <code>to_path</code> call <a href="https://redirect.github.com/pyrossh/rust-embed/pull/239/files">#239</a>. Thanks to <a href="https://github.com/smoelius">smoelius</a></li>
</ul>
</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>
<li>See full diff in <a href="https://github.com/pyros2097/rust-embed/commits">compare view</a></li>
</ul>

</details>

<br />


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Bumps [interprocess](https://github.com/kotauskas/interprocess) from 2.0.1 to 2.1.0.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a href="https://github.com/kotauskas/interprocess/releases">interprocess's releases</a>.</em></p>
<blockquote>
<h2>2.1.0 – listeners are now iterators</h2>
<ul>
<li>Fixes <a href="https://redirect.github.com/kotauskas/interprocess/issues/49">#49</a></li>
<li>Adds <code>Iterator</code> impl on local socket listeners (closes <a href="https://redirect.github.com/kotauskas/interprocess/issues/64">#64</a>)</li>
<li>Miscellaneous documentation fixes</li>
</ul>
</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>
<li><a href="b79d363615"><code>b79d363</code></a> Thank you Windows, very cool</li>
<li><a href="2ab1418db7"><code>2ab1418</code></a> Move a bunch of goalposts</li>
<li><a href="d26ed39bd2"><code>d26ed39</code></a> Use macro in Windows <code>UnnamedPipe</code> builder</li>
<li><a href="f9528885e4"><code>f952888</code></a> I'm not adding a <code>build.rs</code> to silence a warning</li>
<li><a href="5233ffb7c9"><code>5233ffb</code></a> Fix <a href="https://redirect.github.com/kotauskas/interprocess/issues/49">#49</a> and add test</li>
<li><a href="85d3e1861a"><code>85d3e18</code></a> Complete half-done move to <code>os</code> module in tests</li>
<li><a href="7715dbdd49"><code>7715dbd</code></a> Listeners are now iterators</li>
<li><a href="8a47261ddc"><code>8a47261</code></a> Bump version</li>
<li>See full diff in <a href="https://github.com/kotauskas/interprocess/compare/2.0.1...2.1.0">compare view</a></li>
</ul>

</details>

<br />


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

# Description

The `char` command can panic due to a failed `expect`, e.g. with `char --integer ...[77 78 79]`.

This PR fixes the panic for the `--integer` flag and also for the `--unicode` flag.
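The general pattern behind this kind of fix is to return an error instead of calling `expect`. The sketch below shows that pattern. `chars_from_codepoints` is hypothetical, not the command's actual code. It converts every integer it is given, so it does not depend on how many arguments arrive or whether they were spread with `...`.

```rust
/// Hypothetical helper: convert every integer argument to a `char`,
/// returning an error for invalid code points instead of panicking.
fn chars_from_codepoints(values: &[i64]) -> Result<String, String> {
    values
        .iter()
        .map(|&v| {
            u32::try_from(v)
                .ok()
                .and_then(char::from_u32)
                .ok_or_else(|| format!("invalid Unicode code point: {v}"))
        })
        .collect()
}

fn main() {
    // Roughly what `char --integer ...[77 78 79]` should produce.
    match chars_from_codepoints(&[77, 78, 79]) {
        Ok(s) => println!("{s}"), // MNO
        Err(e) => eprintln!("error: {e}"),
    }
}
```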

# After Submitting

Check other commands and places where similar bugs can occur due to usages of `Call::positional_nth` and related methods.

# Description

There was a question in Discord today about how to remove empty rows from a table. The user found the `compact` command on their own, but I realized that there were no search terms on the command. I've added 'empty' and 'remove', although I subsequently figured out that 'empty' is found in the "usage" anyway. That said, I don't think it hurts to have good search terms behind it regardless.

# User-Facing Changes

Just the help

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

- fixes #12841

# Description

Add boundary checks to ensure that the row and column chosen in `RecordView` do not exceed the number of available rows and columns. If we are out of bounds, we default to `Value::nothing`.
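A bounds-checked lookup of this kind can be written with `get` instead of indexing. In this sketch, `Value` is a hypothetical two-variant stand-in for nushell's type, and `cell_at` is illustrative only.

```rust
/// Hypothetical, minimal stand-in for nushell's `Value`.
#[derive(Debug, Clone, PartialEq)]
enum Value {
    Int(i64),
    Nothing,
}

/// Look up a cell, falling back to `Value::Nothing` when the row or column
/// index is out of bounds, instead of indexing (which would panic).
fn cell_at(rows: &[Vec<Value>], row: usize, column: usize) -> Value {
    rows.get(row)
        .and_then(|cells| cells.get(column))
        .cloned()
        .unwrap_or(Value::Nothing)
}

fn main() {
    let rows = vec![vec![Value::Int(1), Value::Int(2)]];
    println!("{:?}", cell_at(&rows, 0, 1)); // Int(2)
    println!("{:?}", cell_at(&rows, 3, 0)); // Nothing
}
```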

# Tests + Formatting

Tests ran and formatting done

- fixes #12764

Replaced the custom logic with the `values_to_sql` method that is already used in `crate::database`.

This ensures that parameters are handled the same way in sqlite and stor.

# Description

In this PR I added two new methods to `Stack`: `stdout_file` and `stderr_file`. They modify the inner `StackOutDest` and set a `File` as `stdout` and `stderr`, respectively. Unlike the `push_redirection` methods, they do not require holding a guard the whole time, but they do require ownership of the stack.
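The ownership trade-off can be sketched with a consuming builder-style method. `Stack` and `OutDest` below are hypothetical, heavily simplified stand-ins. The real `Stack::stdout_file` signature may differ.

```rust
use std::fs::File;

/// Hypothetical stand-in for where a stack sends its output.
#[derive(Debug)]
enum OutDest {
    Inherit,
    File(File),
}

/// Hypothetical, heavily simplified stack.
#[allow(dead_code)]
#[derive(Debug)]
struct Stack {
    stdout: OutDest,
    stderr: OutDest,
}

impl Stack {
    fn new() -> Self {
        Stack { stdout: OutDest::Inherit, stderr: OutDest::Inherit }
    }

    /// Takes `self` by value: no guard to keep alive, but the caller must own the stack.
    fn stdout_file(mut self, file: File) -> Self {
        self.stdout = OutDest::File(file);
        self
    }

    #[allow(dead_code)]
    fn stderr_file(mut self, file: File) -> Self {
        self.stderr = OutDest::File(file);
        self
    }
}

fn main() -> std::io::Result<()> {
    let path = std::env::temp_dir().join("stack_stdout_file_sketch.txt");
    let stack = Stack::new().stdout_file(File::create(&path)?);
    println!("{:?}", stack.stdout);
    drop(stack); // close the file before removing it
    std::fs::remove_file(&path)
}
```

Accepting `std::fs::File` means a caller can hand in anything convertible to a `File` (for example, one end of a pipe) without the API naming platform-specific handle types.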

This is primarily useful for applications that use `nu` as a language but not `nushell` as a shell.

This PR replaces my first attempt, #12851, to add a way to capture the stdout/stderr of external commands. Capturing stdout without having to write into a file is possible with crates like [`os_pipe`](https://docs.rs/os_pipe). An example of this is given in the doc comment of the `stdout_file` method and can be executed as a doctest (although it doesn't validate that you actually got any data).

This implementation takes a `File` as input to make it easier to implement on different operating systems without having to worry about `OwnedHandle` or `OwnedFd`. Also, the API does not use `os_pipe` itself, so its types don't leak into this API and there is no dependency on it.

As in my previous attempt, @IanManske guided me here.

# User-Facing Changes

This change has no effect on `nushell` and therefore has no user-facing changes.

# Tests + Formatting

This only exposes a new way of using already existing code and therefore has no further tests. The doctest succeeds on my machine at least (64-bit x86 Windows).

# After Submitting

All the required documentation is already part of this PR.

# Description

It's commonly forgotten or overlooked that a lot of `std repeat` functionality can be handled with the built-in `fill`. Added `repeat` as a search term for `fill` to improve discoverability.

Also replaced one of the existing examples with one `fill`ing an empty string, à la `repeat`. There were 6 examples already, and 3 of them were pretty much variations on the same theme, so I repurposed one of those rather than adding a 7th.

# User-Facing Changes

Changes to `help` only

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

I assume the "Commands" doc is auto-generated from the `help`, but I'll double-check that assumption.

# Description

This PR resolves an inconsistency between different `str` subcommands, notably `str contains`, `str starts-with` and `str ends-with`. Only `str contains` had the `--not` flag, and in #12781 a decision was made to remove the `--not` flag and use the `not` operator instead.

Before:

`"blob" | str contains --not o`

After:

`not ("blob" | str contains o)` OR `"blob" | str contains o | not $in`

> Note: you can currently do all three, but the first will be broken after this PR is merged.

# User-Facing Changes

- remove `--not(-n)` flag from `str contains` command

- This is a breaking change!

# Tests + Formatting

- [x] Added tests

- [x] Ran `cargo fmt --all`

- [x] Ran `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used`

- [x] Ran `cargo test --workspace`

- [ ] Ran `cargo run -- -c "use std testing; testing run-tests --path crates/nu-std"`

  - I was unable to get this working.

```

Error: nu::parser::export_not_found

× Export not found.

╭─[source:1:9]

1 │ use std testing; testing run-tests --path crates/nu-std

· ───┬───

· ╰── could not find imports

╰────

```

^ I still can't figure out how to make this work 😂

# After Submitting

Requires update of documentation

# Description

Fixes #12796, where a combined out and err pipe redirection (`o+e>|`) into `complete` still provided separate `stdout` and `stderr` columns in the record. Now, the combined output will be in the `stdout` column.

This PR also fixes a similar error with the `e>|` pipe redirection.

# Tests + Formatting

Added two tests.

This PR has two parts. The first part is the addition of the `Stack::set_pwd()` API. It strips trailing slashes from paths for convenience, but rejects otherwise bad paths, leaving PWD in a good state. This should reduce the impact of faulty code incorrectly trying to set PWD.

(https://github.com/nushell/nushell/pull/12760#issuecomment-2095393012)
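The kind of validation `set_pwd` performs can be sketched as below. `validate_pwd` is hypothetical and assumes Unix-style paths. The rule "absolute path to an existing directory" is this sketch's reading of "bad paths", not nushell's exact rule. The root path is kept intact rather than trimmed to an empty string.

```rust
use std::path::Path;

/// Hypothetical validation in the spirit of `Stack::set_pwd()`:
/// strip trailing slashes (but keep the root), then reject anything that is
/// not an absolute path to an existing directory. Unix-style paths assumed.
fn validate_pwd(path: &str) -> Result<String, String> {
    let trimmed = path.trim_end_matches('/');
    // "/" (or "///") trims to "", which must stay the root.
    let trimmed = if trimmed.is_empty() && path.starts_with('/') { "/" } else { trimmed };
    let candidate = Path::new(trimmed);
    if !candidate.is_absolute() {
        return Err(format!("PWD must be an absolute path: {path:?}"));
    }
    if !candidate.is_dir() {
        return Err(format!("PWD must be an existing directory: {path:?}"));
    }
    Ok(trimmed.to_string())
}

fn main() {
    for input in ["/", "/tmp/", "relative/dir", "/no/such/dir/hopefully"] {
        println!("{input:?} -> {:?}", validate_pwd(input));
    }
}
```

Because invalid input is rejected before anything is stored, PWD keeps its previous, valid value.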

The second part implements a PWD recovery mechanism. PWD can become bad even when we did nothing wrong. For example, Unix allows you to remove a directory while another process might still be using it, which means PWD can just "disappear" under our nose. This PR makes it possible to use `cd` to reset PWD into a good state. Here's a demonstration:

```nushell
mkdir /tmp/foo
cd /tmp/foo
# delete "/tmp/foo" in a subshell, because Nushell is smart and refuses to delete PWD
nu -c 'cd /; rm -r /tmp/foo'
ls # Error: × $env.PWD points to a non-existent directory
# help: Use `cd` to reset $env.PWD into a good state
cd /
pwd # prints /
```

Also, auto-cd should be working again.

# Description

Refactors the code in `nu-cli`, `main.rs`, `run.rs`, and a few others. Namely, I added an `EngineState::generate_nu_constant` function to eliminate some duplicate code. Otherwise, I changed a bunch of areas to return errors instead of calling `std::process::exit`.

# User-Facing Changes

Should be none.

# Description

Fixes #12813, where a panic occurs when syntax highlighting `not`. Also fixes #12814, where syntax highlighting for `not` no longer works.

# User-Facing Changes

Bug fix.

# Description

Adds an additional `&Stack` parameter to `Completer::fetch` so that the completers don't have to store a `Stack` themselves. I also removed unnecessary `EngineState`s from the completers, since the same `EngineState` is available in the `working_set.permanent_state` also passed to `Completer::fetch`.

# Description

Changes the iterator in `rm` to be an iterator over `Result<Option<String>, ShellError>` (an optional message or error) instead of an iterator over `Value`. Then, the iterator is consumed and each message is printed. This allows the `PipelineData::print_not_formatted` method to be removed.
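The iterator shape can be sketched with `String` in place of `ShellError`. `remove_paths` and `consume` are hypothetical. This sketch only simulates removal (no files are touched). Stopping at the first error is an assumption, not necessarily what `rm` does.

```rust
/// Hypothetical per-path result: `Ok(Some(msg))` to report,
/// `Ok(None)` for nothing to report, `Err(e)` on failure.
type RmResult = Result<Option<String>, String>;

/// Simulated removal of each path; `verbose` controls whether a message is produced.
fn remove_paths<'a>(paths: &'a [&'a str], verbose: bool) -> impl Iterator<Item = RmResult> + 'a {
    paths.iter().map(move |path| {
        if path.is_empty() {
            Err("cannot remove an empty path".to_string())
        } else if verbose {
            Ok(Some(format!("deleted {path}")))
        } else {
            Ok(None)
        }
    })
}

/// Consume the iterator, printing each message; stops at the first error (assumption).
fn consume(results: impl Iterator<Item = RmResult>, mut print: impl FnMut(&str)) -> Result<(), String> {
    for result in results {
        if let Some(message) = result? {
            print(&message);
        }
    }
    Ok(())
}

fn main() {
    let paths = ["a.txt", "b.txt"];
    consume(remove_paths(&paths, true), |m| println!("{m}")).expect("rm failed");
}
```

Because messages are printed as they are produced, nothing has to be wrapped in a `Value` just to be displayed.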

# Description

On 64-bit platforms, the current size of `Value` is 56 bytes. The limiting variants were `Closure` and `Range`. Boxing the two reduces the size of `Value` to 48 bytes. This is the minimal size possible with our current 16-byte `Span` and any 24-byte `Vec` container, which we use in several variants. (Note the extra full 8 bytes necessary for the discriminant or other smaller values, due to the 8-byte alignment of `usize`.)

This leads to a size reduction of ~15% for `Value` and should be beneficial overall, as both `Range` and `Closure` are rarely used compared to the primitive types or even our general container types.
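The effect of boxing a rarely used large variant can be checked with `std::mem::size_of`. The enums below are hypothetical miniatures, not nushell's `Value`. The exact sizes depend on the compiler's layout choices, so the sketch only prints them and relies on the relative comparison.

```rust
use std::mem::size_of;

/// Hypothetical 16-byte span (two `usize` offsets on 64-bit targets).
#[allow(dead_code)]
struct Span {
    start: usize,
    end: usize,
}

/// Hypothetical 32-byte payload standing in for a rarely used variant like `Range`.
#[allow(dead_code)]
struct RangeData {
    start: i64,
    step: i64,
    end: i64,
    len: i64,
}

#[allow(dead_code)]
enum Unboxed {
    Int { val: i64, span: Span },
    List { vals: Vec<i64>, span: Span },
    Range { val: RangeData, span: Span }, // 48-byte payload dominates the enum
}

#[allow(dead_code)]
enum Boxed {
    Int { val: i64, span: Span },
    List { vals: Vec<i64>, span: Span }, // now the largest payload (Vec + Span)
    Range { val: Box<RangeData>, span: Span },
}

fn main() {
    println!(
        "unboxed: {} bytes, boxed: {} bytes",
        size_of::<Unboxed>(),
        size_of::<Boxed>()
    );
}
```

The trade-off is one extra heap allocation and pointer indirection for the rare variant in exchange for a smaller enum everywhere else.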

# User-Facing Changes

Less memory used, potential runtime benefits.

(Too late in the evening to run the benchmarks myself right now)

# Description

This PR is a continuation of #12629 and is meant to address [Reilly's stated issue](https://github.com/nushell/nushell/pull/12629#issuecomment-2099660609).

With this PR, nushell should work more consistently with WezTerm on Windows. However, that means the display continues to scroll while typing if osc133 is enabled. If it's possible to run WezTerm inside of VS Code, then having osc633 enabled will also cause the display to scroll with every character typed. I think the cause is that reedline repaints the entire prompt on each character typed. We need to figure out how to fix that, but that's in reedline.

For my purposes, I keep osc133 and osc633 set to true and don't use WezTerm on Windows.

Thanks @rgwood for reporting the issue. I found several logic errors. It's often good to come back to PRs and look at them with fresh eyes. I think this is pretty close to logically correct now. However, I'm approaching burnout on ANSI escape codes, so I could've missed something.

Kudos to [escape-artist](https://github.com/rgwood/escape-artist) for helping me debug the ANSI escape codes that are actually being sent to the terminal. It was an invaluable tool.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

- Switches the `excess` buffer in the `ParseStreamer` and `ParseStreamerExternal` types from a `Vec` to a `VecDeque`

- Removes unnecessary clones passed to `stream_helper`

- Other simplifications and loop restructuring

- Merges the `ParseStreamer` and `ParseStreamerExternal` types into a common `ParseIter`

- `parse` now streams for list values
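Why a `VecDeque` suits an `excess` buffer: a streaming parser that can produce several values per input chunk must hand them out in order. `VecDeque::pop_front` is O(1), whereas `Vec::remove(0)` shifts every remaining element. `ParseIter` below is a hypothetical miniature that simply splits lines into words, not nushell's implementation.

```rust
use std::collections::VecDeque;

/// Hypothetical streaming parser: each input line may yield several values;
/// extras are buffered in `excess` and handed out first, in order.
struct ParseIter<I: Iterator<Item = String>> {
    input: I,
    excess: VecDeque<String>,
}

impl<I: Iterator<Item = String>> Iterator for ParseIter<I> {
    type Item = String;

    fn next(&mut self) -> Option<String> {
        loop {
            // O(1) on a VecDeque; `Vec::remove(0)` would shift every element.
            if let Some(item) = self.excess.pop_front() {
                return Some(item);
            }
            // Buffer is empty: pull the next line (or finish), then queue its values.
            let line = self.input.next()?;
            self.excess.extend(line.split_whitespace().map(str::to_string));
        }
    }
}

fn main() {
    let lines = vec!["a b".to_string(), String::new(), "c".to_string()];
    let parsed: Vec<String> = ParseIter { input: lines.into_iter(), excess: VecDeque::new() }.collect();
    println!("{parsed:?}"); // ["a", "b", "c"]
}
```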

# Description

This changes the message that shows up when running a plugin executable directly, rather than as a plugin, so that it directs the user to run `plugin add --help`, which should have enough information to figure out what's going on. The message previously just vaguely suggested that the user needs to run the plugin "from within Nushell", which is not really enough: it has to be added with `plugin add` to be used as a plugin.

Also fixes the docs for `plugin add` to mention `plugin use` rather than `register` (oops).

Refer to #12603 for part 1.

We need to be careful when migrating to the new API, because it has slightly different semantics (PWD can contain symlinks). This PR handles the "obviously safe" part of the migrations. Namely, it handles two specific use cases:

* Passing PWD into `canonicalize_with()`

* Passing PWD into `EngineState::merge_env()`

The first case is safe because symlinks are canonicalized away. The second case is safe because `EngineState::merge_env()` only uses PWD to call `std::env::set_current_dir()`, which shouldn't affect Nushell. The commit message contains detailed stats on the updated files.

Because these migrations touch a lot of files, I want to keep these PRs small to avoid merge conflicts.

# Description

Adds a new `sys users` command which returns a table of the users of the system. This is the same table that is currently present as `(sys).host.sessions`. The same table has been removed from the recently added `sys host` command.

# User-Facing Changes

Adds a new command. (The old `sys` command is left as is.)

# Description

Refactors `describe` a bit. Namely, I added a `Description` enum to get rid of `compact_primitive_description` and its awkward `Value` pattern matching.

# Description

Adds subcommands to `sys` corresponding to each column of the record returned by `sys`. This is to alleviate the fact that `sys` now returns a regular record, meaning that it must compute every column, which might take a noticeable amount of time. The subcommands, on the other hand, only need to compute and return a subset of the data, which should be much faster. In fact, it should be as fast as before, since this is how the lazy record worked (it computed each column only as necessary).

I chose to add subcommands instead of having an optional cell-path parameter on `sys`, since the cell-path parameter would:

- increase the code complexity (it can access any value at any row or nested column)

- prevent discovery with tab-completion

- hinder type checking and allow users to pass potentially invalid columns

# User-Facing Changes

Deprecates `sys` in favor of the new `sys` subcommands.

This moves to predominantly supporting only lazy dataframes for most operations. It removes a lot of the type conversion between lazy and eager dataframes based on what was passed into the command.

For the most part, the changes mean:

* You will need to run `polars collect` after performing operations

* The `into-lazy` command has been removed, as it is redundant.

* When opening files, a lazy frame will be output by default if the reader supports lazy frames

A list of individual command changes can be found [here](https://hackmd.io/@nucore/Bk-3V-hW0).

---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

# Description

Fixes: #12744

This PR moves the raw string lexing logic into the `lex_item` function, so raw strings can be used inside subexpressions, lists, and closures.

```nushell

> [r#'abc'#]

╭───┬─────╮

│ 0 │ abc │

╰───┴─────╯

> (r#'abc'#)

abc

> do {r#'aa'#}

aa

```
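Lexing a single raw-string token can be sketched as below. The sketch assumes the form shown above, `r#'...'#`, with one or more `#`. `lex_raw_string` is hypothetical, not nushell's `lex_item`. Returning the number of consumed bytes is what lets a caller keep lexing after the token, e.g. at a closing `]`, `)`, or `}`.

```rust
/// Hypothetical lexer helper: for input starting with `r#'`, `r##'`, ...,
/// return the string contents and the number of bytes consumed, or `None`
/// if it is not a raw string or is never closed (an "unexpected end" case).
fn lex_raw_string(input: &str) -> Option<(&str, usize)> {
    let rest = input.strip_prefix('r')?;
    let hashes = rest.bytes().take_while(|&b| b == b'#').count();
    if hashes == 0 {
        return None; // this sketch requires at least one `#`
    }
    let body = rest[hashes..].strip_prefix('\'')?;
    // The closing delimiter is a quote followed by the same number of `#`.
    let closing = format!("'{}", "#".repeat(hashes));
    let end = body.find(&closing)?;
    // 'r' + hashes + opening quote + contents + closing delimiter
    let consumed = 1 + hashes + 1 + end + closing.len();
    Some((&body[..end], consumed))
}

fn main() {
    // Inside a list, the caller continues lexing right after the token.
    let src = "r#'abc'#]";
    if let Some((contents, used)) = lex_raw_string(src) {
        println!("{contents:?}, rest = {:?}", &src[used..]); // "abc", rest = "]"
    }
}
```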

# Tests + Formatting

Done

# After Submitting

N/A

Time to expand our developer documentation: some of this is still tribal knowledge, and things could otherwise slip through the cracks and be costly to fix later.

# Description

Fixes: #12429

To fix the issue, we need to pass the input pattern itself to the `glob_from` function, but currently, on latest main, nushell passes the expanded path of the input pattern to `glob_from`. This causes globbing to fail if the expanded path includes `[]` brackets.

It's a pity that I have to duplicate the `nu_engine::glob_from` function into `ls`, because `ls` might convert from `NuGlob::NotExpand` to `NuGlob::Expand`. In that case, `nu_engine::glob_from` won't work if the user wants to `ls` a directory whose name includes a tilde:

```

mkdir "~abc"

ls "~abc"

```

So I need to duplicate the `glob_from` function and pass along the original `expand_tilde` information.

# User-Facing Changes

N/A

# Tests + Formatting

Done

# After Submitting

N/A

# Description

Makes some miscellaneous changes to `ListStream`:

- Moves it into its own module/file, separate from `RawStream`.

- `ListStream`s now have an associated `Span`.

  - This required changes to `ListStreamInfo` in `nu-plugin`. Not sure if this is a breaking change for the plugin protocol.

- Hides the internals of `ListStream`, but also adds a few more methods.

  - This includes two functions to more easily alter a stream (these take a `ListStream` and return a `ListStream` instead of having to go through the whole `into_pipeline_data(..)` route).

    - `map`: takes a `FnMut(Value) -> Value`

    - `modify`: takes a function to modify the inner stream.
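The `map`/`modify` pair can be sketched on a simplified wrapper. Here `Value` is just `i64` and `Span` a pair of offsets. `ListStream` below is a hypothetical miniature; the real methods' signatures may differ. Both methods consume the stream and return a new one with the same span, so no conversion to pipeline data is needed.

```rust
/// Hypothetical stand-ins: `Value` is an integer and `Span` a pair of offsets.
type Value = i64;

#[derive(Debug, Clone, Copy, PartialEq)]
struct Span {
    start: usize,
    end: usize,
}

/// Simplified list stream that hides its inner iterator and carries a span.
struct ListStream {
    stream: Box<dyn Iterator<Item = Value> + Send>,
    span: Span,
}

impl ListStream {
    fn new(iter: impl Iterator<Item = Value> + Send + 'static, span: Span) -> Self {
        ListStream { stream: Box::new(iter), span }
    }

    fn span(&self) -> Span {
        self.span
    }

    /// Apply `f` to every value, keeping the span.
    fn map(self, f: impl FnMut(Value) -> Value + Send + 'static) -> Self {
        ListStream { stream: Box::new(self.stream.map(f)), span: self.span }
    }

    /// Replace the inner iterator with one built from it (filter, take, chain, ...).
    fn modify<I>(self, f: impl FnOnce(Box<dyn Iterator<Item = Value> + Send>) -> I) -> Self
    where
        I: Iterator<Item = Value> + Send + 'static,
    {
        ListStream { stream: Box::new(f(self.stream)), span: self.span }
    }
}

impl Iterator for ListStream {
    type Item = Value;
    fn next(&mut self) -> Option<Value> {
        self.stream.next()
    }
}

fn main() {
    let stream = ListStream::new(1..=4, Span { start: 0, end: 5 });
    let out: Vec<Value> = stream.map(|v| v * 10).modify(|it| it.filter(|v| *v > 10)).collect();
    println!("{out:?}"); // [20, 30, 40]
}
```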

# Description

This PR migrates the benchmark suite to Tango. It's different from other frameworks because it requires two binaries to do A/B benchmarking. This is currently limited to Linux and macOS (Windows requires a nightly rustc flag). By switching between the two suites, it can reduce noise and run the code "almost" concurrently.

I have been in contact with the maintainer, and based this on the dev branch, as it had a newer API similar to Criterion's. Compared to Divan, this framework also has a simple file dump system if we want to generate graphs or do other analysis later. Overall, I think this crate is very nice; it is a lot faster to compile and run than Criterion, that's for sure.

# Description

this PR

- moves the documentation from `lib.rs` to `README.md` while still including it in the lib file, so that both the [crates.io page](https://crates.io/crates/nuon) and the [documentation](https://docs.rs/nuon/latest/nuon/) show the top-level doc

- mentions that comments are allowed in NUON

- adds a JSON-NUON example

- puts back the formatting of NOTE blocks in the doc

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description

Our current flattening code creates a bunch of intermediate `Vec`s for each function call. These intermediate `Vec`s are then usually appended to the current `output` `Vec`. By instead passing a mutable reference to the `output` `Vec` to each flattening function, this `Vec` can be reused and appended to directly, thereby eliminating the need for intermediate `Vec`s in most cases.
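The two styles can be compared on a toy tree. `Expr` and both functions are hypothetical miniatures of a flattening pass, not nushell's code. Both produce the same output, but `flatten_into` allocates only the single buffer the caller passes in.

```rust
/// Hypothetical, tiny expression tree; flattening yields (span_start, kind) pairs.
enum Expr {
    Int(usize),             // span start of an int literal
    Call(usize, Vec<Expr>), // span start of the call head, then its arguments
}

/// Old style: every call allocates and returns its own Vec that the caller appends.
fn flatten_alloc(expr: &Expr) -> Vec<(usize, &'static str)> {
    match expr {
        Expr::Int(start) => vec![(*start, "int")],
        Expr::Call(start, args) => {
            let mut out = vec![(*start, "call")];
            for arg in args {
                out.extend(flatten_alloc(arg)); // intermediate Vec per argument
            }
            out
        }
    }
}

/// New style: every call appends to one shared output buffer.
fn flatten_into(expr: &Expr, output: &mut Vec<(usize, &'static str)>) {
    match expr {
        Expr::Int(start) => output.push((*start, "int")),
        Expr::Call(start, args) => {
            output.push((*start, "call"));
            for arg in args {
                flatten_into(arg, output);
            }
        }
    }
}

fn main() {
    let expr = Expr::Call(0, vec![Expr::Int(5), Expr::Call(7, vec![Expr::Int(9)])]);
    let mut output = Vec::new();
    flatten_into(&expr, &mut output);
    println!("{output:?}");
    println!("same result: {}", output == flatten_alloc(&expr));
}
```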

Follow-up to #12757

Always ensure that `Cargo.toml` specifies the full minimum version required for the correct behavior or the features used. Otherwise, a missing semver component is treated as `0`, which could allow resolving to an older version that lacks them.

# Description

Make typos config more strict: ignore false positives where they occur.

1. Ignore only files with typos

2. Add regexes with context

3. Ignore variable names only in Rust code

4. Ignore only 1 "identifier"

5. Check dot files

🎁 Extra bonus: fix typos!!

# Description

Spaces in column names were causing an issue with `into sqlite`.

This is because the column names were not wrapped in backticks, which allow SQLite to interpret the column name correctly.

The issue has been addressed by adding backticks around the column names in `into sqlite`. The column names returned when using `open` are unchanged and appear without backticks, as expected.
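Identifier quoting of this kind can be sketched as below. SQLite accepts MySQL-style backtick-quoted identifiers. `quote_column` and `create_table_sql` are hypothetical helpers. The all-`TEXT` column type is a simplification, and doubling embedded backticks is an extra assumption not mentioned in the PR description.

```rust
/// Hypothetical helper: wrap a column name in backticks so names containing
/// spaces are treated as a single identifier. Doubling embedded backticks
/// is an extra assumption, not something the PR description mentions.
fn quote_column(name: &str) -> String {
    format!("`{}`", name.replace('`', "``"))
}

/// Build a simple CREATE TABLE statement (all columns TEXT, for illustration only).
fn create_table_sql(table: &str, columns: &[&str]) -> String {
    let cols: Vec<String> = columns
        .iter()
        .map(|c| format!("{} TEXT", quote_column(c)))
        .collect();
    format!("CREATE TABLE {} ({})", table, cols.join(", "))
}

fn main() {
    // CREATE TABLE people (`first name` TEXT, `age` TEXT)
    println!("{}", create_table_sql("people", &["first name", "age"]));
}
```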

fixes #12700

# User-Facing Changes

N/A

# Tests + Formatting

Formatting has been respected.

The repro steps from the issue have been run multiple times. New values get added to the correct columns as expected.

# Description

Bumps `base64` to 0.22.1, which fixes the alphabet used for binhex encoding and decoding. This required updating some expected test output.

Related to PR #12469, where `base64` was also bumped and ran into the failing tests.

# User-Facing Changes

Bug fix, but still changes binhex encoding and decoding output.

# Tests + Formatting

Updated test expected output.

PR https://github.com/nushell/nushell/pull/12603 made it so that PWD can

never contain a trailing slash. However, a root path (such as `/` or

`C:\`) technically counts as "having a trailing slash", so now `cd /`

doesn't work.

I feel dumb for missing such an obvious edge case. Let's just merge this

quickly before anyone else finds out...

EDIT: It appears I'm too late.
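The edge case can be sketched with two hypothetical validity checks (not Nushell's actual code), using Unix-style paths only. A naive "no trailing slash" rule rejects `/`, while a root-aware rule allows the trailing separator when the path is a root. `Path::parent` returns `None` for a root, which is what the second check relies on; handling `C:\` would need Windows path semantics and is not covered here.

```rust
use std::path::Path;

/// Naive invariant: reject any PWD ending in '/'. This wrongly rejects "/".
fn naive_is_valid_pwd(pwd: &str) -> bool {
    !pwd.ends_with('/')
}

/// Root-aware invariant: a trailing '/' is only allowed when the path is a root.
fn is_valid_pwd(pwd: &str) -> bool {
    // `Path::new("/").parent()` is `None`; any non-root path has a parent.
    !pwd.ends_with('/') || Path::new(pwd).parent().is_none()
}

fn main() {
    for pwd in ["/", "/home/user", "/home/user/"] {
        println!(
            "{pwd:?}: naive={} root-aware={}",
            naive_is_valid_pwd(pwd),
            is_valid_pwd(pwd)
        );
    }
}
```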

# Description

Fixes #12758.

#12662 introduced a bug where calling `cd` with a path that has a trailing slash would set `PWD` to a path including that trailing slash, which is not allowed. This PR adds a helper to `nu_path` that removes the trailing slash, and uses it in the `cd` command to clean up the path before setting `PWD`.
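The PR does not name the helper here, so the sketch below uses a hypothetical `strip_trailing_slashes` and handles Unix-style separators only. It trims trailing `/` characters but keeps a lone `/` for the root, so it does not reintroduce the `cd /` problem.

```rust
/// Removes trailing '/' separators, but never reduces the root "/" to "".
fn strip_trailing_slashes(path: &str) -> &str {
    let trimmed = path.trim_end_matches('/');
    if trimmed.is_empty() && path.starts_with('/') {
        // The input consisted only of slashes, i.e. the root: keep one "/".
        "/"
    } else {
        trimmed
    }
}

fn main() {
    // A `cd`-like caller would clean the target before storing it as PWD.
    for target in ["/home/user/", "/home/user//", "/", "relative/dir/"] {
        println!("{target:?} -> {:?}", strip_trailing_slashes(target));
    }
}
```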

# Tests + Formatting

I added some tests to make sure we don't regress on this in the future.

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`


- fixes #11922

- fixes #12203

# Description


This is a rewrite of some parts of the recursive completion system. Rust's `std::path` structures often ignore things like a trailing `.`, because in a complete path it just refers to the current directory. We are replacing some uses of these structs with `String`s.

A side effect is that slashes are normalized on Windows. For example, if we type `foo/bar/b`, it completes to `foo\bar\baz`, because the backslash is the main separator on Windows.
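To illustrate the `std::path` behavior mentioned above: `Path::components` normalizes away `.` (except at the start of a path) and trailing separators, so `foo/.`, `foo/` and `foo` are indistinguishable component-wise, while the raw string still records what was typed. Both helper names below are hypothetical.

```rust
use std::path::Path;

/// True if `std::path` sees both spellings as the same sequence of components.
fn same_components(a: &str, b: &str) -> bool {
    Path::new(a).components().eq(Path::new(b).components())
}

/// A check that only works on the raw string: did the user type a trailing "."?
fn ends_with_dot_segment(input: &str) -> bool {
    input == "." || input.ends_with("/.")
}

fn main() {
    // Component-wise, "foo/." and "foo/" look exactly like "foo" ...
    println!("{}", same_components("foo/.", "foo"));
    println!("{}", same_components("foo/", "foo"));
    // ... but a leading "." is kept as a `CurDir` component.
    println!("{}", same_components("./foo", "foo"));
    // The string itself still remembers the trailing ".".
    println!("{}", ends_with_dot_segment("foo/."));
}
```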

# User-Facing Changes


Relative paths are preserved. `..`s in the paths won't eagerly show

completions from the parent path. For example, `asd/foo/../b` will now

complete to `asd/foo/../bar` instead of `asd/bar`.
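A minimal string-based sketch of the new behavior, assuming Unix-style `/` input and hypothetical helpers `split_input` and `complete` (not Nushell's completer). Directory listing is left out: `entries` stands in for the names found in the directory the prefix points to. Because the prefix is never parsed into a path, `..` stays in the completion exactly as typed.

```rust
/// Splits typed input at its last '/' into (prefix kept verbatim, partial name).
fn split_input(input: &str) -> (&str, &str) {
    match input.rfind('/') {
        Some(i) => (&input[..=i], &input[i + 1..]),
        None => ("", input),
    }
}

/// Completes the last segment against `entries`, re-attaching the prefix
/// exactly as the user typed it (no eager resolution of "..").
fn complete(input: &str, entries: &[&str]) -> Vec<String> {
    let (prefix, partial) = split_input(input);
    entries
        .iter()
        .filter(|name| name.starts_with(partial))
        .map(|name| format!("{prefix}{name}"))
        .collect()
}

fn main() {
    // Pretend these are the entries of `asd/foo/..`, i.e. of `asd`.
    let entries = ["bar", "baz", "qux"];
    for suggestion in complete("asd/foo/../b", &entries) {
        println!("{suggestion}");
    }
}
```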



# Description

I added some more tests to our mighty `polars` ~~, yet I don't know how to add expected results in some of them. I would like to ask for help.~~

~~My experiments are in the last commit: [polars: experiments](f7e5e72019). Without those experiments `cargo test` goes well.~~

UPD: I moved my unsuccessful test experiments out into a separate [branch](https://github.com/maxim-uvarov/nushell/blob/polars-tests-broken2/).

So this branch seems ready to merge.

@ayax79, could you find some time to review this, please? It's not urgent for sure.

P.S. I'm very new to git. Please feel free to give me any suggestions on how to use it better.

# Description

Judiciously avoid allocations and clones by changing function signatures:

- **Don't pass str by value unnecessarily if only read**

- **Don't require a vec in `Sandbox::with_files`**

- **Remove unnecessary string clone**

- **Fixup unnecessary borrow**

- **Use `&str` in shape color instead**

- **Vec -> Slice**

- **Elide string clone**

- **Elide `Path` clone**

- **Take &str to elide clone in tests**
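The general idea behind several of these commits can be sketched with two before/after pairs. These are hypothetical functions, not the actual Nushell signatures (`Sandbox::with_files` and the shape-color code are not reproduced here): a read-only function that takes `String` forces callers to allocate, and a function that demands a `Vec` rejects arrays and static data that a slice would accept.

```rust
/// Before: takes ownership although it only reads, so a caller holding a
/// `&str` must allocate a `String` just to call it.
fn count_words_owned(text: String) -> usize {
    text.split_whitespace().count()
}

/// After: borrowing is enough for read-only access.
fn count_words(text: &str) -> usize {
    text.split_whitespace().count()
}

/// Before: demands a `Vec<String>`, forcing allocation even for static data.
fn total_len_vec(names: Vec<String>) -> usize {
    names.iter().map(|n| n.len()).sum()
}

/// After: a slice of `&str` accepts arrays, `Vec`s and static data as-is.
fn total_len(names: &[&str]) -> usize {
    names.iter().map(|n| n.len()).sum()
}

fn main() {
    let owned = String::from("hello nu world");
    // `&String` coerces to `&str`, so no clone is needed here.
    println!("{}", count_words(&owned));
    // The owned version needs a clone (or a move) of the same data.
    println!("{}", count_words_owned(owned.clone()));
    // A static array works directly with the slice-based version.
    println!("{}", total_len(&["a.txt", "b.txt"]));
    println!("{}", total_len_vec(vec!["a.txt".to_string(), "b.txt".to_string()]));
}
```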

# User-Facing Changes

None

# Tests + Formatting

This touches many tests, but only to change owned data to borrowed/static data.

Currently, errors just create empty entries in the resulting dataframes.

This changeset is meant to help debug #12004, though more generally I think it's worth having a way to make errors visible in this kind of pipeline.

An example of what this looks like:

<img width="954" alt="image" src="https://github.com/nushell/nushell/assets/1408472/2c3c9167-2aaf-4f87-bab5-e8302d7a1170">

This is the first PR towards migrating to a new `$env.PWD` API that

returns potentially un-canonicalized paths. Refer to PR #12515 for

motivations.

## New API: `EngineState::cwd()`

The goal of the new API is to cover both the parse-time and runtime use cases, and to avoid unintentional misuse. It takes an `Option<Stack>` as argument which, if supplied, makes it search for `$env.PWD` on the stack in addition to the engine state. I think this design causes less confusion between parse-time and runtime environments: if you have access to a stack, just supply it; otherwise supply `None`.
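The lookup order can be sketched with simplified stand-ins for `EngineState` and `Stack` (hypothetical structs with a plain `HashMap` of environment variables, not Nushell's real types). The stack is passed by reference here, and only the "stack first, then engine state" fallback is modeled; the real method's validation and error type are not reproduced.

```rust
use std::collections::HashMap;
use std::path::PathBuf;

/// Simplified stand-in for the runtime stack (hypothetical).
struct Stack {
    env_vars: HashMap<String, String>,
}

/// Simplified stand-in for the engine state (hypothetical).
struct EngineState {
    env_vars: HashMap<String, String>,
}

impl EngineState {
    /// Looks up PWD on the stack first (runtime), falling back to the engine
    /// state (parse time, or when no stack is available).
    fn cwd(&self, stack: Option<&Stack>) -> Result<PathBuf, String> {
        let pwd = stack
            .and_then(|s| s.env_vars.get("PWD"))
            .or_else(|| self.env_vars.get("PWD"))
            .ok_or_else(|| "$env.PWD not found".to_string())?;
        Ok(PathBuf::from(pwd))
    }
}

fn main() {
    let engine = EngineState {
        env_vars: HashMap::from([("PWD".to_string(), "/engine".to_string())]),
    };
    let stack = Stack {
        env_vars: HashMap::from([("PWD".to_string(), "/stack".to_string())]),
    };
    // Runtime code has a stack and supplies it; parse-time code passes `None`.
    println!("{:?}", engine.cwd(Some(&stack)));
    println!("{:?}", engine.cwd(None));
}
```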

## Deprecation of other PWD-related APIs

Other APIs are re-implemented using `EngineState::cwd()` and properly

documented. They're marked deprecated, but their behavior is unchanged.

Unused APIs are deleted, and code that accesses `$env.PWD` directly

without using an API is rewritten.

Deprecated APIs:

* `EngineState::current_work_dir()`

* `StateWorkingSet::get_cwd()`

* `env::current_dir()`

* `env::current_dir_str()`

* `env::current_dir_const()`

* `env::current_dir_str_const()`

Other changes:

* `EngineState::get_cwd()` (deleted)

* `StateWorkingSet::list_env()` (deleted)

* `repl::do_run_cmd()` (rewritten with `env::current_dir_str()`)
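The deprecation approach described here can be sketched with Rust's built-in `#[deprecated]` and `#[allow(deprecated)]` attributes. The `EngineState` below is a hypothetical one-field stand-in: the old method is kept with unchanged behavior but re-implemented on top of the new one, and a call site that has not been migrated yet opts out of the warning, assuming CI builds with `-D warnings`.

```rust
/// Simplified stand-in for the engine state (hypothetical field).
struct EngineState {
    pwd: String,
}

impl EngineState {
    /// New API (simplified): the single place that reads PWD.
    fn cwd(&self) -> &str {
        &self.pwd
    }

    /// Old API kept for compatibility: same behavior, now built on `cwd()`.
    #[deprecated(note = "use `EngineState::cwd()` instead")]
    fn current_work_dir(&self) -> String {
        self.cwd().to_string()
    }
}

/// A call site not migrated yet; `allow(deprecated)` silences the lint here
/// so that builds treating warnings as errors keep passing.
#[allow(deprecated)]
fn legacy_caller(engine: &EngineState) -> String {
    engine.current_work_dir()
}

fn main() {
    let engine = EngineState { pwd: "/home/user".to_string() };
    // Old and new APIs agree, since one is implemented in terms of the other.
    assert_eq!(legacy_caller(&engine), engine.cwd());
    println!("{}", engine.cwd());
}
```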

## `cd` and `pwd` now use logical paths by default

This pulls the changes from PR #12515. It's currently somewhat broken

because using non-canonicalized paths exposed a bug in our path

normalization logic (Issue #12602). Once that is fixed, this should

work.
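"Logical" is used here in the sense of POSIX `cd -L`/`pwd -L`: `..` removes the previous component of the path as written, instead of following symlinks the way `std::fs::canonicalize` does. A minimal lexical sketch, assuming an absolute Unix-style `pwd` and a hypothetical `logical_join` helper (this is not Nushell's normalization code, which Issue #12602 is about):

```rust
/// Lexically resolves `rel` against an absolute, logical `pwd`:
/// "." and empty segments are dropped and ".." pops the previous component.
/// The filesystem is never touched, so symlinks are not resolved.
fn logical_join(pwd: &str, rel: &str) -> String {
    // An absolute `rel` replaces the current directory entirely.
    let mut parts: Vec<&str> = if rel.starts_with('/') {
        Vec::new()
    } else {
        pwd.split('/').filter(|s| !s.is_empty()).collect()
    };
    for seg in rel.split('/') {
        match seg {
            "" | "." => {}
            ".." => {
                // Popping past the root is a no-op, so "/" + ".." stays "/".
                parts.pop();
            }
            other => parts.push(other),
        }
    }
    format!("/{}", parts.join("/"))
}

fn main() {
    // If `link` were a symlink, a physical `cd ..` could land elsewhere;
    // the logical result simply drops the last component.
    println!("{}", logical_join("/home/user/link", ".."));
    println!("{}", logical_join("/home/user", "./docs/../src"));
}
```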

## Future plans

This PR needs some tests. Which test helpers should I use, and where

should I put those tests?

I noticed that unquoted paths are expanded within `eval_filepath()` and

`eval_directory()` before they even reach the `cd` command. This means

every path is expanded twice. Is this intended?

Once this PR lands, the plan is to review all usages of the deprecated

APIs and migrate them to `EngineState::cwd()`. In the meantime, these

usages are annotated with `#[allow(deprecated)]` to avoid breaking CI.

---------

Co-authored-by: Jakub Žádník <kubouch@gmail.com>

# Description

This fixes #12724. NetBSD is confirmed to work with this change.

The update also behaves a bit better in some ways: it automatically unlinks and reclaims sockets on Unix, and doesn't try to flush/sync the socket on Windows, so I was able to remove that platform-specific logic.