# Description

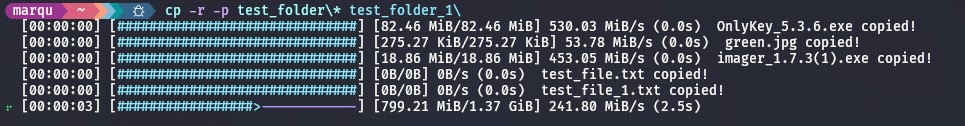

This PR updates the toolkit and the build/install scripts to include `--locked`, adds the `extra` feature to the _all_ scripts, and adds `--force` to the install scripts.

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This helps us keep track of breaking changes. -->

# Tests + Formatting

<!--
Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect -A clippy::result_large_err` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass
- `cargo run -- -c "use std testing; testing run-tests --path crates/nu-std"` to run the tests for the standard library

> **Note**
> from `nushell` you can also use the `toolkit` as follows
> ```bash
> use toolkit.nu # or use an `env_change` hook to activate it automatically
> toolkit check pr
> ```
-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date. -->

# Description

This PR changes the signature of the `help` command so that it can return a `Type::Table`.

Closes #10077

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description

We had globally allowed two default clippy lints because of false positives. Instead, they should be addressed locally, with an explicit note on what causes the false positive or why a particular design choice was made in the code.
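A minimal sketch of what such a local, documented exception can look like. It assumes the lint in question is `clippy::result_large_err` (one of the two lints the PR template's clippy command allows with `-A`); `BigError` and `parse_positive` are hypothetical names, not Nushell code. The `#[allow]` is scoped to a single function and paired with a comment explaining why the exception is acceptable.

```rust
/// Hypothetical error type carrying a large inline diagnostic buffer, which makes
/// `Result<T, BigError>` large enough to trigger `clippy::result_large_err`.
#[derive(Debug)]
pub struct BigError {
    pub message: String,
    pub context: [u8; 256],
}

// Local, documented exception instead of a workspace-wide `-A` flag:
// this (hypothetical) function is not on a hot path, and boxing the error
// would add an allocation without making the code any clearer.
#[allow(clippy::result_large_err)]
pub fn parse_positive(input: &str) -> Result<i64, BigError> {
    match input.trim().parse::<i64>() {
        Ok(n) if n > 0 => Ok(n),
        _ => Err(BigError {
            message: format!("expected a positive integer, got {input:?}"),
            context: [0; 256],
        }),
    }
}

fn main() {
    for input in ["42", "-1"] {
        match parse_positive(input) {
            Ok(n) => println!("{input}: ok {n}"),
            Err(e) => println!("{input}: {} ({} bytes of context)", e.message, e.context.len()),
        }
    }
}
```

Returning `Result<i64, Box<BigError>>` would be the usual way to satisfy the lint instead; the sketch only shows where the justification lives when the exception is kept.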

# User-Facing Changes

None

# Tests + Formatting

With this change, clippy should catch a few more things out of the box. If you encounter a false positive, you will need to address it on a case-by-case basis.

If you have a `config` variable defined at some point after reading the config files, Nushell would print

```
warning: use `$env.config = ...` instead of `let config = ...`
```

I think it's been long enough since we switched to `$env.config` that we can remove this. Furthermore, the check would belong in `let` parsing, because you can also end up with a `config` constant simply by importing a `config` module (that was my case), so the warning can be misleading.

<!--
if this PR closes one or more issues, you can automatically link the PR with them by using one of the [*linking keywords*](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue#linking-a-pull-request-to-an-issue-using-a-keyword), e.g.
- this PR should close #xxxx
- fixes #xxxx

you can also mention related issues, PRs or discussions!
-->

# Description

<!--
Thank you for improving Nushell. Please, check our [contributing guide](../CONTRIBUTING.md) and talk to the core team before making major changes.

Description of your pull request goes here. **Provide examples and/or screenshots** if your changes affect the user experience.
-->

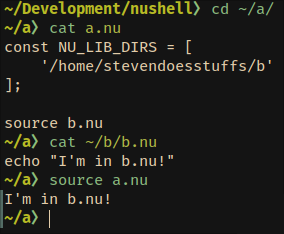

https://github.com/nushell/nushell/pull/9773 introduced constants to modules and allowed exporting them, but only one level deep. This PR:

* allows recursive exporting of constants from all submodules

* fixes submodule imports in a list import pattern

* makes sure exported constants are actual constants

Should unblock https://github.com/nushell/nushell/pull/9678

### Example:

```nushell
module spam {
    export module eggs {
        export module bacon {
            export const viking = 'eats'
        }
    }
}

use spam
print $spam.eggs.bacon.viking # prints 'eats'

use spam [eggs]
print $eggs.bacon.viking # prints 'eats'

use spam eggs bacon viking
print $viking # prints 'eats'
```
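To illustrate what recursive export means for the example above, here is a small sketch under stated assumptions: `Module` and `Value` are hypothetical, simplified stand-ins (not Nushell's actual types), and exporting a module means turning it into a record in which every exported submodule becomes a nested record, at any depth rather than only one level.

```rust
use std::collections::BTreeMap;

/// Hypothetical, simplified module: exported constants plus exported submodules.
#[derive(Debug, Default)]
struct Module {
    constants: BTreeMap<String, String>,
    submodules: BTreeMap<String, Module>,
}

/// Hypothetical, simplified value: a string or a record of named values.
#[derive(Debug, PartialEq)]
enum Value {
    Str(String),
    Record(BTreeMap<String, Value>),
}

/// Constants become fields; each submodule becomes a nested record, recursively.
fn module_to_record(module: &Module) -> Value {
    let mut fields = BTreeMap::new();
    for (name, value) in &module.constants {
        fields.insert(name.clone(), Value::Str(value.clone()));
    }
    for (name, sub) in &module.submodules {
        fields.insert(name.clone(), module_to_record(sub));
    }
    Value::Record(fields)
}

/// Follow a cell path such as `eggs.bacon.viking` through nested records.
fn follow<'a>(value: &'a Value, path: &[&str]) -> Option<&'a Value> {
    match path.split_first() {
        None => Some(value),
        Some((head, rest)) => match value {
            Value::Record(fields) => follow(fields.get(*head)?, rest),
            Value::Str(_) => None,
        },
    }
}

/// Builds the `spam` module from the example above.
fn example_spam() -> Module {
    let mut bacon = Module::default();
    bacon.constants.insert("viking".to_string(), "eats".to_string());
    let mut eggs = Module::default();
    eggs.submodules.insert("bacon".to_string(), bacon);
    let mut spam = Module::default();
    spam.submodules.insert("eggs".to_string(), eggs);
    spam
}

fn main() {
    let spam = module_to_record(&example_spam());
    // `use spam; $spam.eggs.bacon.viking`
    println!("{:?}", follow(&spam, &["eggs", "bacon", "viking"]));
    // `use spam [eggs]; $eggs.bacon.viking`: the submodule itself is a record
    let eggs = follow(&spam, &["eggs"]);
    println!("{:?}", eggs.and_then(|e| follow(e, &["bacon", "viking"])));
}
```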

### Limitation 1:

Considering the above `spam` module, getting `eggs bacon` from the `spam` module doesn't work directly:

```nushell
use spam [ eggs bacon ] # attempts to load `eggs`, then `bacon`
use spam [ "eggs bacon" ] # obviously the wrong name for a constant, but it doesn't work for commands either
```

Workaround (for example):

```nushell
use spam eggs
use eggs [ bacon ]
print $bacon.viking # prints 'eats'
```

I'm inclined to leave this as is, since it is easy to work around. It is also a limitation of the import pattern in general, not something specific to constants.

### Limitation 2:

`overlay use` successfully imports the constants, but `overlay hide` does not hide them, even though it seems to hide normal variables successfully. This needs more investigation.

# User-Facing Changes


Allows recursive constant exports from submodules.

# Tests + Formatting

# After Submitting

# Description

This PR attempts to fix `into datetime`. Previously it supported few input formats, and `--format` was clunky. `--format` is still a bit clunky, but it works. The big change is that `into datetime` now first tries to use `dtparse` to convert text into a datetime.

### Before

```nushell
❯ '20220604' | into datetime
Thu, 01 Jan 1970 00:00:00 +0000 (53 years ago)
```

### After

```nushell
❯ '20220604' | into datetime
Sat, 04 Jun 2022 00:00:00 -0500 (a year ago)
```
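The "permissive parser first, older path as fallback" idea can be sketched with the standard library alone. Both parsers below are hypothetical stand-ins (this does not call `dtparse`, and it is not Nushell's code), and they handle dates only, with no time or UTC offset; the point is the ordered chain in which the first successful parser wins.

```rust
/// A calendar date only; time and offset are left out to keep the sketch small.
#[derive(Debug, PartialEq)]
struct Date {
    year: i32,
    month: u32,
    day: u32,
}

/// Basic range check shared by both parsers.
fn valid(d: Date) -> Option<Date> {
    ((1..=12).contains(&d.month) && (1..=31).contains(&d.day)).then_some(d)
}

/// Hypothetical stand-in for a permissive parser: compact `YYYYMMDD` only.
fn parse_compact(s: &str) -> Option<Date> {
    if s.len() != 8 || !s.bytes().all(|b| b.is_ascii_digit()) {
        return None;
    }
    valid(Date {
        year: s[0..4].parse().ok()?,
        month: s[4..6].parse().ok()?,
        day: s[6..8].parse().ok()?,
    })
}

/// Hypothetical stand-in for a stricter fallback: `YYYY-MM-DD` only.
fn parse_dashed(s: &str) -> Option<Date> {
    let mut parts = s.splitn(3, '-');
    valid(Date {
        year: parts.next()?.parse().ok()?,
        month: parts.next()?.parse().ok()?,
        day: parts.next()?.parse().ok()?,
    })
}

/// Try each parser in order and return the first success.
fn parse_date(s: &str) -> Option<Date> {
    let parsers: [fn(&str) -> Option<Date>; 2] = [parse_compact, parse_dashed];
    parsers.iter().find_map(|p| p(s.trim()))
}

fn main() {
    for input in ["20220604", "2022-06-04", "2022-13-04"] {
        println!("{input:>12} -> {:?}", parse_date(input));
    }
}
```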

## Supported Input Formats

`dtparse` should support all of the following formats, taken from its [repo](https://github.com/bspeice/dtparse/blob/master/build_pycompat.py):

```python
'test_parse_default': [
    "Thu Sep 25 10:36:28",
    "Sep 10:36:28", "10:36:28", "10:36", "Sep 2003", "Sep", "2003",
    "10h36m28.5s", "10h36m28s", "10h36m", "10h", "10 h 36", "10 h 36.5",
    "36 m 5", "36 m 5 s", "36 m 05", "36 m 05 s", "10h am", "10h pm",
    "10am", "10pm", "10:00 am", "10:00 pm", "10:00am", "10:00pm",
    "10:00a.m", "10:00p.m", "10:00a.m.", "10:00p.m.",
    "October", "31-Dec-00", "0:01:02", "12h 01m02s am", "12:08 PM",
    "01h02m03", "01h02", "01h02s", "01m02", "01m02h", "2004 10 Apr 11h30m",
    # testPertain
    'Sep 03', 'Sep of 03',
    # test_hmBY - Note: This appears to be Python 3 only, no idea why
    '02:17NOV2017',
    # Weekdays
    "Thu Sep 10:36:28", "Thu 10:36:28", "Wed", "Wednesday"
],
'test_parse_simple': [
    "Thu Sep 25 10:36:28 2003", "Thu Sep 25 2003", "2003-09-25T10:49:41",
    "2003-09-25T10:49", "2003-09-25T10", "2003-09-25", "20030925T104941",
    "20030925T1049", "20030925T10", "20030925", "2003-09-25 10:49:41,502",
    "199709020908", "19970902090807", "2003-09-25", "09-25-2003",
    "25-09-2003", "10-09-2003", "10-09-03", "2003.09.25", "09.25.2003",
    "25.09.2003", "10.09.2003", "10.09.03", "2003/09/25", "09/25/2003",
    "25/09/2003", "10/09/2003", "10/09/03", "2003 09 25", "09 25 2003",
    "25 09 2003", "10 09 2003", "10 09 03", "25 09 03", "03 25 Sep",
    "25 03 Sep", " July 4 , 1976 12:01:02 am ",
    "Wed, July 10, '96", "1996.July.10 AD 12:08 PM", "July 4, 1976",
    "7 4 1976", "4 jul 1976", "7-4-76", "19760704",
    "0:01:02 on July 4, 1976", "0:01:02 on July 4, 1976",
    "July 4, 1976 12:01:02 am", "Mon Jan 2 04:24:27 1995",
    "04.04.95 00:22", "Jan 1 1999 11:23:34.578", "950404 122212",
    "3rd of May 2001", "5th of March 2001", "1st of May 2003",
    '0099-01-01T00:00:00', '0031-01-01T00:00:00',
    "20080227T21:26:01.123456789", '13NOV2017', '0003-03-04',
    'December.0031.30',
    # testNoYearFirstNoDayFirst
    '090107',
    # test_mstridx
    '2015-15-May',
],
'test_parse_tzinfo': [
    'Thu Sep 25 10:36:28 BRST 2003', '2003 10:36:28 BRST 25 Sep Thu',
],
'test_parse_offset': [
    'Thu, 25 Sep 2003 10:49:41 -0300', '2003-09-25T10:49:41.5-03:00',
    '2003-09-25T10:49:41-03:00', '20030925T104941.5-0300',
    '20030925T104941-0300',
    # dtparse-specific
    "2018-08-10 10:00:00 UTC+3", "2018-08-10 03:36:47 PM GMT-4", "2018-08-10 04:15:00 AM Z-02:00"
],
'test_parse_dayfirst': [
    '10-09-2003', '10.09.2003', '10/09/2003', '10 09 2003',
    # testDayFirst
    '090107',
    # testUnambiguousDayFirst
    '2015 09 25'
],
'test_parse_yearfirst': [
    '10-09-03', '10.09.03', '10/09/03', '10 09 03',
    # testYearFirst
    '090107',
    # testUnambiguousYearFirst
    '2015 09 25'
],
'test_parse_dfyf': [
    # testDayFirstYearFirst
    '090107',
    # testUnambiguousDayFirstYearFirst
    '2015 09 25'
],
'test_unspecified_fallback': [
    'April 2009', 'Feb 2007', 'Feb 2008'
],
'test_parse_ignoretz': [
    'Thu Sep 25 10:36:28 BRST 2003', '1996.07.10 AD at 15:08:56 PDT',
    'Tuesday, April 12, 1952 AD 3:30:42pm PST',
    'November 5, 1994, 8:15:30 am EST', '1994-11-05T08:15:30-05:00',
    '1994-11-05T08:15:30Z', '1976-07-04T00:01:02Z', '1986-07-05T08:15:30z',
    'Tue Apr 4 00:22:12 PDT 1995'
],
'test_fuzzy_tzinfo': [
    'Today is 25 of September of 2003, exactly at 10:49:41 with timezone -03:00.'
],
'test_fuzzy_tokens_tzinfo': [
    'Today is 25 of September of 2003, exactly at 10:49:41 with timezone -03:00.'
],
'test_fuzzy_simple': [
    'I have a meeting on March 1, 1974', # testFuzzyAMPMProblem
    'On June 8th, 2020, I am going to be the first man on Mars', # testFuzzyAMPMProblem
    'Meet me at the AM/PM on Sunset at 3:00 AM on December 3rd, 2003', # testFuzzyAMPMProblem
    'Meet me at 3:00 AM on December 3rd, 2003 at the AM/PM on Sunset', # testFuzzyAMPMProblem
    'Jan 29, 1945 14:45 AM I going to see you there?', # testFuzzyIgnoreAMPM
    '2017-07-17 06:15:', # test_idx_check
],
```

# User-Facing Changes

# Tests + Formatting

# After Submitting

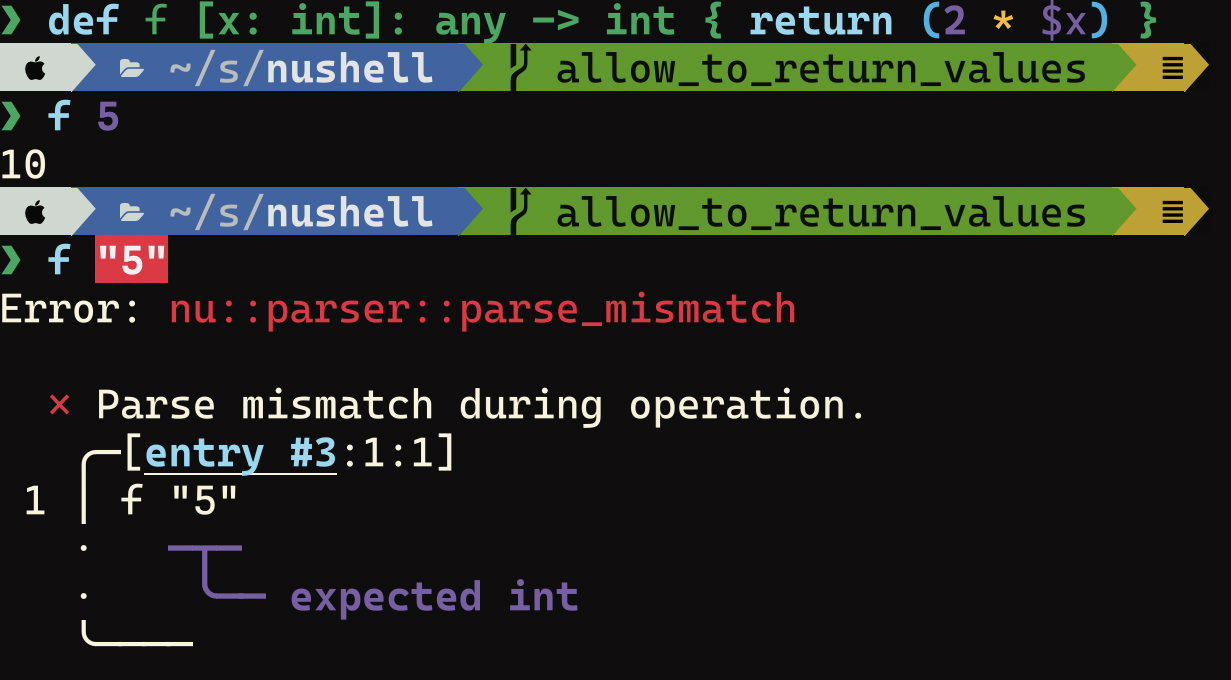

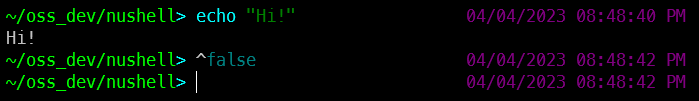

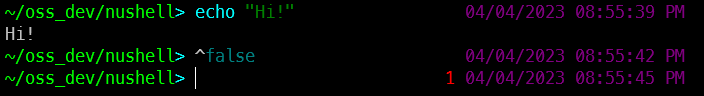

# Description

This PR allows the `return` command to return any nushell value.

### Before

### After

# User-Facing Changes

# Tests + Formatting

# After Submitting

Context: https://github.com/serde-rs/serde/issues/2538

While other projects investigate, this pins serde to the last stable release before the precompiled binary requirement was introduced.

# Description


# User-Facing Changes

# Tests + Formatting

# After Submitting

related to

- https://discord.com/channels/601130461678272522/615253963645911060/1142060647358668841

# Description

To make the code page handling on Windows as general as possible, `chcp` will now only run on Windows when `--charpage` is given an integer.

While I was at it, I fixed the system messages given to `check-clipboard`, because some of them were incorrect (see the second commit, 6865ec9a5).

# User-Facing Changes

This is a breaking change: `std clip` no longer switches the code page to `65001` by itself, so users who relied on that will have to add `--charpage 65001`.

# Tests + Formatting

# After Submitting

# Description

This PR updates some `Example` tests so that they work again. The only one I couldn't figure out is the one in the `filter` command: it should work, but it does not. I left the test in anyway because it's valuable; it just has a `None` result for now. I'd like to fix this, but I'm not sure how.

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description

This PR attempts to document some of the more obscure testbin parameters and what they do. I still don't fully grok all of them.

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description

The default `env.nu` is currently broken after the changes to `str replace`. This PR should fix it.

# User-Facing Changes

# Tests + Formatting

# After Submitting

I only did a few manual tests, so it may be worth testing more before merging.

TODO: a test case may need to be added for this.

Fixes #9993

cc: @fdncred

---------

Signed-off-by: Maxim Zhiburt <zhiburt@gmail.com>

# Description

This PR allows ints to be used as cell paths.

### Before

```nushell
❯ let index = 0
❯ locations | select $index
Error: nu::shell::cant_convert

  × Can't convert to cell path.
   ╭─[entry #26:1:1]
 1 │ locations | select $index
   ·                    ───┬──
   ·                       ╰── can't convert int to cell path
   ╰────
```

### After

```nushell
❯ let index = 0
❯ locations | select $index
╭#┬───────location────────┬city_column┬state_column┬country_column┬lat_column┬lon_column╮
│0│http://ip-api.com/json/│city       │region      │countryCode   │lat       │lon       │
╰─┴───────────────────────┴───────────┴────────────┴──────────────┴──────────┴──────────╯
```
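A sketch of the kind of conversion this enables, using hypothetical, simplified `Value` and `PathMember` types rather than Nushell's own: an int becomes a row index, a string becomes a column name, and anything else still fails with a "can't convert ... to cell path" message. Rejecting negative ints is an assumption made for the sketch.

```rust
/// Hypothetical, simplified value type (not Nushell's `Value`).
#[derive(Debug)]
enum Value {
    Int(i64),
    String(String),
    Bool(bool),
}

/// Hypothetical cell path member: a row index or a column name.
#[derive(Debug, PartialEq)]
enum PathMember {
    Index(usize),
    Column(String),
}

fn type_name(value: &Value) -> &'static str {
    match value {
        Value::Int(_) => "int",
        Value::String(_) => "string",
        Value::Bool(_) => "bool",
    }
}

fn to_path_member(value: &Value) -> Result<PathMember, String> {
    match value {
        Value::String(name) => Ok(PathMember::Column(name.clone())),
        // The case this PR is about: an int is used as a row index.
        // Negative ints are rejected here (an assumption of this sketch).
        Value::Int(i) => usize::try_from(*i)
            .map(PathMember::Index)
            .map_err(|_| format!("can't convert negative int {i} to cell path")),
        other => Err(format!("can't convert {} to cell path", type_name(other))),
    }
}

fn main() {
    let index = Value::Int(0); // `let index = 0`
    println!("{:?}", to_path_member(&index));
    println!("{:?}", to_path_member(&Value::String("city_column".to_string())));
    println!("{:?}", to_path_member(&Value::Bool(true)));
}
```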

# User-Facing Changes

# Tests + Formatting

# After Submitting

related to

- https://discord.com/channels/601130461678272522/614593951969574961/1141009665266831470

# Description

This PR:

- prints a colorful warning when a user uses either `--format` or `--list` on `into datetime`
- does NOT remove the features for now, i.e. the two options still work
- redirects the user to the `format date` command instead

I propose to:

- land this now
- prepare a removal PR right after this
- land the removal PR between 0.84 and 0.85

# User-Facing Changes

`into datetime --format` and `into datetime --list` are now deprecated and will be removed in 0.85.

## how it looks

- `into datetime --list` in the REPL

```nushell
> into datetime --list | first
Error: × Deprecated option
   ╭─[entry #1:1:1]
 1 │ into datetime --list | first
   · ──────┬──────
   ·       ╰── `into datetime --list` is deprecated and will be removed in 0.85
   ╰────
  help: see `format datetime --list` instead

╭───────────────┬────────────────────────────────────────────╮
│ Specification │ %Y │
│ Example │ 2023 │
│ Description │ The full proleptic Gregorian year, │
│ │ zero-padded to 4 digits. │
╰───────────────┴────────────────────────────────────────────╯
```

- `into datetime --list` in a script

```nushell
> nu /tmp/foo.nu
Error: × Deprecated option
   ╭─[/tmp/foo.nu:4:1]
 4 │ #
 5 │ into datetime --list | first
   · ──────┬──────
   ·       ╰── `into datetime --list` is deprecated and will be removed in 0.85
   ╰────
  help: see `format datetime --list` instead

╭───────────────┬────────────────────────────────────────────╮
│ Specification │ %Y │
│ Example │ 2023 │
│ Description │ The full proleptic Gregorian year, │
│ │ zero-padded to 4 digits. │
╰───────────────┴────────────────────────────────────────────╯
```

- `help into datetime`

# Tests + Formatting

# After Submitting


# Description


Removes some leftover dead code.

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description


Winget Releaser now supports non-Windows runners, and `ubuntu-latest` is generally faster.

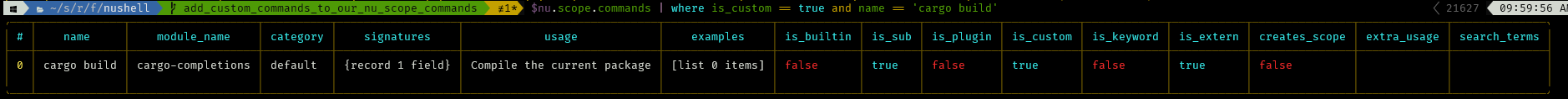

# Description

* All output of `scope` commands is now sorted by the "name" column (previously, `scope externs` and some other commands listed their entries in a seemingly random order).
* The output of `scope externs` no longer contains extra newlines (they were caused by incorrectly creating the usage text of known externals).
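A small sketch of the sorting step, assuming each `scope` row can be modeled as a hypothetical `ScopeRow` with a `name` and a `usage` (not Nushell's actual types): sorting by `name` makes the output independent of whatever order the entries happened to be stored in.

```rust
/// Hypothetical, simplified row of `scope` output.
#[derive(Debug, Clone, PartialEq)]
struct ScopeRow {
    name: String,
    usage: String,
}

/// Sort rows by the `name` column so the output order is deterministic.
fn sort_by_name(mut rows: Vec<ScopeRow>) -> Vec<ScopeRow> {
    rows.sort_by(|a, b| a.name.cmp(&b.name));
    rows
}

/// Convenience constructor for the example data.
fn row(name: &str, usage: &str) -> ScopeRow {
    ScopeRow { name: name.to_string(), usage: usage.to_string() }
}

fn main() {
    let rows = vec![row("zip", "example usage"), row("alias", "example usage"), row("cd", "example usage")];
    for r in sort_by_name(rows) {
        println!("{}: {}", r.name, r.usage);
    }
}
```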

# Description

As described in https://github.com/nushell/nushell/issues/9912, the `http` command could display the request headers with the `--full` flag, which would help in debugging requests. This PR adds that functionality.

# User-Facing Changes

If `http get` or another `http` command that supports the `--full` flag was invoked with that flag, it used to display a `headers` key containing a table of response headers. Now this key contains two nested keys, `response` and `request`, holding the response and request headers respectively.
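The new shape of the `headers` field can be sketched as a record with two tables. This is a hypothetical model (plain `(name, value)` pairs in a `BTreeMap`), not the `http` command's implementation, and the header values in `main` are made-up example data.

```rust
use std::collections::BTreeMap;

/// A header table as (name, value) pairs.
type Headers = Vec<(String, String)>;

/// Hypothetical model of `headers` in `--full` output after this PR:
/// one table for the request and one for the response.
fn full_headers(request: Headers, response: Headers) -> BTreeMap<&'static str, Headers> {
    BTreeMap::from([("request", request), ("response", response)])
}

fn main() {
    // Example data only; real values depend on the server and client.
    let request = vec![("accept".to_string(), "*/*".to_string())];
    let response = vec![("content-type".to_string(), "application/json".to_string())];
    let headers = full_headers(request, response);
    for (kind, table) in &headers {
        println!("{kind}: {table:?}");
    }
}
```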

# Description

This PR enables `select` to take a constructed list of columns as a variable:

```nushell
> let cols = [name type];[[name type size]; [Cargo.toml toml 1kb] [Cargo.lock toml 2kb]] | select $cols
╭#┬───name───┬type╮
│0│Cargo.toml│toml│
│1│Cargo.lock│toml│
╰─┴──────────┴────╯
```

and rows

```nushell
> let rows = [0 2];[[name type size]; [Cargo.toml toml 1kb] [Cargo.lock toml 2kb] [file.json json 3kb]] | select $rows
╭#┬───name───┬type┬size╮
│0│Cargo.toml│toml│1kb │
│1│file.json │json│3kb │
╰─┴──────────┴────┴────╯
```
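Both behaviors can be sketched over a simplified table. The `Row` type and both functions are hypothetical stand-ins, not Nushell's implementation; returning selected rows in table order, at most once each, and skipping columns a row lacks are assumptions of the sketch.

```rust
use std::collections::HashSet;

/// A row as ordered (column, value) pairs; a hypothetical stand-in for a record.
type Row = Vec<(String, String)>;

/// The three-row table from the example above.
fn example_table() -> Vec<Row> {
    [("Cargo.toml", "toml", "1kb"), ("Cargo.lock", "toml", "2kb"), ("file.json", "json", "3kb")]
        .iter()
        .map(|(name, kind, size)| {
            vec![
                ("name".to_string(), name.to_string()),
                ("type".to_string(), kind.to_string()),
                ("size".to_string(), size.to_string()),
            ]
        })
        .collect()
}

/// Keep the rows whose position appears in `indices` (table order, no duplicates).
fn select_rows(table: &[Row], indices: &[usize]) -> Vec<Row> {
    let wanted: HashSet<usize> = indices.iter().copied().collect();
    table
        .iter()
        .enumerate()
        .filter(|(i, _)| wanted.contains(i))
        .map(|(_, row)| row.clone())
        .collect()
}

/// Keep only the requested columns, in the requested order.
fn select_columns(table: &[Row], columns: &[&str]) -> Vec<Row> {
    table
        .iter()
        .map(|row| {
            columns
                .iter()
                .filter_map(|col| row.iter().find(|(name, _)| name.as_str() == *col).cloned())
                .collect::<Row>()
        })
        .collect()
}

fn main() {
    let table = example_table();
    // `let rows = [0 2]; ... | select $rows`
    for row in select_rows(&table, &[0, 2]) {
        println!("{row:?}");
    }
    // `let cols = [name type]; ... | select $cols`
    for row in select_columns(&table, &["name", "type"]) {
        println!("{row:?}");
    }
}
```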

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description

- Adds support for converting between nushell lists and polars lists, instead of treating them as a polars object.
- Fixes `explode` and `flatten` so that they work both as expressions and as lazy dataframe commands. The previous item was required to make this work.

---------

Co-authored-by: Jack Wright <jack.wright@disqo.com>

Co-authored-by: Darren Schroeder <343840+fdncred@users.noreply.github.com>

# Description

In the past, we called the process of completely removing a command and providing a basic error message that points to the new alternative "deprecation".

But this doesn't match the expectations of most users, who have seen deprecation _warnings_ that alert them either to impending removal or to discouraged use after a stability promise.

# User-Facing Changes

Command category changed from `deprecated` to `removed`


# Description

I've been investigating the [issue mentioned](https://github.com/nushell/nushell/pull/9976#issuecomment-1673290467) in my previous PR and found that the `plugin.nu` file, which is used to cache plugin signatures, gets overwritten on every Nushell startup. This can corrupt the file's contents if two or more Nushell instances run simultaneously.

To reproduce:

1. Register at least 2 plugins in your local Nushell.
2. Note how many entries you have in `plugin.nu` with `open $nu.plugin-path | find nu_plugin`.
3. Run either:
   - `cargo test` inside the nushell repo, or
   - something like `1..100 | par-each {|it| $"(random integer 1..100)ms" | into duration | sleep $in; nu -c "$nu.plugin-path"}` to simulate parallel access. This approach reproduces the issue less reliably than running the tests, but it shows that it may actually affect users.
4. Check that entries were stripped from your `plugin.nu` file.


# Solution

In this PR, I've refactored the code that handles the `register` command to minimize code duplication and to make sure that `plugin.nu` is only overwritten when the user calls the command, not on Nushell startup.

Another option would be to use a temporary `plugin.nu` when running tests, but since the issue can actually affect users, I've decided to prevent unnecessary writes altogether. Having an isolated `plugin.nu` for tests is still worth doing, though.

# User-Facing Changes


This actually changes behaviour: calling `register <plugin> <signature>` no longer updates `plugin.nu` and just reads the signatures into memory. However, as I understand it, this kind of call with an explicit signature is meant to be used only by Nushell itself, inside the `plugin.nu` file. I've asked about it on [Discord](https://discordapp.com/channels/601130461678272522/615962413203718156/1140013448915325018).
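The new split can be pictured with the Rust sketch below. `PluginRegistry`, `Outcome`, and the faked signature query are hypothetical stand-ins, not Nushell's plugin types. The sketch only shows the rule described above: the explicit-signature form (the one `plugin.nu` contains) loads into memory without touching the cache, and a bare user call is the only path that writes to it.

```rust
use std::collections::HashMap;

/// What a `register` call did (hypothetical model, not Nushell's types).
#[derive(Debug, PartialEq)]
enum Outcome {
    /// `register <plugin> <signature>`: the signature is only loaded into memory.
    LoadedIntoMemory,
    /// `register <plugin>`: the signature is queried and written to the cache.
    PersistedToCache,
}

#[derive(Default)]
struct PluginRegistry {
    /// Signatures known to the running shell.
    in_memory: HashMap<String, String>,
    /// Stand-in for the lines of `plugin.nu`.
    cache_lines: Vec<String>,
}

impl PluginRegistry {
    fn register(&mut self, plugin: &str, signature: Option<&str>) -> Outcome {
        match signature {
            Some(sig) => {
                // Replaying plugin.nu at startup: read only, the file is never rewritten.
                self.in_memory.insert(plugin.to_string(), sig.to_string());
                Outcome::LoadedIntoMemory
            }
            None => {
                // Explicit user call: query the plugin (faked here) and persist the result.
                let sig = format!("<signature of {plugin}>");
                self.in_memory.insert(plugin.to_string(), sig.clone());
                self.cache_lines.push(format!("register {plugin} {sig}"));
                Outcome::PersistedToCache
            }
        }
    }
}
```

Under this model, replaying the cache at startup leaves the file untouched. That is what removes the race between instances that start at the same time.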


# After Submitting


Actually, I think the way plugins are stored could be reworked to further prevent or mitigate possible issues:

- Writing to the file can still cause problems if we try to register plugins in parallel, because several instances will write to the same file. A lock on the file might be required.
- Using additional parameters on commands like `register` to implement internal logic could mislead users.
- A `register` call actually affects the global state of Nushell, which seems a little inconsistent with the immutability and isolation of other parts of Nu. See issues [1](https://github.com/nushell/nushell/issues/8581), [2](https://github.com/nushell/nushell/issues/8960).


# Description


Fixes #9627

Related: nushell/reedline#602, nushell/reedline#612

# User-Facing Changes


# Tests + Formatting


# After Submitting


Running tests locally from a Nushell session with customizations (e.g. `$env.PROMPT_COMMAND`) can make tests fail, because those customizations leak into the sandboxed `nu` itself.

Remove `FILE_PWD` from the environment.
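The sketch below shows the general technique, not the test harness's actual code. A harness can use `std::process::Command::env_remove` to keep a variable such as `FILE_PWD` from leaking from the caller's shell into the spawned `nu`. `sandboxed_nu` and `strips` are hypothetical helper names, and nothing is spawned here.

```rust
use std::ffi::OsStr;
use std::process::Command;

/// Build a command for a sandboxed `nu` that will not inherit the listed
/// variables from the caller's environment (hypothetical helper).
fn sandboxed_nu(vars_to_strip: &[&str]) -> Command {
    let mut cmd = Command::new("nu");
    for &var in vars_to_strip {
        // env_remove() only records the removal; it takes effect on spawn.
        cmd.env_remove(var);
    }
    cmd
}

/// True if `cmd` is configured to drop `var` from the child's environment.
/// get_envs() reports explicitly removed variables with a `None` value.
fn strips(cmd: &Command, var: &str) -> bool {
    cmd.get_envs()
        .any(|(key, value)| key == OsStr::new(var) && value.is_none())
}
```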

# Tests + Formatting

Tests now pass locally without issues in my case.

# Description

- Add identity cast to `into decimal` (float->float)

- Correct `into decimal` output to concrete float

# User-Facing Changes

`1.23 | into decimal` will now work.

With the output type fixed, it can now be used together with commands that expect `float`/`list<float>`.
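Here is a minimal Rust sketch of the intended semantics. It uses a hypothetical, simplified `Value` enum, not `nu_protocol::Value`, and its string handling is an assumption. Floats pass through unchanged (the new identity cast), and every successful conversion yields a concrete `Value::Float`. That concrete output is what lets downstream commands rely on the `float` type.

```rust
/// Simplified stand-in for a few Nushell value variants (hypothetical).
#[derive(Debug, PartialEq)]
enum Value {
    Int(i64),
    Float(f64),
    String(String),
}

/// Sketch of `into decimal`: a successful conversion always yields `Value::Float`.
fn into_decimal(v: Value) -> Result<Value, String> {
    match v {
        // Identity cast: float -> float.
        Value::Float(f) => Ok(Value::Float(f)),
        // Heterogeneous casts.
        Value::Int(i) => Ok(Value::Float(i as f64)),
        Value::String(s) => s
            .parse::<f64>()
            .map(Value::Float)
            .map_err(|e| format!("can't convert {s:?} to float: {e}")),
    }
}
```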

# Tests + Formatting

Adapts the example to show both an identity cast and a heterogeneous cast.

# Description

This may be easy to find/confuse with `drop`

# User-Facing Changes

Users coming from SQL will be happier when using `help -f` or `F1`

# Tests + Formatting

None

Closes https://github.com/nushell/nushell/issues/9910

I noticed that `watch` was not catching all filesystem changes. Some changes are reported as `ModifyKind::Data(DataChange::Any)`, but we were only handling `ModifyKind::Data(DataChange::Content)`. Easy fix.

This was happening on Ubuntu 23.04, ext4.
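The fix boils down to widening a match. The sketch below uses small local mirrors of the `notify` crate's `ModifyKind` and `DataChange` enums so that it compiles without the dependency. The real enums carry more variants and payloads. The sketch also assumes that after the fix the handled set is `Content` plus `Any`.

```rust
/// Simplified local mirror of notify's `DataChange` (not the real type).
#[allow(dead_code)]
#[derive(Debug, Clone, Copy)]
enum DataChange {
    Any,
    Size,
    Content,
    Other,
}

/// Simplified local mirror of notify's `ModifyKind`; payloads are omitted.
#[allow(dead_code)]
#[derive(Debug, Clone, Copy)]
enum ModifyKind {
    Any,
    Data(DataChange),
    Metadata,
    Name,
    Other,
}

/// Before the fix: only `Data(Content)` counted as a file modification.
fn is_data_change_old(kind: ModifyKind) -> bool {
    matches!(kind, ModifyKind::Data(DataChange::Content))
}

/// After the fix: `Data(Any)`, as reported on some systems, is handled too.
fn is_data_change_new(kind: ModifyKind) -> bool {
    matches!(
        kind,
        ModifyKind::Data(DataChange::Content) | ModifyKind::Data(DataChange::Any)
    )
}
```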

# Description

This PR does three related changes:

* Keeps the originally declared name in help outputs.
* Updates the name of the commands called `main` in the user script to the name of the script.
* Fixes the source of signature information in multiple places. This allows scripts to have more complete help output.

Combined, the above allow the user to see the script name in the help output of scripts, like so:

NOTE: You still declare and call the definition `main`, so from inside the script `main` is still the correct name. But multiple folks agreed that seeing `main` in the script help was confusing, so this PR changes that.

# User-Facing Changes

One potential minor breaking change is that module renames will be shown with their originally defined name rather than the renamed name. I believe this to be a better default.

# Tests + Formatting


# After Submitting


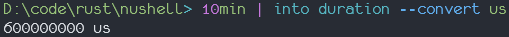

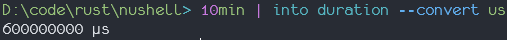

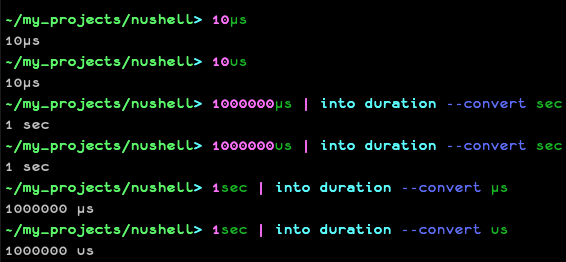

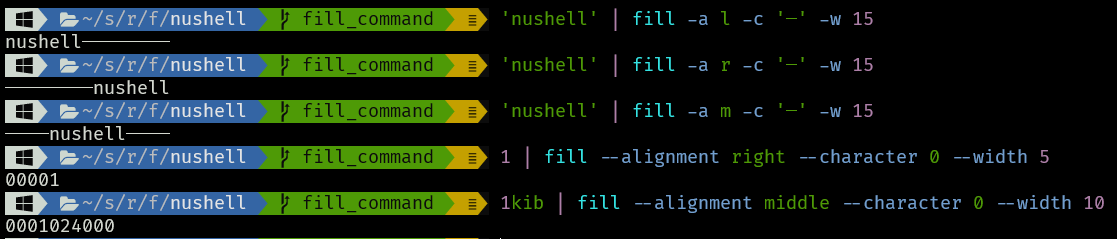

# Description

These two commands (`format filesize` and `format duration`) complement `into duration` and `into filesize` well when you want to coerce a particular string output.

This keeps the old `format` command, with its separate formatting syntax, in `nu-cmd-extra`.

# User-Facing Changes

`format filesize` is accessible again with the default build. The new `format duration` command is also available to everybody.

# Tests + Formatting

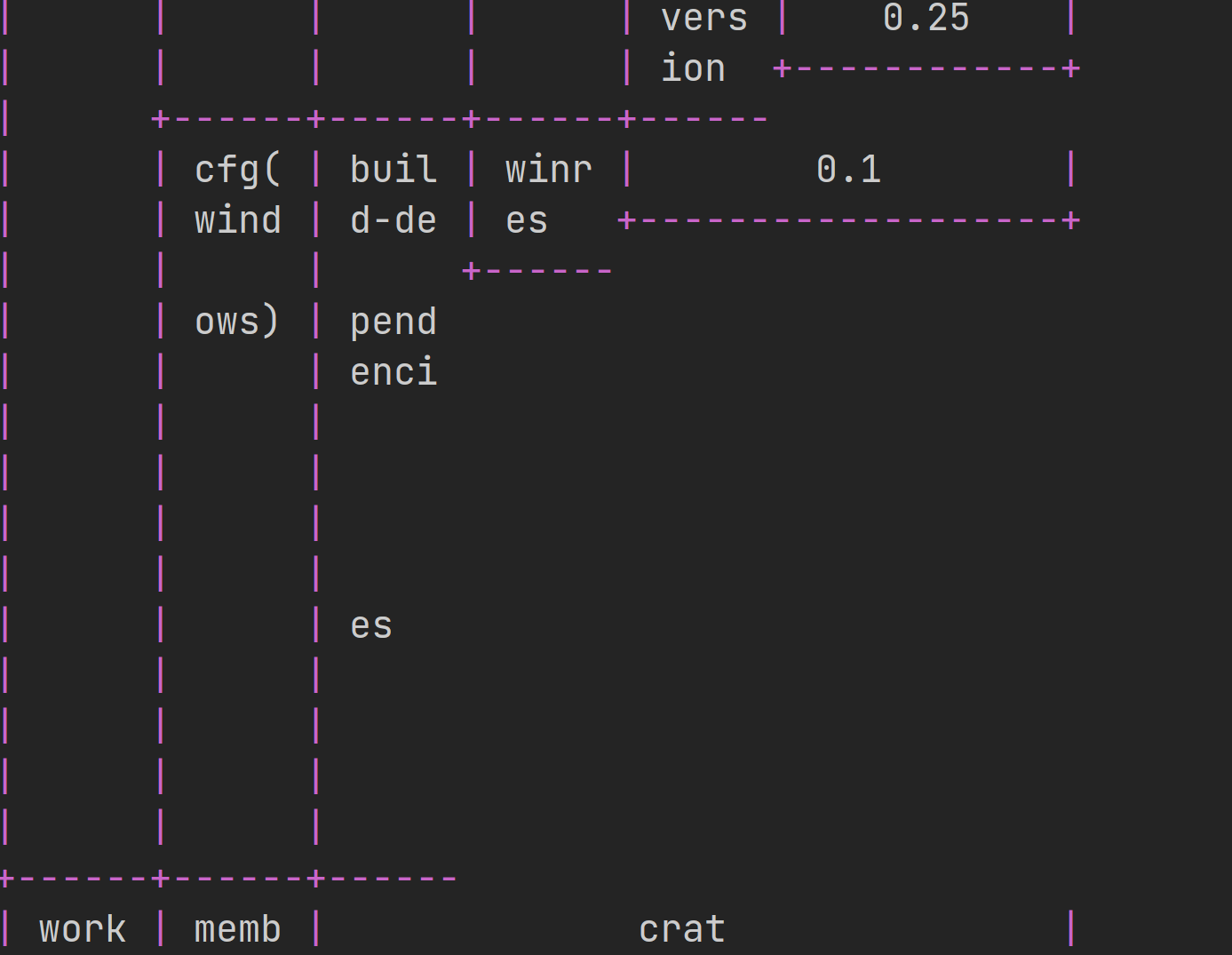

# Description

In its documentation, `input list` says it only accepts the following signatures:

- `list<any> -> list<any>`
- `list<string> -> string`

However, this is incorrect: the following is allowed and is even shown in the help page:

```nushell
[1 2 3] | input list # -> returns an `int`
```

This PR fixes the signature of `input list`:

- with no option or with `--fuzzy`, `input list` takes a `list<any>` and outputs a single `any`
- with `--multi`, `input list` takes a `list<any>` and outputs a `list<any>`

# User-Facing Changes

The input/output signature of `input list` is now:

```
╭───┬───────────┬───────────╮
│ # │ input     │ output    │
├───┼───────────┼───────────┤
│ 0 │ list<any> │ list<any> │
│ 1 │ list<any> │ any       │
╰───┴───────────┴───────────╯
```

# Tests + Formatting

This shouldn't change any behaviour, since `[1 2 3] | input list` already works.

# After Submitting

# Description

Building with the `dataframe` feature broke silently.

`nu` needs to enable the `dataframe` feature of `nu-cmd-dataframe`.

# User-Facing Changes

Building with `cargo build --features dataframe` works again.

# Tests + Formatting

It is still worth investigating whether we can harden the CI against this.

Context from Discord:

https://discord.com/channels/601130461678272522/615962413203718156/1138694933545504819

I was working on Nu for the first time in a while and noticed that rust-analyzer sometimes takes a really long time to run `cargo check` on the entire workspace. I dug in and found that it was checking a bunch of dataframe-related dependencies, even though the `dataframe` feature is not built by default.

It looks like this is a regression of sorts, introduced by https://github.com/nushell/nushell/pull/9241. Thankfully the fix is pretty easy: we can make it so that everything important in `nu-cmd-dataframe` is only used when the `dataframe` feature is enabled.
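The general pattern is sketched below with hypothetical module and function names. Code that pulls in heavy dependencies sits behind `#[cfg(feature = "dataframe")]`, so with the feature off it is not compiled at all. For `cargo check` to skip the heavy crates entirely, those dependencies must also be marked optional in the manifest, which this sketch does not show.

```rust
// Only compiled when the `dataframe` feature is enabled.
#[cfg(feature = "dataframe")]
mod dataframe_commands {
    pub fn names() -> Vec<&'static str> {
        // Illustrative only: in a real crate this module would use the heavy
        // dataframe dependencies.
        vec!["dfr open"]
    }
}

/// With the feature on, expose the gated commands.
#[cfg(feature = "dataframe")]
pub fn dataframe_command_names() -> Vec<&'static str> {
    dataframe_commands::names()
}

/// With the feature off, nothing from the gated module is referenced.
#[cfg(not(feature = "dataframe"))]
pub fn dataframe_command_names() -> Vec<&'static str> {
    Vec::new()
}
```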

### Impact on `cargo check --workspace`

- Before this PR: 635 crates, 33.59s
- After this PR: 498 crates, ~20s

(Measured with the `mold` linker and a `cargo clean` before each run. The relative difference for incremental checks will likely be much larger.)

# Description

This PR fixes the semver issues with `strip-ansi-escapes` by updating it to 0.2.0. It also updates to the latest reedline, which just landed an identical patch.

# User-Facing Changes


# Tests + Formatting


# After Submitting


Should close https://github.com/nushell/nushell/issues/9965

# Description

This PR implements the `todo!()` left in `lines`.

# User-Facing Changes

### before

```nushell
> open . | lines
thread 'main' panicked at 'not yet implemented', crates/nu-command/src/filters/lines.rs:248:35
note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
```

### after

```nushell
> open . | lines
Error: nu::shell::io_error
× I/O error
help: Is a directory (os error 21)
```
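Here is a minimal sketch of the change, not the actual `lines.rs` code. An I/O error coming from the input is returned as an error instead of reaching `todo!()` and panicking. The chunk-based input and the name `lines_from_chunks` are assumptions for illustration. For brevity, this version buffers the whole input instead of streaming it.

```rust
use std::io;

/// Join text chunks and split them into lines. Any I/O error in the input is
/// propagated to the caller instead of causing a panic (hypothetical helper).
fn lines_from_chunks<I>(chunks: I) -> Result<Vec<String>, io::Error>
where
    I: IntoIterator<Item = io::Result<String>>,
{
    let mut buffer = String::new();
    for chunk in chunks {
        // `?` hands the error back as a value; the old code path panicked here.
        buffer.push_str(&chunk?);
    }
    Ok(buffer.lines().map(str::to_owned).collect())
}
```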

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- ⚫ `toolkit test`

- ⚫ `toolkit test stdlib`

This PR adds the `lines_on_error` test to make sure this does not happen again 😌

# After Submitting

# Description

This PR updates `strip-ansi-escapes` and adapts Nushell to its new API. It also updates Nushell to the latest reedline, which received the same fix in https://github.com/nushell/reedline/pull/617.

Closes #9957

# User-Facing Changes


# Tests + Formatting


# After Submitting


Related to:

- https://github.com/nushell/nushell/pull/9935

# Description

This PR just adds a test to make sure that `nothing` type annotations in `def`s show up as `nothing` in the help pages of commands.

# User-Facing Changes

# Tests + Formatting

Adds a new test, `nothing_type_annotation`.

# After Submitting


# Description

This PR implements the workaround discussed in #9795, i.e. having `parse` collect an external stream before operating on it with a regex.

- Should close #9795

# User-Facing Changes


- `parse` will give the correct output for external streams
- increased memory and time overhead due to collecting the entire stream (no short-circuiting)
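The Rust sketch below shows why collecting helps. It uses plain substring search as a stand-in for the regex. When a pattern straddles a chunk boundary of an external stream, searching each chunk separately misses it, while searching the concatenated stream finds it. The function names are hypothetical. This is one plausible way the wrong output can arise, not a trace of #9795.

```rust
/// Count occurrences of `pattern` by searching each chunk on its own,
/// as a streaming implementation would.
fn count_per_chunk(chunks: &[&str], pattern: &str) -> usize {
    chunks.iter().map(|chunk| chunk.matches(pattern).count()).sum()
}

/// Count occurrences after collecting the whole stream into one string first.
/// This costs memory proportional to the stream, but chunk boundaries no longer matter.
fn count_collected(chunks: &[&str], pattern: &str) -> usize {
    let whole: String = chunks.concat();
    whole.matches(pattern).count()
}
```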

# Tests + Formatting


- formatting is checked

- clippy is happy

- no tests that weren't already broken fail

- added test case

# After Submitting



This PR should close #8036, #9028 (in the negative), and #9118.

The fix for #9118 is a bit pedantic. As reported, the issue is:

```
> 2023-05-07T04:08:45+12:00 - 2019-05-10T09:59:12+12:00
3yr 12month 2day 18hr 9min 33sec
```

with this PR, you now get:

```
> 2023-05-07T04:08:45+12:00 - 2019-05-10T09:59:12+12:00
208wk 1day 18hr 9min 33sec
```

This is strictly correct, but it could still fairly be called "weird date arithmetic".

# Description

* [x] Abide by the constraint that `Value::Duration` remains a number of nanoseconds with no additional fields.
* [x] `to_string()` only displays weeks .. nanoseconds. A duration doesn't have a base date from which to compute months or years.
* [x] `duration | into record` likewise only has fields for weeks .. nanoseconds.
* [x] `string | into duration` now accepts the compound form produced by duration `to_string()` (e.g. `'2day 3hr'`, not just `'2day'`).
* [x] `duration | into string` now works (and produces the same representation as `to_string()`, which may be compound).
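The weeks-to-nanoseconds decomposition and the compound round trip above can be sketched in Rust. This is not Nushell's implementation. The function names, the restriction to non-negative durations, the rendering of zero, and the error messages are all assumptions. The unit suffixes are the ones shown in the examples in this description.

```rust
/// Units a duration can be shown in without a reference date, largest first,
/// each paired with its length in nanoseconds.
const UNITS: [(&str, u64); 8] = [
    ("wk", 7 * 24 * 3_600 * 1_000_000_000),
    ("day", 24 * 3_600 * 1_000_000_000),
    ("hr", 3_600 * 1_000_000_000),
    ("min", 60 * 1_000_000_000),
    ("sec", 1_000_000_000),
    ("ms", 1_000_000),
    ("µs", 1_000),
    ("ns", 1),
];

/// Render a non-negative nanosecond count as a compound string like "2day 3hr".
fn format_duration(nanos: u64) -> String {
    let mut rest = nanos;
    let mut parts = Vec::new();
    for &(unit, size) in UNITS.iter() {
        let count = rest / size;
        if count > 0 {
            parts.push(format!("{count}{unit}"));
            rest %= size;
        }
    }
    if parts.is_empty() {
        // How a zero duration is rendered is an assumption of this sketch.
        return "0sec".to_string();
    }
    parts.join(" ")
}

/// Parse a compound string like "2day 3hr" back into nanoseconds.
fn parse_duration(text: &str) -> Result<u64, String> {
    let mut total: u64 = 0;
    for part in text.split_whitespace() {
        // Split "3hr" into the digits "3" and the unit "hr".
        let unit_start = part
            .find(|c: char| !c.is_ascii_digit())
            .ok_or_else(|| format!("missing unit in {part:?}"))?;
        let (digits, unit) = part.split_at(unit_start);
        let count: u64 = digits
            .parse()
            .map_err(|_| format!("missing number in {part:?}"))?;
        let size = UNITS
            .iter()
            .find(|entry| entry.0 == unit)
            .map(|&(_, size)| size)
            .ok_or_else(|| format!("unknown unit {unit:?}"))?;
        total = count
            .checked_mul(size)
            .and_then(|n| total.checked_add(n))
            .ok_or_else(|| "duration overflows u64".to_string())?;
    }
    Ok(total)
}
```

Weeks are the largest unit with a fixed length, so the sketch never needs a reference date. That is the constraint the checklist describes.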

# User-Facing Changes

## duration -> string -> duration

Now you can "round trip" an arbitrary duration value: convert it to a string that may include multiple time units (a "compound" value), then convert that string back into a duration. This required changes to `string | into duration` and the addition of `duration | into string`.

```
> 2day + 3hr
2day 3hr # the "to_string()" representation (in this case, a compound value)
> 2day + 3hr | into string
2day 3hr # string value
> 2day + 3hr | into string | into duration
2day 3hr # round-trip duration -> string -> duration
```

Note that `to nuon` and `from nuon` already round-tripped durations, but they use a different string representation.

## potentially breaking changes

* The string rendering of a duration no longer has 'yr' or 'month' units.
* The record from `duration | into record` no longer has 'year' or 'month' fields.

All of the excess duration goes into the `week` field. Weeks are the largest time unit you can convert to without knowing the datetime from which the duration was calculated.

Scripts that depended on month or year time units in the output will need to be changed.

### Examples

```
> 365day
52wk 1day
## Used to be:
## 1yr

> 365day | into record
╭──────┬────╮
│ week │ 52 │
│ day  │ 1  │
│ sign │ +  │
╰──────┴────╯
## used to be:
##╭──────┬───╮
##│ year │ 1 │
##│ sign │ + │
##╰──────┴───╯

> (365day + 4wk + 5day + 6hr + 7min + 8sec + 9ms + 10us + 11ns)
56wk 6day 6hr 7min 8sec 9ms 10µs 11ns
## used to be:
## 1yr 1month 3day 6hr 7min 8sec 9ms 10µs 11ns
## which looks reasonable, but was actually only correct in 75% of the years and 25% of the months in the last 4 years.

> (365day + 4wk + 5day + 6hr + 7min + 8sec + 9ms + 10us + 11ns) | into record
╭─────────────┬────╮
│ week        │ 56 │
│ day         │ 6  │
│ hour        │ 6  │
│ minute      │ 7  │
│ second      │ 8  │
│ millisecond │ 9  │
│ microsecond │ 10 │
│ nanosecond  │ 11 │
│ sign        │ +  │
╰─────────────┴────╯
```

Strictly speaking, these changes could break an existing user script. Losing years and months as time units is arguably a regression in behavior.

Also, the corrected duration calculation could break an existing script that was calibrated using the old algorithm.

# Tests + Formatting

```
> toolkit check pr
```

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting


---------

Co-authored-by: Bob Hyman <bobhy@localhost.localdomain>

# Description

This PR brings back auto-expanding tables based on the terminal width: if the terminal is more than 100 columns wide, tables are expanded.

This sets the default config value in both the Rust code and the default Nushell config.

To do so, it also restores the ability for hooks to be strings of code, not just code blocks.

It also fixes a couple of tests. Two of them assumed that the built-in display hook didn't use `table -e`, and one assumed that a hook couldn't be a string.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description


# User-Facing Changes


# Tests + Formatting


# After Submitting


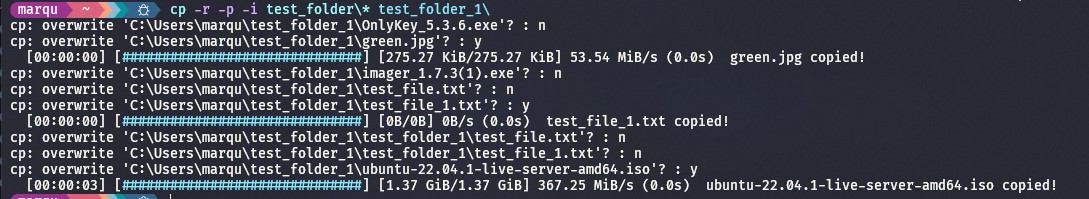

# Description

Signatures in `help commands` will now have more structure for params and input/output pairs.

Example:

Improved params

Improved input/output pairs

# User-Facing Changes

This is technically a breaking change if existing code assumed the shape of the `help commands` output.

# Tests + Formatting


# After Submitting


# Description

This PR changes `Value::columns` to return a slice of the columns instead of a clone of them. If the caller needs an owned copy, they can use `slice::to_vec` or the like. This eliminates unnecessary `Vec` clones (e.g., in `update cells`).
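Here is a minimal before/after sketch of the API change. It uses a hypothetical, simplified `Record` type rather than the real `nu_protocol::Value`. Returning `&[String]` lends out the existing column names, while the old style allocated a new `Vec` and cloned every name on each call.

```rust
/// Hypothetical, simplified record: parallel vectors of column names and values.
struct Record {
    cols: Vec<String>,
    vals: Vec<i64>,
}

impl Record {
    /// Old style: allocates a new Vec and clones every column name on each call.
    fn columns_cloned(&self) -> Vec<String> {
        self.cols.clone()
    }

    /// New style: lends out a slice; callers that need ownership call `.to_vec()`.
    fn columns(&self) -> &[String] {
        &self.cols
    }

    /// Look up a value by column name without copying any column names.
    fn get(&self, col: &str) -> Option<i64> {
        self.columns()
            .iter()
            .position(|c| c == col)
            .map(|i| self.vals[i])
    }
}
```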

# User-Facing Changes

Breaking change for the `nu_protocol` API.

# Description

`version` has always been a bit off regarding the `commit_hash` 😕

I think it was @fdncred who found this trick. `touch`ing the `crates/nu-cmd-lang/build.rs` file:

- won't change the Git index
- will force Nushell to recompile the `version` information correctly

This PR adds a call to `touch` on that file to `toolkit install`.

# User-Facing Changes

`version` should be correct when installing locally with the `toolkit`.

# Tests + Formatting

# After Submitting

* histogram to chart

* version to core

This completes moving commands out of the *Default* category...

When you run

```bash
nu -n --no-std-lib
```

and then

```nushell
help commands | where category == "default"
```

you now get an *Empty List* 😄

The following *Filters* commands were incorrectly in the category *Default*:

* group-by

* join

* reduce

* split-by

* transpose

This continues the effort of moving commands out of *Default* and into their proper categories...


# Description


# User-Facing Changes


# Tests + Formatting


# After Submitting


@jntrnr and I discussed the fact that we can now *graduate* nuon to be a first-class citizen...

This PR moves

* from nuon

* to nuon

out of the *experimental* stage and into *formats*

# Description

Add a few tests to ensure that you can add subcommands to scripts. We've supported this for a long time, though I'm not sure anyone has actually tried it. Since we weren't testing it, this PR adds a few tests to make sure it keeps working.

Example script subcommand:

```nushell
def "main addten" [x: int] {
    print ($x + 10)
}
```

Then call it with:

```
> nu ./script.nu addten 5
```

# User-Facing Changes


# Tests + Formatting


# After Submitting


While reviewing all of the remaining commands to find anything else that could be moved to *nu-cmd-extra*, I noticed that some commands have still not been categorized...

I am going to *Categorize* the remaining commands that still *do not have Category homes*.

In PR land I will call this *Categorification*, as a play on *Cratification*.

* str substring

* str trim

* str upcase

were in the *default* category because, for some reason, they had not yet been categorized.

I went ahead and moved them to

```rust
.category(Category::Strings)
```

I am moving the following `str` case commands to nu-cmd-extra (as discussed in the core team meeting the other day):

* camel-case

* kebab-case

* pascal-case

* screaming-snake-case

* snake-case

* title-case

# Description

This PR adds a keybinding in the Rust code that binds `search-history` (aka reverse search) to `ctrl+q`. Currently it is bound to `ctrl+r`, where it overwrites `history-search`.

This PR supersedes #9862. Thanks to @SUPERCILEX for bringing this to our attention.

# User-Facing Changes


# Tests + Formatting


# After Submitting



# Description


Currently `parse` acts like a `.filter` over an iterator, except that it emits `None` for elements that can't be parsed. This causes consumers of the adapted iterator to stop iterating too early. The correct behaviour is to keep pulling from the inner iterator until either its end is reached or an element can be parsed.
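The difference can be sketched with a std-only iterator adapter. This is an illustration, not Nushell's actual `parse` code. `parse_kv` is a hypothetical per-item parser that accepts `key=value` lines, and `ParseIter` is a hypothetical adapter. The buggy shape behaves like `Iterator::map_while`, which stops at the first `None`. The fixed shape loops inside `next` until a parse succeeds or the inner iterator is exhausted.

```rust
/// Hypothetical per-item parser: accepts `key=value`, rejects anything else.
fn parse_kv(s: &str) -> Option<(String, String)> {
    s.split_once('=').map(|(k, v)| (k.to_string(), v.to_string()))
}

/// Hypothetical adapter that yields only the items `parse_one` accepts.
struct ParseIter<I, F> {
    inner: I,
    parse_one: F,
}

impl<I, F, T> Iterator for ParseIter<I, F>
where
    I: Iterator,
    F: FnMut(I::Item) -> Option<T>,
{
    type Item = T;

    fn next(&mut self) -> Option<T> {
        // Returning `None` for an unparseable item would tell the consumer
        // the stream has ended, so keep pulling instead. The `?` returns
        // `None` only when the inner iterator itself is exhausted.
        loop {
            let item = self.inner.next()?;
            if let Some(parsed) = (self.parse_one)(item) {
                return Some(parsed);
            }
        }
    }
}

fn main() {
    let lines = ["a=1", "garbage", "b=2"];

    // Buggy shape: the stream is truncated at the first parse failure.
    let truncated: Vec<_> = lines.iter().copied().map_while(parse_kv).collect();

    // Fixed shape: unparseable items are skipped and iteration continues.
    let all: Vec<_> = ParseIter { inner: lines.iter().copied(), parse_one: parse_kv }.collect();

    println!("truncated: {truncated:?}");
    println!("all: {all:?}");
}
```

The loop body is equivalent to `self.inner.by_ref().find_map(&mut self.parse_one)`. The explicit loop just makes the "keep pulling" step visible.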

- this PR should close #9906

# User-Facing Changes

List streams won't be truncated anymore after the first parse failure.

# Tests + Formatting

- [x] `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- [x] `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect -A clippy::result_large_err` to check that you're using the standard code style
- [x] `cargo test --workspace` to check that all tests pass
  - 11 tests fail, but the same 11 tests fail on main as well
- [x] `cargo run -- -c "use std testing; testing run-tests --path crates/nu-std"` to run the tests for the standard library

# After Submitting

# Description

Currently, foreground process management is disabled on macOS, since the original code had issues (see #7068).

This PR re-enables process management on macOS, in combination with the changes from #9693.

# User-Facing Changes

Fixes a hang on exit for nested nushells on macOS (issue #9859). Nushell should now manage processes on macOS in the same way as on other Unix systems.

# Description

This PR adds the `header_on_separator` table option, set to `false`, to the `default_config.nu` file.

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description

This PR changes the signature of the deprecated command `let-env` so that invoking it without parameters no longer produces a misleading error.

### Before

```nushell
> let-env
Error: nu::parser::missing_positional

  × Missing required positional argument.
   ╭─[entry #2:1:1]
 1 │ let-env
   ╰────
  help: Usage: let-env <var_name> = <initial_value>
```

### After

```nushell
❯ let-env
Error: nu::shell::deprecated_command

  × Deprecated command let-env
   ╭─[entry #1:1:1]
 1 │ let-env
   · ───┬───
   ·    ╰── 'let-env' is deprecated. Please use '$env.<environment variable> = ...' instead.
   ╰────
```

# User-Facing Changes

# Tests + Formatting

# After Submitting

fix #9796

Sorry that you've run into these issues.

I actually encountered them yesterday too (it seems they appeared after some refactoring partway through) but wasn't able to fix them that quickly.

Added a bunch of tests.

cc: @fdncred

Note: this option will certainly be slower than the default ones (this could be fixed, but maybe later). It may be worth mentioning this somewhere.

PS: I haven't tested it on wrapped/expanded tables.

---------

Signed-off-by: Maxim Zhiburt <zhiburt@gmail.com>

Co-authored-by: Darren Schroeder <343840+fdncred@users.noreply.github.com>

related to

- https://github.com/nushell/nushell/pull/9907

# Description

https://github.com/nushell/nushell/pull/9907 removed the leading space from all `PROMPT_INDICATOR`s, but this does not match Nushell's default behaviour, i.e. in `nu --no-config-file`.

This PR

- removes the space that Nushell prepends before the prompt indicator, to match `default_env.nu`

- swaps INSERT and NORMAL in the Rust code to match the `:` and `>`