Fixes nushell/nushell#8723

# Description

The example showed a flag that no longer exists.

# User-Facing Changes

Help no longer shows the example with the `-d` flag.

# Tests + Formatting

I trust in CI.

# After Submitting

Nothing.

Fixes nushell/nushell#7995

# Description

This dependency is no longer used by nushell itself.

# User-Facing Changes

None.

# Tests + Formatting

Passed.

# After Submitting

None.

# Description

Prefer process name over executable path. This in practice causes the

`name` column to use just the base executable name.

Also set start_time to nothing on error, because why not.

# User-Facing Changes

Before:

> /opt/google/chrome/chrome

After:

> chrome

Also picks up changes due to `echo test-proc > /proc/$$/comm`.

# Tests + Formatting

No new coverage.

# Description

Fixes issue [12828](https://github.com/nushell/nushell/issues/12828).

When attempting a `polars collect` on an eager dataframe, we return

the dataframe as is. However, before this fix I failed to increment the

internal cache reference count. This caused the value to be dropped from

the internal cache when the references were decremented again.

This fix adds a call to `cache.get` to increment the reference count before

returning.
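
To illustrate why the missing increment mattered, here is a minimal sketch of a reference-counted cache. The `Cache` type, its `insert`/`get`/`release` methods, and `collect_eager` are hypothetical stand-ins, not the plugin's actual types; the only part taken from the description is that `get` bumps the count.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for the internal cache: each entry carries an
/// explicit reference count next to the stored value.
struct Cache {
    entries: HashMap<u64, (String, usize)>,
}

impl Cache {
    fn new() -> Self {
        Cache { entries: HashMap::new() }
    }

    /// Insert a value with an initial reference count of 1.
    fn insert(&mut self, key: u64, value: &str) {
        self.entries.insert(key, (value.to_string(), 1));
    }

    /// Look up a value and increment its reference count.
    fn get(&mut self, key: u64) -> Option<String> {
        let entry = self.entries.get_mut(&key)?;
        entry.1 += 1;
        Some(entry.0.clone())
    }

    /// Decrement the reference count and drop the entry once it reaches zero.
    fn release(&mut self, key: u64) {
        let drop_entry = match self.entries.get_mut(&key) {
            Some(entry) => {
                entry.1 = entry.1.saturating_sub(1);
                entry.1 == 0
            }
            None => false,
        };
        if drop_entry {
            self.entries.remove(&key);
        }
    }

    fn contains(&self, key: u64) -> bool {
        self.entries.contains_key(&key)
    }
}

/// Returns the eager value as is, but goes through `get` so that the count
/// reflects the extra handle being handed out.
fn collect_eager(cache: &mut Cache, key: u64) -> Option<String> {
    cache.get(key)
}
```

In this sketch, releasing the handle returned by `collect_eager` leaves the original entry in place. Without the `get` call, the same `release` would take the count to zero and drop the value.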

# Description

Previously when nushell failed to parse the content type header, it

would emit an error instead of returning the response. Now it will fall

back to `text/plain` (which, in turn, will trigger type detection based

on file extension).
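
The fallback described above might look roughly like the following sketch. `parse_mime` is a deliberately small, hand-rolled stand-in rather than the parser nushell uses, and `content_type_or_default` is a hypothetical name; only the fallback to `text/plain` comes from the description.

```rust
/// Very small stand-in for a MIME parser: accepts `type/subtype` where both
/// halves are non-empty and consist of token-like characters. Parameters
/// after `;` are ignored.
fn parse_mime(raw: &str) -> Option<(String, String)> {
    let essence = raw.split(';').next()?.trim();
    let (ty, subty) = essence.split_once('/')?;
    let is_token = |s: &str| {
        !s.is_empty()
            && s.chars()
                .all(|c| c.is_ascii_alphanumeric() || "!#$&-^_.+".contains(c))
    };
    if is_token(ty) && is_token(subty) {
        Some((ty.to_ascii_lowercase(), subty.to_ascii_lowercase()))
    } else {
        None
    }
}

/// Instead of surfacing a parse error, fall back to `text/plain`.
fn content_type_or_default(header: &str) -> (String, String) {
    parse_mime(header).unwrap_or_else(|| ("text".to_string(), "plain".to_string()))
}
```

With this shape, a header such as `"text/html"` (including the literal quotes) no longer aborts the request; it is simply treated as `text/plain`.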

May fix nushell/nushell#11927

Refs:

https://discord.com/channels/601130461678272522/614593951969574961/1272895236489613366

Supersedes: #13609

# User-Facing Changes

It's now possible to fetch content even if the server returns an invalid

content type header. Users may need to parse the response manually, but

it's still better than not getting the response at all.

# Tests + Formatting

Added a test for the new behaviour.

# After Submitting

Bumps [indexmap](https://github.com/indexmap-rs/indexmap) from 2.3.0 to

2.4.0.

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a

href="https://github.com/indexmap-rs/indexmap/blob/master/RELEASES.md">indexmap's

changelog</a>.</em></p>

<blockquote>

<h2>2.4.0</h2>

<ul>

<li>Added methods <code>IndexMap::append</code> and

<code>IndexSet::append</code>, moving all items from

one map or set into another, and leaving the original capacity for

reuse.</li>

</ul>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="b66bbfe282"><code>b66bbfe</code></a>

Merge pull request <a

href="https://redirect.github.com/indexmap-rs/indexmap/issues/337">#337</a>

from cuviper/append</li>

<li><a

href="a288bf3b3a"><code>a288bf3</code></a>

Add a README note for <code>ordermap</code></li>

<li><a

href="0b2b4b9a78"><code>0b2b4b9</code></a>

Release 2.4.0</li>

<li><a

href="8c0a1cd4be"><code>8c0a1cd</code></a>

Add <code>append</code> methods</li>

<li>See full diff in <a

href="https://github.com/indexmap-rs/indexmap/compare/2.3.0...2.4.0">compare

view</a></li>

</ul>

</details>

<br />

Dependabot will resolve any conflicts with this PR as long as you don't

alter it yourself. You can also trigger a rebase manually by commenting

`@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits

that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after

your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge

and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating

it. You can achieve the same result by closing it manually

- `@dependabot show <dependency name> ignore conditions` will show all

of the ignore conditions of the specified dependency

- `@dependabot ignore this major version` will close this PR and stop

Dependabot creating any more for this major version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop

Dependabot creating any more for this minor version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop

Dependabot creating any more for this dependency (unless you reopen the

PR or upgrade to it yourself)

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Bumps [shadow-rs](https://github.com/baoyachi/shadow-rs) from 0.30.0 to

0.31.1.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a

href="https://github.com/baoyachi/shadow-rs/releases">shadow-rs's

releases</a>.</em></p>

<blockquote>

<h2>[Improvement] Correct git command directory</h2>

<p>ref: <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/170">#170</a></p>

<p>Thx <a

href="https://github.com/MichaelScofield"><code>@MichaelScofield</code></a></p>

<h2>Make build_with function public</h2>

<p>ref:<a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/169">#169</a></p>

<p>Thx <a

href="https://github.com/MichaelScofield"><code>@MichaelScofield</code></a></p>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="aa804ec8a2"><code>aa804ec</code></a>

Update Cargo.toml</li>

<li><a

href="b3fbe36403"><code>b3fbe36</code></a>

Merge pull request <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/170">#170</a>

from MichaelScofield/find-right-branch</li>

<li><a

href="fe6f940f8b"><code>fe6f940</code></a>

execute "git" command in the right path</li>

<li><a

href="458be25e74"><code>458be25</code></a>

Merge pull request <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/169">#169</a>

from MichaelScofield/flexible-for-submodule</li>

<li><a

href="1521a288b4"><code>1521a28</code></a>

Expose the "build" function to let projects with submodules

control where to ...</li>

<li><a

href="ee12741fa0"><code>ee12741</code></a>

Merge pull request <a

href="https://redirect.github.com/baoyachi/shadow-rs/issues/168">#168</a>

from baoyachi/issue/149</li>

<li><a

href="dfb8b24adb"><code>dfb8b24</code></a>

cargo fmt</li>

<li><a

href="a3be8680aa"><code>a3be868</code></a>

fix clippy</li>

<li><a

href="c8e7cd5704"><code>c8e7cd5</code></a>

Fix compilation failures caused by unwrap</li>

<li>See full diff in <a

href="https://github.com/baoyachi/shadow-rs/compare/v0.30.0...v0.31.1">compare

view</a></li>

</ul>

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Bumps [sysinfo](https://github.com/GuillaumeGomez/sysinfo) from 0.30.11

to 0.30.13.

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a

href="https://github.com/GuillaumeGomez/sysinfo/blob/master/CHANGELOG.md">sysinfo's

changelog</a>.</em></p>

<blockquote>

<h1>0.30.13</h1>

<ul>

<li>macOS: Fix segfault when calling

<code>Components::refresh_list</code> multiple times.</li>

<li>Windows: Fix CPU arch retrieval.</li>

</ul>

<h1>0.30.12</h1>

<ul>

<li>FreeBSD: Fix network interfaces retrieval (one was always

missing).</li>

</ul>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="1639d74765"><code>1639d74</code></a>

Merge pull request <a

href="https://redirect.github.com/GuillaumeGomez/sysinfo/issues/1306">#1306</a>

from GuillaumeGomez/update</li>

<li><a

href="4bcded7fa7"><code>4bcded7</code></a>

Update crate version to 0.30.13</li>

<li><a

href="9feeff00a3"><code>9feeff0</code></a>

Add CHANGELOG for 0.30.13</li>

<li><a

href="3e7c8f23d2"><code>3e7c8f2</code></a>

Merge pull request <a

href="https://redirect.github.com/GuillaumeGomez/sysinfo/issues/1305">#1305</a>

from GuillaumeGomez/backport</li>

<li><a

href="5ec06633a0"><code>5ec0663</code></a>

Fix new clippy lints</li>

<li><a

href="53d066ebf1"><code>53d066e</code></a>

Fix fmt</li>

<li><a

href="6a6838bc81"><code>6a6838b</code></a>

Fix minor typo in a doc comment</li>

<li><a

href="0662e60ac4"><code>0662e60</code></a>

Fix CPU arch retrieval on Windows (<a

href="https://redirect.github.com/GuillaumeGomez/sysinfo/issues/1296">#1296</a>)</li>

<li><a

href="57ea114d10"><code>57ea114</code></a>

Fix segfault when call calling Components::refresh_list multiple times

on M-...</li>

<li><a

href="57d6b32300"><code>57d6b32</code></a>

Merge pull request <a

href="https://redirect.github.com/GuillaumeGomez/sysinfo/issues/1271">#1271</a>

from GuillaumeGomez/update</li>

<li>Additional commits viewable in <a

href="https://github.com/GuillaumeGomez/sysinfo/compare/v0.30.11...v0.30.13">compare

view</a></li>

</ul>

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

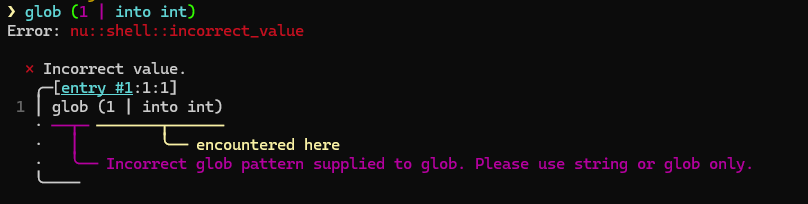

# Description

@sholderbach pointed out that I could've made this error message better.

So, here's my attempt to make it better.

This should work. I had a hard time figuring out how to trigger the

error anyway because the type checker doesn't allow "bad" parameters to

begin with.

### Before

### After

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This

helps us keep track of breaking changes. -->

# Tests + Formatting

<!--

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo

fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to

check that you're using the standard code style

- `cargo test --workspace` to check that all tests pass (on Windows make

sure to [enable developer

mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- `cargo run -- -c "use toolkit.nu; toolkit test stdlib"` to run the

tests for the standard library

> **Note**

> from `nushell` you can also use the `toolkit` as follows

> ```bash

> use toolkit.nu # or use an `env_change` hook to activate it

automatically

> toolkit check pr

> ```

-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the

documentation](https://github.com/nushell/nushell.github.io) after the

PR is merged, if necessary. This will help us keep the docs up to date.

-->

# Description

This PR changes glob to take either a string or a glob as a parameter.

Closes #13611

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description

This PR is meant to provide a more helpful error message when using http

get and the content type can't be parsed.

### Before

### After

The span isn't perfect but there's no way to get the span of the content

type that I can see.

In the middle of fixing this error, I also discovered how to fix the

problem in general. The error message now complains about double quotes

(char 22 at position 0; 0x22 is `"`), so the fix is just to remove all

the double quotes from the content_type, after which you get this.

### After After

The discussion on Discord about this is that `--raw` or

`--ignore-errors` should eat this error and it "just work" as well as

default to text or binary when the mime parsing fails. I agree but this

PR does not implement that.
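
The quote-stripping fix mentioned above can be sketched in a few lines. `strip_double_quotes` is a hypothetical helper name, and this is only an illustration of the idea, not the code in the PR.

```rust
/// Remove every double quote (byte 0x22) from a raw Content-Type value
/// before handing it to the MIME parser.
fn strip_double_quotes(content_type: &str) -> String {
    content_type.replace('"', "")
}
```

One consequence of this simple approach is that quotes around parameter values are removed as well, so `text/html; charset="utf-8"` becomes `text/html; charset=utf-8`.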

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description

Fixes: #13479

# User-Facing Changes

Given the following setup:

```

cd /tmp

touch src_file.txt

ln -s src_file.txt link1

```

### Before

```

ls -lf link1 | get target.0 # It outputs src_file.txt

```

### After

```

ls -lf link1 | get target.0 # It outputs /tmp/src_file.txt

```
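
The before/after difference amounts to resolving a relative link target against the directory that contains the link. A minimal sketch, assuming a hypothetical helper `absolute_target` (not the function used in the PR) and Unix-style paths:

```rust
use std::path::{Path, PathBuf};

/// Resolve a symlink's raw target against the directory containing the link.
/// Absolute targets are returned unchanged.
fn absolute_target(link: &Path, raw_target: &Path) -> PathBuf {
    if raw_target.is_absolute() {
        return raw_target.to_path_buf();
    }
    match link.parent() {
        Some(dir) => dir.join(raw_target),
        None => raw_target.to_path_buf(),
    }
}
```

This sketch does not normalize `..` components or follow chains of links; it only shows where the `/tmp/` prefix in the new output comes from.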

# Tests + Formatting

Added a test for the change

# Description

The original code assumed a full single-string command line could be

split by space to get the original argv.

# User-Facing Changes

Fixes an issue where `ps` would display an incomplete process name if it

contained space(s).
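
To show why splitting on spaces cannot recover the original argv, here is a small sketch. It assumes, for illustration only, that the raw command line is NUL-separated as in Linux's `/proc/<pid>/cmdline`; both function names are hypothetical and the PR's actual fix may differ.

```rust
/// Naive approach: once arguments are joined into one string, splitting on
/// spaces also splits any argument that itself contains a space.
fn split_by_space(cmdline: &str) -> Vec<String> {
    cmdline.split(' ').map(String::from).collect()
}

/// Split a NUL-separated command line, which preserves argument boundaries.
fn split_by_nul(raw: &[u8]) -> Vec<String> {
    raw.split(|b| *b == 0)
        .filter(|arg| !arg.is_empty())
        .map(|arg| String::from_utf8_lossy(arg).into_owned())
        .collect()
}
```

For a program named `my prog`, the space-based split yields `my` as the first element, which matches the truncated name described above.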

# Tests + Formatting

Fixes existing code, no new coverage. The existing code doesn't seem to be

covered. We could add coverage, but it would be somewhat involved and the fix was

simple, so I didn't bother.

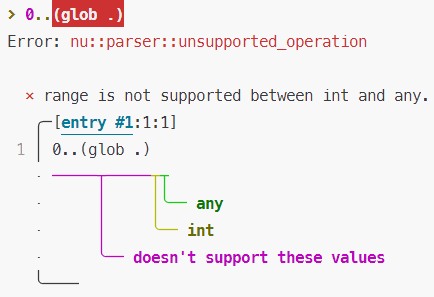

# Description

As part of fixing https://github.com/nushell/nushell/issues/13586, this

PR checks the types of the operands when creating a range. Stuff like

`0..(glob .)` will be rejected at parse time. Additionally, `0..$x` will

be treated as a range and rejected if `x` is not defined, rather than

being treated as a string. A separate PR will need to be made to

reject streams at runtime, so that stuff like `0..(open /dev/random)`

doesn't hang.

Internally, this PR adds a `ParseError::UnsupportedOperationTernary`

variant, for when you have a range like `1..2..(glob .)`.

# User-Facing Changes

Users will now receive an error if any of the operands in the ranges

they construct have types that aren't compatible with `Type::Number`.

Additionally, if a piece of code looks like a range but some parse error

is encountered while parsing it, that piece of code will still be

treated as a range and the user will be shown the parse error. This

means that a piece of code like `0..$x` will be treated as a range no

matter what. Previously, if `x` weren't defined, the expression would've been

treated as a string `"0..$x"`. I feel like it makes the language less

complicated if we make it less context-sensitive.

Here's an example of the error you get:

```

> 0..(glob .)

Error: nu::parser::unsupported_operation

× range is not supported between int and any.

╭─[entry #1:1:1]

1 │ 0..(glob .)

· ─────┬─────┬┬

· │ │╰── any

· │ ╰── int

· ╰── doesn't support these values

╰────

```

And as an image:

Note: I made the operands themselves (above, `(glob .)`) be garbage,

rather than the `..` operator itself. This doesn't match the behavior of

the math operators (if you do `1 + "foo"`, `+` gets highlighted red).

This is because with ranges, the range operators aren't `Expression`s

themselves, so they can't be turned into garbage. I felt like here, it

makes more sense to highlight the individual operand anyway.

# Description

Fixes: #13253

The issue occurs because nushell uses `parse_value` to parse named args, but

`parse_value` doesn't parse the `OneOf` syntax shape.

# User-Facing Changes

`OneOf` in named args should work again.

# Tests + Formatting

I think it's hard to add a test, because nushell doesn't support `oneof`

syntax in custom commands yet.

# After Submitting

NaN

# Description

- This pull request addresses issue #13594 where any substring of the

path that matches the home directory is replaced with `~` in the title

bar. This was problematic because partial matches within the path were

also being replaced.
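
The difference between substring replacement and prefix matching can be sketched as follows. `abbreviate_home` is a hypothetical helper, the `/` separator assumes a Unix-style path, and this is not necessarily how the PR implements the fix.

```rust
use std::path::Path;

/// Replace the home directory with `~` only when it is a leading prefix of
/// the path, compared component by component.
fn abbreviate_home(path: &Path, home: &Path) -> String {
    match path.strip_prefix(home) {
        Ok(rest) if rest.as_os_str().is_empty() => "~".to_string(),
        Ok(rest) => format!("~/{}", rest.display()),
        Err(_) => path.display().to_string(),
    }
}
```

Because `Path::strip_prefix` works on whole components, a path like `/mnt/home/user/projects` is left untouched when the home directory is `/home/user`, whereas a plain string replace would have rewritten it.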

---------

Signed-off-by: Aakash788 <aakashparmar788@gmail.com>

Co-authored-by: sholderbach <sholderbach@users.noreply.github.com>

# Description

Fixes #13587

# User-Facing Changes

Files without an extension, and files with an extension other than `.nu`, will not be loaded as

vendor/configuration files.
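
A filter of this kind can be expressed as a one-line extension check. `is_nu_script` is a hypothetical name for illustration; whether the real check is case-sensitive is not stated in the description, so this sketch simply compares against `nu` exactly.

```rust
use std::path::Path;

/// True only for paths whose final extension is exactly `nu`.
fn is_nu_script(path: &Path) -> bool {
    path.extension().map_or(false, |ext| ext == "nu")
}
```

Files such as `README` (no extension) or `foo.nu.bak` (a different final extension) are skipped by this check.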

# Tests + Formatting

So far we don't have any tests for that.

# Description

Fixes Issue #13477

This adds a check to see if a user is trying to invoke a

(non-executable) file as a command and returns a helpful error if so.

EDIT: this will not work on Windows, and is arguably not relevant there,

because of the different semantics of executables. I think the

equivalent on Windows would be if a user tries to invoke `./foo`, we

should look for `foo.exe` or `foo.bat` in the directory and recommend

that if it exists.

# User-Facing Changes

When a user invokes an unrecognized command that is the path to an

existing file, the error used to say:

`{name} is neither a Nushell built-in or a known external command`

This PR proposes to change the message to:

`{name} refers to a file that is not executable. Did you forget to

set execute permissions?`
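
The decision between the two messages can be sketched as a pure function over a Unix permission mode. `has_execute_bit` and `describe_failure` are hypothetical names, and the sketch takes the mode as a plain `u32` instead of reading file metadata, so it illustrates the logic only.

```rust
/// True if any of the owner/group/other execute bits is set in a Unix mode.
fn has_execute_bit(mode: u32) -> bool {
    (mode & 0o111) != 0
}

/// Pick the error message for a command name that could not be run.
fn describe_failure(name: &str, file_exists: bool, mode: u32) -> String {
    if file_exists && !has_execute_bit(mode) {
        format!(
            "{} refers to a file that is not executable. Did you forget to set execute permissions?",
            name
        )
    } else {
        format!(
            "{} is neither a Nushell built-in or a known external command",
            name
        )
    }
}
```

For example, a file with mode `0o644` triggers the new message, while a name that does not refer to an existing file keeps the old one.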

# Tests + Formatting

Ran cargo fmt, clippy and test on the workspace.

EDIT: added test asserting the new behavior

# Description

Something I meant to add a long time ago. We currently don't have a

convenient way to print raw binary data intentionally. You can pipe it

through `cat` to turn it into an unknown stream, or write it to a file

and read it again, but we can't really just e.g. generate msgpack and

write it to stdout without this. For example:

```nushell

[abc def] | to msgpack | print --raw

```

This is useful for nushell scripts that will be piped into something

else. It also means that `nu_plugin_nu_example` probably doesn't need to

do this anymore, but I haven't adjusted it yet:

```nushell

def tell_nushell_encoding [] {

print -n "\u{0004}json"

}

```

This happens to work because 0x04 is a valid UTF-8 character, but it

wouldn't be possible if it were something above 0x80.
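
The UTF-8 point can be checked directly with the standard library. `is_valid_utf8` is a small illustrative helper, not part of nushell.

```rust
/// True if the byte slice is well-formed UTF-8.
fn is_valid_utf8(bytes: &[u8]) -> bool {
    std::str::from_utf8(bytes).is_ok()
}
```

A lone `0x04` byte passes this check, while a lone `0x80` or `0xff` byte does not, which is why arbitrary binary output needs a byte-oriented path rather than a string.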

`--raw` also formats other things without `table`, I figured the two

things kind of go together. The output is kind of like `to text`.

Debatable whether that should share the same flag, but it was easier

that way and seemed reasonable.

# User-Facing Changes

- `print` new flag: `--raw`

# Tests + Formatting

Added tests.

# After Submitting

- [ ] release notes (command modified)

# Description

As per our Wednesday meeting, this adds a parse error when something

that would be parsed as an external call is present at the top level,

unless the head of the external call begins with a caret (to make it

explicit).

I tried to make the error quite descriptive about what should be done.

# User-Facing Changes

These now cause a parse error:

```nushell

$foo = bar

$foo = `bar`

```

These would have been interpreted as strings before this version, but

now they'd be interpreted as external calls. This behavior is consistent

with `let`/`mut` (which is unaffected by this change).

Here is an example of the error:

```

Error: × External command calls must be explicit in assignments

╭─[entry #3:1:8]

1 │ $foo = bar

· ─┬─

· ╰── add a caret (^) before the command name if you intended to run and capture its output

╰────

help: the parsing of assignments was changed in 0.97.0, and this would have previously been treated as a string.

Alternatively, quote the string with single or double quotes to avoid it being interpreted as a command name. This

restriction may be removed in a future release.

```

# Tests + Formatting

Tests added to cover the change. Note made about it being temporary.

# Description

Cleanups:

- Add "key_press" to event reading function names to match reality

- Move the relevant comment about why only key press events are

interesting one layer up

- Remove code duplication in handle_events

- Make `try_next` try harder (instead of bailing on a boring event); I

think that was the original intention

- Remove recursion from `next` (I think that's clearer? but maybe just

what I'm used to)
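
The "try harder" and "no recursion" points can be sketched as a loop that skips uninteresting events. The `Event` enum and `try_next_key_press` below are hypothetical and much simpler than the real event types; they only illustrate the control flow.

```rust
#[derive(Debug, Clone, PartialEq)]
enum Event {
    KeyPress(char),
    KeyRelease(char),
    Resize,
}

/// Pull events until a key press turns up or the source runs dry, instead of
/// giving up (or recursing) on the first uninteresting event.
fn try_next_key_press<I: Iterator<Item = Event>>(events: &mut I) -> Option<char> {
    for event in events {
        if let Event::KeyPress(c) = event {
            return Some(c);
        }
    }
    None
}
```

A loop keeps the stack depth constant no matter how many non-key-press events arrive in a row, which is one reason to prefer it over recursion here.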

# User-Facing Changes

None

# Tests + Formatting

This cleans up existing code, no new test coverage.

# Description

This pull request merges `polars sink` and `polars to-*` into one

command `polars save`.

# User-Facing Changes

- `polars to-*` commands have all been replaced with `polars save`. When

saving a lazy frame to a type that supports a polars sink operation, a

sink operation will be performed. Sink operations are much more

performant, performing a collect while streaming to the file system.

Supersedes #13554

(not sure how to narrow the word ignore to a file, maybe the better

course of action would be to mark this file as an ignore with all the

cryptic fileendings)

This PR closes [Issue #13482](https://github.com/nushell/nushell/issues/13482)

# Description

This PR makes all math commands usable in `const` contexts.

# User-Facing Changes

The math commands now can be used as constant methods.

### Some Examples

```

> const MODE = [3 3 9 12 12 15] | math mode

> $MODE

╭───┬────╮

│ 0 │ 3 │

│ 1 │ 12 │

╰───┴────╯

> const LOG = [16 8 4] | math log 2

> $LOG

╭───┬──────╮

│ 0 │ 4.00 │

│ 1 │ 3.00 │

│ 2 │ 2.00 │

╰───┴──────╯

> const VAR = [1 3 5] | math variance

> $VAR

2.6666666666666665

```
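
As a cross-check of the `math variance` output above, the value is what a population variance (dividing by the number of elements rather than one less) gives for `[1 3 5]`. The function below is an independent sketch, not nushell's implementation.

```rust
/// Population variance: the mean of squared deviations from the mean.
/// Returns NaN for an empty slice, since it divides by the length.
fn population_variance(values: &[f64]) -> f64 {
    let n = values.len() as f64;
    let mean = values.iter().sum::<f64>() / n;
    values.iter().map(|v| (v - mean).powi(2)).sum::<f64>() / n
}
```

For `[1.0, 3.0, 5.0]` the mean is 3, the squared deviations are 4, 0 and 4, and 8 / 3 is the `2.6666666666666665` shown in the example.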

# Tests + Formatting

Tests are added for all of the math commands to test their constant

behavior.

I mostly focused on the actual user experience, not the correctness of

the methods and algorithms.

# After Submitting

I think this change doesn't require any additional documentation. Feel

free to correct me on this, please.

# Description

This exposes the `LazyFrame::sink_*` functionality to allow a streaming

collect directly to the filesystem. This is useful when working with data

that is too large to fit into memory.

# User-Facing Changes

- Introduction of the `polars sink` command

# Description

Prior to this pull request, `polars first` and `polars last` would collect a

lazy frame into an eager frame before performing operations. Now `polars

first` will do a `LazyFrame::limit` and `polars last` will perform a

`LazyFrame::tail`. This is really useful when working with very large

datasets.

# Description

When opening a dataframe the default operation will be to create a lazy

frame if possible. This works much better with large datasets and

supports hive format.

# User-Facing Changes

- `--lazy` is no longer a valid option. `--eager` must be used to

explicitly open an eager dataframe.

Bumps [mockito](https://github.com/lipanski/mockito) from 1.4.0 to

1.5.0.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a

href="https://github.com/lipanski/mockito/releases">mockito's

releases</a>.</em></p>

<blockquote>

<h2>1.5.0</h2>

<ul>

<li><strong>[Breaking]</strong> <a

href="https://redirect.github.com/lipanski/mockito/pull/198">Upgrade</a>

to hyper v1</li>

</ul>

<p>Thanks to <a

href="https://github.com/tottoto"><code>@tottoto</code></a></p>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="f1c3fe1b7f"><code>f1c3fe1</code></a>

Bump to 1.5.0</li>

<li><a

href="08f2fa322d"><code>08f2fa3</code></a>

Merge pull request <a

href="https://redirect.github.com/lipanski/mockito/issues/199">#199</a>

from tottoto/refactor-response-body</li>

<li><a

href="42e3efe734"><code>42e3efe</code></a>

Refactor response body</li>

<li><a

href="f477e54857"><code>f477e54</code></a>

Merge pull request <a

href="https://redirect.github.com/lipanski/mockito/issues/198">#198</a>

from tottoto/update-to-hyper-1</li>

<li><a

href="e8694ae991"><code>e8694ae</code></a>

Update to hyper 1</li>

<li><a

href="b152b76130"><code>b152b76</code></a>

Depend on some crate directly</li>

<li>See full diff in <a

href="https://github.com/lipanski/mockito/compare/1.4.0...1.5.0">compare

view</a></li>

</ul>

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

# Description

By popular demand (a.k.a.

https://github.com/nushell/nushell.github.io/issues/1035), provide an

example of a type signature in the `def` help.

# User-Facing Changes

Help/Doc

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

N/A

Bumps [quick-xml](https://github.com/tafia/quick-xml) from 0.31.0 to

0.32.0.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a

href="https://github.com/tafia/quick-xml/releases">quick-xml's

releases</a>.</em></p>

<blockquote>

<h2>v0.32.0</h2>

<h2>Significant Changes</h2>

<p>The method of reporting positions of errors has changed - use

<code>error_position()</code> to get an offset of the error position.

For <code>SyntaxError</code>s the range

<code>error_position()..buffer_position()</code> also will represent a

span of error.</p>

<h3>⚠️ Breaking Changes</h3>

<p>The way to configure parser has changed. Now all configuration is

contained in the <code>Config</code> struct and can be applied at once.

When <code>serde-types</code> feature is enabled, configuration is

serializable.</p>

<p>The way of resolve entities with <code>unescape_with</code> has

changed. Those methods no longer resolve predefined entities

(<code>lt</code>, <code>gt</code>, <code>apos</code>, <code>quot</code>,

<code>amp</code>). <code>NoEntityResolver</code> renamed to

<code>PredefinedEntityResolver</code>.</p>

<p><code>Writer::create_element</code> now accepts <code>impl

Into&lt;Cow&lt;str&gt;&gt;</code> instead of <code>&amp;impl

AsRef&lt;str&gt;</code>.</p>

<p>Minimum supported version of serde raised to 1.0.139</p>

<p>The full changelog is below.</p>

<h2>What's Changed</h2>

<h3>New Features</h3>

<ul>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/513">#513</a>:

Allow to continue parsing after getting new

<code>Error::IllFormed</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/677">#677</a>:

Added methods <code>config()</code> and <code>config_mut()</code> to

inspect and change the parser configuration. Previous builder methods on

<code>Reader</code> / <code>NsReader</code> was replaced by direct

access to fields of config using

<code>reader.config_mut().&lt;...&gt;</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/684">#684</a>:

Added a method <code>Config::enable_all_checks</code> to turn on or off

all well-formedness checks.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/362">#362</a>:

Added <code>escape::minimal_escape()</code> which escapes only

<code>&amp;</code> and <code>&lt;</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/362">#362</a>:

Added <code>BytesCData::minimal_escape()</code> which escapes only

<code>&amp;</code> and <code>&lt;</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/362">#362</a>:

Added <code>Serializer::set_quote_level()</code> which allow to set

desired level of escaping.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/705">#705</a>:

Added <code>NsReader::prefixes()</code> to list all the prefixes

currently declared.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/629">#629</a>:

Added a default case to

<code>impl_deserialize_for_internally_tagged_enum</code> macro so that

it can handle every attribute that does not match existing cases within

an enum variant.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/722">#722</a>:

Allow to pass owned strings to <code>Writer::create_element</code>. This

is breaking change!</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/275">#275</a>:

Added <code>ElementWriter::new_line()</code> which enables pretty

printing elements with multiple attributes.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/743">#743</a>:

Added <code>Deserializer::get_ref()</code> to get XML Reader from serde

Deserializer</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/734">#734</a>:

Added helper functions to resolve predefined XML and HTML5 entities:

<ul>

<li><code>quick_xml::escape::resolve_predefined_entity</code></li>

<li><code>quick_xml::escape::resolve_xml_entity</code></li>

<li><code>quick_xml::escape::resolve_html5_entity</code></li>

</ul>

</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/753">#753</a>:

Added parser for processing instructions:

<code>quick_xml::reader::PiParser</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/754">#754</a>:

Added parser for elements:

<code>quick_xml::reader::ElementParser</code>.</li>

</ul>

<h3>Bug Fixes</h3>

<ul>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/622">#622</a>:

Fix wrong disregarding of not closed markup, such as lone

<code>&lt;</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/684">#684</a>:

Fix incorrect position reported for

<code>Error::IllFormed(DoubleHyphenInComment)</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/684">#684</a>:

Fix incorrect position reported for

<code>Error::IllFormed(MissingDoctypeName)</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/704">#704</a>:

Fix empty tags with attributes not being expanded when

<code>expand_empty_elements</code> is set to true.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/683">#683</a>:

Use local tag name when check tag name against possible names for

field.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/753">#753</a>:

Correctly determine end of processing instructions and XML

declaration.</li>

</ul>

<h3>Misc Changes</h3>

<ul>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/675">#675</a>:

Minimum supported version of serde raised to 1.0.139</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/675">#675</a>:

Rework the <code>quick_xml::Error</code> type to provide more accurate

information:</li>

</ul>

<!-- raw HTML omitted -->

</blockquote>

<p>... (truncated)</p>

</details>

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a

href="https://github.com/tafia/quick-xml/blob/master/Changelog.md">quick-xml's

changelog</a>.</em></p>

<blockquote>

<h2>0.32.0 -- 2024-06-10</h2>

<p>The way to configure parser is changed. Now all configuration is

contained in the

<code>Config</code> struct and can be applied at once. When

<code>serde-types</code> feature is enabled,

configuration is serializable.</p>

<p>The method of reporting positions of errors has changed - use

<code>error_position()</code>

to get an offset of the error position. For <code>SyntaxError</code>s

the range

<code>error_position()..buffer_position()</code> also will represent a

span of error.</p>

<p>The way of resolve entities with <code>unescape_with</code> are

changed. Those methods no longer

resolve predefined entities.</p>

<h3>New Features</h3>

<ul>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/513">#513</a>:

Allow to continue parsing after getting new

<code>Error::IllFormed</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/677">#677</a>:

Added methods <code>config()</code> and <code>config_mut()</code> to

inspect and change the parser

configuration. Previous builder methods on <code>Reader</code> /

<code>NsReader</code> was replaced by

direct access to fields of config using

<code>reader.config_mut().&lt;...&gt;</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/684">#684</a>:

Added a method <code>Config::enable_all_checks</code> to turn on or off

all

well-formedness checks.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/362">#362</a>:

Added <code>escape::minimal_escape()</code> which escapes only

<code>&amp;</code> and <code>&lt;</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/362">#362</a>:

Added <code>BytesCData::minimal_escape()</code> which escapes only

<code>&amp;</code> and <code>&lt;</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/362">#362</a>:

Added <code>Serializer::set_quote_level()</code> which allow to set

desired level of escaping.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/705">#705</a>:

Added <code>NsReader::prefixes()</code> to list all the prefixes

currently declared.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/629">#629</a>:

Added a default case to

<code>impl_deserialize_for_internally_tagged_enum</code> macro so that

it can handle every attribute that does not match existing cases within

an enum variant.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/722">#722</a>:

Allow to pass owned strings to <code>Writer::create_element</code>. This

is breaking change!</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/275">#275</a>:

Added <code>ElementWriter::new_line()</code> which enables pretty

printing elements with multiple attributes.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/743">#743</a>:

Added <code>Deserializer::get_ref()</code> to get XML Reader from serde

Deserializer</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/734">#734</a>:

Added helper functions to resolve predefined XML and HTML5 entities:

<ul>

<li><code>quick_xml::escape::resolve_predefined_entity</code></li>

<li><code>quick_xml::escape::resolve_xml_entity</code></li>

<li><code>quick_xml::escape::resolve_html5_entity</code></li>

</ul>

</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/753">#753</a>:

Added parser for processing instructions:

<code>quick_xml::reader::PiParser</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/754">#754</a>:

Added parser for elements:

<code>quick_xml::reader::ElementParser</code>.</li>

</ul>

<h3>Bug Fixes</h3>

<ul>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/622">#622</a>:

Fix wrong disregarding of not closed markup, such as lone

<code>&lt;</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/684">#684</a>:

Fix incorrect position reported for

<code>Error::IllFormed(DoubleHyphenInComment)</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/684">#684</a>:

Fix incorrect position reported for

<code>Error::IllFormed(MissingDoctypeName)</code>.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/704">#704</a>:

Fix empty tags with attributes not being expanded when

<code>expand_empty_elements</code> is set to true.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/683">#683</a>:

Use local tag name when check tag name against possible names for

field.</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/753">#753</a>:

Correctly determine end of processing instructions and XML

declaration.</li>

</ul>

<h3>Misc Changes</h3>

<ul>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/675">#675</a>:

Minimum supported version of serde raised to 1.0.139</li>

<li><a

href="https://redirect.github.com/tafia/quick-xml/issues/675">#675</a>:

Rework the <code>quick_xml::Error</code> type to provide more accurate

information:</li>

</ul>

<!-- raw HTML omitted -->

</blockquote>

<p>... (truncated)</p>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="8d38e4cc14"><code>8d38e4c</code></a>

Release 0.32.0</li>

<li><a

href="e6f7be47a7"><code>e6f7be4</code></a>

Add #[inline] to methods implementing XmlSource</li>

<li><a

href="33b9dc59c7"><code>33b9dc5</code></a>

Increase position outside of XmlSource::skip_one</li>

<li><a

href="704ce89239"><code>704ce89</code></a>

Generalize reading methods of PI and element</li>

<li><a

href="6f1a644dcc"><code>6f1a644</code></a>

Rewrite read_element like read_pi</li>

<li><a

href="0a6ecd6498"><code>0a6ecd6</code></a>

Add reusable parser for XML element and use it internally</li>

<li><a

href="02de8a51bf"><code>02de8a5</code></a>

Remove unnecessary block</li>

<li><a

href="0cb09fbda9"><code>0cb09fb</code></a>

Use <code>if let</code> instead of <code>match</code></li>

<li><a

href="6c58bef3ab"><code>6c58bef</code></a>

Implement XmlSource::read_pi like XmlSource::read_element</li>

<li><a

href="79b2fda9fe"><code>79b2fda</code></a>

Stop at the <code>&gt;</code> in PiParser which is consistent with other

search functions</li>

<li>Additional commits viewable in <a

href="https://github.com/tafia/quick-xml/compare/v0.31.0...v0.32.0">compare

view</a></li>

</ul>

</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't

alter it yourself. You can also trigger a rebase manually by commenting

`@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Bumps [indexmap](https://github.com/indexmap-rs/indexmap) from 2.2.6 to

2.3.0.

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a

href="https://github.com/indexmap-rs/indexmap/blob/master/RELEASES.md">indexmap's

changelog</a>.</em></p>

<blockquote>

<h2>2.3.0</h2>

<ul>

<li>Added trait <code>MutableEntryKey</code> for opt-in mutable access

to map entry keys.</li>

<li>Added method <code>MutableKeys::iter_mut2</code> for opt-in mutable

iteration of map

keys and values.</li>

</ul>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="22c0b4e0f3"><code>22c0b4e</code></a>

Merge pull request <a

href="https://redirect.github.com/indexmap-rs/indexmap/issues/335">#335</a>

from epage/mut</li>

<li><a

href="39f7cc097a"><code>39f7cc0</code></a>

Release 2.3.0</li>

<li><a

href="6049d518a0"><code>6049d51</code></a>

feat(map): Add MutableKeys::iter_mut2</li>

<li><a

href="65c3c46e37"><code>65c3c46</code></a>

feat(map): Add MutableEntryKey</li>

<li><a

href="7f7d39f734"><code>7f7d39f</code></a>

Merge pull request <a

href="https://redirect.github.com/indexmap-rs/indexmap/issues/332">#332</a>

from waywardmonkeys/missing-indentation-in-doc-comment</li>

<li><a

href="8222a59a85"><code>8222a59</code></a>

Fix missing indentation in doc comment.</li>

<li><a

href="1a71dde63d"><code>1a71dde</code></a>

Merge pull request <a

href="https://redirect.github.com/indexmap-rs/indexmap/issues/327">#327</a>

from waywardmonkeys/dep-update-dev-dep-itertools</li>

<li><a

href="ac2a8a5a40"><code>ac2a8a5</code></a>

deps(dev): Update <code>itertools</code></li>

<li>See full diff in <a

href="https://github.com/indexmap-rs/indexmap/compare/2.2.6...2.3.0">compare

view</a></li>

</ul>

</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't

alter it yourself. You can also trigger a rebase manually by commenting

`@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

- Add `nu_plugin_polars` to the list of plugins in

`build-all-maclin.sh`.

- Add `nu_plugin_polars` to the list of plugins in `build-all.nu`.

- Add `nu_plugin_polars` to the list of plugins in `install-all.ps1`.

- Add `nu_plugin_polars` to the list of plugins in `install-all.sh`.

- Add `nu_plugin_polars` to the list of plugins in `uninstall-all.sh`.


# Description


# User-Facing Changes


# Tests + Formatting


# After Submitting


This PR will close #13501

# Description

This PR expands on [the relay of signals to running plugin

processes](https://github.com/nushell/nushell/pull/13181). The Ctrlc relay has been generalized to `SignalAction::Interrupt`, and when `reset_signal` is called on the main `EngineState`, a `SignalAction::Reset` is now relayed to running plugins.

# User-Facing Changes

The signal handler closure now takes a `signals::SignalAction`, while previously it took no arguments. The handler will now be called on both interrupt and reset. The method to register a handler on the plugin side is now called `register_signal_handler` instead of `register_ctrlc_handler` (see this [example](https://github.com/nushell/nushell/pull/13510/files#diff-3e04dff88fd0780a49778a3d1eede092ec729a1264b4ef07ca0d2baa859dad05L38)).

This will only affect plugin authors who have started making use of

https://github.com/nushell/nushell/pull/13181, which isn't currently

part of an official release.

The change also requires all of a user's plugins to be recompiled so that they do not error when a signal is received on the `PluginInterface`.
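The new handler shape can be sketched roughly as follows. This is a minimal, self-contained illustration, not the plugin API: the `SignalAction` enum here is a local stand-in with only the two variants named above, and `Handlers`, `register`, `relay`, and `demo_relay` are hypothetical names invented for the sketch.

```rust
use std::sync::{Arc, Mutex};

/// Local stand-in for `signals::SignalAction` (assumption: only the two
/// variants mentioned in this description are modeled).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum SignalAction {
    Interrupt,
    Reset,
}

/// Hypothetical registry of handlers; the real plugin interface is not shown.
#[derive(Default)]
pub struct Handlers {
    handlers: Vec<Box<dyn Fn(SignalAction) + Send + Sync>>,
}

impl Handlers {
    /// Stores a handler that receives the action, instead of taking no arguments.
    pub fn register(&mut self, handler: impl Fn(SignalAction) + Send + Sync + 'static) {
        self.handlers.push(Box::new(handler));
    }

    /// Calls every registered handler with the given action.
    pub fn relay(&self, action: SignalAction) {
        for handler in &self.handlers {
            handler(action);
        }
    }
}

/// Registers one handler, relays an interrupt followed by a reset, and
/// returns the actions the handler observed, in order.
pub fn demo_relay() -> Vec<SignalAction> {
    let seen = Arc::new(Mutex::new(Vec::new()));
    let sink = Arc::clone(&seen);
    let mut handlers = Handlers::default();
    handlers.register(move |action| sink.lock().unwrap().push(action));
    handlers.relay(SignalAction::Interrupt);
    handlers.relay(SignalAction::Reset);
    let observed = seen.lock().unwrap().clone();
    observed
}
```

A handler written this way can match on the action, for example to clear an interrupted flag on `Reset`.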

# Testing

```

: example ctrlc

interrupt status: false

waiting for interrupt signal...

^Cinterrupt status: true

peace.

Error: × Operation interrupted

╭─[display_output hook:1:1]

1 │ if (term size).columns >= 100 { table -e } else { table }

· ─┬

· ╰── This operation was interrupted

╰────

: example ctrlc

interrupt status: false <-- NOTE status is false

waiting for interrupt signal...

^Cinterrupt status: true

peace.

Error: × Operation interrupted

╭─[display_output hook:1:1]

1 │ if (term size).columns >= 100 { table -e } else { table }

· ─┬

· ╰── This operation was interrupted

╰────

```

# Description

Attempt to guess the content type of a file when opening it with `--raw`, and set it in the pipeline metadata.

<img width="644" alt="Screenshot 2024-08-02 at 11 30 10"

src="https://github.com/user-attachments/assets/071f0967-c4dd-405a-b8c8-f7aa073efa98">

# User-Facing Changes

- Content of files can be directly piped into commands like `http post`

with the content type set appropriately when using `--raw`.
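As a rough illustration of extension-based guessing, the sketch below maps a file extension to a MIME type. The function name `guess_content_type` and the small lookup table are assumptions made for the example; they are not the lookup Nushell actually performs.

```rust
use std::path::Path;

/// Guesses a MIME type from a file extension.
/// The table is a tiny illustrative subset (assumption), not Nushell's lookup.
pub fn guess_content_type(path: &str) -> Option<&'static str> {
    // Files without an extension yield `None`.
    let extension = Path::new(path).extension()?.to_str()?.to_ascii_lowercase();
    match extension.as_str() {
        "json" => Some("application/json"),
        "csv" => Some("text/csv"),
        "txt" => Some("text/plain"),
        "html" | "htm" => Some("text/html"),
        _ => None,
    }
}
```

Returning `None` for unknown extensions leaves the metadata unset rather than guessing wrongly.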

# Description

When using a format string, `into datetime` would disallow an `int` even

when it logically made sense. This was mainly a problem when attempting

to convert a Unix epoch to Nushell `datetime`. Unix epochs are often

stored or returned as `int` in external data sources.

```nu

1722821463 | into datetime -f '%s'

Error: nu::shell::only_supports_this_input_type

× Input type not supported.

╭─[entry #3:1:1]

1 │ 1722821463 | into datetime -f '%s'

· ─────┬──── ──────┬──────

· │ ╰── only string input data is supported

· ╰── input type: int

╰────

```

While the workaround was simply to pipe the `int` through `to text`, this PR handles the use case automatically.

It is essentially a ~5-line change that moves the current parsing into a closure that is called for both strings and ints converted to strings.
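The shape of that change can be sketched as below. It is a simplified illustration under stated assumptions: `Value` is a hypothetical two-variant stand-in for Nushell's value type, and `epoch_seconds` only handles the `%s` case by parsing the text as an integer.

```rust
/// Hypothetical, trimmed-down value type; not Nushell's real `Value`.
pub enum Value {
    Int(i64),
    String(String),
}

/// Parses input as Unix epoch seconds (the `%s` case only).
pub fn epoch_seconds(input: &Value) -> Result<i64, String> {
    // One parsing closure shared by both input types.
    let parse = |text: &str| -> Result<i64, String> {
        text.trim()
            .parse::<i64>()
            .map_err(|err| format!("cannot parse {:?} as epoch seconds: {}", text, err))
    };

    match input {
        Value::String(text) => parse(text.as_str()),
        // Ints are rendered to text first, then take the same path.
        Value::Int(number) => parse(number.to_string().as_str()),
    }
}
```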

# User-Facing Changes

After the change:

```nu

[

1722821463

"1722821463"

0

] | each { into datetime -f '%s' }

╭───┬──────────────╮

│ 0 │ 10 hours ago │

│ 1 │ 10 hours ago │

│ 2 │ 54 years ago │

╰───┴──────────────╯

```

# Tests + Formatting

Test case added.

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

# Description

This PR updates nushell to the latest reedline commit which has some vi

keyboard mode changes.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

Empty cells were being skipped, causing data to appear in the wrong columns. By including the cells, data should appear in the correct columns now. Fixes #10194.

Before:

```

$ [[a b c]; [1 null 3] [4 5 6]] | to html --partial | query web --as-table [a b c]

╭───┬───┬───┬─────────────────────╮

│ # │ a │ b │ c │

├───┼───┼───┼─────────────────────┤

│ 0 │ 1 │ 3 │ Missing column: 'c' │

│ 1 │ 4 │ 5 │ 6 │

╰───┴───┴───┴─────────────────────╯

```

After:

```

$ [[a b c]; [1 null 3] [4 5 6]] | to html --partial | query web --as-table [a b c]

╭───┬───┬───┬───╮

│ # │ a │ b │ c │

├───┼───┼───┼───┤

│ 0 │ 1 │ │ 3 │

│ 1 │ 4 │ 5 │ 6 │

╰───┴───┴───┴───╯

```
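The misalignment shown above can be reproduced with a small sketch. Both function names are hypothetical and the code only models the pairing of headers with cells, not the plugin's HTML handling.

```rust
/// Pairs each header with the cell at the same position.
/// Empty cells are kept, so later cells stay under their own header.
pub fn row_keeping_empty(headers: &[&str], cells: &[&str]) -> Vec<(String, String)> {
    headers
        .iter()
        .zip(cells.iter())
        .map(|(header, cell)| (header.to_string(), cell.to_string()))
        .collect()
}

/// The faulty variant: dropping empty cells first shifts the rest to the left,
/// so the last header ends up without a value.
pub fn row_skipping_empty(headers: &[&str], cells: &[&str]) -> Vec<(String, String)> {
    let kept = cells.iter().filter(|cell| !cell.is_empty());
    headers
        .iter()
        .zip(kept)
        .map(|(header, cell)| (header.to_string(), cell.to_string()))
        .collect()
}
```

With headers `a b c` and cells `1`, empty, `3`, the skipping variant puts `3` under `b` and leaves `c` missing, which matches the "Before" table.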

Co-authored-by: James Chen-Smith <jameschensmith@gmail.com>


Fixes #13204

# Description


When the completion mode is set to `prefix`, path completions explicitly

check for and prefer an exact match for a basename instead of longer or

similar names.
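One way to picture the rule is the sketch below. It assumes, based on the examples in this description, that the exact-match preference applies only to a path component that is followed by a separator; `complete_component` and its parameters are hypothetical names, not the completer's real interface.

```rust
/// Returns prefix-mode candidates for one path component.
/// Assumption: when the component is followed by a separator and an entry
/// matches it exactly, only that entry is kept.
pub fn complete_component<'a>(
    entries: &[&'a str],
    typed: &str,
    followed_by_separator: bool,
) -> Vec<&'a str> {
    if followed_by_separator {
        if let Some(exact) = entries.iter().copied().find(|entry| *entry == typed) {
            return vec![exact];
        }
    }
    // Otherwise fall back to plain prefix matching.
    entries
        .iter()
        .copied()
        .filter(|entry| entry.starts_with(typed))
        .collect()
}
```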

# User-Facing Changes


Exact match is inactive since there's no trailing slash

```

~/Public/nushell| ls crates/nu-plugin<tab>

crates/nu-plugin/ crates/nu-plugin-core/ crates/nu-plugin-engine/ crates/nu-plugin-protocol/

crates/nu-plugin-test-support/

```

Exact match is active

```

~/Public/nushell| ls crates/nu-plugin/<tab>

crates/nu-plugin/Cargo.toml crates/nu-plugin/LICENSE crates/nu-plugin/README.md crates/nu-plugin/src/

```

Fuzzy matching keeps its existing behavior

```

~/Public/nushell> $env.config.completions.algorithm = "fuzzy";

~/Public/nushell| ls crates/nu-plugin/car

crates/nu-cmd-plugin/Cargo.toml crates/nu-plugin/Cargo.toml crates/nu-plugin-core/Cargo.toml crates/nu-plugin-engine/Cargo.toml

crates/nu-plugin-protocol/Cargo.toml crates/nu-plugin-test-support/Cargo.toml

```

# Tests + Formatting


# After Submitting


# Description

This seems to be a minor copy paste mistake. cc @Embers-of-the-Fire

Followup to #13526

# User-Facing Changes

(-)

# Tests + Formatting

(-)

# Description

Part 4 of replacing std::path types with nu_path types added in

https://github.com/nushell/nushell/pull/13115. This PR migrates various

tests throughout the code base.

In some `if let`s we ran `SharedCow::to_mut` both for the test and to get access to a mutable reference in the happy path. Internally, `Arc::make_mut` has to read atomics and, if necessary, clone.

For the else branches, where we still want to modify the record, we previously called this again (not just in Rust, confirmed in the asm). This would have introduced a `call` instruction and its cost (even if it would be guaranteed to take the short path in `Arc::make_mut`).

Lifting it gets rid of this.
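The lifting can be sketched with plain `std` types. This is an illustration only: `Record` is a hypothetical alias over a `BTreeMap`, and `upsert` is an invented helper rather than code from the PR.

```rust
use std::collections::BTreeMap;
use std::sync::Arc;

/// Hypothetical record type for the sketch.
pub type Record = BTreeMap<String, i64>;

/// Sets `key` to `value`, calling `Arc::make_mut` once for both branches.
pub fn upsert(record: &mut Arc<Record>, key: &str, value: i64) {
    // Lifted out of the branches: one atomic check and at most one clone.
    let inner = Arc::make_mut(record);
    if let Some(slot) = inner.get_mut(key) {
        // Happy path: the key exists, overwrite in place.
        *slot = value;
    } else {
        // Else branch reuses the same mutable reference instead of
        // calling `Arc::make_mut` a second time.
        inner.insert(key.to_string(), value);
    }
}
```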

# Description

Since we make the promise that record keys/columns are exclusive, we don't have to go through all columns after we have found the first one.

This should permit some short-circuiting if the column is found early.
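A minimal sketch of the idea, assuming a record stored as a slice of name/value pairs with unique names; `find_column` is a hypothetical helper, not the function changed in the PR.

```rust
/// Looks up a column by name and stops at the first match.
/// Relies on column names being unique, as stated above.
pub fn find_column<'a>(columns: &'a [(String, i64)], name: &str) -> Option<&'a i64> {
    columns
        .iter()
        // `find` returns as soon as the predicate is true, so later
        // columns are never inspected.
        .find(|(column, _)| column.as_str() == name)
        .map(|(_, value)| value)
}
```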

# User-Facing Changes

(-)

# Tests + Formatting

(-)


# Description


The original implementation contains multiple `expect` calls, which can cause an internal runtime panic.

This PR forks the `css` selector impl and makes it return an error that nushell can recognize.

**Note:** The original impl is still used in pre-defined selector

implementations, but they

should never fail, so the `css` fn is preserved.

Closes #13496.
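The general pattern of returning an error instead of panicking can be sketched as follows. This is a toy check, not a CSS parser: `SelectorError`, its variants, and `parse_selector` are hypothetical names invented for the illustration.

```rust
/// Hypothetical error type for the sketch; not taken from the plugin.
#[derive(Debug, PartialEq, Eq)]
pub enum SelectorError {
    Empty,
    UnbalancedBracket,
}

/// Validates a selector string and reports problems as `Err`
/// where an `expect` would have panicked.
pub fn parse_selector(query: &str) -> Result<String, SelectorError> {
    let trimmed = query.trim();
    if trimmed.is_empty() {
        return Err(SelectorError::Empty);
    }
    // Toy well-formedness check: attribute brackets must pair up.
    let opens = trimmed.matches('[').count();
    let closes = trimmed.matches(']').count();
    if opens != closes {
        return Err(SelectorError::UnbalancedBracket);
    }
    Ok(trimmed.to_string())
}
```

The caller can then convert the `Err` into a user-facing error instead of crashing the process.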

# User-Facing Changes


Now `query web` will not panic when the `query` parameter is not given or has a syntax error.

```plain

❯ .\target\debug\nu.exe -c "http get https://www.rust-lang.org | query web"

Error: × CSS query parse error

╭─[source:1:38]

1 │ http get https://www.rust-lang.org | query web

· ────┬────

· ╰─┤ Unexpected error occurred. Please report this to the developer

· │ EmptySelector

╰────

help: cannot parse query as a valid CSS selector

```

# Tests + Formatting


- [x] `cargo fmt --all -- --check` to check standard code formatting

(`cargo fmt --all` applies these changes)

- [x] `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used`

to check that you're using the standard code style

- [x] `cargo test --workspace` to check that all tests pass (on Windows

make sure to [enable developer

mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- [x] `cargo run -- -c "use toolkit.nu; toolkit test stdlib"` to run the

tests for the standard library


# After Submitting


- [x] Impl change, no doc update.

---------

Co-authored-by: Stefan Holderbach <sholderbach@users.noreply.github.com>

# Description

Updates the `default` command description to be clearer and adds an example for missing values in a list of records.

# User-Facing Changes

Help/doc only

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

- Update `nothing` doc in Book to reference `default` per

https://github.com/nushell/nushell.github.io/issues/1073 - This was a

bit of a rabbit trail on the path to that update. ;-)

---------

Co-authored-by: Stefan Holderbach <sholderbach@users.noreply.github.com>

# Description

With this PR, we should be able to close

https://github.com/nushell/nushell.github.io/issues/1225

Help/doc/examples updated for:

* `cd` to show multi-dot traversal

* `cd` to show implicit `cd` with bare directory path

* Fixed/clarified another example that mentioned `$OLDPATH` while I was

in there

* `mv` and `cp` examples for multi-dot traversal

* Updated `cp` examples to use more consistent (and clear) filenames

# User-Facing Changes

Help/doc only

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

N/A

# Description

Clarified `random chars` help/doc:

* Default string length in absence of a `--length` arg is 25

* Characters are *"uniformly distributed over ASCII letters and numbers: a-z, A-Z and 0-9"* (copied from the [`rand` crate doc](https://docs.rs/rand/latest/rand/distributions/struct.Alphanumeric.html)).

# User-Facing Changes

Help/Doc only

# Description

This is mainly cleanup, but introduces a slight (positive, if anything)

behavior change:

Some menu layouts support only a subset of styles, but with this change

the user will still be able to configure them. This seems strictly

better - if reedline starts supporting one of the existing styles for a

particular layout, there won't be any need to update nushell code.

# User-Facing Changes

None

# Tests + Formatting

This simply factors out existing code; the old tests should still cover it.

---------

Co-authored-by: sholderbach <sholderbach@users.noreply.github.com>

Lints from stable or nightly toolchain that may have questionable added

value.

- **Contentious lint to contract into single `if let`**

- **Potential false positive around `AsRef`/`Deref` fun**

# Description

Part 3 of replacing `std::path` types with `nu_path` types added in

#13115. This PR targets the paths listed in `$nu`. That is, the home,

config, data, and cache directories.


# Description


Add an `--update, -u` switch to the `mv` command, matching the one on `cp`.

Closes #13458.

# User-Facing Changes


```plain

❯ help mv | find update

╭──────────┬───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╮

│ 0 │ -u, --update - move and overwite only when the SOURCE file is newer than the destination file or when the destination file is missing │

╰──────────┴───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

```

# Tests + Formatting


- [x] `cargo fmt --all -- --check` to check standard code formatting

(`cargo fmt --all` applies these changes)

- [x] `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used`

to check that you're using the standard code style

- [x] `cargo test --workspace` to check that all tests pass (on Windows

make sure to [enable developer

mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- [x] `cargo run -- -c "use toolkit.nu; toolkit test stdlib"` to run the

tests for the standard library


P.S.

The standard test kit (`nu-test-support`) doesn't provide a utility to create a file with a given modification timestamp, and I didn't find any test for this in the `cp` command. I tested on my local machine, but I'm not sure how to integrate it into CI. If unit testing is required, I may need your guidance.
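For what it's worth, one possible way to get files with controlled timestamps in a test is the standard library's `File::set_modified` (stable since Rust 1.75). The sketch below is only an illustration under that assumption: `create_with_mtime` and `should_move` are hypothetical helpers, not part of `nu-test-support` or of the `mv` implementation, and `should_move` merely restates the rule from the help text (move when the source is newer or the destination is missing).

```rust
use std::fs::{self, File};
use std::io;
use std::path::Path;
use std::time::SystemTime;

/// Hypothetical test helper: create (or truncate) a file and force its
/// modification time to `mtime`.
fn create_with_mtime(path: &Path, mtime: SystemTime) -> io::Result<()> {
    let file = File::create(path)?;
    file.set_modified(mtime)?;
    Ok(())
}

/// Hypothetical restatement of the `--update` rule from the help text:
/// move when the destination is missing or the source is strictly newer.
fn should_move(source: &Path, destination: &Path) -> io::Result<bool> {
    if !destination.exists() {
        return Ok(true);
    }
    let source_mtime = fs::metadata(source)?.modified()?;
    let destination_mtime = fs::metadata(destination)?.modified()?;
    Ok(source_mtime > destination_mtime)
}
```

A test could create an "old" and a "new" file an hour apart inside a scratch directory and assert on `should_move` in both directions, without sleeping between file creations.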

# After Submitting


- [x] Command docs are auto generated.

# Description

Before this change, `"hash sha256 123 ok" | split words` would return

`[hash sha ok]` - which is surprising to say the least.

Now it will return `[hash sha256 123 ok]`.
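To make the before/after difference concrete, here is a minimal sketch of the two behaviors. It is not how `split words` is implemented in nushell; both function names are made up for this example, and the only assumption is that "word characters" means letters before the change and letters plus digits after it.

```rust
/// Sketch of the old behavior (assumption): anything that is not a letter
/// separates words, so digits are dropped.
fn split_words_letters_only(input: &str) -> Vec<&str> {
    input
        .split(|c: char| !c.is_alphabetic())
        .filter(|word| !word.is_empty())
        .collect()
}

/// Sketch of the new behavior (assumption): letters and digits both count
/// as word characters, so `sha256` and `123` survive.
fn split_words_keep_digits(input: &str) -> Vec<&str> {
    input
        .split(|c: char| !c.is_alphanumeric())
        .filter(|word| !word.is_empty())
        .collect()
}
```

For the input `"hash sha256 123 ok"`, the first function gives `["hash", "sha", "ok"]` and the second gives `["hash", "sha256", "123", "ok"]`, matching the outputs quoted above.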

Refs:

https://discord.com/channels/601130461678272522/615253963645911060/1268151658572025856

# User-Facing Changes

`split words` will no longer remove digits.

# Tests + Formatting

Added a test for this specific case.

# After Submitting

# Description

Minor nitpicks, but the `random int` help and examples were a bit

ambiguous in several aspects. Updated to clarify that:

* An unconstrained `random int` returns a non-negative integer. That is, the smallest potential value is 0. Technically integers include negative numbers as well, but `random int` will never return one unless you pass it a range with a negative lower-bound.