# Description

This PR fixes a typo in the `into sqlite` command so that the parameter is
`--table-name` instead of `--table_name`.

fixes #12067

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This

helps us keep track of breaking changes. -->

# Tests + Formatting

<!--

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo

fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to

check that you're using the standard code style

- `cargo test --workspace` to check that all tests pass (on Windows make

sure to [enable developer

mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- `cargo run -- -c "use std testing; testing run-tests --path

crates/nu-std"` to run the tests for the standard library

> **Note**

> from `nushell` you can also use the `toolkit` as follows

> ```bash

> use toolkit.nu # or use an `env_change` hook to activate it

automatically

> toolkit check pr

> ```

-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the

documentation](https://github.com/nushell/nushell.github.io) after the

PR is merged, if necessary. This will help us keep the docs up to date.

-->

Hello! This is my first PR to nushell, as I was looking at things for

#5066. The usage text for the date commands seemed fine to me, so this

is just a bit of a tidy up of the examples, mostly the description text.

# Description

- Remove two incorrect examples for `date to-record` and `date to-table`

where nothing was piped in (which causes an error in actual use).

- Fix misleading descriptions in `date to-timezone` which erroneously

referred to Hawaii's time zone.

- Standardise on "time zone" in written descriptions.

- Generally tidy up example descriptions and improve consistency.

# User-Facing Changes

Only in related help text showing examples.

<!--

if this PR closes one or more issues, you can automatically link the PR

with

them by using one of the [*linking

keywords*](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue#linking-a-pull-request-to-an-issue-using-a-keyword),

e.g.

- this PR should close #xxxx

- fixes #xxxx

you can also mention related issues, PRs or discussions!

-->

# Description

<!--

Thank you for improving Nushell. Please, check our [contributing

guide](../CONTRIBUTING.md) and talk to the core team before making major

changes.

Description of your pull request goes here. **Provide examples and/or

screenshots** if your changes affect the user experience.

-->

Fix typos in comments

# User-Facing Changes


# Tests + Formatting


# After Submitting


Signed-off-by: geekvest <cuimoman@sohu.com>

# Description

This improves the resolution of the sleep commands by simply not

clamping to the default 100ms ctrl+c signal checking loop if the

passed-in duration is shorter.
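A minimal sketch of the idea, not the actual `sleep` implementation: sleep in steps that are never longer than the remaining time, and check an interrupt flag between steps. The names `CTRL_C_CHECK_INTERVAL`, `next_step`, and `interruptible_sleep` are made up for illustration; only the 100ms interval comes from the description above.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::thread;
use std::time::Duration;

/// Interval at which the interrupt flag is polled (100ms, per the description).
const CTRL_C_CHECK_INTERVAL: Duration = Duration::from_millis(100);

/// Length of the next sleep step: never longer than what is left.
fn next_step(remaining: Duration) -> Duration {
    remaining.min(CTRL_C_CHECK_INTERVAL)
}

/// Sleeps for `total`, polling `interrupted` between steps.
/// Returns `false` if the sleep was cut short by the flag.
fn interruptible_sleep(total: Duration, interrupted: &AtomicBool) -> bool {
    let mut remaining = total;
    while !remaining.is_zero() {
        if interrupted.load(Ordering::Relaxed) {
            return false;
        }
        let step = next_step(remaining);
        thread::sleep(step);
        remaining -= step;
    }
    true
}
```

With this shape a `sleep 10ms` performs a single 10ms step instead of waiting out a full 100ms polling interval, while longer sleeps still check the flag every 100ms.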

# User-Facing Changes

You can use smaller values in sleep.

```

# Before

timeit { 0..100 | each { |row| print $row; sleep 10ms; } } # +10sec

# After

timeit { 0..100 | each { |row| print $row; sleep 10ms; } } # +1sec

```

It still depends on the internal behavior of `thread::sleep` and the OS
timers. On Windows it doesn't seem to go much lower than 10 to 15ms, or
0 if you asked for that.

# After Submitting

Sleep didn't have anything documenting its minimum value, so this should

be more in line with its standard procedure. It will still never sleep

for less time than allocated.

Did you know `sleep` can take multiple durations, and it'll add them up?
I didn't.


# Description


This PR makes sure `$nu.default-config-dir` and `$nu.plugin-path` are

canonicalized.

# User-Facing Changes


`$nu.default-config-dir` (and `$nu.plugin-path`) will now give canonical

paths, with symlinks and whatnot resolved.
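As a rough illustration of what "canonical" means here, the sketch below uses the standard library's `std::fs::canonicalize`. Whether the PR uses this function or a Nushell-specific helper is not stated above, and the fall-back to the original path is an assumption of this sketch; `canonical_or_original` is a hypothetical name.

```rust
use std::fs;
use std::path::{Path, PathBuf};

/// Resolves symlinks and `.`/`..` components when the path exists;
/// otherwise returns the path unchanged.
/// The fallback is an assumption of this sketch, not behaviour confirmed by the PR.
fn canonical_or_original(path: &Path) -> PathBuf {
    fs::canonicalize(path).unwrap_or_else(|_| path.to_path_buf())
}
```

If the config directory is a symlink, the canonical form is the absolute path of the link's target rather than the path of the link itself.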

# Tests + Formatting


I've added a couple of tests to check that even if the config folder

and/or any of the config files within are symlinks, the `$nu.*`

variables are properly canonicalized. These tests unfortunately only run

on Linux and macOS, because I couldn't figure out how to change the

config directory on Windows. Also, given that they involve creating

files, I'm not sure if they're excessive, so I could remove one or two

of them.

# After Submitting


# Description

Show an example of loading from a custom file, and an example of adding
multiple entries to PATH. Loading from a custom file will hopefully allow
for greater modularity of configuration files out of the box for new
users. Adding multiple paths to PATH is very common, so this will help new
users too.

Adds this:

```

# To add multiple paths to PATH this may be simpler:

# use std "path add"

# $env.PATH = ($env.PATH | split row (char esep))

# path add /some/path

# path add ($env.CARGO_HOME | path join "bin")

# path add ($env.HOME | path join ".local" "bin")

# $env.PATH = ($env.PATH | uniq)

# To load from a custom file you can use:

# source ($nu.default-config-dir | path join 'custom.nu')

```

---------

Co-authored-by: Darren Schroeder <343840+fdncred@users.noreply.github.com>

# Description

Replace panics with errors in thread spawning.

Also adds `IntoSpanned` trait for easily constructing `Spanned`, and an

implementation of `From<Spanned<std::io::Error>>` for `ShellError`,

which is used to provide context for the error wherever there was a span

conveniently available. In general this should make it more convenient

to do the right thing with `std::io::Error` and always add a span to it

when it's possible to do so.
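The pattern can be sketched as follows. `Span`, `Spanned`, and `ShellError` here are heavily simplified stand-ins with assumed fields, and `spawn_worker` is a hypothetical helper; only the trait name and the `From<Spanned<std::io::Error>>` conversion come from the description above.

```rust
use std::io;
use std::thread;

/// Simplified stand-ins for Nushell's types; the field layout is assumed.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Span {
    start: usize,
    end: usize,
}

#[derive(Debug)]
struct Spanned<T> {
    item: T,
    span: Span,
}

/// Attach a span to any value.
trait IntoSpanned: Sized {
    fn into_spanned(self, span: Span) -> Spanned<Self>;
}

impl<T> IntoSpanned for T {
    fn into_spanned(self, span: Span) -> Spanned<Self> {
        Spanned { item: self, span }
    }
}

/// Hypothetical, reduced error type with a single I/O variant.
#[derive(Debug)]
enum ShellError {
    IoError { msg: String, span: Span },
}

impl From<Spanned<io::Error>> for ShellError {
    fn from(err: Spanned<io::Error>) -> Self {
        ShellError::IoError {
            msg: err.item.to_string(),
            span: err.span,
        }
    }
}

/// Spawn a named thread, returning an error instead of panicking on failure.
fn spawn_worker(span: Span) -> Result<thread::JoinHandle<u32>, ShellError> {
    thread::Builder::new()
        .name("worker".into())
        .spawn(|| 42)
        .map_err(|e| e.into_spanned(span).into())
}
```

The point of the design is that `std::thread::Builder::spawn` returns an `io::Result`, so a failed spawn can be reported with the span of the command that asked for it instead of aborting the process.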

# User-Facing Changes

Fewer panics!

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# Description

This PR allows `view source` to view aliases again. It looks like it's

been half broken for a while now.

fixes #12044

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description


Currently, in the test for interpolating strings at parse-time, the

formatted string includes `(X years ago)` (from formatting a date) (test

came from https://github.com/nushell/nushell/pull/11562). I didn't

realize when I was writing it that it would have to be updated every

year. This PR uses regex to check the output instead.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description


This command mixes input from multiple sources and sends items to the

final stream as soon as they're available. It can be called as part of a

pipeline with input, or it can take multiple closures and mix them that

way.

See `crates/nu-command/tests/commands/interleave.rs` for a practical

example. I imagine this will be most often used to run multiple commands

in parallel and print their outputs line-by-line. A stdlib command could

potentially use `interleave` to make this particular use case easier.

It's quite common to wish that nushell had a command for running things

in the background, and instead of providing job control, this provides

an alternative to some use cases for that by just allowing multiple

commands to run simultaneously and direct their output to the same

place.

This enables certain things that are not possible with `par-each` - for

example, you may wish to run `make` across several projects in parallel:

```nushell

(ls projects).name | par-each { |project| cd $project; make }

```

This works well enough, but the output will only be available after each

`make` command finishes. `interleave` allows you to get each line:

```nushell

interleave ...(

(ls projects).name | each { |project|

{

cd $project

make | lines | each { |line| {project: $project, out: $line} }

}

}

)

```

The result of this is a stream that you could process further - for

example, by saving to a text file.

Note that the closures themselves are not run in parallel. The initial

execution happens serially, and then the streams are consumed in

parallel.
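The consumption model described above can be sketched in plain Rust with one thread per source and a shared channel. This is only an illustration of the technique, not the command's actual code; the function below works on ordinary iterators rather than Nushell streams.

```rust
use std::sync::mpsc;
use std::thread;

/// Merge several sources into one stream, yielding items in arrival order.
/// The sources are created by the caller (serially); each one is then
/// drained on its own thread.
fn interleave<T, I>(sources: Vec<I>) -> impl Iterator<Item = T>
where
    T: Send + 'static,
    I: Iterator<Item = T> + Send + 'static,
{
    let (tx, rx) = mpsc::channel();
    for source in sources {
        let tx = tx.clone();
        thread::spawn(move || {
            for item in source {
                // Stop early if the consumer has gone away.
                if tx.send(item).is_err() {
                    break;
                }
            }
        });
    }
    // Drop the original sender so the receiver ends once all workers finish.
    drop(tx);
    rx.into_iter()
}
```

The order of items across sources is not deterministic, which matches the "as soon as they're available" behaviour; items from a single source still arrive in their original order.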

# User-Facing Changes


Adds a new command.

# Tests + Formatting


- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting


# Description


Fixes #12020

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description


This is a test of replacing the current Criterion microbenchmark tool
with [Divan](https://nikolaivazquez.com/blog/divan/), a new and more
straightforward microbenchmark suite.

Divan describes itself as robust to noise, which even allows it to be
used in CI settings. By default it has no external dependencies and is
very fast to run: its sampling method requires fewer samples, which makes
it a lot faster than Criterion.

The output is also nicely displayed, which makes it easy to get a quick
overview of the performance.

# User-Facing Changes


# Tests + Formatting


# After Submitting


Based off of #11760 to be mergeable without conflicts.

# Description


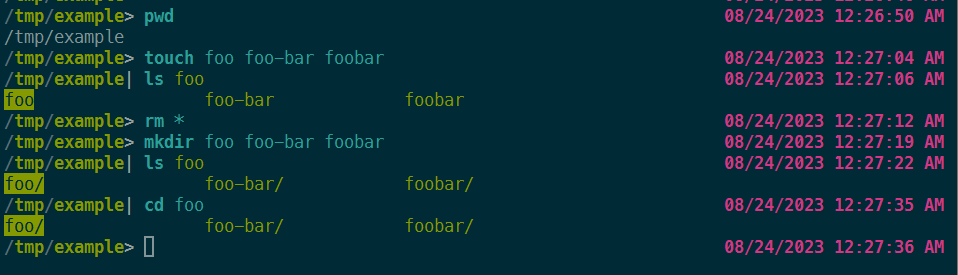

Fix for #11757.

The main issue in #11757 is I tried to copy the timestamp from one

directory to another only to realize that did not work whereas the

coreutils `^touch` had no problems. I thought `--reference` just did not

work, but apparently the whole `touch` command could not work on

directories because

`OpenOptions::new().write(true).create(true).open(&item)` tries to

create `touch`'s target in advance and then modify its timestamps. But

if the target is a directory that already exists then this would fail

even though the crate used for working with timestamps, `filetime`,

already works on directories.
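One way to avoid that failure, sketched under the assumption that creation should simply be skipped when the target already exists (the exact change in this PR may differ). `ensure_target_exists` is a hypothetical helper, and the timestamp update itself is left out because `filetime` is an external crate.

```rust
use std::fs::OpenOptions;
use std::io;
use std::path::Path;

/// Create `path` as an empty file only when nothing exists there yet.
/// Existing files and directories are left alone, so a later timestamp
/// update can still run on them.
fn ensure_target_exists(path: &Path) -> io::Result<()> {
    if !path.exists() {
        OpenOptions::new().write(true).create(true).open(path)?;
    }
    Ok(())
}
```

Calling the `OpenOptions` chain directly on an existing directory returns an error, which is the failure described above; with the existence check the directory is never opened for writing.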

# User-Facing Changes


I don't believe this should change any existing valid behaviors. It just

changes a non-working behavior.

# Tests + Formatting


~~I only could not run `cargo test` because I get compilation errors on

the latest main branch~~

All tests pass with `cargo test --features=sqlite`

# After Submitting


- Fixes #11997

# Description

Fixes the issue that comments are not ignored in SSV formatted data.

# User-Facing Changes

A comment at the beginning of SSV-formatted data is no longer included
in the resulting table.

# Tests + Formatting

The PR adds one test in the ssv.rs file. All previous test cases
still pass. Clippy and fmt have been run.

# Description

This PR removes our old nushell `mv` command in favor of the

uutils/coreutils `uu_mv` crate's `mv` command which we integrated in

0.90.1.

# User-Facing Changes

# Tests + Formatting

# After Submitting

# Description

This patches `StreamReader`'s iterator implementation to not return any

values after an I/O error has been encountered.

Without this, it's possible for a protocol error to cause the channel to

disconnect, in which case every call to `recv()` returns an error, which

causes the iterator to produce error values infinitely. There are some

commands that don't immediately stop after receiving an error, so it's
possible that they just get stuck in an infinite loop of errors. This fixes that

so the error is only produced once, and then the stream ends

artificially.
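A minimal sketch of the behaviour, using a generic wrapper rather than the real `StreamReader` (whose fields are not shown here). `StopAfterError` is a hypothetical name.

```rust
use std::io;

/// Wraps a fallible source and ends the stream after the first error.
struct StopAfterError<I> {
    inner: I,
    failed: bool,
}

impl<I> StopAfterError<I> {
    fn new(inner: I) -> Self {
        Self { inner, failed: false }
    }
}

impl<T, I> Iterator for StopAfterError<I>
where
    I: Iterator<Item = io::Result<T>>,
{
    type Item = io::Result<T>;

    fn next(&mut self) -> Option<Self::Item> {
        if self.failed {
            // An error was already reported once; end the stream.
            return None;
        }
        let item = self.inner.next();
        if matches!(item, Some(Err(_))) {
            self.failed = true;
        }
        item
    }
}
```

Even if the underlying source would keep returning errors forever, a consumer of the wrapper sees exactly one error followed by the end of the stream.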

# Description

After some iteration on globbing rules, I don't think `str escape-glob`
is needed anymore.

# User-Facing Changes

```nushell

❯ let f = "[ab]*.nu"

❯ $f | str escape-glob

Error: × str escape-glob is deprecated

╭─[entry #1:1:6]

1 │ $f | str escape-glob

· ───────┬───────

· ╰── if you are trying to escape a variable, you don't need to do it now

╰────

help: Remove `str escape-glob` call

[[]ab[]][*].nu

```

# Tests + Formatting

N/A

# After Submitting

N/A


fixes #12006

# Description


Process empty headers as well in the `to md` command.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

This fixes a race condition where all interfaces to a plugin might have

been dropped, but both sides are still expecting input, and the

`PluginInterfaceManager` doesn't get a chance to see that the interfaces

have been dropped and stop trying to consume input.

As the manager needs to hold on to a writer, we can't automatically

close the stream, but we also can't interrupt it if it's waiting to
read. So the best solution is to send a message to the plugin that we

are no longer going to be sending it any plugin calls, so that it knows

that it can exit when it's done.
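The shape of that handshake can be sketched with a channel standing in for the plugin's input stream. `EngineMessage`, its `Call` variant, and `run_plugin` are hypothetical and much simpler than the real protocol; only the `Goodbye` name comes from this PR.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical, reduced message set; only the `Goodbye` name comes from the PR.
enum EngineMessage {
    Call(String),
    Goodbye,
}

/// Plugin-side loop: handle calls until the engine says goodbye
/// or the channel closes. Returns the number of calls handled.
fn run_plugin(input: mpsc::Receiver<EngineMessage>) -> usize {
    let mut handled = 0;
    for message in input {
        match message {
            EngineMessage::Call(_name) => handled += 1,
            // No more calls will arrive, so it is safe to exit.
            EngineMessage::Goodbye => break,
        }
    }
    handled
}
```

The important property is that the loop can end while the sending side is still open, which is the situation the manager is in when it has to hold on to its writer.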

This race condition is a little bit tricky to trigger as-is, but can be

more noticeable when running plugins in a tight loop. If too many plugin

processes are spawned at one time, Nushell can start to encounter "too

many open files" errors, and not be very useful.

# User-Facing Changes

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

I will need to add `Goodbye` to the protocol docs

# Description

This PR adds `is-not-empty` as a counterpart to `is-empty`. It's the

same code but negates the results. This command has been asked for many

times. So, I thought it would be nice for our community to add it just

as a quality-of-life improvement. This allows people to stop writing

their `def is-not-empty [] { not ($in | is-empty) }` custom commands.

I'm sure some will disagree with adding this, but I think it's comparable
to having both `in` and `not-in`: it helps fill out the language and
makes it a little easier to use.

# User-Facing Changes


# Tests + Formatting


# After Submitting


[Related conversation on

Discord](https://discord.com/channels/601130461678272522/615329862395101194/1209951539901366292)

# Description


This is inspired by the Unix tee command, but significantly more

powerful. Rather than just writing to a file, you can do any kind of

stream operation that Nushell supports within the closure.

The equivalent of Unix `tee -a file.txt` would be, for example, `command

| tee { save -a file.txt }` - but of course this is Nushell, and you can

do the same with structured data to JSON objects, or even just run any

other command on the system with it.

A `--stderr` flag is provided for operating on the stderr stream from

external programs. This may produce unexpected results if the stderr

stream is not then also printed by something else - nushell currently

doesn't. See #11929 for the fix for that.

# User-Facing Changes


If someone was using the system `tee` command, they might be surprised

to find that it's different.

# Tests + Formatting

<!--

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo

fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to

check that you're using the standard code style

- `cargo test --workspace` to check that all tests pass (on Windows make

sure to [enable developer

mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- `cargo run -- -c "use std testing; testing run-tests --path

crates/nu-std"` to run the tests for the standard library

> **Note**

> from `nushell` you can also use the `toolkit` as follows

> ```bash

> use toolkit.nu # or use an `env_change` hook to activate it

automatically

> toolkit check pr

> ```

-->

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting


# Description

As the title says: on the latest main, nushell confuses users because it allows

implicit casting between glob and string:

```nushell

let x = "*.txt"

def glob-test [g: glob] { open $g }

glob-test $x

```

It always expands the glob, although `$x` is defined as a string.

This PR implements a solution from @kubouch:

> We could make it really strict and disallow all autocasting between

globs and strings because that's what's causing the "magic" confusion.

Then, modify all builtins that accept globs to accept oneof(glob,

string) and the rules would be that globs always expand and strings

never expand
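The rule in the quote can be sketched in Rust as follows. This is an illustration only, not Nushell's code: `PathArg`, `wildcard_match`, and `resolve` are hypothetical names, and the matcher supports only `*` as a stand-in for real glob expansion.

```rust
/// Hypothetical argument type: a builtin accepts either a glob or a string.
enum PathArg {
    Glob(String),
    Literal(String),
}

/// Tiny wildcard matcher that understands only `*` (illustrative stand-in
/// for real glob expansion).
fn wildcard_match(pattern: &str, name: &str) -> bool {
    let p: Vec<char> = pattern.chars().collect();
    let n: Vec<char> = name.chars().collect();
    let (mut pi, mut ni) = (0, 0);
    // Position of the last `*` seen and the name index it was tried at.
    let mut star: Option<(usize, usize)> = None;

    while ni < n.len() {
        if pi < p.len() && p[pi] == '*' {
            star = Some((pi, ni));
            pi += 1;
        } else if pi < p.len() && p[pi] == n[ni] {
            pi += 1;
            ni += 1;
        } else if let Some((sp, sn)) = star {
            // Backtrack: let the last `*` swallow one more character.
            pi = sp + 1;
            ni = sn + 1;
            star = Some((sp, sn + 1));
        } else {
            return false;
        }
    }
    while pi < p.len() && p[pi] == '*' {
        pi += 1;
    }
    pi == p.len()
}

/// Globs always expand against `entries`; strings never expand.
fn resolve(arg: &PathArg, entries: &[&str]) -> Vec<String> {
    match arg {
        PathArg::Glob(pattern) => entries
            .iter()
            .copied()
            .filter(|entry| wildcard_match(pattern, entry))
            .map(|entry| entry.to_string())
            .collect(),
        PathArg::Literal(text) => vec![text.clone()],
    }
}
```

Because the two cases are separate variants, a string can only become a glob through an explicit conversion, which is the role `into glob` plays.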

# User-Facing Changes

After this PR, users need to use `into glob` to invoke `glob-test` if

they pass a string variable:

```nushell

let x = "*.txt"

def glob-test [g: glob] { open $g }

glob-test ($x | into glob)

```

Otherwise, nushell returns an error:

```

3 │ glob-test $x

· ─┬

· ╰── can't convert string to glob

```

# Tests + Formatting

Done

# After Submitting

N/A

# Description

Fixes: #11912

# User-Facing Changes

After this change:

```

let x = '*.nu'; ^echo $x

```

will no longer expand the glob.

If users still want to expand the glob, there are 3 ways to do this:

```

# 1. use spread operation with `glob` command

let x = '*.nu'; ^echo ...(glob $x)

```

# Tests + Formatting

Done

# After Submitting

N/A

This PR should close #11693.

# Description

This PR adds an `--all` flag to the `clear` command in order to

clear the terminal and its history.

By default, the `clear` command only scrolls down.

In some cases, clearing the history as well can be useful.

Default behavior does not change.

Even if the `clear` command can be extended from within nushell, having

it out of the box would allow using it as is, without any

customization required.

Last but not least, it is pretty easy to implement as it is already

supported by the crate which is used to clear the terminal

(`crossterm`).

Providing a relevant screenshot is difficult because the result looks

the same.

In the `clear --all` case, you just cannot scroll back anymore.
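As a rough illustration of the difference, here is a sketch that builds the terminal escape sequences by hand instead of going through `crossterm`. The `clear_sequence` function is hypothetical, and whether `crossterm` emits exactly these bytes is an assumption, not something this PR verifies.

```rust
/// Hypothetical helper: builds the escape sequence for `clear` (all = false)
/// or `clear --all` (all = true) using raw ANSI/xterm control sequences.
fn clear_sequence(all: bool) -> String {
    let mut seq = String::new();
    // ESC [ 2 J erases the visible screen.
    seq.push_str("\x1b[2J");
    if all {
        // ESC [ 3 J is an xterm extension that erases the scrollback buffer.
        seq.push_str("\x1b[3J");
    }
    // ESC [ H moves the cursor to the top-left corner.
    seq.push_str("\x1b[H");
    seq
}
```

Printing `clear_sequence(true)` to a terminal that supports the xterm extension should leave nothing to scroll back to, while `clear_sequence(false)` keeps the scrollback.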

# User-Facing Changes

`clear` just scrolls down as usual without wiping the history of the

terminal.

`clear --all` scrolls down and wipes the terminal's history, which means

scrolling back is no longer possible.

# Tests + Formatting

General formatting and tests pass and have been executed on Linux only.

I don't have any way to test it on other systems.

There are no specific tests for the `clear` command, so I didn't add any

(and I am not sure how I would if I had to).

The `clear` command is just a wrapper around the `crossterm` crate's `Clear` command.

I would be more than happy if someone else were able to test it in other

contexts (even though it is probably fine, since we rely on the `crossterm` crate).

# After Submitting

PR for documentation has been drafted:

https://github.com/nushell/nushell.github.io/pull/1266.

I'll update it with the version if this PR is merged.

---------

Co-authored-by: Stefan Holderbach <sholderbach@users.noreply.github.com>

# Description

- enables `bits` commands to operate on binary data, where both inputs

are binary and can vary in length

- adds an `--endian` flag to `bits and`, `or`, `xor` for specifying

endianness (for binary values of different lengths)
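A sketch of how an endianness choice can matter when the two binary inputs differ in length. This is not the code from this PR: the `Endian` enum, `pad`, and `bits_and` are hypothetical, and the padding rule (zero-fill the shorter input on its most significant side) is an assumption about what the flag controls.

```rust
#[derive(Clone, Copy)]
enum Endian {
    Big,
    Little,
}

/// Zero-pads `bytes` to `len` on the side holding the most significant bytes.
fn pad(bytes: &[u8], len: usize, endian: Endian) -> Vec<u8> {
    let zeros = vec![0u8; len.saturating_sub(bytes.len())];
    match endian {
        // Big-endian: most significant bytes come first, so pad in front.
        Endian::Big => [zeros.as_slice(), bytes].concat(),
        // Little-endian: most significant bytes come last, so pad at the end.
        Endian::Little => [bytes, zeros.as_slice()].concat(),
    }
}

/// Bitwise AND of two byte strings that may differ in length.
fn bits_and(lhs: &[u8], rhs: &[u8], endian: Endian) -> Vec<u8> {
    let len = lhs.len().max(rhs.len());
    let a = pad(lhs, len, endian);
    let b = pad(rhs, len, endian);
    a.iter().zip(&b).map(|(x, y)| x & y).collect()
}
```

With inputs `0xFFFF` and `0x0F`, the big-endian interpretation lines the short value up with the last byte and the little-endian one with the first byte, so the results differ.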

# User-Facing Changes

- `bits` commands will no longer error for non-int inputs

- the default for `--number-bytes` is now `auto` (infer int size;

changed from 8)

# Tests + Formatting

> addendum: first PR, please inform if any changes are needed


# Description


`umkdir` was added in #10785, I think it's time to replace the default

one.

# After Submitting

Remove the old `mkdir` command and make coreutils' `umkdir` the

default


# Description


This PR does a total of 3 things:

1. It fixes an error when running the `cargo bench` suite where nushell

constants were not set correctly, resulting in an error when running the

code.

2. It removes 2 redundant benchmark runs, as these were duplicates of

existing ones.

3. It reduces the encoding and decoding benchmark suite further, leaving only 4

benches instead of the previous 8.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description


- adds a `--signed` flag to `into int` to allow parsing binary values as

signed integers, the integer size depends on the length of the binary

value

# User-Facing Changes


- attempting to convert binary values larger than 8 bytes into integers

now throws an error, with or without `--signed`
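A minimal sketch of the kind of conversion described here, assuming little-endian byte order (the byte order is an assumption of this sketch, not something stated above). `signed_from_le_bytes` is a hypothetical helper, not the function added by this PR.

```rust
/// Hypothetical helper: interprets up to 8 little-endian bytes as a signed
/// integer whose width is the length of the input. Returns `None` for
/// inputs longer than 8 bytes, mirroring the new error case.
fn signed_from_le_bytes(bytes: &[u8]) -> Option<i64> {
    if bytes.len() > 8 {
        return None;
    }
    // The sign bit is the top bit of the most significant (last) byte.
    let negative = bytes.last().map_or(false, |b| b & 0x80 != 0);
    // Sign-extend by pre-filling the buffer with 0xFF for negative values.
    let mut buf = if negative { [0xFFu8; 8] } else { [0u8; 8] };
    buf[..bytes.len()].copy_from_slice(bytes);
    Some(i64::from_le_bytes(buf))
}
```

The same two bytes can therefore give different results depending on their length-derived width: `[0xFF]` is -1 as a 1-byte value, while `[0xFF, 0x00]` is 255 as a 2-byte value.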

# Tests + Formatting


- wrote 3 tests and 1 example for `into int --signed` usage

- added an example for unsigned binary `into int`

# After Submitting


- will add examples from this PR to `into int` documentation


# Description


The test checks the time that has passed, so the year is bumped by 1.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

This PR bumps reedline to the latest main, which has the

`executehostcommand` changes from

https://github.com/nushell/reedline/pull/758. These essentially allow

reedline/nushell to call `executehostcommand` in key bindings and

rewrite the commandline buffer without inserting a newline.

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description

Attempting to complete a directory with hidden files could cause a

variety of issues. When Rust parses the partial path to be completed

into components, it removes the trailing `.` since it interprets this to

mean "the current directory", but in the case of the completer we

actually want to treat the trailing `.` as a literal `.`. This PR fixes

this by adding a `.` back into the Path components if the last character

of the path is a `.` AND the path is longer than 1 character (e.g., not

just a ".", since that correctly gets interpreted as Component::CurDir).
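The behaviour described above can be reproduced with `std::path` alone. The sketch below is a simplified illustration, not the code from this PR: `components_keeping_trailing_dot` is a hypothetical name, and it deliberately ignores the `..` case.

```rust
use std::ffi::OsStr;
use std::path::{Component, Path};

/// Hypothetical helper: splits `partial` into components, then puts back a
/// trailing `.` that `Path::components` normalized away.
fn components_keeping_trailing_dot(partial: &str) -> Vec<Component<'_>> {
    let mut components: Vec<Component<'_>> = Path::new(partial).components().collect();

    // A lone "." is correctly reported as `Component::CurDir`, so only
    // longer paths ending in '.' need the literal dot restored.
    if partial.len() > 1 && partial.ends_with('.') {
        components.push(Component::Normal(OsStr::new(".")));
    }
    components
}
```

For `test/.`, `Path::components` alone yields only `test`, while the helper yields `test` followed by a literal `.`, which is what the completer needs in order to match hidden files.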

Here are some things this fixes:

- Panic when tab completing for hidden files in a directory with hidden

files (ex. `ls test/.`)

- Panic when tab completing a directory with only hidden files (since

the common prefix ends with a `.`, causing the previous issue)

- Mishandling of tab completing hidden files in a directory (ex. `ls

~/.<TAB>` lists all files instead of just hidden files)

- Trailing `.` being inexplicably removed when tab completing a

directory without hidden files

While testing for this PR I also noticed there is a similar issue when

completing with `..` (ex. `ls ~/test/..<TAB>`) which is not fixed by

this PR (edit: see #11922).

# User-Facing Changes


N/A

# Tests + Formatting


Added a hidden-files-within-directories test to the `file_completions`

test.

# After Submitting


# Description

This PR tweaks the built-in `cal` command so that it's still nushell-y

but looks closer to the "expected" cal by abbreviating the name of the

days. I also added the ability to color the current day with the current

"header" color.

### Before

### After

# User-Facing Changes


# Tests + Formatting


# After Submitting


# Description


Currently, there are multiple places that look for a config directory, and

each of them has different error messages when it can't be found. This

PR makes a `ConfigDirNotFound` error to standardize the error message

for all of these cases.
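A rough sketch of the idea, with one shared error variant used by every lookup. Only the name `ConfigDirNotFound` comes from this PR; the `ConfigError` enum, the message text, and the `config_file` helper are hypothetical.

```rust
use std::fmt;
use std::path::PathBuf;

/// Hypothetical error type with a single, shared "not found" variant.
#[derive(Debug, PartialEq)]
enum ConfigError {
    ConfigDirNotFound,
}

impl fmt::Display for ConfigError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            // Illustrative wording only; the real message may differ.
            ConfigError::ConfigDirNotFound => write!(f, "could not find the config directory"),
        }
    }
}

/// Hypothetical lookup: every caller reports the same error, regardless of
/// which file it was looking for.
fn config_file(config_dir: Option<PathBuf>, file_name: &str) -> Result<PathBuf, ConfigError> {
    config_dir
        .map(|dir| dir.join(file_name))
        .ok_or(ConfigError::ConfigDirNotFound)
}
```

Since the error no longer carries the file name, looking for `login.nu` and looking for `env.nu` fail in exactly the same way.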

# User-Facing Changes


Previously, the errors in `create_nu_constant()` would say which config

file Nushell was trying to get when it couldn't find the config

directory. Now it doesn't. However, I think that's fine, given that it

doesn't matter whether it couldn't find the config directory while

looking for `login.nu` or `env.nu`, it only matters that it couldn't

find it.

This is what the error looks like:

# Tests + Formatting


# After Submitting


---------

Co-authored-by: Antoine Stevan <44101798+amtoine@users.noreply.github.com>

Bumps [mockito](https://github.com/lipanski/mockito) from 1.2.0 to

1.3.0.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a

href="https://github.com/lipanski/mockito/releases">mockito's

releases</a>.</em></p>

<blockquote>

<h2>1.3.0</h2>

<ul>

<li><a

href="3e2d4662eb">Introduced</a>

<code>Server::new_with_opts</code>,

<code>Server::new_with_opts_async</code> and the <code>ServerOpts</code>

struct to allow configuring the server host, port and enabling

auto-asserts (see next item)</li>

<li><a

href="3e2d4662eb">Added</a>

the <code>assert_on_drop</code> server option that allows you to

automatically call <code>assert()</code> whenever your mocks go out of

scope (defaults to false)</li>

<li><a

href="2ed230b5e9">Expose</a>

<code>Server::socket_address()</code> to return the raw server

<code>SocketAddr</code></li>

<li><a

href="efc7da13c5">Use</a>

only required features for dependencies</li>

<li><a

href="bcdcb2a154">Accept</a>

<code>hyper::header::HeaderValue</code> as a <code>match_header()</code>

value</li>

</ul>

<p>Thanks to <a

href="https://github.com/andrewtoth"><code>@andrewtoth</code></a> <a

href="https://github.com/alexander-jackson"><code>@alexander-jackson</code></a></p>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="a09f1f0009"><code>a09f1f0</code></a>

Bump to 1.3.0</li>

<li><a

href="0be6d7a184"><code>0be6d7a</code></a>

Merge pull request <a

href="https://redirect.github.com/lipanski/mockito/issues/191">#191</a>

from lipanski/server-opts</li>

<li><a

href="3e2d4662eb"><code>3e2d466</code></a>

Allow configuring the mock server (host, port, assert_on_drop)</li>

<li><a

href="12cb5d0786"><code>12cb5d0</code></a>

Add sponsor button</li>

<li><a

href="3cce903c0f"><code>3cce903</code></a>

Merge pull request <a

href="https://redirect.github.com/lipanski/mockito/issues/186">#186</a>

from alexander-jackson/feat/return-raw-socket-address</li>

<li><a

href="2ed230b5e9"><code>2ed230b</code></a>

feat: return raw socket address</li>

<li><a

href="496f26da87"><code>496f26d</code></a>

Merge pull request <a

href="https://redirect.github.com/lipanski/mockito/issues/185">#185</a>

from andrewtoth/less-deps</li>

<li><a

href="40138fe979"><code>40138fe</code></a>

Merge pull request <a

href="https://redirect.github.com/lipanski/mockito/issues/184">#184</a>

from andrewtoth/into-headername</li>

<li><a

href="efc7da13c5"><code>efc7da1</code></a>

Use only required features for dependencies</li>

<li><a

href="10d1081d80"><code>10d1081</code></a>

Add impl IntoHeaderName for &HeaderName</li>

<li>Additional commits viewable in <a

href="https://github.com/lipanski/mockito/compare/1.2.0...1.3.0">compare

view</a></li>

</ul>

</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't

alter it yourself. You can also trigger a rebase manually by commenting

`@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits

that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after

your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge

and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating

it. You can achieve the same result by closing it manually

- `@dependabot show <dependency name> ignore conditions` will show all

of the ignore conditions of the specified dependency

- `@dependabot ignore this major version` will close this PR and stop

Dependabot creating any more for this major version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop

Dependabot creating any more for this minor version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop

Dependabot creating any more for this dependency (unless you reopen the

PR or upgrade to it yourself)

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Bumps [tempfile](https://github.com/Stebalien/tempfile) from 3.9.0 to

3.10.0.

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a

href="https://github.com/Stebalien/tempfile/blob/master/CHANGELOG.md">tempfile's

changelog</a>.</em></p>

<blockquote>

<h2>3.10.0</h2>

<ul>

<li>Drop <code>redox_syscall</code> dependency, we now use

<code>rustix</code> for Redox.</li>

<li>Add <code>Builder::permissions</code> for setting the permissions on

temporary files and directories (thanks to <a

href="https://github.com/Byron"><code>@Byron</code></a>).</li>

<li>Update rustix to 0.38.31.</li>

<li>Update fastrand to 2.0.1.</li>

</ul>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a

href="61531eae61"><code>61531ea</code></a>

chore: release v3.10.0</li>

<li><a

href="e246c4a004"><code>e246c4a</code></a>

chore: update deps (<a

href="https://redirect.github.com/Stebalien/tempfile/issues/275">#275</a>)</li>

<li><a

href="4a05e47d3b"><code>4a05e47</code></a>

feat: Add <code>Builder::permissions()</code> method. (<a

href="https://redirect.github.com/Stebalien/tempfile/issues/273">#273</a>)</li>

<li><a

href="184ab8f5ca"><code>184ab8f</code></a>

fix: drop redox_syscall dependency (<a

href="https://redirect.github.com/Stebalien/tempfile/issues/272">#272</a>)</li>

<li>See full diff in <a

href="https://github.com/Stebalien/tempfile/compare/v3.9.0...v3.10.0">compare

view</a></li>

</ul>

</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't

alter it yourself. You can also trigger a rebase manually by commenting

`@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Bumps [strum_macros](https://github.com/Peternator7/strum) from 0.25.3

to 0.26.1.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a

href="https://github.com/Peternator7/strum/releases">strum_macros's

releases</a>.</em></p>

<blockquote>

<h2>v0.26.1</h2>

<h2>0.26.1</h2>

<ul>

<li><a

href="https://redirect.github.com/Peternator7/strum/pull/325">#325</a>:

use <code>core</code> instead of <code>std</code> in VariantArray.</li>

</ul>

<h2>0.26.0</h2>

<h3>Breaking Changes</h3>

<ul>

<li>The <code>EnumVariantNames</code> macro has been renamed

<code>VariantNames</code>. The deprecation warning should steer you in

the right direction for fixing the warning.</li>

<li>The Iterator struct generated by EnumIter now has new bounds on it.

This shouldn't break code unless you manually

added the implementation in your code.</li>

<li><code>Display</code> now supports format strings using named fields

in the enum variant. This should be a no-op for most code.

However, if you were outputting a string like <code>"Hello

{field}"</code>, this will now be interpreted as a format

string.</li>

<li>EnumDiscriminant now inherits the repr and discriminant values from

your main enum. This makes the discriminant type

closer to a mirror of the original and that's always the goal.</li>

</ul>

<h3>New features</h3>

<ul>

<li>

<p>The <code>VariantArray</code> macro has been added. This macro adds

an associated constant <code>VARIANTS</code> to your enum. The constant

is a <code>&'static [Self]</code> slice so that you can access all

the variants of your enum. This only works on enums that only

have unit variants.</p>

<pre lang="rust"><code>use strum::VariantArray;

<p>#[derive(Debug, VariantArray)]

enum Color {

Red,

Blue,

Green,

}</p>

<p>fn main() {

println!("{:?}", Color::VARIANTS); // prints:

["Red", "Blue", "Green"]

}

</code></pre></p>

</li>

<li>

<p>The <code>EnumTable</code> macro has been <em>experimentally</em>

added. This macro adds a new type that stores an item for each variant

of the enum. This is useful for storing a value for each variant of an

enum. This is an experimental feature because

I'm not convinced the current api surface area is correct.</p>

<pre lang="rust"><code>use strum::EnumTable;

<p>#[derive(Copy, Clone, Debug, EnumTable)]

enum Color {

Red,

Blue,

</code></pre></p>

</li>

</ul>

<!-- raw HTML omitted -->

</blockquote>

<p>... (truncated)</p>

</details>

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a

href="https://github.com/Peternator7/strum/blob/master/CHANGELOG.md">strum_macros's

changelog</a>.</em></p>

<blockquote>

<h2>0.26.1</h2>

<ul>

<li><a

href="https://redirect.github.com/Peternator7/strum/pull/325">#325</a>:

use <code>core</code> instead of <code>std</code> in VariantArray.</li>

</ul>

<h2>0.26.0</h2>

<h3>Breaking Changes</h3>

<ul>

<li>The <code>EnumVariantNames</code> macro has been renamed

<code>VariantNames</code>. The deprecation warning should steer you in

the right direction for fixing the warning.</li>

<li>The Iterator struct generated by EnumIter now has new bounds on it.

This shouldn't break code unless you manually

added the implementation in your code.</li>

<li><code>Display</code> now supports format strings using named fields

in the enum variant. This should be a no-op for most code.

However, if you were outputting a string like <code>"Hello

{field}"</code>, this will now be interpreted as a format

string.</li>

<li>EnumDiscriminant now inherits the repr and discriminant values from

your main enum. This makes the discriminant type

closer to a mirror of the original and that's always the goal.</li>

</ul>

<h3>New features</h3>

<ul>

<li>

<p>The <code>VariantArray</code> macro has been added. This macro adds

an associated constant <code>VARIANTS</code> to your enum. The constant

is a <code>&'static [Self]</code> slice so that you can access all

the variants of your enum. This only works on enums that only

have unit variants.</p>

<pre lang="rust"><code>use strum::VariantArray;

#[derive(Debug, VariantArray)]
enum Color {
    Red,
    Blue,
    Green,
}

fn main() {
    println!("{:?}", Color::VARIANTS); // prints: [Red, Blue, Green]
}
</code></pre>

</li>

<li>

<p>The <code>EnumTable</code> macro has been <em>experimentally</em>

added. This macro adds a new type that stores an item for each variant

of the enum. This is useful for storing a value for each variant of an

enum. This is an experimental feature because

I'm not convinced the current API surface area is correct.</p>

<pre lang="rust"><code>use strum::EnumTable;

#[derive(Copy, Clone, Debug, EnumTable)]
enum Color {
    Red,
    Blue,
    Green,
</code></pre>

</li>

</ul>

<!-- raw HTML omitted -->

</blockquote>

<p>... (truncated)</p>

</details>

<details>

<summary>Commits</summary>

<ul>

<li>See full diff in <a

href="https://github.com/Peternator7/strum/commits/v0.26.1">compare

view</a></li>

</ul>

</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't

alter it yourself. You can also trigger a rebase manually by commenting

`@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits

that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after

your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge

and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating

it. You can achieve the same result by closing it manually

- `@dependabot show <dependency name> ignore conditions` will show all

of the ignore conditions of the specified dependency

- `@dependabot ignore this major version` will close this PR and stop

Dependabot creating any more for this major version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop

Dependabot creating any more for this minor version (unless you reopen

the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop

Dependabot creating any more for this dependency (unless you reopen the

PR or upgrade to it yourself)

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

[Discord context](https://discord.com/channels/601130461678272522/615962413203718156/1211158641793695744)

<!--

if this PR closes one or more issues, you can automatically link the PR

with

them by using one of the [*linking

keywords*](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue#linking-a-pull-request-to-an-issue-using-a-keyword),

e.g.

- this PR should close #xxxx

- fixes #xxxx

you can also mention related issues, PRs or discussions!

-->

# Description

<!--

Thank you for improving Nushell. Please, check our [contributing

guide](../CONTRIBUTING.md) and talk to the core team before making major

changes.

Description of your pull request goes here. **Provide examples and/or

screenshots** if your changes affect the user experience.

-->

Span fields were previously renamed to `internal_span` to discourage

their use in Rust code, but this change also affected the serde I/O for

Value. I don't believe the Python plugin was ever updated to reflect

this change.

This effectively changes it back, but just for the serialized form.

There are good reasons for doing this:

1. `internal_span` is a much longer name, and would be one of the most

common strings found in serialized Value data, probably bulking up the

plugin I/O

2. This change was never really meant to have implications for plugins,

and was just meant to be a hint that `.span()` should be used instead in

Rust code.

When Span refactoring is complete, the serialized form of Value will

probably change again in some significant way, so I think for now it's

best that it's left like this.
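
To give a rough sense of the first point, here is a minimal Python sketch (Python because the Python plugin is the consumer affected here). The `{"Int": {"val": ..., "span": {...}}}` layout is an assumption made for illustration and is not a statement of the exact plugin protocol; `int_value` and `encoded_size` are hypothetical helpers, not part of any plugin API.

```python
import json

COUNT = 1000  # number of values in the hypothetical payload


def int_value(val, span_key):
    # Assumed shape of one serialized Int value; only the span key name varies.
    return {"Int": {"val": val, span_key: {"start": 0, "end": 1}}}


def encoded_size(span_key, count=COUNT):
    # Size in bytes of a JSON-encoded list of `count` values.
    values = [int_value(i, span_key) for i in range(count)]
    return len(json.dumps(values).encode("utf-8"))


size_short = encoded_size("span")
size_long = encoded_size("internal_span")

# Each value carries the key exactly once, so the overhead per value is
# len("internal_span") - len("span") == 9 bytes.
extra_per_value = (size_long - size_short) // COUNT
print(f"extra bytes per value: {extra_per_value}")
```

Under these assumptions the longer key costs 9 extra bytes for every value that carries a span, which adds up for large tables or lists passed through plugin I/O.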

This has implications for #11911, particularly for documentation and for

the Python plugin as that was already updated in that PR to reflect

`internal_span`. If this is merged first, I will update that PR.

This would probably be considered a breaking change as it would break

plugin I/O compatibility (but not Rust code). I think it can probably go

in any major release though - all things considered, it's pretty minor,

and users are already expected to recompile plugins for new major

versions. However, it may also be worth holding off to do it together

with #11911 as that PR makes breaking changes in general a little bit

friendlier.

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This

helps us keep track of breaking changes. -->

Requires plugin recompile.

# Tests + Formatting

<!--

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo

fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to

check that you're using the standard code style

- `cargo test --workspace` to check that all tests pass (on Windows make

sure to [enable developer

mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- `cargo run -- -c "use std testing; testing run-tests --path

crates/nu-std"` to run the tests for the standard library